Monodeep Kar

Is Finer Better? The Limits of Microscaling Formats in Large Language Models

Jan 26, 2026

Abstract: Microscaling data formats leverage per-block tensor quantization to enable aggressive model compression with limited loss in accuracy. Unlocking their potential for efficient training and inference necessitates hardware-friendly implementations that handle matrix multiplications in a native format and adopt efficient error-mitigation strategies. Herein, we report the emergence of a surprising behavior associated with microscaling quantization, whereby the output of a quantized model degrades as block size is decreased below a given threshold. This behavior clashes with the expectation that a smaller block size should allow for a better representation of the tensor elements. We investigate this phenomenon both experimentally and theoretically, decoupling the sources of quantization error behind it. Experimentally, we analyze the distributions of several Large Language Models and identify the conditions driving the anomalous behavior. Theoretically, we lay down a framework showing remarkable agreement with experimental data from pretrained model distributions and ideal ones. Overall, we show that the anomaly is driven by the interplay between narrow tensor distributions and the limited dynamic range of the quantized scales. Based on these insights, we propose the use of FP8 unsigned E5M3 (UE5M3) as a novel hardware-friendly format for the scales in FP4 microscaling data types. We demonstrate that UE5M3 achieves comparable performance to the conventional FP8 unsigned E4M3 scales while obviating the need for global scaling operations on weights and activations.
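To make the per-block quantization described in this abstract concrete, the following is a minimal Python sketch, not the authors' implementation. It assumes the standard FP4 E2M1 magnitude grid for the elements and one absmax-derived scale per block. The names `round_to_fp4`, `quantize_blockwise`, and `mean_squared_error` are hypothetical. The scale is deliberately kept in full precision, so the sketch does not model the quantized FP8 scales (E4M3 or UE5M3) and will not reproduce the block-size anomaly the paper reports.

```python
import math

# Non-negative magnitudes representable in FP4 E2M1 (sign handled separately).
FP4_E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = FP4_E2M1_GRID[-1]


def round_to_fp4(x):
    """Round x to the nearest FP4 E2M1 value, saturating at +/-6.

    Ties resolve to the smaller magnitude, a simplification of
    round-to-nearest-even.
    """
    mag = min(abs(x), FP4_MAX)
    nearest = min(FP4_E2M1_GRID, key=lambda g: abs(g - mag))
    return math.copysign(nearest, x)


def quantize_blockwise(values, block_size):
    """Quantize then dequantize `values` using one absmax scale per block.

    Assumption: the scale stays in full precision. The formats discussed in
    the abstract store it in a narrow FP8 type, which is not modeled here.
    """
    out = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        absmax = max(abs(v) for v in block)
        # Map the block's largest magnitude onto the largest FP4 value.
        scale = absmax / FP4_MAX if absmax > 0 else 1.0
        out.extend(round_to_fp4(v / scale) * scale for v in block)
    return out


def mean_squared_error(a, b):
    """Mean squared difference between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
```

Calling `quantize_blockwise(weights, block_size)` for several block sizes and comparing `mean_squared_error(weights, result)` gives a baseline against which the effect of a quantized scale could be studied; adding that scale quantization step is where the dynamic-range limitation discussed in the abstract would enter.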

Application Inference using Machine Learning based Side Channel Analysis

Jul 09, 2019

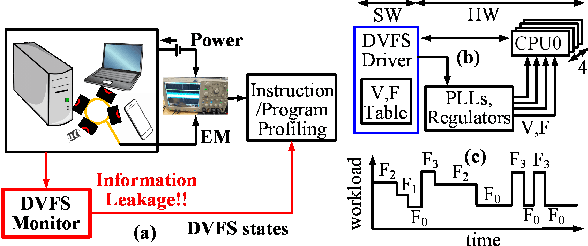

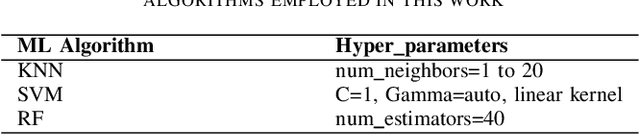

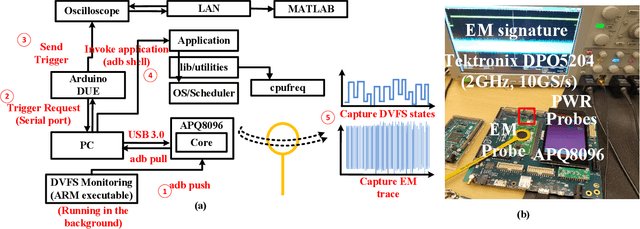

Abstract: The proliferation of ubiquitous computing requires energy-efficient as well as secure operation of modern processors. Side channel attacks are becoming a critical threat to the security and privacy of devices embedded in modern computing infrastructures. Unintended information leakage via physical signatures such as power consumption, electromagnetic (EM) emission, and execution time has emerged as a key security consideration for SoCs. In addition, information deliberately published at the user privilege level and accessible through software interfaces enables software-only attacks. In this paper, we use a supervised learning based approach to infer the applications executing on an Android platform from features extracted from EM side-channel emissions and software-exposed dynamic voltage and frequency scaling (DVFS) states. We highlight the importance of a machine learning based approach, as opposed to profiling-based approaches, in utilizing these multi-dimensional features on a complex SoC. We also show that learning the instantaneous frequency states polled from the onboard frequency driver (cpufreq) is adequate to identify a known application and to flag a potentially malicious unknown application. The experimental results on benchmarking applications running on an ARMv8 processor on a Snapdragon 820 board demonstrate early detection of these apps and at least 85% accuracy in detecting unknown applications. Overall, the highlight is a low-complexity path to application inference attacks through learning the instantaneous frequency state patterns of a CPU core.
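As a rough illustration of the kind of feature the abstract refers to, the sketch below polls a CPU frequency value and summarizes the samples as a normalized histogram. It is not the authors' pipeline. It assumes a Linux-style sysfs file at `/sys/devices/system/cpu/cpu0/cpufreq/scaling_cur_freq` that is readable by the current user, which may not hold on every device. The function names, the sampling interval, and the choice of a histogram as the feature are all hypothetical.

```python
import time
from collections import Counter

# Assumed sysfs location of the current frequency (in kHz) for core 0.
CPUFREQ_PATH = "/sys/devices/system/cpu/cpu0/cpufreq/scaling_cur_freq"


def read_freq_khz(path=CPUFREQ_PATH):
    """Read one instantaneous frequency sample, in kHz, from `path`."""
    with open(path) as f:
        return int(f.read().strip())


def poll_freqs(n_samples, interval_s=0.01, path=CPUFREQ_PATH):
    """Collect `n_samples` frequency readings spaced `interval_s` apart."""
    samples = []
    for _ in range(n_samples):
        samples.append(read_freq_khz(path))
        time.sleep(interval_s)
    return samples


def freq_histogram(samples):
    """Return the fraction of samples observed at each frequency state."""
    if not samples:
        return {}
    counts = Counter(samples)
    total = len(samples)
    return {freq: n / total for freq, n in sorted(counts.items())}
```

A histogram of this kind, computed over a short window, is one simple candidate input for a supervised classifier; the features and model actually used in the paper are not specified in the abstract.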
