Mohammed Imamul Hassan Bhuiyan

Power Transformer Fault Diagnosis with Intrinsic Time-scale Decomposition and XGBoost Classifier

Oct 21, 2021

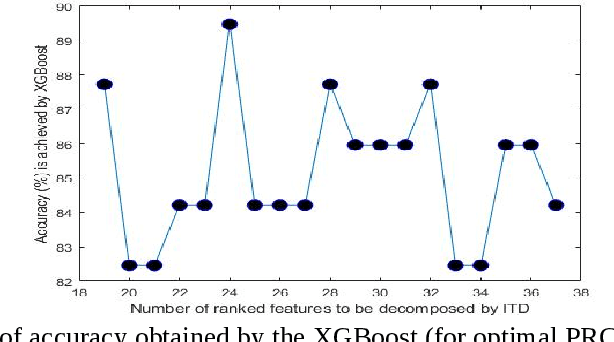

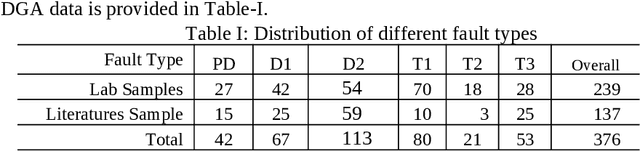

Abstract: An intrinsic time-scale decomposition (ITD) based method for power transformer fault diagnosis is proposed. Dissolved gas analysis (DGA) parameters are ranked according to their skewness, and ITD-based feature extraction is then performed. An optimal set of proper rotation component (PRC) features is determined by an XGBoost classifier, and an XGBoost classifier is then applied to this optimal PRC feature set for classification. The method's classification performance is studied using publicly available DGA data of 376 power transformers. The proposed method achieves more than 95% accuracy together with high sensitivity and F1-score, outperforming conventional methods and some recent machine learning-based fault diagnosis approaches. Moreover, it yields a better Cohen's kappa and F1-score than the recently introduced EMD-based hierarchical technique for fault diagnosis in power transformers.
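The skewness-based ranking step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state the skewness estimator or the ranking direction, so the choices here (biased moment-based skewness, descending absolute value) are assumptions, as are the parameter names and sample values, which are purely hypothetical.

```python
def skewness(values):
    """Biased sample skewness: third central moment / (second central moment ** 1.5)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    if m2 == 0:
        return 0.0  # constant input: treat as unskewed
    return m3 / m2 ** 1.5


def rank_by_skewness(params):
    """Return parameter names ordered by descending absolute skewness (assumed order)."""
    return sorted(params, key=lambda name: abs(skewness(params[name])), reverse=True)


# Hypothetical gas-concentration samples, not taken from the paper's dataset.
dga = {
    "H2": [10, 12, 11, 13, 200],
    "CH4": [5, 6, 7, 8, 9],
    "C2H2": [1, 2, 3, 4, 10],
}
ranking = rank_by_skewness(dga)
print(ranking)
```

In this toy data the symmetric `CH4` series has zero skewness and therefore ranks last.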

An EMD-based Method for the Detection of Power Transformer Faults with a Hierarchical Ensemble Classifier

Oct 21, 2021

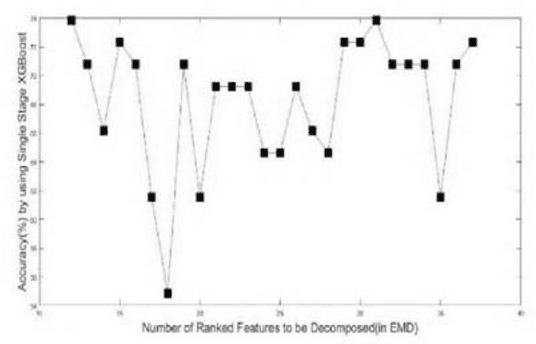

Abstract: In this paper, an empirical mode decomposition (EMD) based method is proposed for the detection of transformer faults from dissolved gas analysis (DGA) data. Ratio-based DGA parameters are ranked using their skewness. Optimal sets of intrinsic mode function coefficients are obtained from the ranked DGA parameters. A hierarchical classification scheme employing XGBoost is presented for classifying the features to identify six different categories of transformer faults. The performance of the proposed method is studied on publicly available DGA data of 377 transformers. It is shown that the proposed method can yield more than 90% sensitivity and accuracy in the detection of transformer faults, which is superior to conventional methods as well as several existing machine learning-based techniques.
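For readers unfamiliar with the reported metrics, the following sketch shows how overall accuracy and per-class sensitivity (recall) are commonly computed from true and predicted labels. The class labels `A`, `B`, `C` and the predictions are hypothetical placeholders; the abstract does not name its six fault categories or describe its exact evaluation protocol.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def per_class_sensitivity(y_true, y_pred):
    """Sensitivity (recall) per class: TP / (TP + FN)."""
    support = Counter(y_true)  # TP + FN for each class
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: true_pos[label] / support[label] for label in support}


# Hypothetical labels for illustration only.
y_true = ["A", "A", "A", "A", "B", "B", "C", "C", "C", "C"]
y_pred = ["A", "A", "A", "B", "B", "B", "C", "C", "A", "C"]

acc = accuracy(y_true, y_pred)
sens = per_class_sensitivity(y_true, y_pred)
print(acc, sens)
```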

Weighted Contourlet Parametric (WCP) Feature Based Breast Tumor Classification from B-Mode Ultrasound Image

Feb 11, 2021

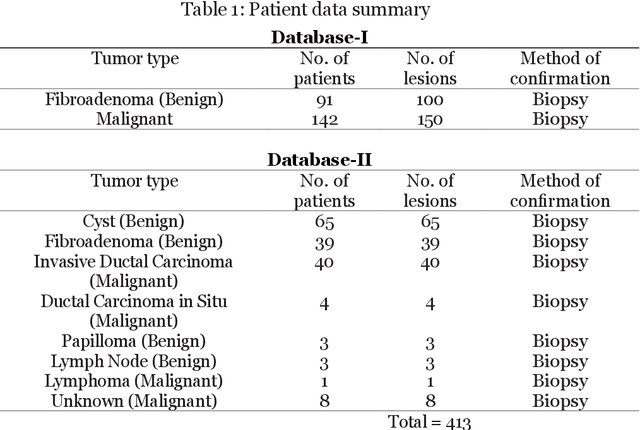

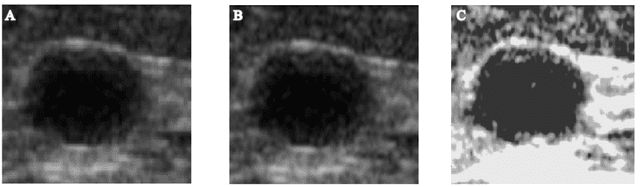

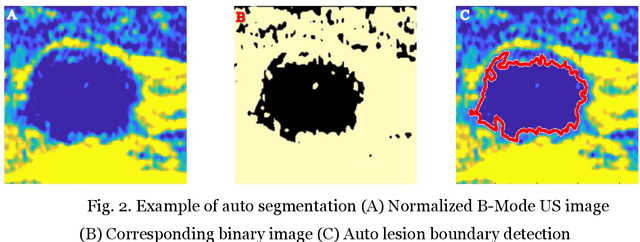

Abstract: Automated detection of breast tumors at an early stage using B-mode ultrasound images is crucial for preventing widespread breast cancer, especially among women. This paper focuses on the classification of breast tumors through statistical modeling of contourlet-transformed B-mode images of breast tumors using the Rician inverse Gaussian (RiIG) pdf, which has not been reported in earlier works. The suitability of the RiIG distribution for modeling the contourlet coefficients is illustrated and compared with that of the Nakagami distribution. The proposed method consists of pre-processing to remove speckle noise, segmentation of the lesion region, contourlet transformation of the B-mode ultrasound image, and production of contourlet parametric (CP) images and weighted contourlet parametric (WCP) images from the corresponding contourlet sub-band coefficients and the RiIG parameters. A number of geometrical, statistical, and texture features are calculated from the B-mode and the contourlet parametric images. To classify the features, seven different classifiers are employed. The proposed approach is applied to two publicly available datasets (Mendeley Data and Dataset B). It is shown that with parametric images, accuracies in the range of 94-97% are achieved for different classifiers. Specifically, with the support vector machine and k-nearest-neighbor classifiers, very high accuracies of 97.2% and 97.55% can be obtained for the Mendeley Data and Dataset B, respectively, using the weighted contourlet parametric images. The reported classification performance is also compared with that of other works using the datasets employed in this paper. It is seen that the proposed approach using weighted contourlet parametric images can provide superior performance.

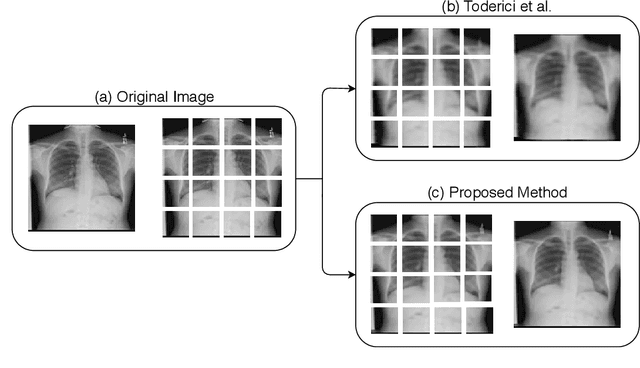

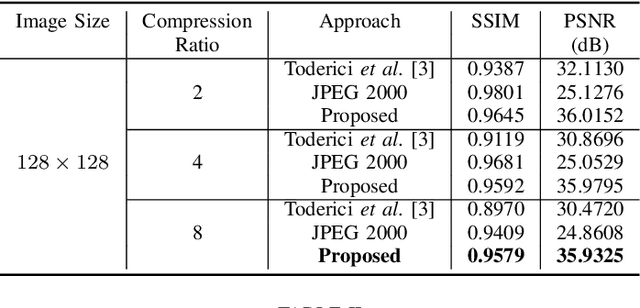

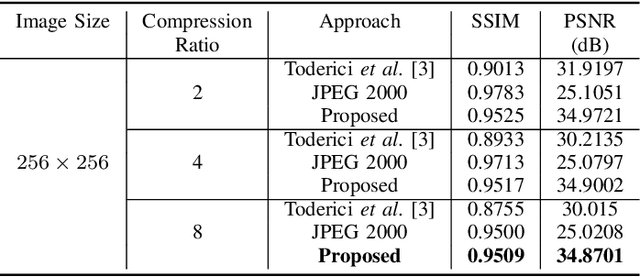

X-Ray Image Compression Using Convolutional Recurrent Neural Networks

May 09, 2019

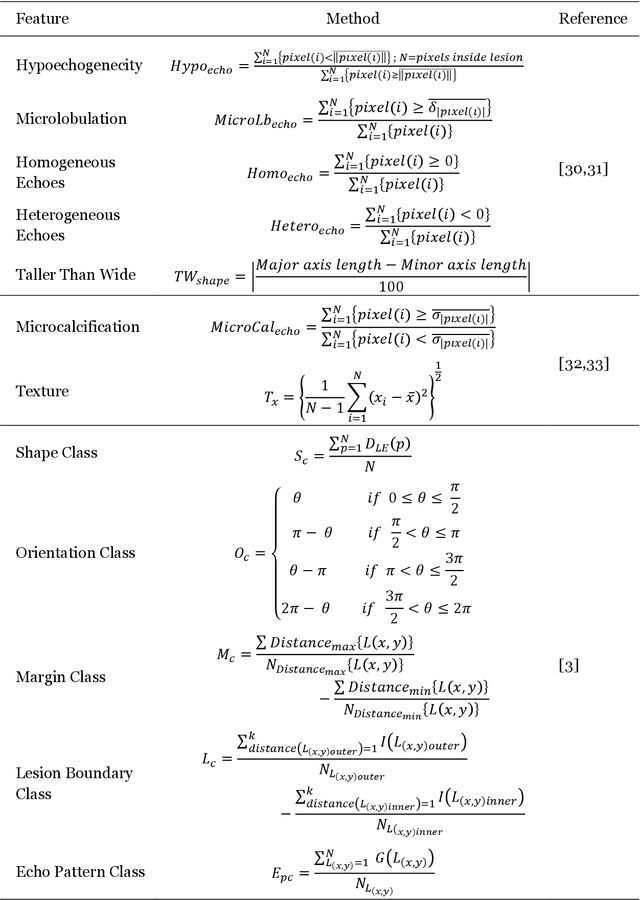

Abstract: With the advent of a digital health revolution, vast amounts of clinical data are being generated, stored and processed on a daily basis. This has made the storage and retrieval of large volumes of health-care data, especially high-resolution medical images, particularly challenging. Effective image compression for medical images thus plays a vital role in today's healthcare information systems, particularly in teleradiology. In this work, an X-ray image compression method based on a convolutional recurrent neural network (RNN-Conv) is presented. The proposed architecture can provide variable compression rates during deployment while requiring each network to be trained only once for a specific dimension of X-ray images. The model uses a multi-level pooling scheme that learns contextualized features for effective compression. We perform our image compression experiments on the National Institutes of Health (NIH) ChestX-ray8 dataset and compare the performance of the proposed architecture with a state-of-the-art RNN-based technique and JPEG 2000. The experimental results show improved compression performance for the proposed method in terms of the Structural Similarity Index (SSIM) and Peak Signal-to-Noise Ratio (PSNR) metrics. To the best of our knowledge, this is the first reported evaluation of a deep convolutional RNN for medical image compression.
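As a reference for the PSNR metric named above, here is a minimal sketch of the standard definition, PSNR = 10 log10(MAX^2 / MSE), for images stored as nested lists. The 8-bit peak value of 255 and the tiny 2x2 images are assumptions for illustration; the abstract does not state the bit depth or the implementation used in the paper.

```python
import math


def psnr(original, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2-D images."""
    squared_errors = [
        (a - b) ** 2
        for row_o, row_r in zip(original, reconstructed)
        for a, b in zip(row_o, row_r)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10 * math.log10(max_value ** 2 / mse)


# Hypothetical 2x2 grayscale patches, not real X-ray data.
original = [[0, 255], [128, 64]]
reconstructed = [[0, 250], [130, 64]]
value_db = psnr(original, reconstructed)
print(round(value_db, 2))
```

A higher PSNR indicates a reconstruction closer to the original; SSIM, the other metric in the abstract, additionally accounts for local structure and is not covered by this sketch.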

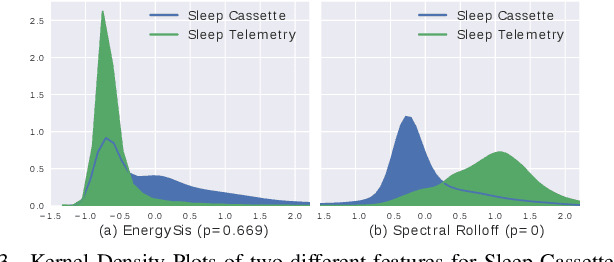

End-to-end Sleep Staging with Raw Single Channel EEG using Deep Residual ConvNets

Apr 23, 2019

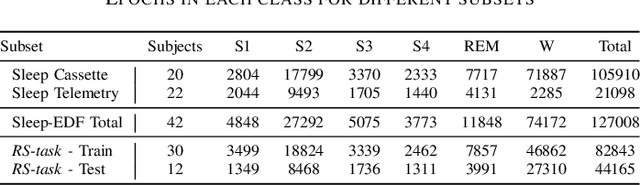

Abstract: Humans spend approximately a third of their lives sleeping, which makes monitoring sleep an integral part of well-being. In this paper, a 34-layer deep residual ConvNet architecture for end-to-end sleep staging is proposed. The network takes a raw single-channel electroencephalogram (Fpz-Cz) signal as input and yields a hypnogram annotation for each 30 s segment as output. Experiments are carried out for two different scoring standards (5- and 6-stage classification) on the expanded PhysioNet Sleep-EDF dataset, which contains multi-source data from hospital and household polysomnography setups. The performance of the proposed network is compared with that of state-of-the-art algorithms in patient-independent validation tasks. The experimental results demonstrate the superiority of the proposed network over the best existing method, with a relative improvement in epoch-wise average accuracy of 6.8% and 6.3% on the household data and multi-source data, respectively. The code is publicly available on GitHub.
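The 30 s segmentation described above can be sketched as follows. This is only an illustration of splitting a raw single-channel signal into fixed-length epochs before classification; the function name, the 100 Hz sampling rate in the example, and the choice to drop a trailing partial epoch are assumptions, not details confirmed by the abstract.

```python
def split_into_epochs(signal, sampling_rate_hz, epoch_seconds=30):
    """Split a 1-D signal into consecutive, non-overlapping fixed-length epochs.

    Trailing samples that do not fill a whole epoch are dropped (assumed policy).
    """
    samples_per_epoch = int(sampling_rate_hz * epoch_seconds)
    if samples_per_epoch <= 0:
        raise ValueError("sampling rate and epoch length must be positive")
    n_epochs = len(signal) // samples_per_epoch
    return [
        signal[i * samples_per_epoch:(i + 1) * samples_per_epoch]
        for i in range(n_epochs)
    ]


# Hypothetical 95 s recording at an assumed 100 Hz sampling rate.
recording = list(range(9500))
epochs = split_into_epochs(recording, sampling_rate_hz=100)
print(len(epochs), len(epochs[0]))
```

Each resulting epoch would then be passed to the network to obtain one sleep-stage label per 30 s segment.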
