Mohammad-R Akbarzadeh-T

Universal Swarm Computing by Nanorobots

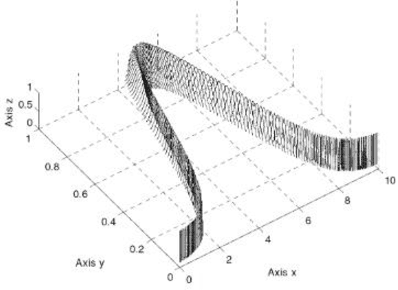

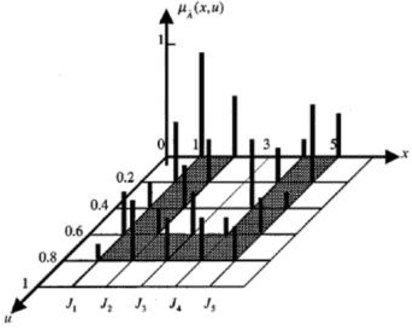

Nov 22, 2021

Abstract: Realizing universal computing units for nanorobots is highly promising for creating a wide array of new applications, particularly in distributed computation. However, such realization is also challenging because of the physical limitations of nanometer-sized designs in computation, sensing and perception, and actuation. This paper proposes a theoretical foundation for solving this problem based on a novel notion of distributed swarm computing by basis agents (BAs). The proposed BA is an abstract model of a nanorobot that computes a very simple basis function, called a B-function. It is shown mathematically that a swarm of BAs has the universal function approximation property and can approximate functions accurately. It is then demonstrated analytically that a swarm of BAs can be easily reprogrammed to compute a desired function simply by adjusting the concentrations of BAs in the environment. We further propose a specific structure for BAs that enables them to perform distributed computing in settings such as the aqueous environment of living tissues and in nanomedicine. This structure aims to keep hardware complexity low so that it is more realistically implementable with today's technology. Finally, the performance of the proposed approach is illustrated by a simulation example.
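
The reprogramming idea can be illustrated with a minimal Python sketch. The B-function itself is not specified in this abstract. Purely for illustration, the sketch therefore assumes a triangular "hat" bump centered on a grid point. The names `hat_basis`, `program_swarm`, and `max_error`, and the target function, are hypothetical. Each agent type contributes its basis value scaled by its concentration. The swarm is "reprogrammed" for a new target only by changing those concentrations. Here each concentration is set to the target's value at the corresponding bump center, which requires a non-negative target.

```python
import math
from typing import Callable, List, Tuple


def hat_basis(x: float, center: float, width: float) -> float:
    """Hypothetical stand-in for a B-function: a triangular bump that peaks at 1
    at `center` and falls linearly to 0 at distance `width`."""
    return max(0.0, 1.0 - abs(x - center) / width)


def swarm_output(x: float, centers: List[float],
                 concentrations: List[float], width: float) -> float:
    """Collective output: each agent type adds its basis value weighted by its concentration."""
    return sum(c * hat_basis(x, m, width) for m, c in zip(centers, concentrations))


def program_swarm(target: Callable[[float], float], a: float, b: float,
                  n: int) -> Tuple[List[float], List[float], float]:
    """'Reprogram' n agent types on [a, b] by setting each concentration to the
    target value at that agent type's bump center."""
    width = (b - a) / (n - 1)
    centers = [a + i * width for i in range(n)]
    concentrations = [target(m) for m in centers]
    if min(concentrations) < 0:
        raise ValueError("concentrations cannot be negative; shift the target upward")
    return centers, concentrations, width


def max_error(target: Callable[[float], float], a: float, b: float,
              n: int, samples: int = 1001) -> float:
    """Largest absolute approximation error over an evenly spaced test grid."""
    centers, conc, width = program_swarm(target, a, b, n)
    xs = [a + (b - a) * k / (samples - 1) for k in range(samples)]
    return max(abs(swarm_output(x, centers, conc, width) - target(x)) for x in xs)


if __name__ == "__main__":
    f = lambda x: 1.0 + 0.5 * math.sin(2 * math.pi * x)  # non-negative target
    for n in (6, 11, 51):
        print(n, max_error(f, 0.0, 1.0, n))
```

With hat bumps on a uniform grid, the weighted sum reduces to piecewise-linear interpolation. The maximum error therefore shrinks roughly with the square of the grid spacing as more agent types are added.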

Robust Control of Nanoscale Drug Delivery System in Atherosclerosis: A Mathematical Approach

Nov 22, 2021

Abstract: This paper proposes a mathematical approach for robust control of a nanoscale drug delivery system in the treatment of atherosclerosis. First, a new nonlinear lumped model is introduced for mass transport in the arterial wall, and its accuracy is evaluated against the original distributed-parameter model. Then, based on sliding-mode control, an abstract model is designed for a smart drug delivery nanoparticle. In contrast to competing nanorobotics strategies, the proposed nanoparticles carry simpler hardware for penetrating the interior arterial wall, which makes them more technologically feasible. Finally, using this lumped model and nonlinear control theory, the stability of the overall system is proven mathematically in the presence of uncertainty. Simulation results on a well-known model, and comparisons with earlier benchmark approaches, reveal that even when the LDL concentration in the lumen is high, the proposed nanoscale drug delivery system reduces drug consumption by as much as 16%. It also reduces the LDL level in the Endothelium, Intima, Internal Elastic Layer (IEL), and Media layers of an unhealthy arterial wall by as much as 14.6%, 50.5%, 51.8%, and 64.4%, respectively.
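
The following is a rough illustration of the sliding-mode idea only. It is not the paper's lumped arterial-wall model. The sketch regulates a hypothetical single-compartment concentration x with dx/dt = -k_out·x + u + d(t), where d(t) is an unknown disturbance bounded by d_max. Take the sliding surface s = x - x_ref and the control u = k_out·x - eta·sign(s). Substituting gives ds/dt = -eta·sign(s) + d(t). Choosing eta > d_max therefore drives s to zero in finite time despite the uncertainty. All parameter values and the function name `simulate` are assumptions. The sketch also ignores the fact that a physical release rate cannot be negative.

```python
import math


def sign(v: float) -> int:
    """Signum: +1, -1, or 0."""
    return (v > 0) - (v < 0)


def simulate(x0: float = 0.0, x_ref: float = 2.0, k_out: float = 0.5,
             eta: float = 1.0, d_max: float = 0.3,
             dt: float = 1e-3, t_end: float = 10.0) -> float:
    """Forward-Euler simulation of a hypothetical scalar compartment under
    sliding-mode control; returns the concentration at t_end."""
    x, t = x0, 0.0
    for _ in range(int(round(t_end / dt))):
        s = x - x_ref                        # sliding variable
        u = k_out * x - eta * sign(s)        # cancel known dynamics, push s toward 0
        d = d_max * math.sin(3.0 * t)        # bounded, unknown-to-controller disturbance
        x += dt * (-k_out * x + u + d)       # plant dynamics
        t += dt
    return x


if __name__ == "__main__":
    print(simulate())          # starts below the reference
    print(simulate(x0=5.0))    # starts above the reference
```

The discontinuous sign term causes small "chattering" around x_ref, of the order of the integration step size. Replacing sign(s) with a saturated boundary-layer term is a common way to smooth it.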

Interval Probabilistic Fuzzy WordNet

Apr 04, 2021

Abstract: The WordNet lexical database groups English words into sets of synonyms called "synsets." Synsets are used in several text-mining applications. However, they have also been criticized. In reality, not all members of a synset represent its meaning to the same degree, yet in practice they are all treated as identical members of the synset. Fuzzy versions of synsets, called fuzzy synsets (or fuzzy word-sense classes), were therefore proposed and studied. In this study, we discuss why type-1 fuzzy synsets (T1 F-synsets) do not properly model membership uncertainty. We propose an upgraded version of fuzzy synsets in which the membership degrees of word-senses are represented by intervals, similar to those in Interval Type-2 Fuzzy Sets (IT2 FSs). We then argue that the IT2 FS theoretical framework is insufficient for the analysis and design of such synsets, and we propose a new concept called Interval Probabilistic Fuzzy (IPF) sets. Next, we present an algorithm for constructing IPF synsets in any language, given a corpus and a word-sense disambiguation system. Using our algorithm with the Open American National Corpus (OANC) and the UKB word-sense disambiguation system, we constructed and published the IPF synsets of WordNet for English.

An Algorithm for Fuzzification of WordNets, Supported by a Mathematical Proof

Jun 07, 2020

Abstract: WordNet-like Lexical Databases (WLDs) group English words into sets of synonyms called "synsets." Standard WLDs are used in many successful text-mining applications. However, they assume that all word-senses represent the meaning of their corresponding synsets to the same degree, which is not generally true. To overcome this limitation, several fuzzy versions of synsets have been proposed. To the best of our knowledge, these studies share a common trait: they do not aim to produce fuzzified versions of the existing WLDs but instead build new WLDs from scratch. This has limited the attention they have received from the text-mining community, many of whose resources and applications are based on the existing WLDs. In this study, we present an algorithm for constructing fuzzy versions of WLDs in any language, given a corpus of documents and a word-sense disambiguation (WSD) system for that language. Then, using the Open American National Corpus and UKB WSD as algorithm inputs, we construct and publish online the fuzzified version of English WordNet (FWN). We also provide a theoretical (mathematical) proof of the validity of its results.
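
The abstract does not detail the fuzzification algorithm. The following is therefore only a hypothetical, frequency-based illustration of the general idea, not the authors' algorithm:

- run a WSD system over a corpus;
- count how often each word is tagged with each synset;
- scale the counts within each synset so that its most frequent member gets membership 1.

The function name `fuzzy_synsets`, the synset label, and the counts in the example are all made up.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def fuzzy_synsets(tagged: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """Hypothetical fuzzification: `tagged` holds one (word, synset) pair per
    WSD-tagged token; membership is the word's count scaled by the synset's
    largest count, so every value lies in (0, 1]."""
    counts = Counter(tagged)                       # (word, synset) -> occurrences
    by_synset: Dict[str, Dict[str, int]] = defaultdict(dict)
    for (word, synset), c in counts.items():
        by_synset[synset][word] = c
    result: Dict[str, Dict[str, float]] = {}
    for synset, words in by_synset.items():
        peak = max(words.values())                 # most frequent member -> 1.0
        result[synset] = {w: c / peak for w, c in words.items()}
    return result


if __name__ == "__main__":
    # Made-up WSD output: 6 tokens of "car", 3 of "auto", 2 of "automobile".
    tagged = ([("car", "car.n.01")] * 6 + [("auto", "car.n.01")] * 3
              + [("automobile", "car.n.01")] * 2)
    print(fuzzy_synsets(tagged))
```

In this example, "car" gets membership 1.0, "auto" 0.5, and "automobile" about 0.33. One limitation of this sketch is that synset members never observed in the corpus receive no membership at all.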

A Linear-complexity Multi-biometric Forensic Document Analysis System, by Fusing the Stylome and Signature Modalities

Jan 26, 2019

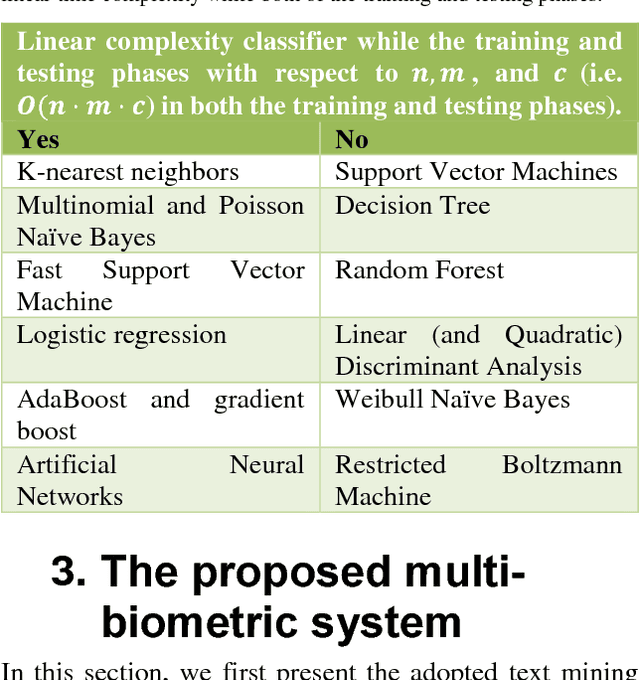

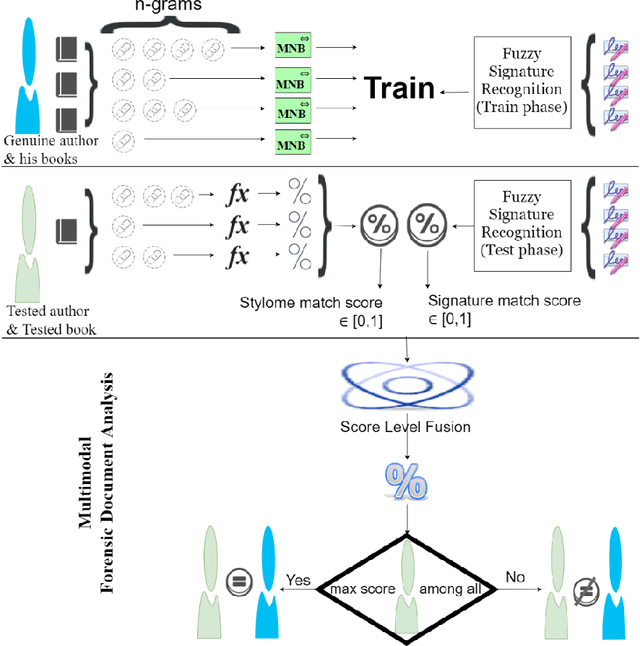

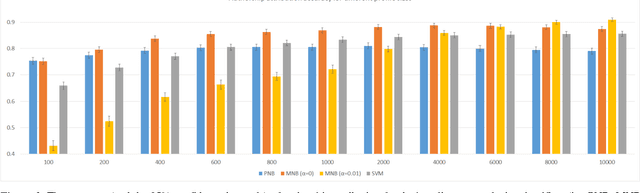

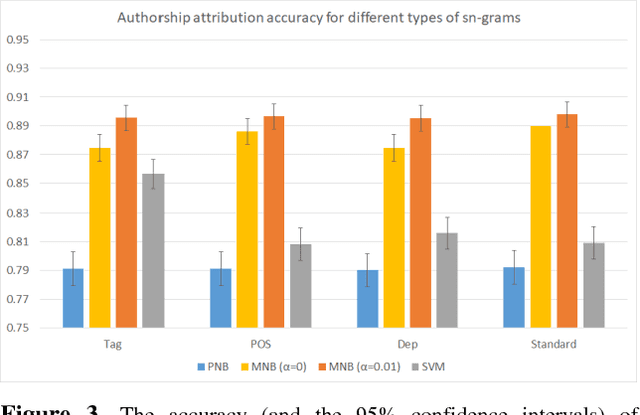

Abstract: Forensic Document Analysis (FDA) addresses the problem of determining the authorship of a given document. Identifying a document's writer via a number of its modalities (e.g., handwriting, signature, and linguistic writing style, i.e., stylome) has been studied in the FDA state of the art. However, no research has been conducted on fusing the stylome and signature modalities. In this paper, we propose such a bimodal FDA system, with a focus on time complexity; it has broad applications in judicial, police-related, and historical document analysis. The proposed bimodal system can be trained and tested in linear time. To this end, we first revisit Multinomial Naïve Bayes (MNB) as the best state-of-the-art linear-complexity authorship attribution system. We then prove that its accuracy is superior to that of well-known linear-complexity classifiers in the state of the art. Next, we propose a fuzzy version of MNB that can be fused with a well-known, state-of-the-art linear-complexity fuzzy signature recognition system. For evaluation, we construct a chimeric dataset composed of signatures and the textual contents of different letters. Despite its linear complexity, the proposed multi-biometric system is proven to meaningfully improve on its state-of-the-art unimodal counterparts. The improvement holds for the accuracy, F-Score, Detection Error Trade-off (DET), Cumulative Match Characteristics (CMC), and Match Score Histograms (MSH) evaluation metrics.
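
To make the linear-complexity claim concrete, here is a minimal Multinomial Naïve Bayes sketch with Laplace (add-alpha) smoothing. It follows the standard textbook formulation, not the paper's exact system. Training is a single pass over all tokens, and scoring a document costs O(tokens × authors), so both are linear in the amount of text. The normalized posteriors from `predict_proba` are one simple way to obtain graded per-author scores. The paper's fuzzy MNB and its fusion with the signature modality are not reproduced here. The class and method names are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List


class MultinomialNB:
    """Textbook multinomial Naive Bayes over token lists, with add-alpha smoothing."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha

    def fit(self, docs: List[List[str]], labels: List[str]) -> "MultinomialNB":
        self.class_docs = Counter(labels)             # documents per author
        self.token_counts = defaultdict(Counter)      # author -> token -> count
        self.total_tokens: Counter = Counter()        # author -> total tokens
        self.vocab: set = set()
        for tokens, y in zip(docs, labels):           # one pass: linear in corpus size
            self.token_counts[y].update(tokens)
            self.total_tokens[y] += len(tokens)
            self.vocab.update(tokens)
        self.n_docs = len(labels)
        return self

    def log_scores(self, tokens: List[str]) -> Dict[str, float]:
        """Unnormalized log-posterior per author; tokens outside the vocabulary are skipped."""
        v = len(self.vocab)
        scores = {}
        for y, nd in self.class_docs.items():
            s = math.log(nd / self.n_docs)                        # log prior
            denom = self.total_tokens[y] + self.alpha * v
            for t in tokens:
                if t in self.vocab:
                    s += math.log((self.token_counts[y][t] + self.alpha) / denom)
            scores[y] = s
        return scores

    def predict_proba(self, tokens: List[str]) -> Dict[str, float]:
        """Posteriors normalized with the log-sum-exp trick for numerical stability."""
        scores = self.log_scores(tokens)
        m = max(scores.values())
        exps = {y: math.exp(s - m) for y, s in scores.items()}
        z = sum(exps.values())
        return {y: e / z for y, e in exps.items()}

    def predict(self, tokens: List[str]) -> str:
        scores = self.log_scores(tokens)
        return max(scores, key=scores.get)
```

A typical usage is `MultinomialNB().fit(docs, authors).predict(tokens)`, where each document is a list of tokens and each label is an author identifier.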
