Mohammad Mahdi Behzadi

Weakly-Supervised Deep Learning Model for Prostate Cancer Diagnosis and Gleason Grading of Histopathology Images

Dec 25, 2022

Abstract: Prostate cancer is the most common cancer in men worldwide and the second leading cause of cancer death in the United States. One of the prognostic features in prostate cancer is the Gleason grading of histopathology images. Pathologists assign the Gleason grade based on tumor architecture in Hematoxylin and Eosin (H&E) stained whole slide images (WSIs). This process is time-consuming and has known interobserver variability. In the past few years, deep learning algorithms have been used to analyze histopathology images, delivering promising results for grading prostate cancer. However, most of these algorithms rely on fully annotated datasets, which are expensive to generate. In this work, we propose a novel weakly-supervised algorithm to classify prostate cancer grades. The proposed algorithm consists of three steps: (1) extracting discriminative areas in a histopathology image by employing a Multiple Instance Learning (MIL) algorithm based on Transformers, (2) representing the image by constructing a graph from the discriminative patches, and (3) classifying the image into its Gleason grades by developing a Graph Convolutional Neural Network (GCN) based on the gated attention mechanism. We evaluated our algorithm using publicly available datasets, including the TCGA-PRAD, PANDA, and Gleason 2019 challenge datasets. We also cross-validated the algorithm on an independent dataset. Results show that the proposed model achieved state-of-the-art performance in the Gleason grading task in terms of accuracy, F1 score, and Cohen's kappa. The code is available at https://github.com/NabaviLab/Prostate-Cancer.
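Of the three reported metrics, Cohen's kappa is the least familiar: it measures agreement between two label sequences after correcting for the agreement expected by chance, kappa = (p_o - p_e) / (1 - p_e). The following is a minimal sketch of the unweighted form, not the authors' evaluation code. The abstract does not say whether a weighted variant was used, and the function name and the example labels are hypothetical.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Unweighted Cohen's kappa between two equal-length label sequences."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(labels_a)

    # Observed agreement: fraction of items on which the two raters match.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement, computed from each rater's marginal label frequencies.
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    p_e = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)

    if p_e == 1.0:
        # Both raters used one identical label throughout; treat as full agreement.
        return 1.0
    return (p_o - p_e) / (1.0 - p_e)


# Hypothetical grade labels for illustration only (not data from the paper).
reference = [3, 3, 4, 4, 5, 5]
predicted = [3, 3, 4, 5, 5, 5]
kappa = cohen_kappa(reference, predicted)
```

Unlike plain accuracy, kappa drops toward zero when the agreement could be explained by both raters favoring the same frequent classes, which matters for imbalanced grade distributions.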

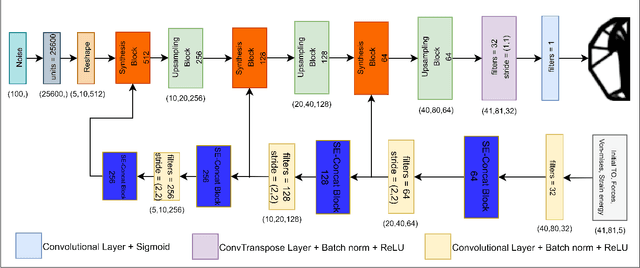

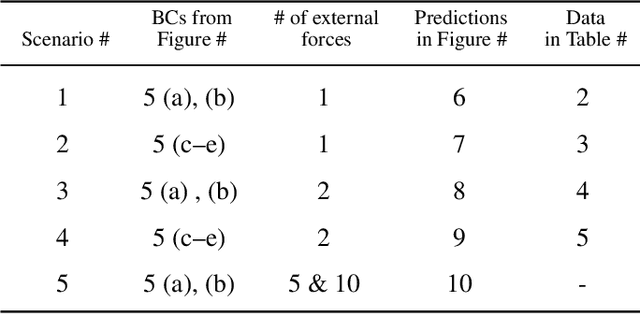

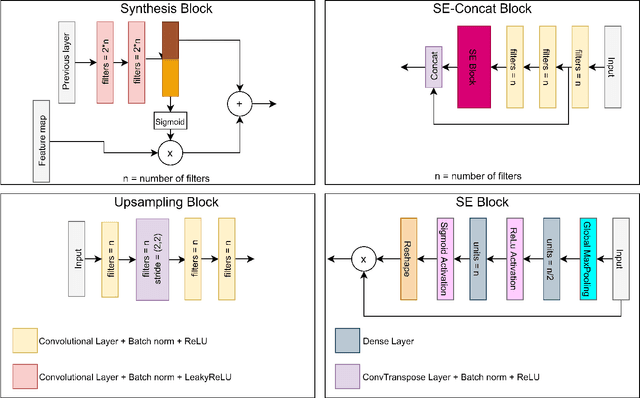

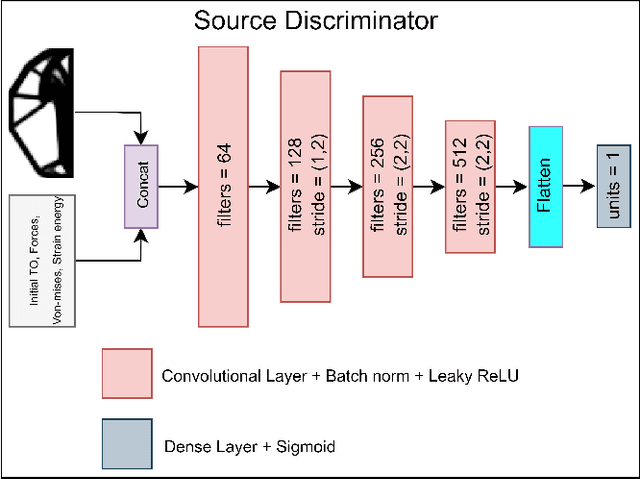

GANTL: Towards Practical and Real-Time Topology Optimization with Conditional GANs and Transfer Learning

May 07, 2021

Abstract: Many machine learning methods have recently been developed to circumvent the high computational cost of gradient-based topology optimization. These methods typically require extensive and costly training datasets, generalize poorly to unseen boundary and loading conditions and to new domains, and do not take the topological constraints of the predictions into consideration, producing predictions with inconsistent topologies. We present a deep learning method based on generative adversarial networks for generative design exploration. The proposed method combines the generative power of conditional GANs with the knowledge transfer capabilities of transfer learning methods to predict optimal topologies for unseen boundary conditions. We also show that the knowledge transfer capabilities embedded in the design of the proposed algorithm significantly reduce the size of the training dataset compared to traditional deep neural or adversarial networks. Moreover, we formulate a topological loss function based on the bottleneck distance obtained from the persistence diagrams of the structures and demonstrate a significant improvement in the topological connectivity of the predicted structures. We use numerous examples to explore the efficiency and accuracy of the proposed approach for both seen and unseen boundary conditions in 2D.
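To make "topological connectivity" concrete, the sketch below counts the connected solid regions of a binarized 2D design; a structurally sound prediction would normally form a single component. This is a much simpler stand-in and not the paper's loss: it does not compute persistence diagrams or the bottleneck distance. The function name, the 4-neighbor connectivity rule, and the example grids are all assumptions made for illustration.

```python
from collections import deque


def count_components(grid):
    """Count 4-connected components of solid (truthy) cells in a 2D grid."""
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    components = 0

    for r in range(rows):
        for c in range(cols):
            if not grid[r][c] or seen[r][c]:
                continue
            # Unvisited solid cell: start a new component and flood-fill it.
            components += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and grid[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return components


# Hypothetical binarized designs (1 = material, 0 = void).
connected_design = [
    [1, 1, 1],
    [0, 1, 0],
    [1, 1, 1],
]
broken_design = [
    [1, 1, 0],
    [0, 0, 0],
    [0, 1, 1],
]
n_connected = count_components(connected_design)
n_broken = count_components(broken_design)
```

A component count is a coarse check only. A persistence-based measure, as described in the abstract, also captures holes and how robust each feature is across density thresholds.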
