Mohamed ElHelw

Improved LiDAR Odometry and Mapping using Deep Semantic Segmentation and Novel Outliers Detection

Mar 05, 2024

Abstract: Perception is a key element for enabling intelligent autonomous navigation. Understanding the semantics of the surrounding environment and accurate vehicle pose estimation are essential capabilities for autonomous vehicles, including self-driving cars and mobile robots that perform complex tasks. Fast-moving platforms like self-driving cars pose a hard challenge for localization and mapping algorithms. In this work, we propose a novel framework for real-time LiDAR odometry and mapping based on the LOAM architecture for fast-moving platforms. Our framework utilizes semantic information produced by a deep learning model to improve point-to-line and point-to-plane matching between LiDAR scans and to build a semantic map of the environment, leading to more accurate motion estimation using LiDAR data. We observe that including semantic information in the matching process introduces a new type of outlier match, where matches occur between different objects of the same semantic class. To this end, we propose a novel algorithm that explicitly identifies and discards potential outliers in the matching process. In our experiments, we study the effect of improving the matching process on the robustness of LiDAR odometry against high-speed motion. Our experimental evaluations on the KITTI dataset demonstrate that utilizing semantic information and rejecting outliers significantly enhance the robustness of LiDAR odometry and mapping when there are large gaps between scan acquisition poses, which is typical for fast-moving platforms.
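The idea of semantically gated matching described in the abstract can be illustrated with a minimal sketch. It is not the paper's algorithm: the function names, the tuple-based point format, and the simple distance threshold used to reject candidates are all assumptions made for illustration. The point-to-plane distance is the standard geometric residual used in LOAM-style pipelines.

```python
import math

def point_to_plane_distance(p, a, b, c):
    """Distance from point p to the plane through points a, b, c (3-tuples)."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    # Plane normal is the cross product of two in-plane edge vectors.
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    norm = math.sqrt(sum(x * x for x in n))
    if norm == 0.0:
        raise ValueError("a, b, c are collinear; plane is degenerate")
    return abs(sum(n[i] * (p[i] - a[i]) for i in range(3))) / norm

def semantic_match(query, query_label, targets, target_labels, max_dist):
    """Index of the nearest target sharing query_label, or None.

    max_dist is a hypothetical rejection threshold standing in for the
    paper's outlier detection, whose details the abstract does not give.
    """
    best, best_d = None, max_dist
    for i, (t, label) in enumerate(zip(targets, target_labels)):
        if label != query_label:
            continue  # never match across semantic classes
        d = math.dist(query, t)
        if d <= best_d:
            best, best_d = i, d
    return best
```

Gating by label alone still allows a point on one car to match a point on a different car, which is the kind of same-class outlier the abstract mentions; the threshold here is only a crude stand-in for handling that case.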

RSDiff: Remote Sensing Image Generation from Text Using Diffusion Model

Sep 03, 2023

Abstract: Satellite imagery generation and super-resolution are pivotal tasks in remote sensing, demanding high-quality, detailed images for accurate analysis and decision-making. In this paper, we propose an innovative and lightweight approach that employs two-stage diffusion models to gradually generate high-resolution satellite images purely based on text prompts. Our pipeline comprises two interconnected diffusion models: a Low-Resolution Generation Diffusion Model (LR-GDM) that generates low-resolution images from text and a Super-Resolution Diffusion Model (SRDM) that conditionally produces the high-resolution output. The LR-GDM synthesizes low-resolution images by computing the correlations between the text embedding and the image embedding in a shared latent space, capturing the essential content and layout of the desired scenes. Subsequently, the SRDM takes the generated low-resolution image and its corresponding text prompt and efficiently produces the high-resolution counterpart, infusing fine-grained spatial details and enhancing visual fidelity. Experiments are conducted on the commonly used Remote Sensing Image Captioning Dataset (RSICD). Our results demonstrate that our approach outperforms existing state-of-the-art (SoTA) models in generating satellite images with realistic geographical features, weather conditions, and land structures while achieving remarkable super-resolution results for increased spatial precision.

STG-MTL: Scalable Task Grouping for Multi-Task Learning Using Data Map

Jul 07, 2023

Abstract: Multi-Task Learning (MTL) is a powerful technique that has gained popularity due to its performance improvement over traditional Single-Task Learning (STL). However, MTL is often challenging because there is an exponential number of possible task groupings, which can make it difficult to choose the best one, and some groupings might degrade performance due to negative interference between tasks. Furthermore, existing solutions suffer from severe scalability issues, limiting any practical application. In our paper, we propose a new data-driven method that addresses these challenges and provides a scalable and modular solution for classification task grouping based on hand-crafted features, specifically Data Maps, which capture the training behavior for each classification task during MTL training. Our experiments demonstrate the method's effectiveness, even on an unprecedented number of tasks (up to 100).
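As a rough illustration of the Data Map features mentioned above: a Data Map usually summarizes each training example by its confidence (the mean probability assigned to the gold label across epochs) and its variability (the standard deviation of that probability). The sketch below computes both. The use of the population standard deviation and the `task_distance` helper are assumptions for illustration; the abstract does not specify how tasks are actually compared or grouped.

```python
import math
import statistics

def data_map_features(gold_probs):
    """Confidence and variability for one example.

    gold_probs: probabilities assigned to the gold label, one per epoch.
    """
    confidence = statistics.fmean(gold_probs)
    variability = statistics.pstdev(gold_probs)  # population std (assumed)
    return confidence, variability

def task_distance(features_a, features_b):
    """Hypothetical task dissimilarity: Euclidean distance between two
    tasks' per-example (confidence, variability) lists, computed on the
    same examples in the same order."""
    if len(features_a) != len(features_b):
        raise ValueError("tasks must be described on the same examples")
    return math.sqrt(sum((ca - cb) ** 2 + (va - vb) ** 2
                         for (ca, va), (cb, vb) in zip(features_a, features_b)))
```

Under this sketch, tasks whose examples show similar training dynamics end up close together, and any standard clustering method could then propose groupings without enumerating the exponential number of candidates.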

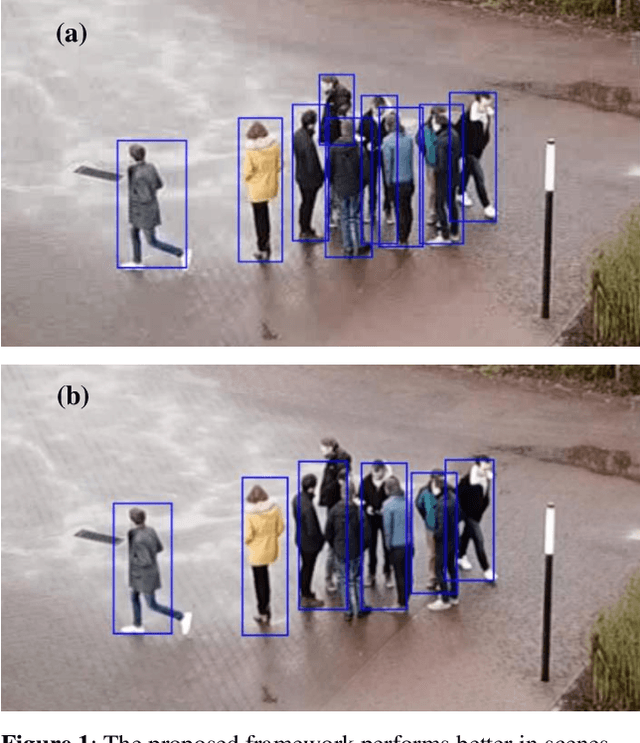

Robust Real-time Pedestrian Detection in Aerial Imagery on Jetson TX2

May 16, 2019

Abstract: Detection of pedestrians in aerial imagery captured by drones has many applications, including intersection monitoring, patrolling, and surveillance, to name a few. However, the problem is involved due to the continuously changing camera viewpoint and object appearance, as well as the need for lightweight algorithms that run on on-board embedded systems. To address this issue, the paper proposes a framework for pedestrian detection in videos based on the YOLO object detection network [6] that achieves a throughput of more than 5 FPS on the Jetson TX2 embedded board. The framework exploits deep learning for robust operation and uses a pre-trained model without any additional training, which makes it flexible to apply to different setups with a minimal amount of tuning. The method achieves ~81 mAP when applied to a sample video from the Embedded Real-Time Inference (ERTI) Challenge, where pedestrians are monitored by a UAV.
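Using a pre-trained detector without retraining typically means keeping only the detections for the class of interest and measuring throughput over the processed frames. The sketch below shows that post-processing step only. The detection tuple layout, the `"person"` class name, and the confidence threshold are assumptions for illustration and are not taken from the paper.

```python
def filter_pedestrians(detections, min_score=0.5, class_name="person"):
    """Keep detections of the pedestrian class above a confidence threshold.

    detections: iterable of (label, score, box) tuples, a hypothetical
    format for the output of a pre-trained detector.
    """
    return [d for d in detections
            if d[0] == class_name and d[1] >= min_score]

def throughput_fps(num_frames, elapsed_seconds):
    """Average frames per second over a processed video segment."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return num_frames / elapsed_seconds
```

The threshold is the main tuning knob in such a setup: lowering it recovers small, distant pedestrians at the cost of more false positives.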
