Mingfei Wang

Generating Adjacency Matrix for Video-Query based Video Moment Retrieval

Aug 19, 2020

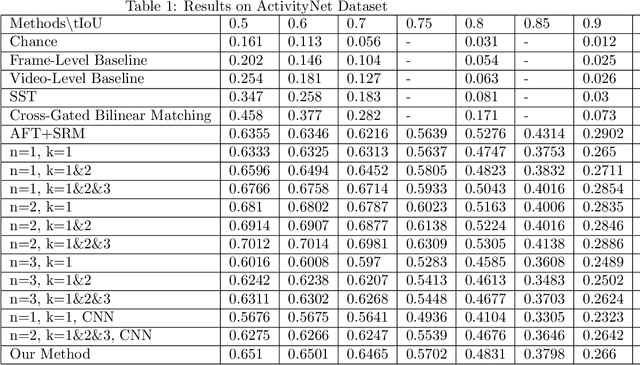

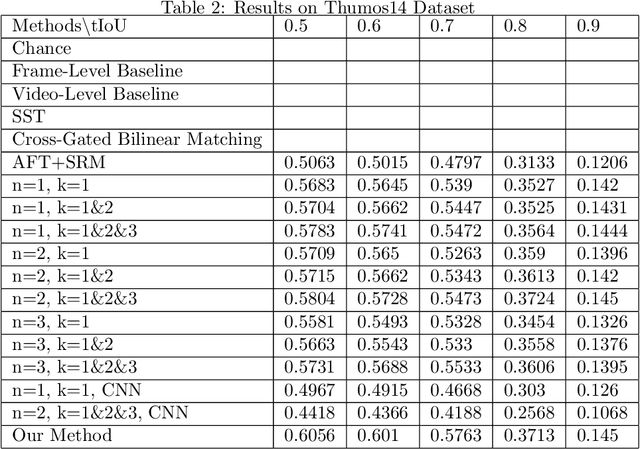

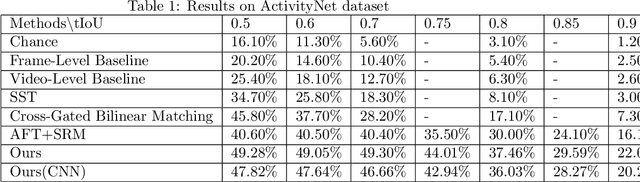

Abstract: In this paper, we continue our work on the Video-Query based Video Moment Retrieval task. Building on our use of graph convolution to extract intra-video and inter-video frame features, we improve the method with similarity-metric based graph convolution, whose weighted adjacency matrix is obtained by computing a similarity metric between the features of any two different timesteps in the graph. Experiments on the ActivityNet v1.2 and Thumos14 datasets show the effectiveness of this improvement, and the method outperforms state-of-the-art methods.
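As a rough illustration of the idea of a similarity-based weighted adjacency matrix, the sketch below builds one from per-timestep features and applies a single propagation step. The abstract does not name the similarity metric or the normalization, so cosine similarity, clipping of negative values, row normalization, and the function names `cosine`, `similarity_adjacency`, and `propagate` are all assumptions made for this example, not the paper's method.

```python
import math


def cosine(u, v):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def similarity_adjacency(features):
    """Build a row-normalized weighted adjacency matrix from per-timestep features.

    Assumptions (not taken from the paper): cosine similarity as the metric,
    negative similarities clipped to zero, and no self-loops, since the
    abstract speaks of "two different timesteps".
    """
    n = len(features)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                adj[i][j] = max(0.0, cosine(features[i], features[j]))
        row_sum = sum(adj[i])
        if row_sum > 0:
            adj[i] = [w / row_sum for w in adj[i]]
    return adj


def propagate(adj, features):
    """One weight-free propagation step: each node takes the weighted mean of its neighbors."""
    n, dim = len(features), len(features[0])
    return [
        [sum(adj[i][j] * features[j][d] for j in range(n)) for d in range(dim)]
        for i in range(n)
    ]


# Three timesteps with 2-dimensional features (toy values).
features = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
adj = similarity_adjacency(features)
fused = propagate(adj, features)
```

In this toy input the first two timesteps are identical and orthogonal to the third, so the first two nodes connect only to each other and the third node has no weighted neighbors. A learned weight matrix and nonlinearity, as in a standard graph convolution layer, are left out to keep the sketch short.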

Graph Neural Network for Video-Query based Video Moment Retrieval

Jul 20, 2020

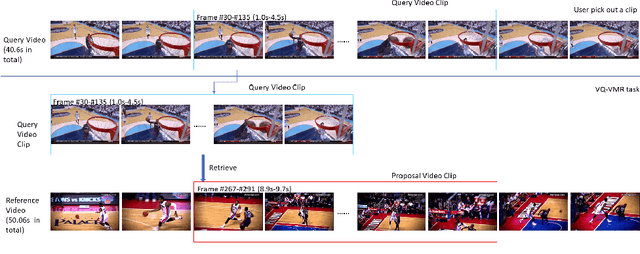

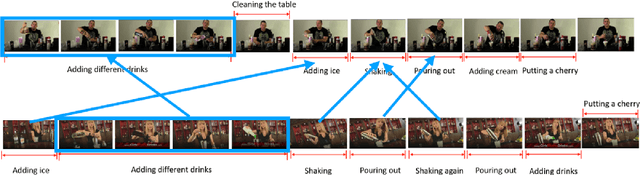

Abstract: In this paper, we focus on the Video Query based Video Moment Retrieval (VQ-VMR) task, which uses a query video clip as input to retrieve a semantically relevant video clip from another untrimmed long video. We find that in VQ-VMR datasets there is no consistent relationship between frame-level feature similarity and video-level feature similarity, which affects feature fusion among frames. However, existing VQ-VMR methods do not fully consider this. Taking this phenomenon into account, we treat video features as a graph by concatenating the query video feature and the proposal video feature along the time dimension, where each timestep is treated as a node and each row of the feature matrix is treated as the feature of that node. Then, with the power of graph neural networks, we propose a Multi-Graph Feature Fusion Module to fuse the relation features of this graph. After evaluating our method on the ActivityNet v1.2 and Thumos14 datasets, we find that it outperforms state-of-the-art methods.
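The graph construction described in the abstract can be sketched in a few lines: stack the query and proposal feature matrices along the time axis so that every row becomes one node. The intra-video and inter-video edge masks below are one hypothetical way to obtain several graphs over the same nodes. The abstract does not specify how the graphs in the Multi-Graph Feature Fusion Module are defined, so `build_graph` and both masks are assumptions made for this example.

```python
def build_graph(query_feats, proposal_feats):
    """Concatenate two feature matrices along time; each row becomes a graph node.

    Returns the node features plus two 0/1 edge masks. The masks are an
    illustrative assumption, not the paper's definition:
      intra[i][j] = 1 if nodes i and j are different timesteps of the same video
      inter[i][j] = 1 if nodes i and j come from different videos
    """
    nodes = [list(row) for row in query_feats] + [list(row) for row in proposal_feats]
    # 0 marks a query timestep, 1 marks a proposal timestep.
    source = [0] * len(query_feats) + [1] * len(proposal_feats)
    n = len(nodes)
    intra = [
        [1 if i != j and source[i] == source[j] else 0 for j in range(n)]
        for i in range(n)
    ]
    inter = [
        [1 if source[i] != source[j] else 0 for j in range(n)]
        for i in range(n)
    ]
    return nodes, intra, inter


# Toy input: 2 query timesteps and 3 proposal timesteps, feature dimension 2.
query = [[0.1, 0.9], [0.2, 0.8]]
proposal = [[0.9, 0.1], [0.5, 0.5], [0.1, 0.9]]
nodes, intra, inter = build_graph(query, proposal)
```

Here the graph has five nodes: rows 0 and 1 come from the query clip and rows 2 to 4 from the proposal clip.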
