Milad Taleby Ahvanooey

Classification of ADHD Patients Using Kernel Hierarchical Extreme Learning Machine

Jun 28, 2022

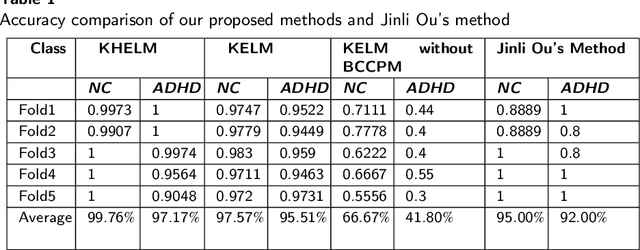

Abstract: The application of deep learning models to diagnosing neuropsychiatric diseases from brain imaging data has recently received growing attention. In practice, however, exploring interactions in brain functional connectivity based on operational magnetic resonance imaging data is critical for studying mental illness. Because Attention-Deficit and Hyperactivity Disorder (ADHD) is a chronic disease that is very difficult to diagnose in its early stages, machine learning models are needed to improve diagnostic accuracy so that patients can be treated before their condition becomes critical. In this study, we use the dynamics of brain functional connectivity to model features from medical imaging data, capturing the differences in brain function interactions between Normal Control (NC) and ADHD subjects. To that end, we employ the Bayesian connectivity change-point model to detect brain dynamics using the local binary encoding approach, and a kernel hierarchical extreme learning machine to classify the features. To verify our model, we evaluated it on several real-world children's datasets, where it achieved superior classification rates compared to state-of-the-art models.
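As a rough illustration of the classifier family named in the abstract, the following is a minimal sketch of a single-layer kernel extreme learning machine, not the hierarchical variant used in the paper. It assumes an RBF kernel and the standard closed-form solution beta = (K + I/C)^-1 y. The function names, the `gamma` and `C` values, and the toy two-dimensional data are all hypothetical and stand in for real connectivity features.

```python
import math

def rbf_kernel(a, b, gamma):
    """Gaussian (RBF) kernel between two feature vectors."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * sq_dist)

def solve_linear(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                for c in range(col, n + 1):
                    M[r][c] -= factor * M[col][c]
    return [M[i][n] / M[i][i] for i in range(n)]

def fit_kernel_elm(X, y, gamma=0.5, C=100.0):
    """Return output weights beta = (K + I/C)^-1 y for labels in {-1, +1}."""
    n = len(X)
    K = [[rbf_kernel(X[i], X[j], gamma) for j in range(n)] for i in range(n)]
    for i in range(n):
        K[i][i] += 1.0 / C  # ridge term controlled by the regularization C
    return solve_linear(K, [float(t) for t in y])

def predict_kernel_elm(x, X, beta, gamma=0.5):
    """Classify x by the sign of the kernel expansion over training points."""
    score = sum(b * rbf_kernel(x, xi, gamma) for xi, b in zip(X, beta))
    return 1 if score >= 0 else -1

# Hypothetical toy data: -1 stands for NC, +1 for ADHD.
X_train = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]]
y_train = [-1, -1, 1, 1]
beta = fit_kernel_elm(X_train, y_train)
print(predict_kernel_elm([0.2, 0.5], X_train, beta))
print(predict_kernel_elm([5.1, 5.4], X_train, beta))
```

The closed-form solve is what makes this family of models fast to train: there is no iterative weight update, only one linear system whose size equals the number of training samples.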

A New Gene Selection Algorithm using Fuzzy-Rough Set Theory for Tumor Classification

Mar 26, 2020

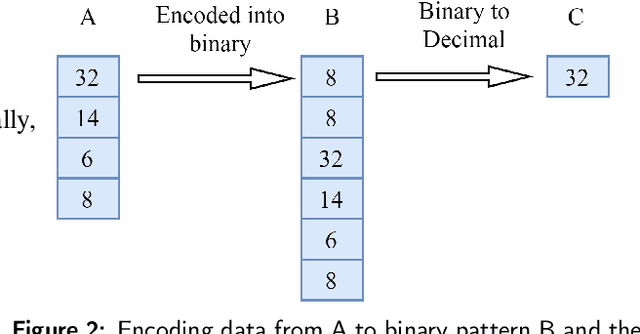

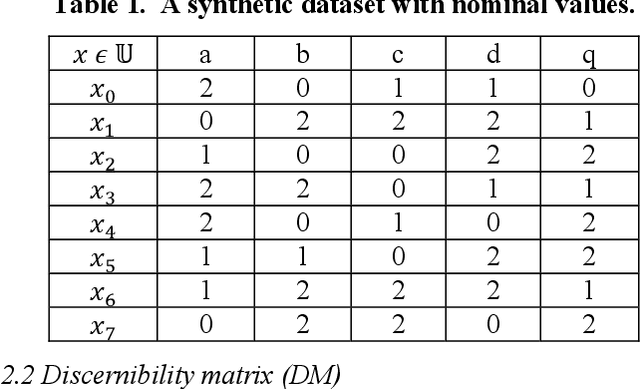

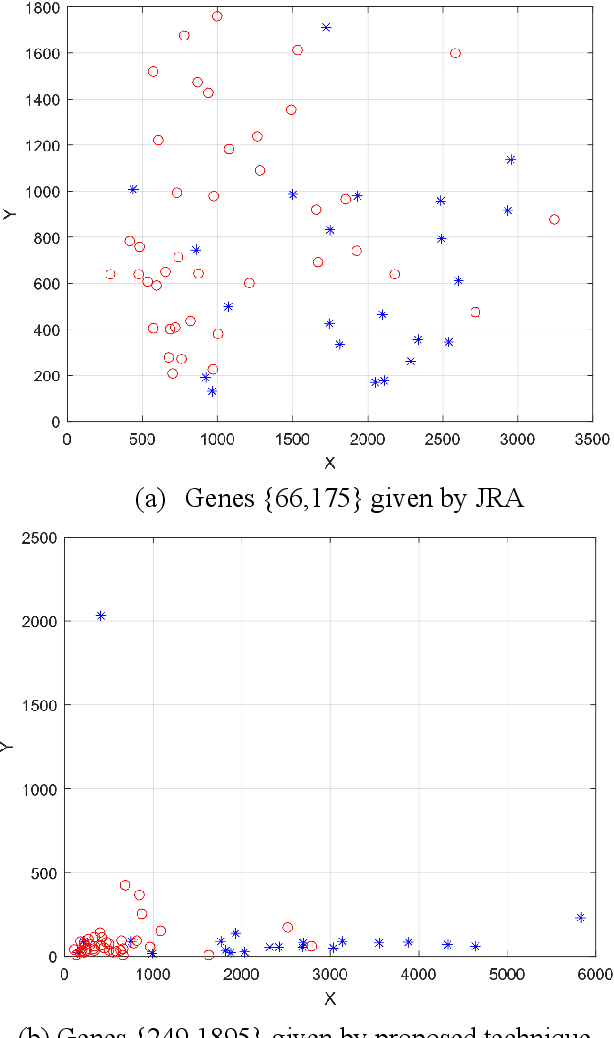

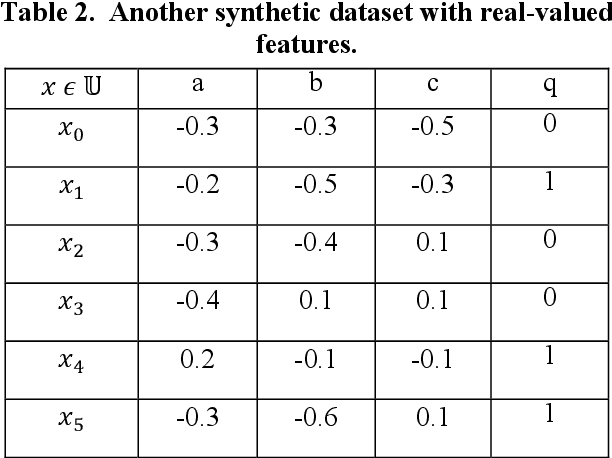

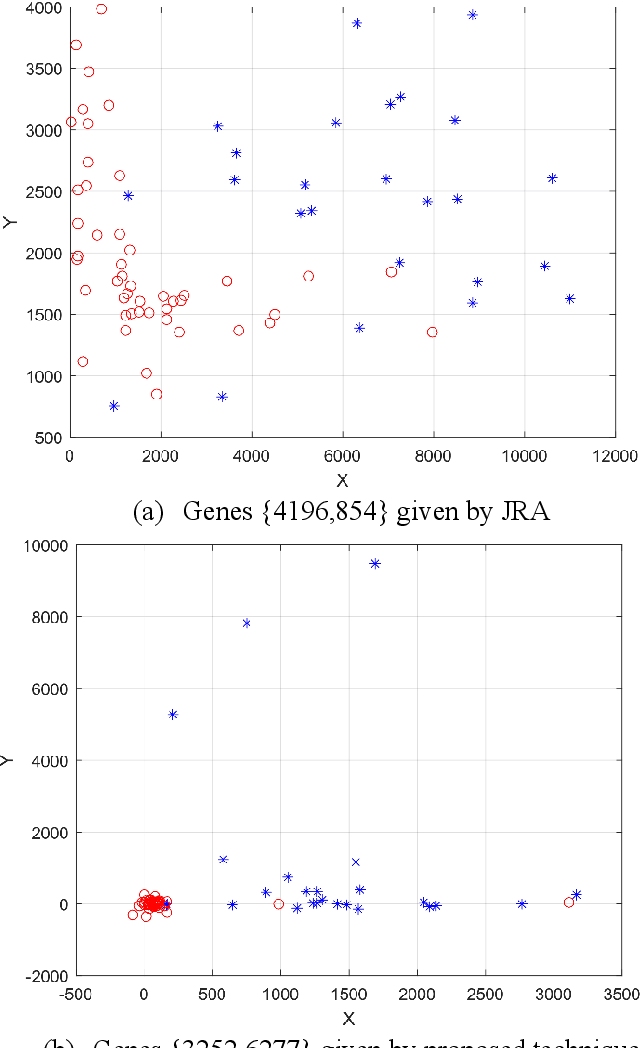

Abstract: In statistics and machine learning, feature selection is the process of picking a subset of relevant attributes for use in a predictive model. Recently, rough set-based feature selection techniques, which employ feature dependency to perform the selection process, have drawn attention. In bioinformatics applications, classification of tumors based on gene expression is used to determine the proper treatment and prognosis of the disease. Microarray gene expression data contain many superfluous genes, with high dimensionality and few training instances. Since exact supervised classification of gene expression instances in such high-dimensional problems is very complex, the selection of appropriate genes is a crucial task for tumor classification. In this study, we present a new technique for gene selection using a discernibility matrix of fuzzy-rough sets. The proposed technique takes into account the similarity of instances that have the same class label as well as those with different class labels to improve the gene selection results, whereas previous state-of-the-art approaches only address the similarity of instances with different class labels. To that end, we extend the Johnson reducer technique to the fuzzy case. Experimental results demonstrate that this technique provides better efficiency than state-of-the-art approaches.

* 10 pages, 3 figures, 6 tables
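The gene selection abstract above builds on the Johnson reducer over a discernibility matrix. The following is a minimal sketch of the classical crisp version only: it assumes discretized attribute values and considers only pairs of instances with different class labels. It does not implement the fuzzy extension or the same-class similarity term that the paper proposes. The function names and the toy decision table are hypothetical.

```python
from collections import Counter
from itertools import combinations

def discernibility_entries(rows, labels):
    """For each pair of instances with different labels, collect the set of
    attribute indices on which the two instances differ."""
    entries = []
    for i, j in combinations(range(len(rows)), 2):
        if labels[i] != labels[j]:
            diff = frozenset(
                k for k, (a, b) in enumerate(zip(rows[i], rows[j])) if a != b
            )
            if diff:  # identical rows with different labels cannot be discerned
                entries.append(diff)
    return entries

def johnson_reduct(entries):
    """Greedy Johnson heuristic: repeatedly pick the attribute that occurs in
    the most remaining entries, then drop every entry it covers."""
    remaining = list(entries)
    selected = []
    while remaining:
        counts = Counter(attr for entry in remaining for attr in entry)
        # Ties are broken in favor of the lowest attribute index.
        best = max(sorted(counts), key=lambda attr: counts[attr])
        selected.append(best)
        remaining = [entry for entry in remaining if best not in entry]
    return selected

# Hypothetical toy table: three discretized "genes" per sample.
rows = [(1, 0, 1), (1, 1, 0), (0, 0, 1), (0, 1, 0)]
labels = ["tumor", "tumor", "normal", "normal"]
reduct = johnson_reduct(discernibility_entries(rows, labels))
print(reduct)
```

In this toy table the first attribute alone separates every tumor sample from every normal sample, so the greedy loop stops after one pick. The heuristic returns a small covering set, not necessarily a minimal one.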

An Overview of Two Age Synthesis and Estimation Techniques

Jan 26, 2020

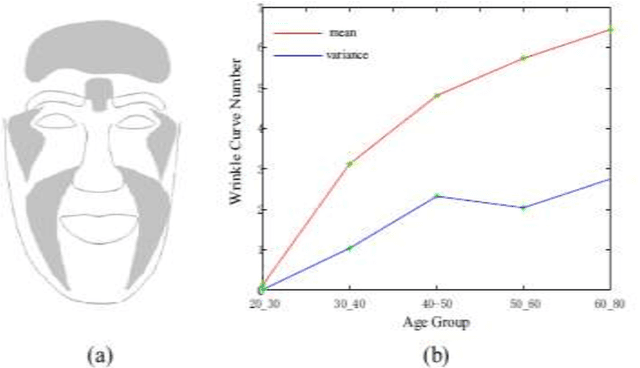

Abstract: Age estimation is a technique for predicting human age from digital facial images: it analyzes a person's face image and estimates the person's age in years. Intelligent age estimation and age synthesis have become particularly prevalent research topics in computer vision and face verification systems. Age synthesis is defined as rendering a facial image aesthetically with rejuvenating or natural aging effects on the person's face. Age estimation is defined as automatically labeling a facial image with the age group (year range) or the exact age (year) of the person's face. In this case study, we give an overview of the existing models, popular techniques, system performances, and technical challenges related to facial image-based age synthesis and estimation. The main goal of this review is to provide an accessible understanding of the field and to identify promising future directions through systematic discussion.

An Effective Automatic Image Annotation Model Via Attention Model and Data Equilibrium

Jan 26, 2020

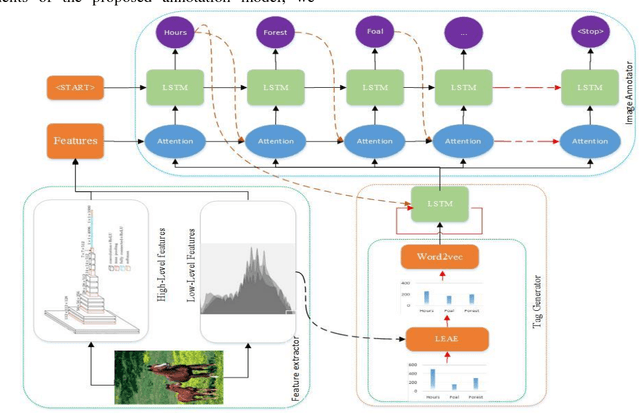

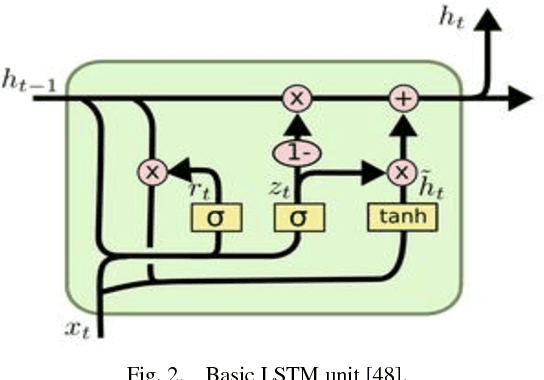

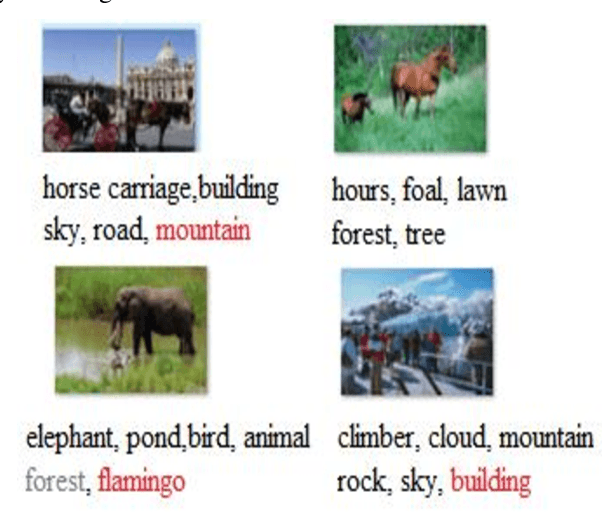

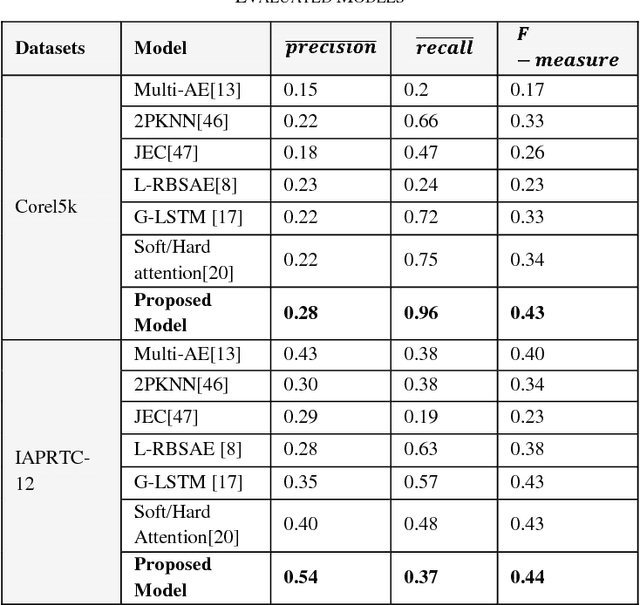

Abstract: A huge number of images are now available, yet retrieving a required image remains a challenging task for ordinary users of computer vision systems. Over the past two decades, many studies have been introduced to improve the performance of automatic image annotation, traditionally focusing on content-based image retrieval. However, recent research demonstrates that there is a semantic gap between content-based image retrieval and the image semantics understandable by humans. As a result, existing research in this area has sought to bridge the semantic gap between low-level image features and high-level semantics. The conventional method of bridging the semantic gap is automatic image annotation (AIA), which extracts semantic features using machine learning techniques. In this paper, we propose a novel AIA model based on deep learning feature extraction. The proposed model has three phases: a feature extractor, a tag generator, and an image annotator. First, the model automatically extracts high- and low-level features based on the dual-tree continuous wavelet transform (DT-CWT), singular value decomposition, the distribution of color tone, and a deep neural network. Next, the tag generator balances the dictionary of annotated keywords using a new log-entropy auto-encoder (LEAE) and then describes these keywords by word embedding. Finally, the annotator uses a long short-term memory (LSTM) network to obtain the importance degree of specific image features. Experiments conducted on two benchmark datasets confirm the superiority of the proposed model over previous models in terms of performance criteria.

* 9 pages, 3 figures
