Mikhail Kiselev

Convolutional Spiking Neural Network for Image Classification

May 13, 2025

Abstract: We consider an implementation of a convolutional architecture in a spiking neural network (SNN) used to classify images. As in traditional neural networks, the convolutional layers form informational "features" used as predictors in the SNN-based classifier with the CoLaNET architecture. Since weight sharing contradicts the synaptic plasticity locality principle, the convolutional weights are fixed in our approach. We describe a methodology for determining them from a representative set of images from the same domain as the classified ones. We illustrate and test our approach on a classification task from the NEOVISION2 benchmark.

A Digital Machine Learning Algorithm Simulating Spiking Neural Network CoLaNET

Mar 21, 2025

Abstract: During the last several years, our research team has worked on the development of a spiking neural network (SNN) architecture that could be used in a wide range of supervised learning classification tasks. It should work under the condition that all participating signals (the classified object description, correct class label and SNN decision) have a spiking nature. As a result, the CoLaNET (columnar layered network) SNN architecture was invented. The distinctive feature of this architecture is a combination of prototypical network structures corresponding to different classes and significantly distinctive instances of one class (=columns) and functionally differing populations of neurons inside columns (=layers). The other distinctive feature is a novel combination of anti-Hebbian and dopamine-modulated plasticity. While CoLaNET is relatively simple, it includes several hyperparameters. Their choice for particular classification tasks is not trivial. Besides that, specific features of the classified data (e.g. classification of separate pictures, as in the MNIST dataset, vs. classifying objects in a continuous video stream) require certain modifications of the CoLaNET structure. To solve these problems, a deep mathematical exploration of CoLaNET should be carried out. However, SNNs, being stochastic discrete systems, are usually very hard to analyze exactly. To make this easier, I developed a continuous numeric (non-spiking) machine learning algorithm which approximates CoLaNET behavior with satisfactory accuracy. It is described in the paper. At present, it is being studied by exact analytic methods. We hope that the results of this study could be applied to direct calculation of CoLaNET hyperparameters and optimization of its structure.

Classifying Images with CoLaNET Spiking Neural Network -- the MNIST Example

Sep 12, 2024

Abstract: In the present paper, it is shown how the columnar/layered CoLaNET spiking neural network (SNN) architecture can be used in supervised learning image classification tasks. Image pixel brightness is coded by the spike count during the image presentation period. The image class label is indicated by the activity of special SNN input nodes (one node per class). The CoLaNET classification accuracy is evaluated on the MNIST benchmark. It is demonstrated that CoLaNET is almost as accurate as the most advanced machine learning algorithms (not using the convolutional approach).
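The input coding described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function name `encode_image`, the number of time steps, and the even spreading of spikes over the presentation period are all assumptions made for illustration. The only properties taken from the abstract are that brightness maps to spike count and that there is one label node per class.

```python
def encode_image(pixels, label, n_classes, n_steps=10, max_brightness=255):
    """Encode an image and its label as spike trains (hypothetical helper).

    Assumption: a pixel of brightness b emits floor(n_steps * b / max_brightness)
    spikes, spread evenly over the presentation period by an integer accumulator.
    """
    acc = [0] * len(pixels)          # per-pixel brightness accumulator
    pixel_spikes = []                # pixel_spikes[t][i] is 1 if pixel i fires at step t
    for _ in range(n_steps):
        step = []
        for i, b in enumerate(pixels):
            acc[i] += b
            if acc[i] >= max_brightness:
                acc[i] -= max_brightness
                step.append(1)
            else:
                step.append(0)
        pixel_spikes.append(step)
    # One input node per class; only the node of the correct class is active.
    label_spikes = [[1 if c == label else 0 for c in range(n_classes)]
                    for _ in range(n_steps)]
    return pixel_spikes, label_spikes


pixel_spikes, label_spikes = encode_image([0, 128, 255], label=2, n_classes=3)
spike_counts = [sum(step[i] for step in pixel_spikes) for i in range(3)]
```

In this sketch a black pixel never fires, a full-brightness pixel fires at every step, and intermediate values fall in between. A stochastic (Poisson-like) generator would be an equally plausible reading of "spike count" coding.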

CoLaNET -- A Spiking Neural Network with Columnar Layered Architecture for Classification

Sep 04, 2024

Abstract: In the present paper, I describe a spiking neural network (SNN) architecture which can be used in a wide range of supervised learning classification tasks. It is assumed that all participating signals (the classified object description, correct class label and SNN decision) have a spiking nature. The distinctive feature of this architecture is a combination of prototypical network structures corresponding to different classes and significantly distinctive instances of one class (=columns) and functionally differing populations of neurons inside columns (=layers). The other distinctive feature is a novel combination of anti-Hebbian and dopamine-modulated plasticity. The plasticity rules are local and do not use the backpropagation principle. Besides that, as in my previous studies, I was guided by the requirement that all neuron/plasticity models should be easily implemented on modern neurochips. I illustrate the high performance of my network on the MNIST benchmark.

From "What" to "When" -- a Spiking Neural Network Predicting Rare Events and Time to their Occurrence

Nov 09, 2023

Abstract: In reinforcement learning (RL) tasks, the ability to predict receiving a reward in the near or more distant future means the ability to evaluate the current state as more or less close to the target state (labelled by the reward signal). In the present work, we utilize a spiking neural network (SNN) to predict the time to the next target event (a reward, in the case of RL). In the context of SNNs, events are represented as spikes emitted by network neurons or input nodes. It is assumed that target events are indicated by spikes emitted by a special network input node. Using a description of the current state encoded in the form of spikes from the other input nodes, the network should predict the approximate time of the next target event. This research paper presents a novel approach to learning the corresponding predictive model by an SNN consisting of leaky integrate-and-fire (LIF) neurons. The proposed method leverages specially designed local synaptic plasticity rules and a novel columnar-layered SNN architecture. Similar to our previous works, this study places a strong emphasis on the hardware-friendliness of the proposed models, ensuring their efficient implementation on modern and future neuroprocessors. The proposed approach was tested on a simple reward prediction task in the context of one of the RL benchmark ATARI games, ping-pong. It was demonstrated that the SNN described in this paper gives superior prediction accuracy in comparison with precise machine learning techniques, such as decision tree algorithms and convolutional neural networks.
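For readers unfamiliar with the leaky integrate-and-fire (LIF) model mentioned in this abstract, the following is a minimal textbook-style sketch in discrete time. It is not the neuron model used in the paper: the function name `simulate_lif` and the parameter values (`leak`, `threshold`, `v_reset`) are illustrative assumptions, and no plasticity rule is included.

```python
def simulate_lif(inputs, leak=0.9, threshold=1.0, v_reset=0.0):
    """Simulate one discrete-time LIF neuron (illustrative parameters).

    At each step the membrane potential decays by the factor `leak`,
    the input is added, and a spike is emitted (with a reset) when the
    potential reaches `threshold`.
    """
    v = 0.0
    spikes = []
    for current in inputs:
        v = leak * v + current       # leak, then integrate
        if v >= threshold:           # fire
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
    return spikes


# Two closely spaced inputs sum to a spike; an isolated input does not.
spikes = simulate_lif([0.6, 0.6, 0.0, 0.0, 0.6])
```

The example input shows the temporal integration property: an input of 0.6 alone stays below threshold, but two such inputs on consecutive steps push the potential over it.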

A Spiking Binary Neuron -- Detector of Causal Links

Sep 15, 2023

Abstract: Causal relationship recognition is a fundamental operation in neural networks aimed at learning behavior, action planning, and inferring external world dynamics. This operation is particularly crucial for reinforcement learning (RL). In the context of spiking neural networks (SNNs), events are represented as spikes emitted by network neurons or input nodes. Detecting causal relationships within these events is essential for effective RL implementation. This research paper presents a novel approach to realize causal relationship recognition using a simple spiking binary neuron. The proposed method leverages specially designed synaptic plasticity rules, which are both straightforward and efficient. Notably, our approach accounts for the temporal aspects of detected causal links and accommodates the representation of spiking signals as single spikes or tight spike sequences (bursts), as observed in biological brains. Furthermore, this study places a strong emphasis on the hardware-friendliness of the proposed models, ensuring their efficient implementation on modern and future neuroprocessors. Compared with precise machine learning techniques, such as decision tree algorithms and convolutional neural networks, our neuron demonstrates satisfactory accuracy despite its simplicity. In conclusion, we introduce a multi-neuron structure capable of operating in more complex environments with enhanced accuracy, making it a promising candidate for the advancement of RL applications in SNNs.

A Spiking Neural Network Learning Markov Chain

Sep 20, 2022

Abstract: In this paper, the question of how a spiking neural network (SNN) learns and fixes in its internal structures a model of external world dynamics is explored. This question is important for the implementation of model-based reinforcement learning (RL), the realistic RL regime where the decisions made by the SNN and their evaluation in terms of reward/punishment signals may be separated by a significant time interval and a sequence of intermediate evaluation-neutral world states. In the present work, I formalize world dynamics as a Markov chain with state transition probabilities that are unknown a priori and should be learnt by the network. To make this problem formulation more realistic, I solve it in continuous time, so that the duration of every state in the Markov chain may be different and is unknown. It is demonstrated how this task can be accomplished by an SNN with a specially designed structure and local synaptic plasticity rules. As an example, we show how this network motif works in a simple but non-trivial world where a ball moves inside a square box and bounces off its walls with a random new direction and velocity.
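To make the learning target concrete, here is a minimal sketch of what "learning the transition probabilities" means for an observed state sequence. It uses a conventional counting estimator, not the SNN with local plasticity rules that the paper describes. The function name `estimate_transitions` and the example states are hypothetical.

```python
from collections import Counter, defaultdict


def estimate_transitions(visits):
    """Estimate P(next state | state) from an ordered list of visits.

    `visits` holds (state, duration) pairs. Durations vary from visit to
    visit, as in the continuous-time setting, but this simple estimator
    only uses the order of the states.
    """
    counts = defaultdict(Counter)
    for (state, _), (next_state, _) in zip(visits, visits[1:]):
        counts[state][next_state] += 1
    return {
        state: {nxt: n / sum(c.values()) for nxt, n in c.items()}
        for state, c in counts.items()
    }


# Hypothetical observation log: state name and time spent in it.
visits = [("A", 0.5), ("B", 1.2), ("A", 0.3), ("C", 2.0), ("A", 0.1), ("B", 0.7)]
probs = estimate_transitions(visits)
```

For this log, state A is followed by B twice and by C once, so the estimate for A is 2/3 for B and 1/3 for C. The SNN in the paper has to arrive at an equivalent model using only spikes and local plasticity.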

Neuromorphic Artificial Intelligence Systems

May 25, 2022

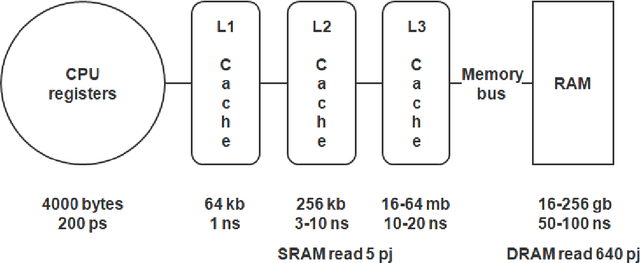

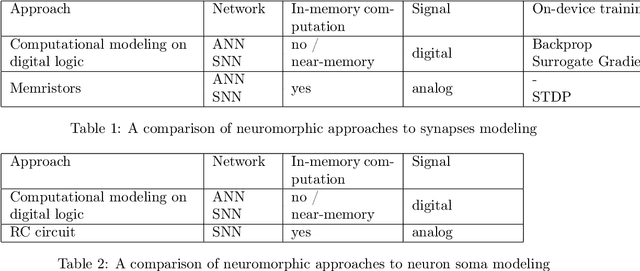

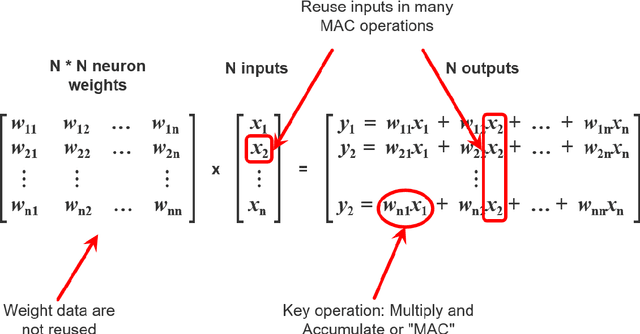

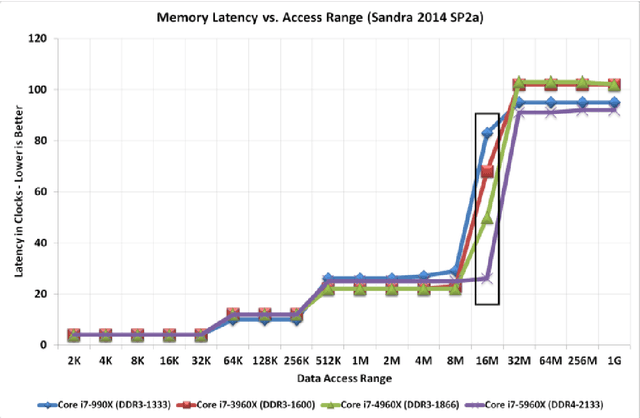

Abstract: Modern AI systems, based on von Neumann architecture and classical neural networks, have a number of fundamental limitations in comparison with the brain. This article discusses such limitations and the ways they can be mitigated. Next, it presents an overview of currently available neuromorphic AI projects in which these limitations are overcome by bringing some brain features into the functioning and organization of computing systems (TrueNorth, Loihi, Tianjic, SpiNNaker, BrainScaleS, NeuronFlow, DYNAP, Akida). Also, the article presents the principle of classifying neuromorphic AI systems by the brain features they use (neural networks, parallelism and asynchrony, impulse nature of information transfer, local learning, sparsity, analog and in-memory computing). In addition to new architectural approaches used in neuromorphic devices based on existing silicon microelectronics technologies, the article also discusses the prospects of using a new memristor element base. Examples of recent advances in the use of memristors in neuromorphic applications are also given.

A Spiking Neural Network Structure Implementing Reinforcement Learning

Apr 09, 2022

Abstract: At present, implementation of learning mechanisms in spiking neural networks (SNN) cannot be considered a solved scientific problem, despite the many SNN learning algorithms proposed. This is also true for SNN implementation of reinforcement learning (RL), although RL is especially important for SNNs because of its close relationship to the domains most promising from the viewpoint of SNN application, such as robotics. In the present paper, I describe an SNN structure which, seemingly, can be used in a wide range of RL tasks. The distinctive feature of my approach is the use of only spike forms of all signals involved: sensory input streams, output signals sent to actuators and reward/punishment signals. Besides that, in selecting the neuron/plasticity models, I was guided by the requirement that they should be easily implemented on modern neurochips. The SNN structure considered in the paper includes spiking neurons described by a generalization of the LIFAT (leaky integrate-and-fire neuron with adaptive threshold) model and a simple spike timing dependent synaptic plasticity model (a generalization of dopamine-modulated plasticity). My concept is based on very general assumptions about RL task characteristics and has no visible limitations on its applicability. To test it, I selected a simple but non-trivial task of training the network to keep a chaotically moving light spot in the view field of an emulated DVS camera. Successful solution of this RL problem by the SNN described can be considered as evidence in favor of the efficiency of my approach.

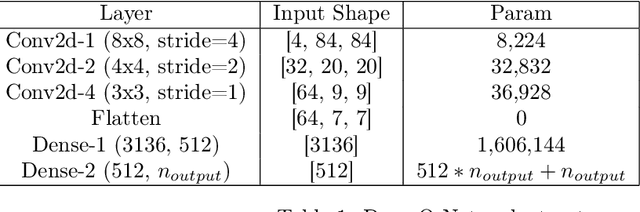

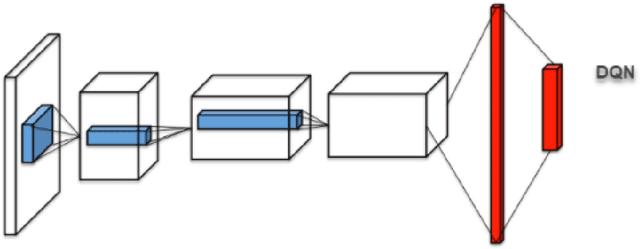

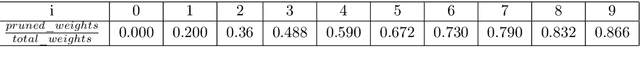

Neural Network Optimization for Reinforcement Learning Tasks Using Sparse Computations

Jan 07, 2022

Abstract: This article proposes a sparse computation-based method for optimizing neural networks for reinforcement learning (RL) tasks. This method combines two ideas: neural network pruning and taking into account input data correlations; it makes it possible to update neuron states only when changes in them exceed a certain threshold. It significantly reduces the number of multiplications when running neural networks. We tested the method on different RL tasks and achieved a 20-150x reduction in the number of multiplications. There were no substantial performance losses; sometimes the performance even improved.
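The two ideas named in this abstract can be sketched for a single linear layer. This is an illustration under stated assumptions, not the article's algorithm: the class name `DeltaLayer`, the per-input change threshold, and the multiplication counter are all hypothetical. The layer keeps the last input value it actually propagated, propagates only changes larger than the threshold, and skips pruned (zero) weights.

```python
class DeltaLayer:
    """Linear layer that recomputes only what changed (illustrative sketch)."""

    def __init__(self, weights, threshold):
        self.w = weights                       # w[j][i]: input i -> output j
        self.threshold = threshold
        self.x_sent = [0.0] * len(weights[0])  # last propagated input values
        self.y = [0.0] * len(weights)          # running output sums
        self.mults = 0                         # multiplications performed

    def step(self, x):
        for i, xi in enumerate(x):
            delta = xi - self.x_sent[i]
            if abs(delta) <= self.threshold:
                continue                       # change too small: skip input
            self.x_sent[i] = xi
            for j, row in enumerate(self.w):
                if row[i] != 0.0:              # pruned weights cost nothing
                    self.y[j] += row[i] * delta
                    self.mults += 1
        return list(self.y)


layer = DeltaLayer([[1.0, 0.0], [2.0, 3.0]], threshold=0.1)
layer.step([1.0, 1.0])
out = layer.step([1.05, 2.0])   # first input barely changed, so it is skipped
```

Across the two steps this sketch performs 4 multiplications, where a dense recomputation of the 2x2 layer would need 8. The price is a small approximation error, since the output still reflects the old value of the first input.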
