Miguel Luengo-Oroz

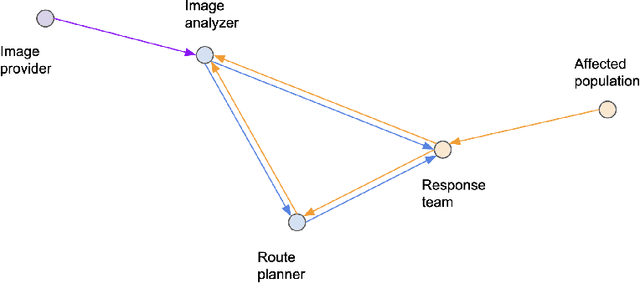

Multi-AI Complex Systems in Humanitarian Response

Aug 24, 2022

Abstract: AI is being increasingly used to aid response efforts to humanitarian emergencies at multiple levels of decision-making. Such AI systems are generally considered as stand-alone for decision support, with ethical assessments, guidelines and frameworks applied to them through this lens. However, as the prevalence of AI increases in this domain, such systems will interact through information flow networks created by interacting decision-making entities, leading to often ill-understood multi-AI complex systems. In this paper we describe how these multi-AI systems can arise, even in relatively simple real-world humanitarian response scenarios, and lead to potentially emergent and erratic erroneous behavior. We discuss how we can better work towards more trustworthy multi-AI systems by exploring some of their associated challenges and opportunities, and how we can design better mechanisms to understand and assess such systems. This paper is designed to be a first exposition on this topic in the field of humanitarian response, raising awareness, exploring the possible landscape of this domain, and providing a starting point for future work within the wider community.

* 7 pages, 1 figure

Ensuring the Inclusive Use of Natural Language Processing in the Global Response to COVID-19

Aug 11, 2021

Abstract: Natural language processing (NLP) plays a significant role in tools for the COVID-19 pandemic response, from detecting misinformation on social media to helping to provide accurate clinical information or summarizing scientific research. However, the approaches developed thus far have not benefited all populations, regions or languages equally. We discuss ways in which current and future NLP approaches can be made more inclusive by covering low-resource languages, including alternative modalities, leveraging out-of-the-box tools and forming meaningful partnerships. We suggest several future directions for researchers interested in maximizing the positive societal impacts of NLP.

Considerations, Good Practices, Risks and Pitfalls in Developing AI Solutions Against COVID-19

Aug 13, 2020

Abstract: The COVID-19 pandemic has been a major challenge to humanity, with 12.7 million confirmed cases as of July 13th, 2020 [1]. In previous work, we described how Artificial Intelligence can be used to tackle the pandemic with applications at the molecular, clinical, and societal scales [2]. In the present follow-up article, we review these three research directions, and assess the level of maturity and feasibility of the approaches used, as well as their potential for operationalization. We also summarize some commonly encountered risks and practical pitfalls, as well as guidelines and best practices for formulating and deploying AI applications at different scales.

* 4 pages, 1 figure

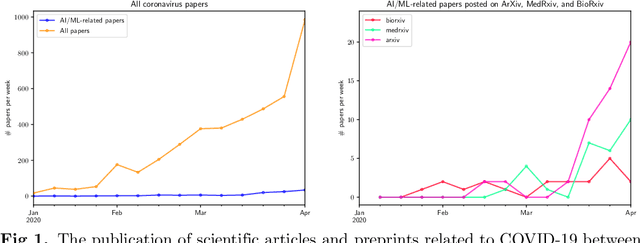

Mapping the Landscape of Artificial Intelligence Applications against COVID-19

Apr 23, 2020

Abstract: COVID-19, the disease caused by the SARS-CoV-2 virus, has been declared a pandemic by the World Health Organization, with over 2.5 million confirmed cases as of April 23, 2020. In this review, we present an overview of recent studies using Machine Learning and, more broadly, Artificial Intelligence, to tackle many aspects of the COVID-19 crisis at different scales including molecular, clinical, and societal applications. We also review datasets, tools, and resources needed to facilitate AI research. Finally, we discuss strategic considerations related to the operational implementation of projects, multidisciplinary partnerships, and open science. We highlight the need for international cooperation to maximize the potential of AI in this and future pandemics.

PulseSatellite: A tool using human-AI feedback loops for satellite image analysis in humanitarian contexts

Jan 29, 2020

Abstract: Humanitarian response to natural disasters and conflicts can be assisted by satellite image analysis. In a humanitarian context, very specific satellite image analysis tasks must be done accurately and in a timely manner to provide operational support. We present PulseSatellite, a collaborative satellite image analysis tool which leverages neural network models that can be retrained on the fly and adapted to specific humanitarian contexts and geographies. We present two case studies, in mapping shelters and floods respectively, that illustrate the capabilities of PulseSatellite.

* 2 pages, 2 figures

Automated Speech Generation from UN General Assembly Statements: Mapping Risks in AI Generated Texts

Jun 05, 2019

Abstract: Automated text generation has been applied broadly in many domains such as marketing and robotics, and used to create chatbots and product reviews and to write poetry. The ability to synthesize text, however, presents many potential risks, while access to the technology required to build generative models is becoming increasingly easy. This work is aligned with the efforts of the United Nations and other civil society organisations to highlight potential political and societal risks arising through the malicious use of text generation software, and their potential impact on human rights. As a case study, we present the findings of an experiment to generate remarks in the style of political leaders by fine-tuning a pretrained AWD-LSTM model on a dataset of speeches made at the UN General Assembly. This work highlights the ease with which this can be accomplished, as well as the threats of combining these techniques with other technologies.

* 5 pages
