Michel Daoud Yacoub

To Trust or Not to Trust: On Calibration in ML-based Resource Allocation for Wireless Networks

Jul 23, 2025

Abstract:In next-generation communications and networks, machine learning (ML) models are expected to deliver not only accurate predictions but also well-calibrated confidence scores that reflect the true likelihood of correct decisions. This paper studies the calibration performance of an ML-based outage predictor within a single-user, multi-resource allocation framework. We first establish key theoretical properties of this system's outage probability (OP) under perfect calibration. Importantly, we show that as the number of resources grows, the OP of a perfectly calibrated predictor approaches the expected output conditioned on it being below the classification threshold. In contrast, when only one resource is available, the system's OP equals the model's overall expected output. We then derive the OP conditions for a perfectly calibrated predictor. These findings guide the choice of the classification threshold to achieve a desired OP, helping system designers meet specific reliability requirements. We also demonstrate that post-processing calibration cannot improve the system's minimum achievable OP, as it does not introduce new information about future channel states. Additionally, we show that well-calibrated models are part of a broader class of predictors that necessarily improve OP. In particular, we establish a monotonicity condition that the accuracy-confidence function must satisfy for such improvement to occur. To demonstrate these theoretical properties, we conduct a rigorous simulation-based analysis using post-processing calibration techniques: Platt scaling and isotonic regression. As part of this framework, the predictor is trained using an outage loss function specifically designed for this system. Furthermore, this analysis is performed on Rayleigh fading channels with temporal correlation captured by Clarke's 2D model, which accounts for receiver mobility.

Outage Performance and Novel Loss Function for an ML-Assisted Resource Allocation: An Exact Analytical Framework

May 16, 2023Abstract:Machine Learning (ML) is a popular tool that will be pivotal in enabling 6G and beyond communications. This paper focuses on applying ML solutions to address outage probability issues commonly encountered in these systems. In particular, we consider a single-user multi-resource greedy allocation strategy, where an ML binary classification predictor assists in seizing an adequate resource. With no access to future channel state information, this predictor foresees each resource's likely future outage status. When the predictor encounters a resource it believes will be satisfactory, it allocates it to the user. Critically, the goal of the predictor is to ensure that a user avoids an unsatisfactory resource since this is likely to cause an outage. Our main result establishes exact and asymptotic expressions for this system's outage probability. With this, we formulate a theoretically optimal, differentiable loss function to train our predictor. We then compare predictors trained using this and traditional loss functions; namely, binary cross-entropy (BCE), mean squared error (MSE), and mean absolute error (MAE). Predictors trained using our novel loss function provide superior outage probability in all scenarios. Our loss function sometimes outperforms predictors trained with the BCE, MAE, and MSE loss functions by multiple orders of magnitude.

AI-Based Channel Prediction in D2D Links: An Empirical Validation

Jun 16, 2022

Abstract:Device-to-Device (D2D) communication propelled by artificial intelligence (AI) will be an allied technology that will improve system performance and support new services in advanced wireless networks (5G, 6G and beyond). In this paper, AI-based deep learning techniques are applied to D2D links operating at 5.8 GHz with the aim at providing potential answers to the following questions concerning the prediction of the received signal strength variations: i) how effective is the prediction as a function of the coherence time of the channel? and ii) what is the minimum number of input samples required for a target prediction performance? To this end, a variety of measurement environments and scenarios are considered, including an indoor open-office area, an outdoor open-space, line of sight (LOS), non-LOS (NLOS), and mobile scenarios. Four deep learning models are explored, namely long short-term memory networks (LSTMs), gated recurrent units (GRUs), convolutional neural networks (CNNs), and dense or feedforward networks (FFNs). Linear regression is used as a baseline model. It is observed that GRUs and LSTMs present equivalent performance, and both are superior when compared to CNNs, FFNs and linear regression. This indicates that GRUs and LSTMs are able to better account for temporal dependencies in the D2D data sets. We also provide recommendations on the minimum input lengths that yield the required performance given the channel coherence time. For instance, to predict 17 and 23 ms into the future, in indoor and outdoor LOS environments, respectively, an input length of 25 ms is recommended. This indicates that the bulk of the learning is done within the coherence time of the channel, and that large input lengths may not always be beneficial.

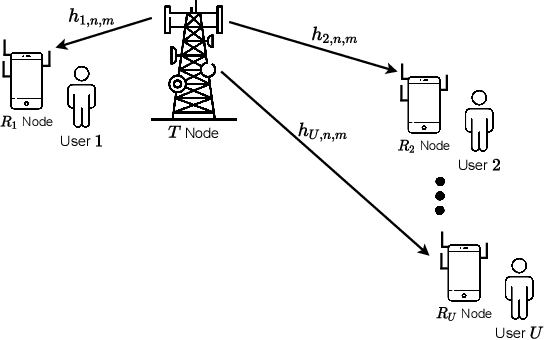

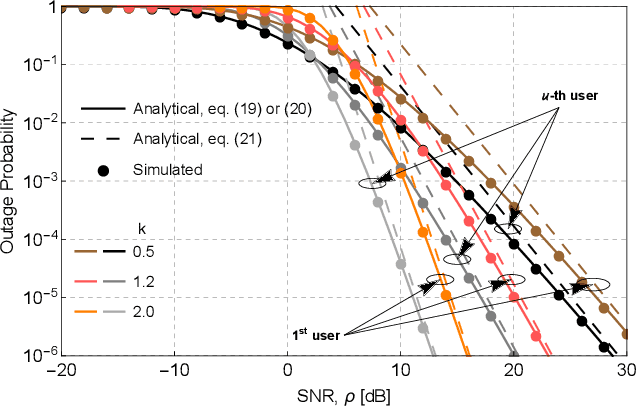

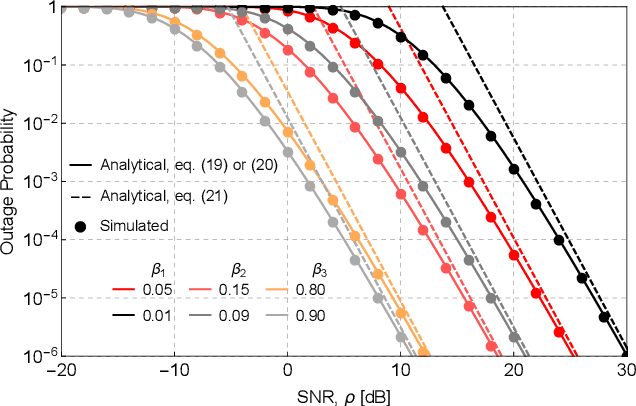

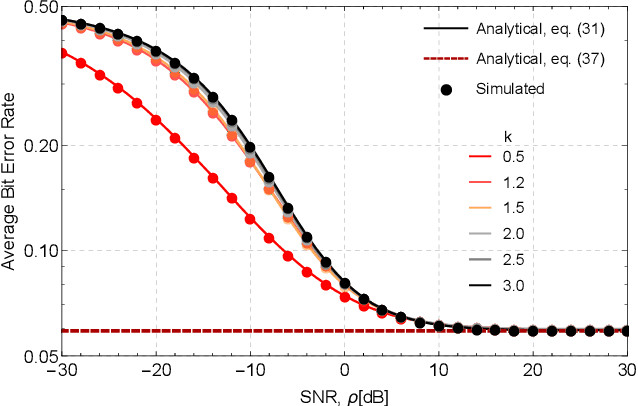

Performance analysis of downlink MIMO-NOMA systems over Weibull fading channels

May 24, 2022

Abstract:This work analyzes the performance of a downlink multiple-input multiple-output (MIMO) non-orthogonal multiple access (NOMA) multi-user communications system. To reduce hardware complexity and exploit antenna diversity, we consider a transmit antenna selection (TAS) scheme and equal-gain combining (EGC) receivers operating over independent and identically distributed (i.i.d.) Weibull fading channels. Performance metrics such as the outage probability (OP) and the average bit error rate (ABER) are derived in an exact manner. An asymptotic analysis for the OP and for the ABER is also carried out. Moreover, we obtain exact expressions for the probability density function (PDF) and the cumulative distribution function (CDF) of the end-to-end signal-to-noise ratio (SNR). Interestingly, our results indicate that, except for the first user (nearest user), in a high-SNR regime the ABER achieves a performance floor that depends solely on the user's power allocation coefficient and on the type of modulation, and not on the channel statistics or the amount of transmit and receive antennas. To the best of the authors' knowledge, no performance analyses have been reported in the literature for the considered scenario. The validity of all our expressions is confirmed via Monte-Carlo simulations.

Doppler Estimation for High-Velocity Targets Using Subpulse Processing and the Classic Chinese Remainder Theorem

Jan 27, 2021

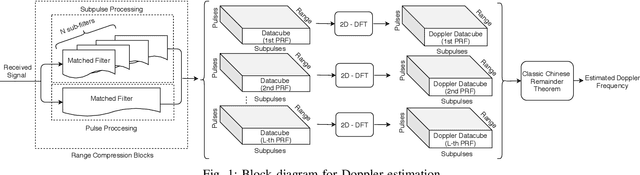

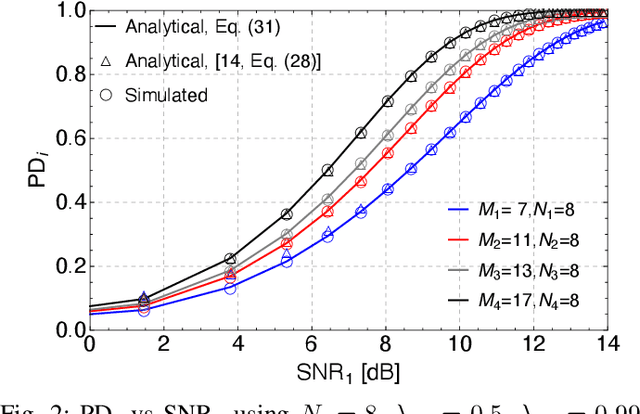

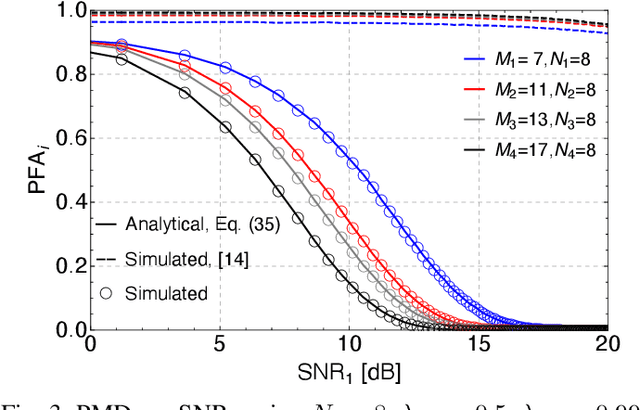

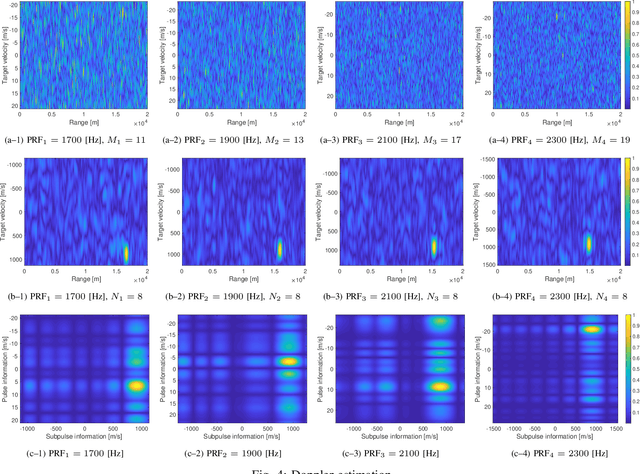

Abstract:In pulsed Doppler radars, the classic Chinese remainder theorem (CCRT) is a common method to resolve Doppler ambiguities caused by fast-moving targets. Another issue concerning high-velocity targets is related to the loss in the signal-to-noise ratio (SNR) after performing range compression. In particular, this loss can be partially mitigated by the use of subpulse processing (SP). Modern radars combine these techniques in order to reliably unfold the target velocity. However, the presence of background noise may compromise the Doppler estimates. Hence, a rigorous statistical analysis is imperative. In this work, we provide a comprehensive analysis on Doppler estimation. In particular, we derive novel closed-form expressions for the probability of detection (PD) and probability of false alarm (PFA). To this end, we consider the newly introduce SP along with the CCRT. A comparison analysis between SP and the classic pulse processing (PP) technique is also carried out. Numerical results and Monte-Carlo simulations corroborate the validity of our expressions and show that the SP-plus-CCRT technique helps to greatly reduce the PFA compared to previous studies, thereby improving radar detection.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge