Michalis Papakostas

MUSER: MUltimodal Stress Detection using Emotion Recognition as an Auxiliary Task

May 17, 2021

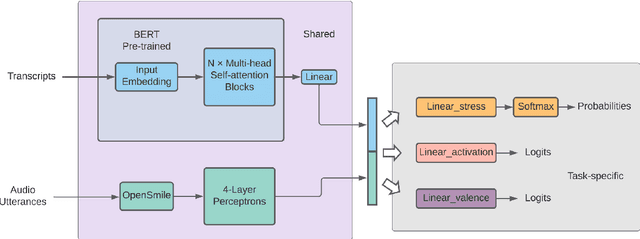

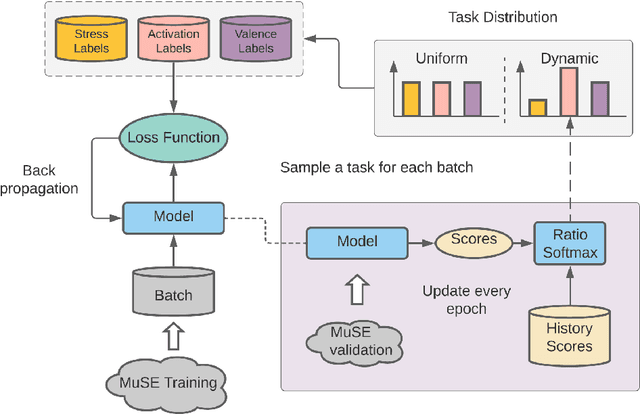

Abstract: The capability to automatically detect human stress can benefit artificially intelligent agents involved in affective computing and human-computer interaction. Stress and emotion are both human affective states, and stress has proven to have important implications for the regulation and expression of emotion. Although a series of methods have been established for multimodal stress detection, limited steps have been taken to explore the underlying inter-dependence between stress and emotion. In this work, we investigate the value of emotion recognition as an auxiliary task to improve stress detection. We propose MUSER -- a transformer-based model architecture and a novel multi-task learning algorithm with a speed-based dynamic sampling strategy. Evaluations on the Multimodal Stressed Emotion (MuSE) dataset show that our model is effective for stress detection with both internal and external auxiliary tasks, and achieves state-of-the-art results.

Enhanced Facial Recognition Framework based on Skin Tone and False Alarm Rejection

Feb 14, 2017

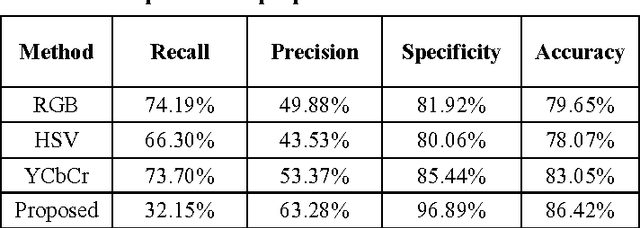

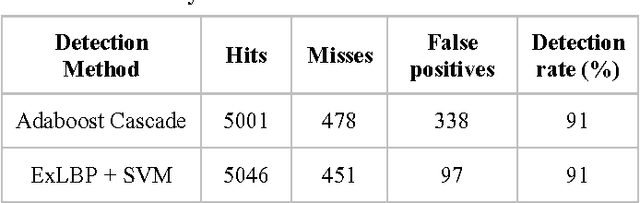

Abstract: Face detection is one of the challenging tasks in computer vision. Human face detection plays an essential role in the first stage of face processing applications such as face recognition, face tracking, and image database management. In these applications, face objects often come from an inconsequential part of images that contain variations, namely different illumination, poses, and occlusion. These variations can noticeably decrease the face detection rate. Most existing face detection approaches are not accurate, as they cannot handle unstructured images with large appearance variations and can only detect human faces under one particular variation. Existing face detection frameworks need enhancements to detect human faces under the stated variations, both to improve detection rate and to reduce detection time. In this study, an enhanced face detection framework is proposed that improves detection rate based on skin color and adds a validation process. A preliminary segmentation of the input images based on skin color can significantly reduce the search space and accelerate human face detection. The primary detection is based on Haar-like features and the AdaBoost algorithm. A validation process is introduced to reject non-face objects that might be detected during the face detection process. The validation process is based on two-stage Extended Local Binary Patterns. The experimental results on the CMU-MIT and Caltech 10000 datasets, over a wide range of facial variations in different colors, positions, scales, and lighting conditions, indicated a successful face detection rate.
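
The skin-color pre-segmentation step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state which color rule or thresholds the authors use, so the RGB rule below is an assumption (one commonly used heuristic), and the names `is_skin` and `skin_bounding_box` are hypothetical. The sketch only shows how a skin mask can shrink the region that a later detector, such as a Haar-cascade classifier, would need to scan.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each in 0-255


def is_skin(pixel: Pixel) -> bool:
    """Illustrative RGB skin rule; the thresholds are assumptions, not from the paper."""
    r, g, b = pixel
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def skin_bounding_box(
    image: List[List[Pixel]],
) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right), inclusive, around all skin pixels.

    Returns None when no pixel passes the skin rule, in which case a
    detector could skip the image or fall back to a full scan.
    """
    rows = [y for y, row in enumerate(image) if any(is_skin(p) for p in row)]
    cols = [x for row in image for x, p in enumerate(row) if is_skin(p)]
    if not rows:
        return None
    return rows[0], min(cols), rows[-1], max(cols)
```

A detector would then be run only inside the returned box rather than over the whole image, which is where the reduction in search space comes from. A real pipeline would likely also split the mask into connected regions instead of using a single box.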
