Amir Ghaderi

Deep Forecast: Deep Learning-based Spatio-Temporal Forecasting

Jul 24, 2017

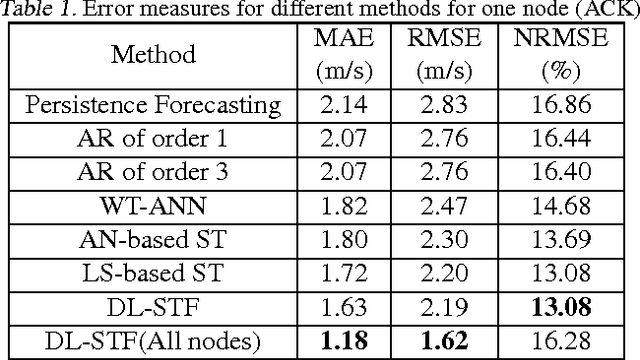

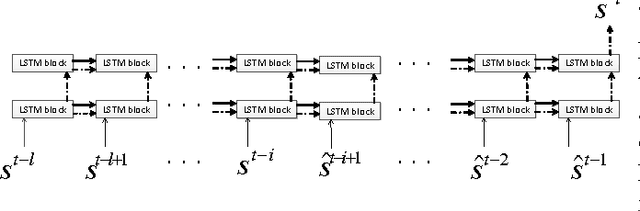

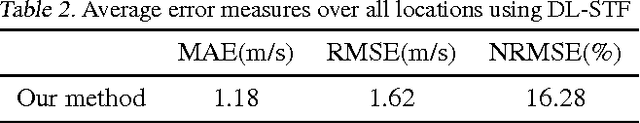

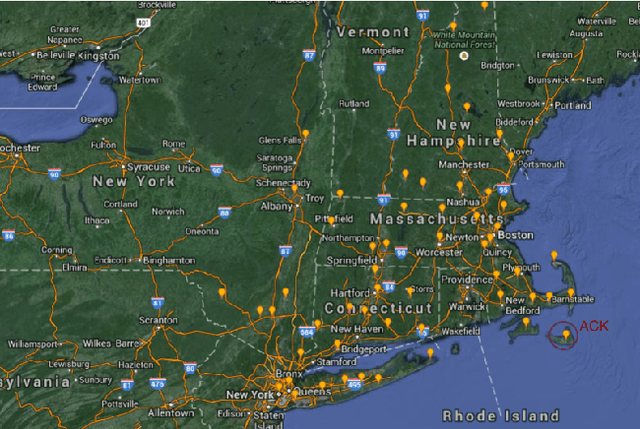

Abstract: The paper presents a spatio-temporal wind speed forecasting algorithm using Deep Learning (DL) and, in particular, Recurrent Neural Networks (RNNs). Motivated by recent advances in renewable energy integration and smart grids, we apply our proposed algorithm to wind speed forecasting. Renewable energy resources (wind and solar) are random in nature, and thus their integration is facilitated by accurate short-term forecasts. In our proposed framework, we model the spatio-temporal information by a graph whose nodes are data-generating entities and whose edges model how these nodes interact with each other. One of the main contributions of our work is that we obtain forecasts for all nodes of the graph at the same time within one framework. Results of a case study on recorded time series data from a collection of wind mills in the north-east of the U.S. show that the proposed DL-based forecasting algorithm significantly improves the short-term forecasts compared to a set of widely used benchmark models.
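To make the "all nodes at the same time" idea concrete, here is a minimal sketch of how multi-node time series could be turned into training pairs whose target is a vector with one entry per node. The function name `make_windows` and the parameters `lookback` and `horizon` are hypothetical, and the abstract does not describe the paper's actual preprocessing, so this is an illustration under those assumptions rather than the authors' pipeline.

```python
from typing import List, Tuple


def make_windows(
    series: List[List[float]], lookback: int, horizon: int = 1
) -> Tuple[List[List[float]], List[List[float]]]:
    """Build (input, target) pairs from a multi-node time series.

    series[t][n] is the measurement at time step t for node n.
    Each input is the flattened lookback window over ALL nodes, and
    each target is the vector of ALL node values `horizon` steps after
    the window, so one model output covers every node at once.
    (Hypothetical helper; not taken from the paper.)
    """
    if lookback < 1 or horizon < 1:
        raise ValueError("lookback and horizon must be at least 1")

    inputs: List[List[float]] = []
    targets: List[List[float]] = []
    last_start = len(series) - lookback - horizon
    for start in range(last_start + 1):
        window = series[start:start + lookback]
        # Flatten time steps and nodes into a single feature vector.
        inputs.append([value for row in window for value in row])
        # The target holds one value per node.
        targets.append(list(series[start + lookback + horizon - 1]))
    return inputs, targets


# Two nodes, four time steps.
demo_series = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]]
demo_inputs, demo_targets = make_windows(demo_series, lookback=2)
```

Any sequence model, including an RNN, could then be fit on these pairs; the graph structure mentioned in the abstract is not modeled in this sketch.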

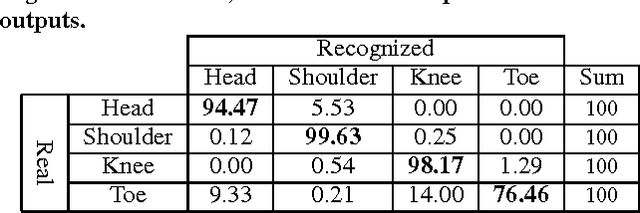

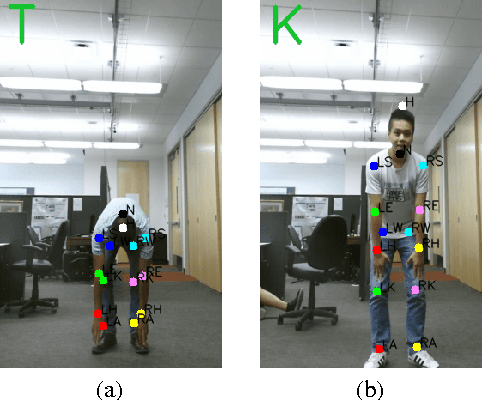

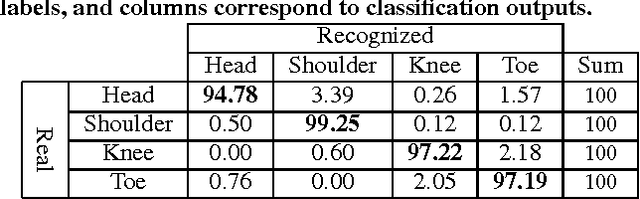

Improving the Accuracy of the CogniLearn System for Cognitive Behavior Assessment

Mar 25, 2017

Abstract: HTKS is a game-like cognitive assessment method designed for children between four and eight years of age. During the HTKS assessment, a child responds to a sequence of requests, such as "touch your head" or "touch your toes". The cognitive challenge stems from the fact that the children are instructed to interpret these requests not literally, but by touching a different body part than the one stated. In prior work, we developed the CogniLearn system, which captures data from subjects performing the HTKS game and analyzes the motion of the subjects. In this paper, we propose some specific improvements that make the motion analysis module more accurate. As a result of these improvements, the accuracy in recognizing cases where subjects touch their toes has gone from 76.46% in our previous work to 97.19% in this paper.

Scalable Deep Traffic Flow Neural Networks for Urban Traffic Congestion Prediction

Mar 03, 2017

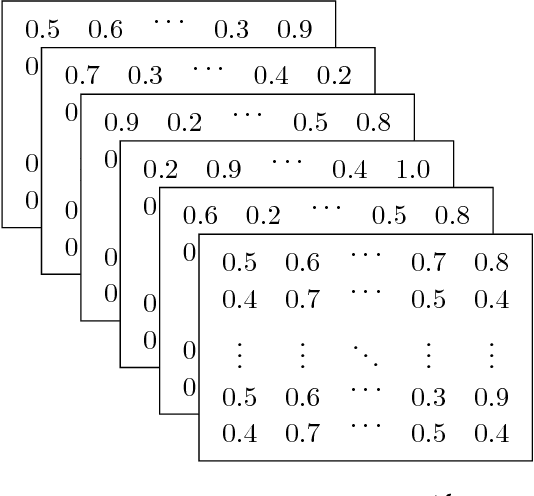

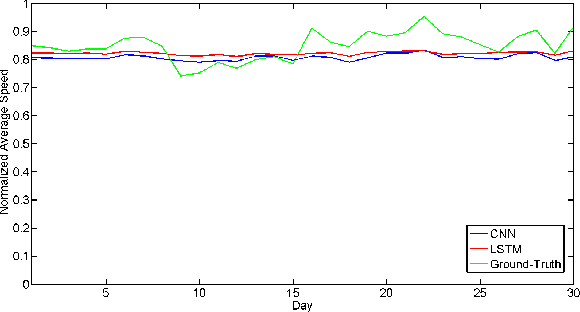

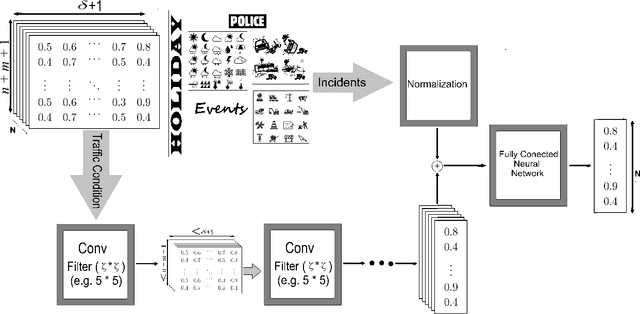

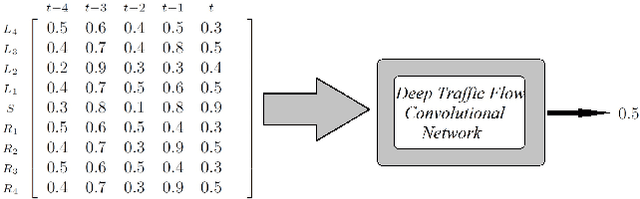

Abstract: Tracking congestion throughout the road network is a critical component of intelligent transportation network management systems. Understanding how traffic flows, and predicting in the short term when congestion will occur due to rush hour or incidents, helps such systems manage traffic effectively and direct it to the most appropriate detours. Many current traffic flow prediction systems are built around a central processing component, where prediction is carried out by aggregating the information gathered from all measuring stations. However, centralized systems are not scalable and fail to provide real-time feedback to the system, whereas in a decentralized scheme each node is responsible for predicting its own short-term congestion based on the current local measurements in neighboring nodes. We propose a decentralized deep learning-based method in which each node accurately predicts its own congestion state in real time based on the congestion state of the neighboring stations. Moreover, historical data from the deployment site is not required, which makes the proposed method more suitable for newly installed stations. To achieve higher performance, we introduce a regularized Euclidean loss function that favors high-congestion samples over low-congestion samples to avoid the impact of the unbalanced training dataset. A novel dataset for this purpose is designed based on traffic data obtained from traffic control stations in northern California. Extensive experiments conducted on the designed benchmark reflect successful congestion prediction.
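The abstract above does not give the exact form of the regularized Euclidean loss, so the following is only a sketch of one way a squared-error loss can be made to favor high-congestion samples. It assumes congestion targets lie in [0, 1] and uses a per-sample weight of `1 + alpha * target`; both the weighting scheme and the name `weighted_euclidean_loss` are illustrative assumptions, not the paper's formulation.

```python
from typing import Sequence


def weighted_euclidean_loss(
    predictions: Sequence[float], targets: Sequence[float], alpha: float = 1.0
) -> float:
    """Squared-error loss that up-weights high-congestion samples.

    Assumption (not from the paper): targets are congestion levels in
    [0, 1], and each sample is weighted by 1 + alpha * target, so errors
    on congested samples cost more than errors on free-flow samples.
    With alpha = 0 this reduces to the plain Euclidean loss.
    """
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if len(targets) == 0:
        raise ValueError("at least one sample is required")

    total = 0.0
    for predicted, target in zip(predictions, targets):
        weight = 1.0 + alpha * target
        total += weight * (predicted - target) ** 2
    # The 1/(2N) factor follows the usual Euclidean-loss convention.
    return total / (2 * len(targets))


# Same absolute error on a free-flow sample (0.0) and a congested one (1.0).
plain = weighted_euclidean_loss([0.5, 0.5], [0.0, 1.0], alpha=0.0)
weighted = weighted_euclidean_loss([0.5, 0.5], [0.0, 1.0], alpha=1.0)
```

In this toy case the weighted loss is larger than the plain one only because of the error on the congested sample, which is the behavior a loss of this kind is meant to encourage when congested samples are rare in the training data.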

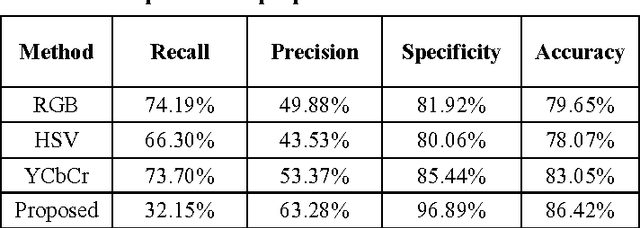

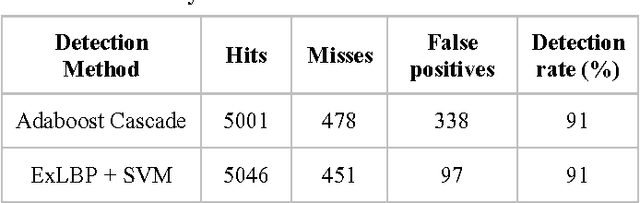

Enhanced Facial Recognition Framework based on Skin Tone and False Alarm Rejection

Feb 14, 2017

Abstract: Face detection is one of the challenging tasks in computer vision. Human face detection plays an essential role in the first stage of face processing applications such as face recognition, face tracking, image database management, etc. In these applications, face objects often come from an inconsequential part of images that contain variations, namely different illumination, poses, and occlusion. These variations can noticeably decrease the face detection rate. Most existing face detection approaches are not accurate, as they have not been able to resolve unstructured images due to large appearance variations and can only detect human faces under one particular variation. Existing face detection frameworks need enhancements to detect human faces under the stated variations, to improve the detection rate, and to reduce detection time. In this study, an enhanced face detection framework is proposed to improve the detection rate based on skin color and to provide a validation process. A preliminary segmentation of the input images based on skin color can significantly reduce the search space and accelerate the process of human face detection. The primary detection is based on Haar-like features and the AdaBoost algorithm. A validation process is introduced to reject non-face objects, which might occur during the face detection process. The validation process is based on two-stage Extended Local Binary Patterns. The experimental results on the CMU-MIT and Caltech 10000 datasets, over a wide range of facial variations in different colors, positions, scales, and lighting conditions, indicated a successful face detection rate.
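As an illustration of how a skin-color pre-segmentation can shrink the search space before a face detector runs, here is a small sketch. The abstract does not state which skin model the paper uses; the thresholds below follow a commonly cited explicit RGB rule for skin under uniform daylight and stand in for it as an assumption. The names `is_skin`, `skin_mask`, and `bounding_box` are hypothetical.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each in 0..255


def is_skin(r: int, g: int, b: int) -> bool:
    """Explicit RGB skin rule (an assumed stand-in for the paper's model)."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def skin_mask(image: List[List[Pixel]]) -> List[List[bool]]:
    """Return a boolean mask marking skin-colored pixels."""
    return [[is_skin(*pixel) for pixel in row] for row in image]


def bounding_box(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """Smallest box (x_min, y_min, x_max, y_max) enclosing all skin pixels.

    A detector would only need to scan inside this box; None means no
    skin-colored pixel was found, so the image can be skipped.
    """
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [x for row in mask for x, flag in enumerate(row) if flag]
    return (min(cols), min(rows), max(cols), max(rows))


dark = (10, 10, 10)
skin = (200, 120, 90)
demo_image = [
    [dark, dark, dark],
    [dark, skin, skin],
    [dark, dark, dark],
]
demo_box = bounding_box(skin_mask(demo_image))
```

In a full pipeline along the lines the abstract describes, the region inside the box would then be passed to the Haar-feature detector and the candidate detections to the LBP-based validation stage; neither of those stages is implemented here.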

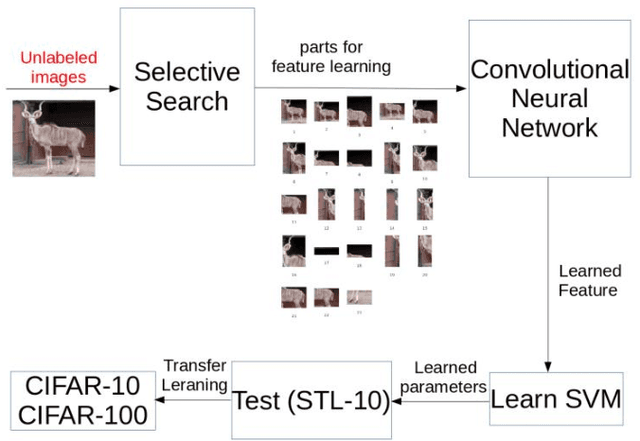

Selective Unsupervised Feature Learning with Convolutional Neural Network (S-CNN)

Jun 07, 2016

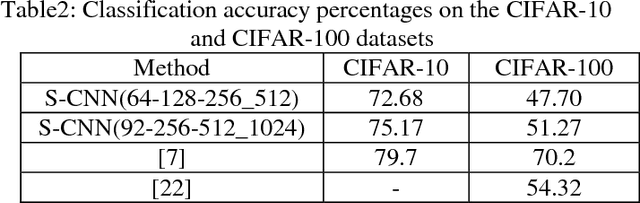

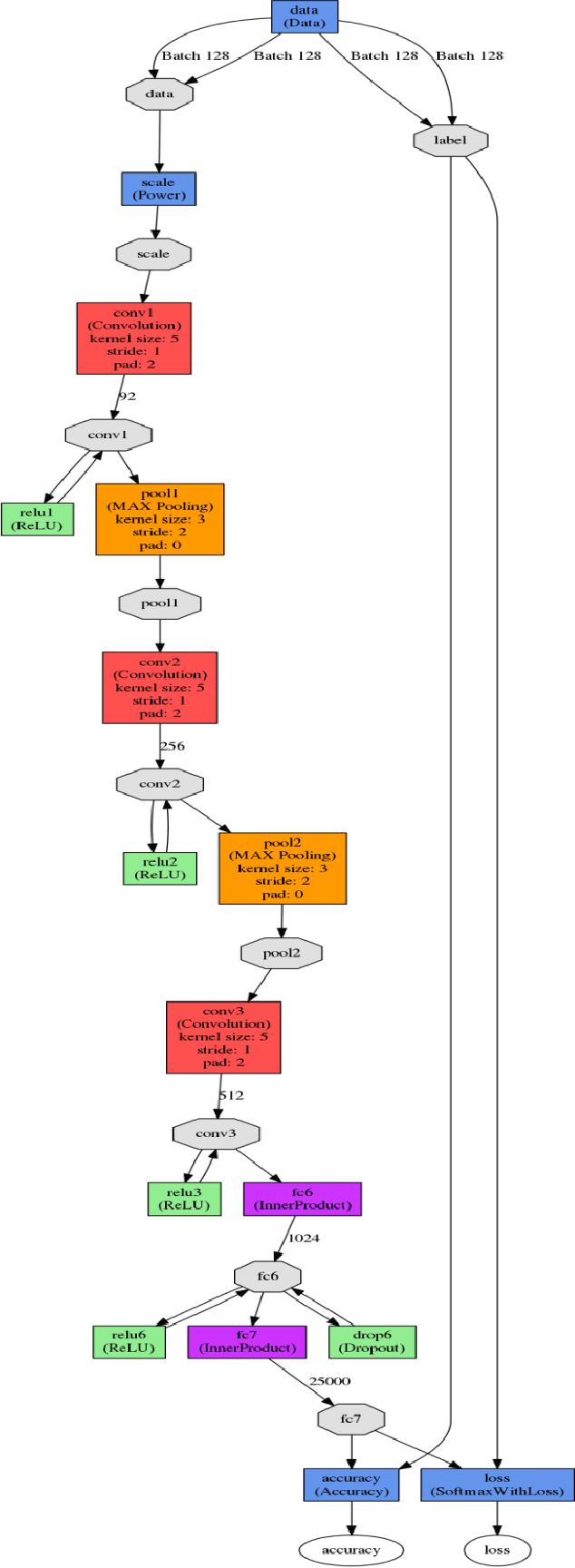

Abstract: Supervised learning of convolutional neural networks (CNNs) can require very large amounts of labeled data. Labeling thousands or millions of training examples can be extremely time-consuming and costly. One direction towards addressing this problem is to create features from unlabeled data. In this paper, we propose a new method for training a CNN with no need for labeled instances. This method for unsupervised feature learning is then successfully applied to a challenging object recognition task. The proposed algorithm is relatively simple, but attains accuracy comparable to that of more sophisticated methods. The proposed method is significantly easier to train than existing CNN methods and places fewer requirements on manually labeled training data. It is also shown to be resistant to overfitting. We provide results on some well-known datasets, namely STL-10, CIFAR-10, and CIFAR-100. The results show that our method provides competitive performance compared with existing alternative methods. Selective Convolutional Neural Network (S-CNN) is a simple and fast algorithm that introduces a new way to do unsupervised feature learning and provides discriminative features that generalize well.

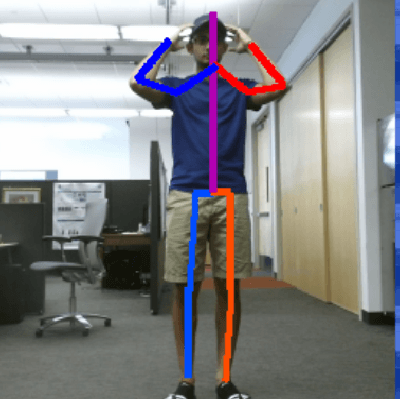

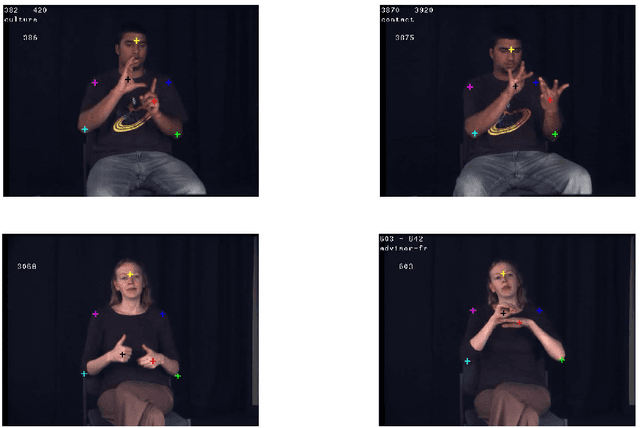

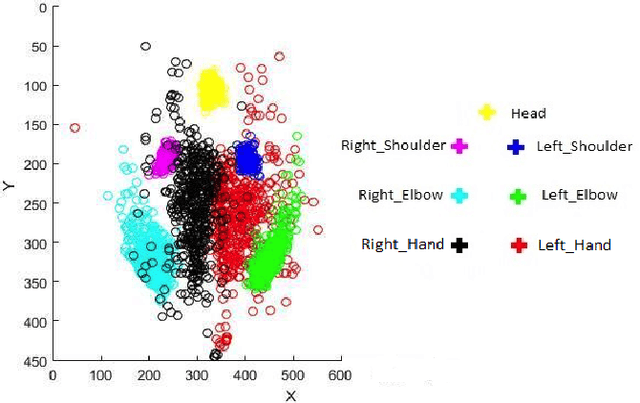

Evaluation of Deep Learning based Pose Estimation for Sign Language Recognition

Apr 19, 2016

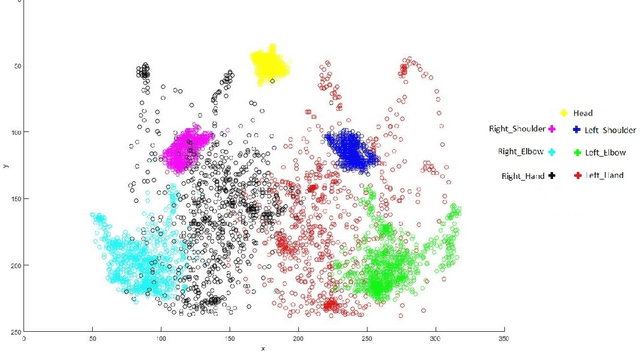

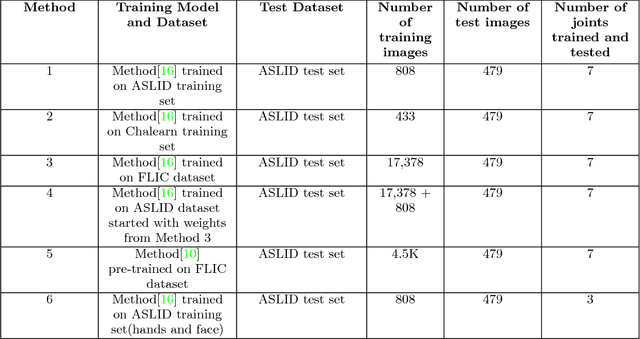

Abstract: Human body pose estimation and hand detection are two important tasks for systems that perform computer vision-based sign language recognition (SLR). However, both tasks are challenging, especially when the input is color video with no depth information. Many algorithms have been proposed in the literature for these tasks, and some of the most successful recent algorithms are based on deep learning. In this paper, we introduce a dataset for human pose estimation in the SLR domain. We evaluate the performance of two deep learning-based pose estimation methods by performing user-independent experiments on our dataset. We also perform transfer learning, and we obtain results demonstrating that transfer learning can improve pose estimation accuracy. The dataset and the results from these methods can create a useful baseline for future work.
