Michael Soloveitchik

Fully Convolutional Fractional Scaling

Mar 20, 2022

Abstract: We introduce a fully convolutional fractional scaling component, FCFS. Fully convolutional networks can be applied to inputs of any size, but they previously did not support non-integer scaling. Our architecture is simple, with an efficient single-layer implementation. Examples and code implementations of three common scaling methods are published.
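To make "non-integer scaling inside a convolutional pipeline" concrete, here is a minimal 1-D sketch of the classical upsample, filter, downsample scheme for a rational factor p/q. It uses linear interpolation as the example scaling method. This is an assumption for illustration, not the FCFS construction itself. The function name `fractional_scale_1d` is hypothetical, and the sketch uses corner-aligned sampling, so output index m maps to input coordinate m·q/p. Every stage is a convolution or a strided subsample, so the sketch works for any input length.

```python
import numpy as np


def fractional_scale_1d(x, p: int, q: int) -> np.ndarray:
    """Rescale a 1-D signal by the rational factor p/q with linear interpolation.

    Hypothetical helper: a classical polyphase-style sketch, not the FCFS method.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    # 1) Zero-insertion upsampling by p: input sample i lands at index i * p.
    up = np.zeros((n - 1) * p + 1)
    up[::p] = x
    # 2) Triangle kernel of length 2p - 1; convolving with it linearly
    #    interpolates between the inserted samples.
    kernel = 1.0 - np.abs(np.arange(-(p - 1), p)) / p
    full = np.convolve(up, kernel, mode="full")
    # Crop the centered part so output index j is aligned with up[j].
    interp = full[p - 1 : p - 1 + len(up)]
    # 3) Stride-q subsampling completes the p/q rescale.
    return interp[::q]


if __name__ == "__main__":
    # Scale a 4-sample ramp by 3/2.
    print(fractional_scale_1d([0, 1, 2, 3], p=3, q=2))
```

Steps 1 and 2 together amount to a transposed convolution with stride p, and step 3 is a stride-q subsample. That is one way such a scaler can be folded into very few layers. Note that when q > p, this sketch decimates without an anti-aliasing filter.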

Conditional Frechet Inception Distance

Mar 21, 2021

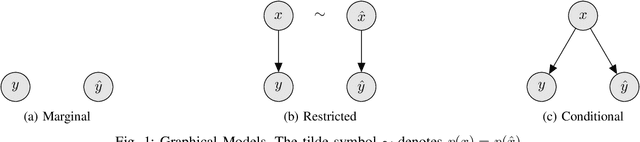

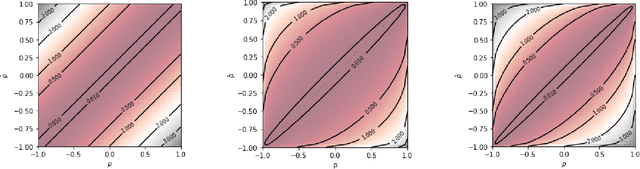

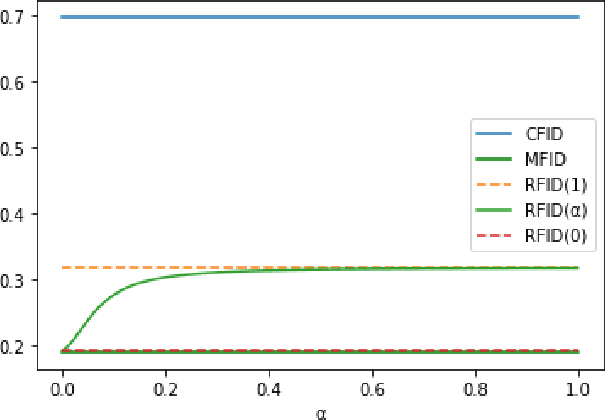

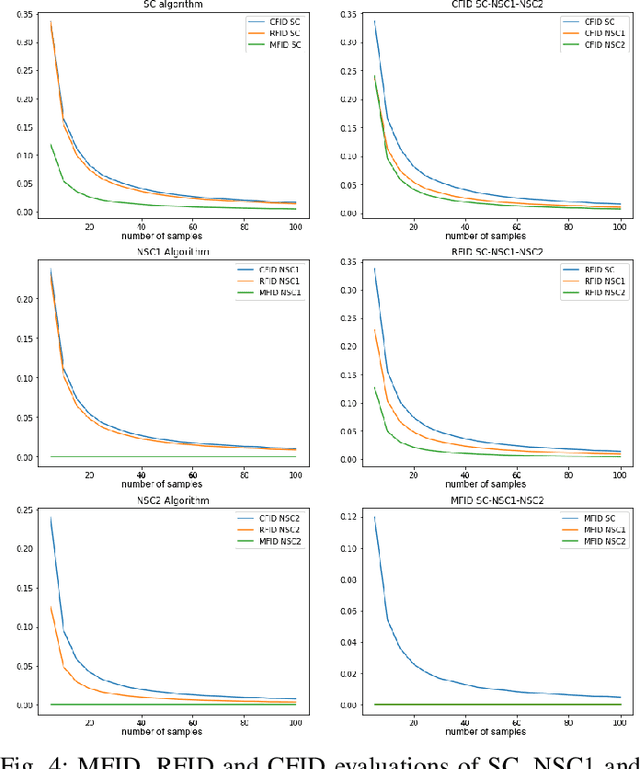

Abstract: We consider distance functions between conditional distribution functions. We focus on the Wasserstein metric and its Gaussian case, known as the Frechet Inception Distance (FID). We develop conditional versions of these metrics and analyze their relations. Then, we numerically compare the metrics in the context of performance evaluation of conditional generative models. Our results show that the metrics are similar in classical models, which are less susceptible to conditional collapse. However, the conditional distances are more informative in modern unsupervised, semi-supervised and unpaired models, where learning the relations between the inputs and outputs is the main challenge.
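For reference, the Gaussian case of the squared 2-Wasserstein distance mentioned above has the closed form ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^{1/2}). FID applies this formula to the means and covariances of Inception features. The minimal NumPy sketch below computes only this unconditional distance. It does not reproduce the conditional versions developed in the paper, and the helper names (`frechet_distance`, `fid_from_features`) are hypothetical. It relies on the identity Tr((Σ₁Σ₂)^{1/2}) = Tr((Σ₁^{1/2} Σ₂ Σ₁^{1/2})^{1/2}), which lets it work only with symmetric matrix square roots.

```python
import numpy as np


def _sqrtm_psd(a: np.ndarray) -> np.ndarray:
    """Square root of a symmetric positive semidefinite matrix via eigh."""
    w, v = np.linalg.eigh(a)
    w = np.clip(w, 0.0, None)  # clamp tiny negative eigenvalues from round-off
    return (v * np.sqrt(w)) @ v.T


def frechet_distance(mu1, sigma1, mu2, sigma2) -> float:
    """Squared 2-Wasserstein distance between N(mu1, sigma1) and N(mu2, sigma2)."""
    mu1 = np.atleast_1d(mu1).astype(float)
    mu2 = np.atleast_1d(mu2).astype(float)
    sigma1 = np.atleast_2d(sigma1).astype(float)
    sigma2 = np.atleast_2d(sigma2).astype(float)
    s1_half = _sqrtm_psd(sigma1)
    middle = s1_half @ sigma2 @ s1_half
    middle = 0.5 * (middle + middle.T)  # re-symmetrize against round-off
    cross_trace = np.trace(_sqrtm_psd(middle))  # = Tr((sigma1 sigma2)^{1/2})
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * cross_trace)


def fid_from_features(feats1: np.ndarray, feats2: np.ndarray) -> float:
    """Fit a Gaussian to each (n_samples, dim) feature matrix and compare them."""
    mu1, mu2 = feats1.mean(axis=0), feats2.mean(axis=0)
    sigma1 = np.atleast_2d(np.cov(feats1, rowvar=False))
    sigma2 = np.atleast_2d(np.cov(feats2, rowvar=False))
    return frechet_distance(mu1, sigma1, mu2, sigma2)


if __name__ == "__main__":
    # 1-D check: N(0, 1) vs N(3, 4) gives (0 - 3)^2 + (1 - 2)^2 = 10.
    print(frechet_distance(0.0, 1.0, 3.0, 4.0))
```

In 1-D the formula reduces to (m₁ − m₂)² + (s₁ − s₂)², where s is the standard deviation. The `__main__` check relies on this.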
