Michael Ridenhour

Flurry: a Fast Framework for Reproducible Multi-layered Provenance Graph Representation Learning

Mar 05, 2022

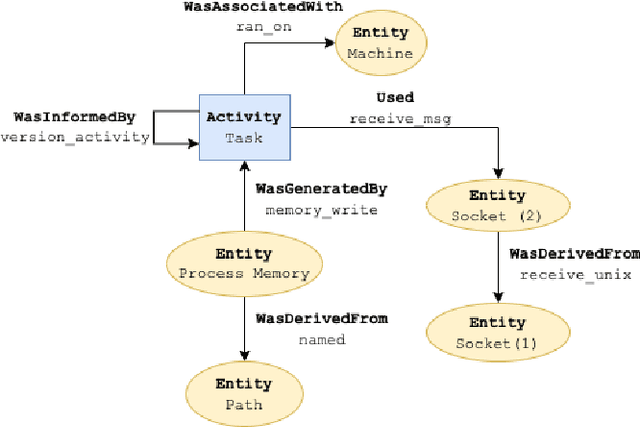

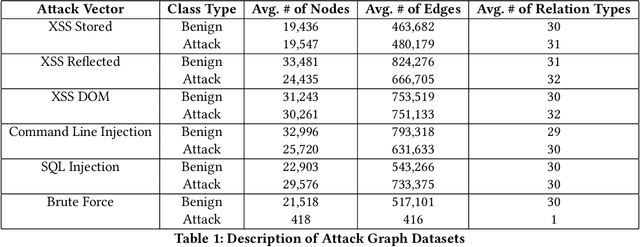

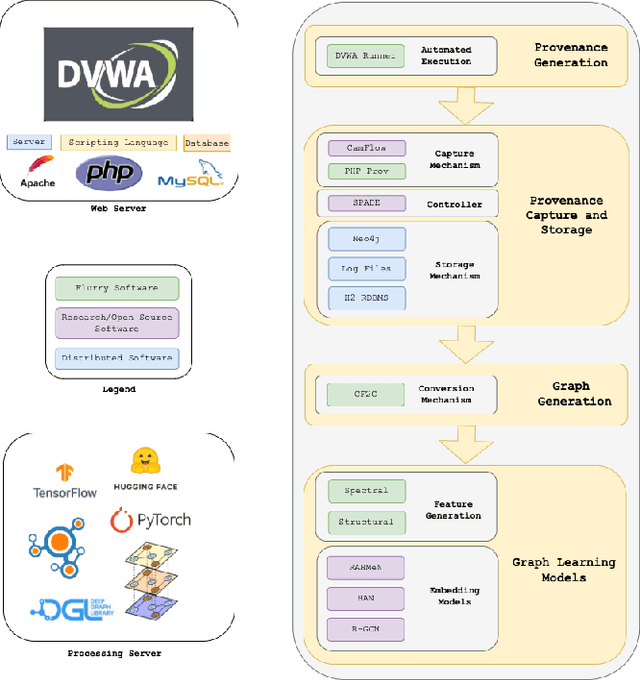

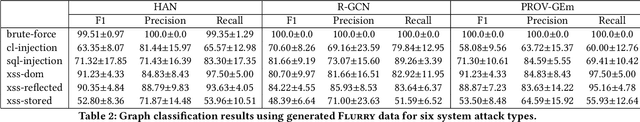

Abstract: Complex heterogeneous dynamic networks like knowledge graphs are powerful constructs that can be used in modeling data provenance from computer systems. From a security perspective, these attributed graphs enable causality analysis and tracing for analyzing a myriad of cyberattacks. However, there is a paucity of systematically developed pipelines that transform system executions and provenance into usable graph representations for machine learning tasks. This lack of instrumentation severely inhibits scientific advancement in provenance graph machine learning by hindering reproducibility and limiting the availability of data that are critical for techniques like graph neural networks. To fulfill this need, we present Flurry, an end-to-end data pipeline which simulates cyberattacks, captures provenance data from these attacks at multiple system and application layers, converts audit logs from these attacks into data provenance graphs, and incorporates this data with a framework for training deep neural models that supports preconfigured or custom-designed models for analysis in real-world resilient systems. We showcase this pipeline by processing data from multiple system attacks and performing anomaly detection via graph classification using current benchmark graph representation learning frameworks. Flurry provides a fast, customizable, extensible, and transparent solution for providing this much-needed data to cybersecurity professionals.
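
To illustrate the step of converting audit logs into a provenance graph, here is a minimal sketch. It is not Flurry's implementation: the record fields (`pid`, `op`, `object_type`, `object`, `ts`) and the function name `logs_to_provenance_graph` are hypothetical, chosen only to show how log events can become typed nodes and attributed, time-ordered edges.

```python
def logs_to_provenance_graph(records):
    """Turn audit-log-like records into typed nodes and attributed edges.

    Each record is a dict with hypothetical keys:
    pid, op, object_type, object, ts.
    """
    nodes = {}   # node id -> attributes
    edges = []   # (source id, target id, attributes)
    for rec in records:
        subject = ("process", rec["pid"])
        target = (rec["object_type"], rec["object"])
        nodes[subject] = {"type": "process"}
        nodes[target] = {"type": rec["object_type"]}
        edges.append((subject, target, {"op": rec["op"], "ts": rec["ts"]}))
    # Order edges by timestamp so causal chains can be traced forward.
    edges.sort(key=lambda edge: edge[2]["ts"])
    return nodes, edges


# Hypothetical records, for illustration only.
sample = [
    {"pid": 41, "op": "write", "object_type": "file", "object": "/tmp/a", "ts": 2},
    {"pid": 41, "op": "read", "object_type": "file", "object": "/etc/passwd", "ts": 1},
]
nodes, edges = logs_to_provenance_graph(sample)
```

The resulting node and edge lists could then be handed to a graph learning library for graph classification.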

Pay Attention to Relations: Multi-embeddings for Attributed Multiplex Networks

Mar 03, 2022

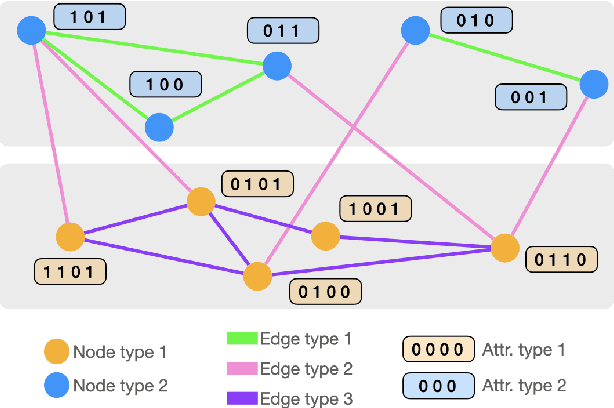

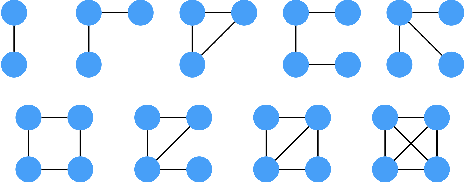

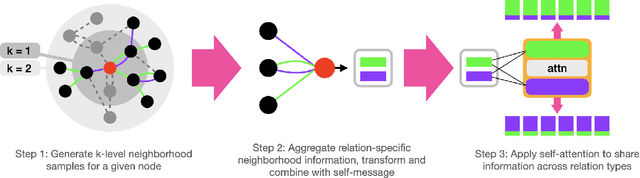

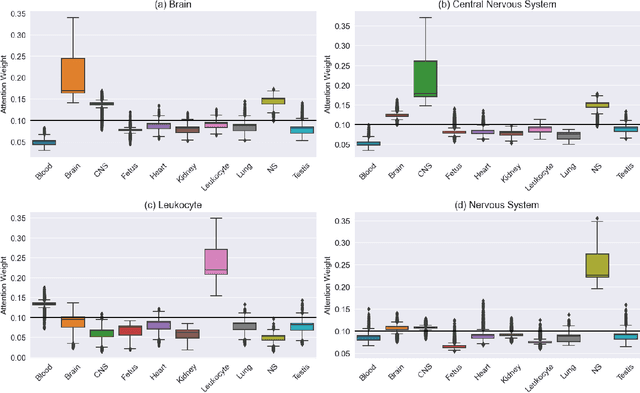

Abstract: Graph Convolutional Neural Networks (GCNs) have become effective machine learning algorithms for many downstream network mining tasks such as node classification, link prediction, and community detection. However, most GCN methods have been developed for homogeneous networks and are limited to a single embedding for each node. Complex systems, often represented by heterogeneous, multiplex networks, present a more difficult challenge for GCN models and require that such techniques capture the diverse contexts and assorted interactions that occur between nodes. In this work, we propose RAHMeN, a novel unified relation-aware embedding framework for attributed heterogeneous multiplex networks. Our model incorporates node attributes, motif-based features, relation-based GCN approaches, and relational self-attention to learn embeddings of nodes with respect to the various relations in a heterogeneous, multiplex network. In contrast to prior work, RAHMeN is a more expressive embedding framework that embraces the multi-faceted nature of nodes in such networks, producing a set of multi-embeddings that capture the varied and diverse contexts of nodes. We evaluate our model on four real-world datasets from Amazon, Twitter, YouTube, and Tissue PPIs in both transductive and inductive settings. Our results show that RAHMeN consistently outperforms comparable state-of-the-art network embedding models, and an analysis of RAHMeN's relational self-attention demonstrates that our model discovers interpretable connections between relations present in heterogeneous, multiplex networks.
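
The idea of attending across a node's per-relation embeddings can be sketched as follows. This is generic scaled dot-product self-attention, used here as a stand-in; the abstract does not specify RAHMeN's exact formulation, and the function name and relation names are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def relational_self_attention(rel_embeddings):
    """Mix one node's per-relation embeddings with dot-product attention.

    rel_embeddings maps a relation name to that node's embedding (a list
    of floats, all of equal length). Returns one attended embedding per
    relation plus the attention weights, which indicate how strongly each
    relation draws on the others.
    """
    relations = list(rel_embeddings)
    dim = len(rel_embeddings[relations[0]])
    scale = math.sqrt(dim)
    attended, weights = {}, {}
    for query_rel in relations:
        query = rel_embeddings[query_rel]
        scores = [
            sum(q * k for q, k in zip(query, rel_embeddings[key_rel])) / scale
            for key_rel in relations
        ]
        attn = softmax(scores)
        weights[query_rel] = dict(zip(relations, attn))
        attended[query_rel] = [
            sum(w * rel_embeddings[r][i] for w, r in zip(attn, relations))
            for i in range(dim)
        ]
    return attended, weights


# Hypothetical two-relation example.
embeddings = {"co_view": [1.0, 0.0], "co_purchase": [0.0, 1.0]}
multi_embeddings, attention = relational_self_attention(embeddings)
```

Keeping one output vector per relation, rather than pooling into a single vector, mirrors the multi-embedding idea described in the abstract.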

Detecting Online Hate Speech: Approaches Using Weak Supervision and Network Embedding Models

Jul 24, 2020

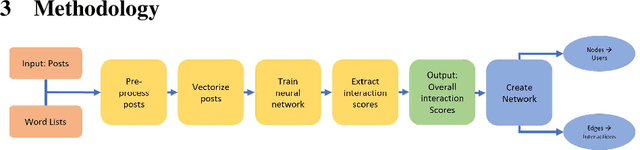

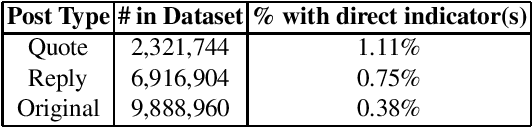

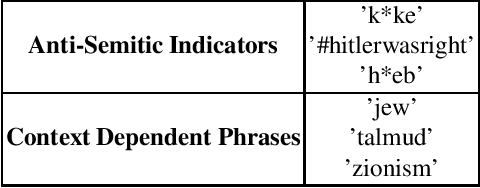

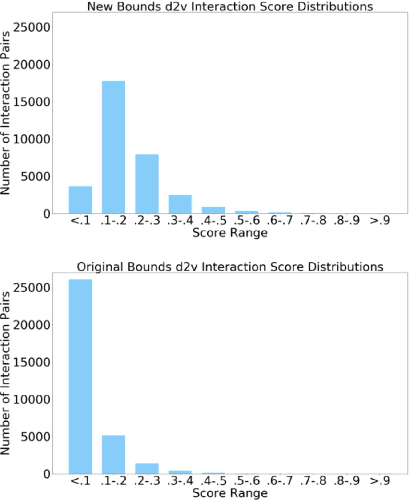

Abstract: The ubiquity of social media has transformed online interactions among individuals. Despite positive effects, it has also allowed anti-social elements to unite in alternative social media environments (e.g., Gab.com) like never before. Detecting such hateful speech using automated techniques can allow social media platforms to moderate their content and prevent nefarious activities like hate speech propagation. In this work, we propose a weak supervision deep learning model that (i) quantitatively uncovers hateful users and (ii) presents a novel qualitative analysis to uncover indirect hateful conversations. This model scores content on the interaction level, rather than the post or user level, and allows for characterization of users who most frequently participate in hateful conversations. We evaluate our model on 19.2M posts and show that our weak supervision model outperforms the baseline models in identifying indirect hateful interactions. We also analyze a multilayer network, constructed from two types of user interactions in Gab (quote and reply) and interaction scores from the weak supervision model as edge weights, to predict hateful users. We utilize multilayer network embedding methods to generate features for the prediction task, and we show that considering user context from multiple networks helps achieve better predictions of hateful users in Gab. We obtain up to a 7% performance gain compared to single-layer or homogeneous network embedding models.
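
The multilayer network described above, with one layer per interaction type and interaction scores as edge weights, can be sketched like this. The data, the function names, and the simple per-layer weighted out-degree feature are hypothetical; the paper uses multilayer network embedding methods, which this sketch does not reproduce.

```python
from collections import defaultdict


def build_multilayer(interactions):
    """Build layer -> source -> target -> accumulated edge weight.

    interactions is an iterable of (layer, source, target, score) tuples,
    where score plays the role of the interaction score from the model.
    """
    layers = defaultdict(lambda: defaultdict(dict))
    for layer, source, target, score in interactions:
        previous = layers[layer][source].get(target, 0.0)
        layers[layer][source][target] = previous + score
    return layers


def user_features(layers, user, layer_names):
    """One feature per layer: the user's weighted out-degree in that layer."""
    return [
        sum(layers.get(name, {}).get(user, {}).values())
        for name in layer_names
    ]


# Hypothetical interactions on the two layers named in the abstract.
interactions = [
    ("quote", "alice", "bob", 0.5),
    ("quote", "alice", "carol", 0.25),
    ("reply", "alice", "bob", 0.125),
]
network = build_multilayer(interactions)
features = user_features(network, "alice", ["quote", "reply"])
```

Concatenating features across layers is the simplest way to give a classifier user context from more than one network.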
