Michael Langberg

From Federated Learning to $\mathbb{X}$-Learning: Breaking the Barriers of Decentrality Through Random Walks

Sep 03, 2025

Abstract:We provide our perspective on $\mathbb{X}$-Learning ($\mathbb{X}$L), a novel distributed learning architecture that generalizes and extends the concept of decentralization. Our goal is to present a vision for $\mathbb{X}$L, introducing its unexplored design considerations and degrees of freedom. To this end, we shed light on the intuitive yet non-trivial connections between $\mathbb{X}$L, graph theory, and Markov chains. We also present a series of open research directions to stimulate further research.

Hierarchical Federated Foundation Models over Wireless Networks for Multi-Modal Multi-Task Intelligence: Integration of Edge Learning with D2D/P2P-Enabled Fog Learning Architectures

Sep 03, 2025

Abstract:The rise of foundation models (FMs) has reshaped the landscape of machine learning. As these models continue to grow, leveraging geo-distributed data from wireless devices has become increasingly critical, giving rise to federated foundation models (FFMs). More recently, FMs have evolved into multi-modal multi-task (M3T) FMs (e.g., GPT-4) capable of processing diverse modalities across multiple tasks, which motivates a new underexplored paradigm: M3T FFMs. In this paper, we unveil an unexplored variation of M3T FFMs by proposing hierarchical federated foundation models (HF-FMs), which in turn expose two overlooked heterogeneity dimensions to fog/edge networks that have a direct impact on these emerging models: (i) heterogeneity in collected modalities and (ii) heterogeneity in executed tasks across fog/edge nodes. HF-FMs strategically align the modular structure of M3T FMs, comprising modality encoders, prompts, mixture-of-experts (MoEs), adapters, and task heads, with the hierarchical nature of fog/edge infrastructures. Moreover, HF-FMs support the optional use of device-to-device (D2D) communications, allowing horizontal module relaying and localized cooperative training among nodes when feasible. By delving into the architectural design of HF-FMs, we highlight their unique capabilities along with a series of tailored future research directions. Finally, to demonstrate their potential, we prototype HF-FMs in a wireless network setting and release the open-source code for the development of HF-FMs with the goal of fostering exploration in this untapped field (GitHub: https://github.com/payamsiabd/M3T-FFM).

Dynamic D2D-Assisted Federated Learning over O-RAN: Performance Analysis, MAC Scheduler, and Asymmetric User Selection

Apr 09, 2024

Abstract:Existing studies on federated learning (FL) are mostly focused on system orchestration for static snapshots of the network and making static control decisions (e.g., spectrum allocation). However, real-world wireless networks are susceptible to temporal variations of wireless channel capacity and users' datasets. In this paper, we incorporate multi-granular system dynamics (MSDs) into FL, including (M1) dynamic wireless channel capacity, captured by a set of discrete-time events, called $\mathscr{D}$-Events, and (M2) dynamic datasets of users. The latter is characterized by (M2-a) modeling the dynamics of user's dataset size via an ordinary differential equation and (M2-b) introducing dynamic model drift, formulated via a partial differential inequality, drawing concrete analytical connections between the dynamics of users' datasets and FL accuracy. We then conduct FL orchestration under MSDs by introducing dynamic cooperative FL with dedicated MAC schedulers (DCLM), exploiting the unique features of open radio access network (O-RAN). DCLM proposes (i) a hierarchical device-to-device (D2D)-assisted model training, (ii) dynamic control decisions through dedicated O-RAN MAC schedulers, and (iii) asymmetric user selection. We provide extensive theoretical analysis to study the convergence of DCLM. We then optimize the degrees of freedom (e.g., user selection and spectrum allocation) in DCLM through a highly non-convex optimization problem. We develop a systematic approach to obtain the solution for this problem, opening the door to solving a broad variety of network-aware FL optimization problems. We show the efficiency of DCLM via numerical simulations and provide a series of future directions.

A Unified Framework for Approximating and Clustering Data

May 28, 2016

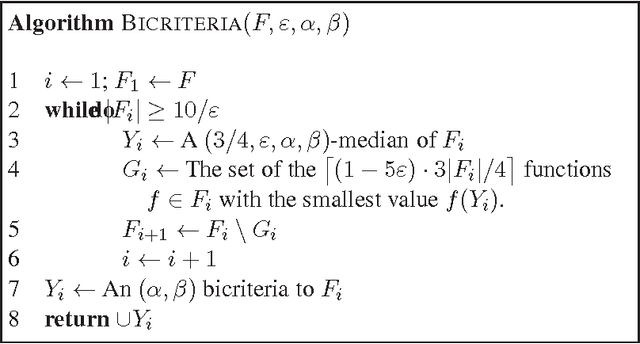

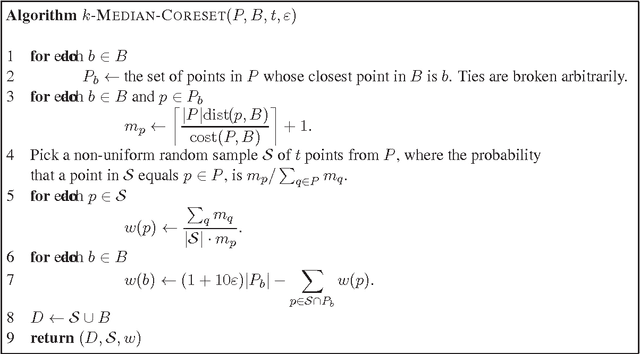

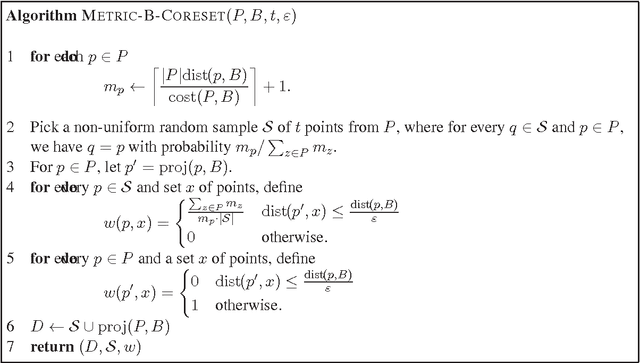

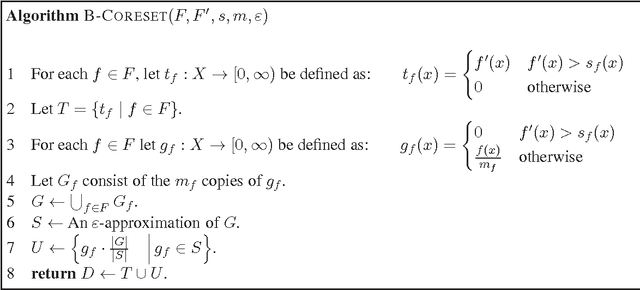

Abstract:Given a set $F$ of $n$ positive functions over a ground set $X$, we consider the problem of computing $x^*$ that minimizes the expression $\sum_{f\in F}f(x)$, over $x\in X$. A typical application is *shape fitting*, where we wish to approximate a set $P$ of $n$ elements (say, points) by a shape $x$ from a (possibly infinite) family $X$ of shapes. Here, each point $p\in P$ corresponds to a function $f$ such that $f(x)$ is the distance from $p$ to $x$, and we seek a shape $x$ that minimizes the sum of distances from each point in $P$. In the $k$-clustering variant, each $x\in X$ is a tuple of $k$ shapes, and $f(x)$ is the distance from $p$ to its closest shape in $x$. Our main result is a unified framework for constructing *coresets* and *approximate clustering* for such general sets of functions. To achieve our results, we forge a link between the classic and well-defined notion of $\varepsilon$-approximations from the theory of PAC Learning and VC dimension and the relatively new (and not so consistent) paradigm of coresets, which are some kind of "compressed representation" of the input set $F$. Using traditional techniques, a coreset usually implies an LTAS (linear time approximation scheme) for the corresponding optimization problem, which can be computed in parallel, via one pass over the data, and using only polylogarithmic space (i.e., in the streaming model). We show how to generalize the results of our framework for squared distances (as in $k$-means), distances to the $q$th power, and deterministic constructions.
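The objective described in this abstract can be made concrete with a small sketch. It is only an illustration of the cost $\sum_{f\in F}f(x)$ in the $k$-clustering setting, not the paper's coreset construction. It assumes that the shapes are plain 2D points and that distances are Euclidean; the names `dist`, `cost`, and `best_candidate` are hypothetical helpers introduced here.

```python
import math

def dist(p, q):
    """Euclidean distance between two 2D points (assumed metric)."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def cost(points, shapes):
    """Sum over points of the distance to the closest shape in the tuple.

    With a 1-tuple this is the plain shape-fitting cost; with a k-tuple
    it is the k-clustering cost described in the abstract.
    """
    return sum(min(dist(p, s) for s in shapes) for p in points)

def best_candidate(points, candidates):
    """Pick the candidate tuple of shapes with the smallest total cost.

    Brute force over a finite candidate list, for illustration only.
    """
    return min(candidates, key=lambda x: cost(points, x))

# Four points forming two tight pairs, far apart from each other.
points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
one_center = ((5.0, 0.5),)                 # k = 1
two_centers = ((0.0, 0.5), (10.0, 0.5))    # k = 2

print(cost(points, one_center))
print(cost(points, two_centers))
```

With two centers, every point lies at distance 0.5 from its closest center, so the total cost is far smaller than with a single center placed between the two pairs. A coreset, as described in the abstract, would be a compressed representation of the input on which such costs can be approximated.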
