Michael Kaliske

Finite Operator Learning: Bridging Neural Operators and Numerical Methods for Efficient Parametric Solution and Optimization of PDEs

Jul 04, 2024

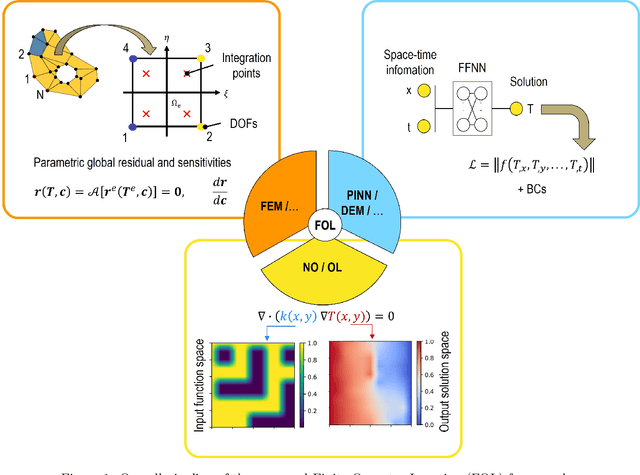

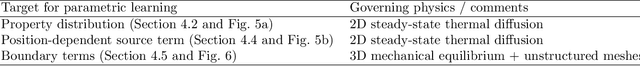

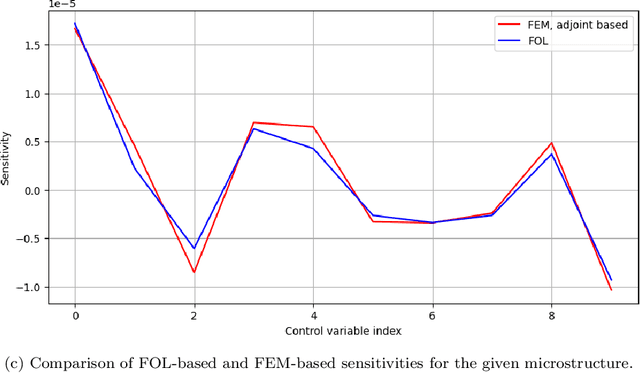

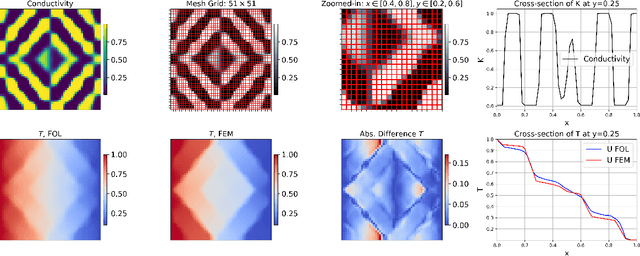

Abstract: We introduce a method that combines neural operators, physics-informed machine learning, and standard numerical methods for solving PDEs. The proposed approach extends each of these methods and unifies them within a single framework. We can parametrically solve partial differential equations in a data-free manner and provide accurate sensitivities, meaning the derivatives of the solution space with respect to the design space. These capabilities enable gradient-based optimization without the typical sensitivity analysis costs, unlike adjoint methods that scale directly with the number of response functions. Our Finite Operator Learning (FOL) approach uses a simple feed-forward neural network model to directly map the discrete design space (i.e., the parametric input space) to the discrete solution space (i.e., a finite number of sensor points in an arbitrarily shaped domain), ensuring compliance with physical laws by building them into the loss functions. The discretized governing equations, as well as the design and solution spaces, can be derived from any well-established numerical technique. In this work, we employ the Finite Element Method (FEM) to approximate fields and their spatial derivatives. Subsequently, we conduct Sobolev training to minimize a multi-objective loss function, which includes the discretized weak form of the energy functional, boundary condition violations, and the stationarity of the residuals with respect to the design variables. Our study focuses on the steady-state heat equation within heterogeneous materials that exhibit significant phase contrast and possibly temperature-dependent conductivity. The network's tangent matrix is directly used for gradient-based optimization to improve the microstructure's heat transfer characteristics. ...
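To illustrate what "the network's tangent matrix" can mean in practice, the following is a minimal sketch, not the authors' implementation. It assumes a single hidden tanh layer and small, untrained, hypothetical weights (`W1`, `B1`, `W2`, `B2`), and computes the Jacobian of the discrete outputs with respect to the discrete design inputs in closed form.

```python
import math


def forward(x, w1, b1, w2, b2):
    """One hidden tanh layer: returns (outputs, hidden activations)."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    outputs = [sum(w * h for w, h in zip(row, hidden)) + b
               for row, b in zip(w2, b2)]
    return outputs, hidden


def tangent_matrix(x, w1, b1, w2, b2):
    """Jacobian d(outputs)/d(inputs) = W2 * diag(1 - tanh^2) * W1."""
    _, hidden = forward(x, w1, b1, w2, b2)
    return [[sum(w2[o][j] * (1.0 - hidden[j] ** 2) * w1[j][i]
                 for j in range(len(hidden)))
             for i in range(len(x))]
            for o in range(len(w2))]


# Hypothetical, untrained weights chosen only for illustration.
W1 = [[0.5, -0.3], [0.2, 0.8], [-0.6, 0.1]]
B1 = [0.0, 0.1, -0.2]
W2 = [[1.0, -0.5, 0.3], [0.4, 0.7, -0.9]]
B2 = [0.05, -0.05]

design = [1.0, 2.0]  # stand-in for discrete design variables
J = tangent_matrix(design, W1, B1, W2, B2)  # rows: outputs, columns: inputs
```

Given an objective defined on the outputs, a gradient step on the design variables would multiply the transpose of `J` by the objective's derivative with respect to the outputs, which requires no additional solves once the network is trained.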

Integration of physics-informed operator learning and finite element method for parametric learning of partial differential equations

Jan 04, 2024

Abstract: We present a method that employs physics-informed deep learning techniques for parametrically solving partial differential equations. The focus is on the steady-state heat equation within heterogeneous solids exhibiting significant phase contrast. Similar equations arise in diverse applications such as chemical diffusion, electrostatics, and Darcy flow. The neural network aims to establish the link between complex thermal conductivity profiles and the resulting temperature distributions and heat flux components within the microstructure, under fixed boundary conditions. A distinctive aspect is that we do not rely on classical solvers such as the finite element method to generate training data. A noteworthy contribution is our approach to defining the loss function, which is based on the discretized weak form of the governing equation. This not only reduces the required order of derivatives but also eliminates the need for automatic differentiation in the construction of loss terms, at the cost of accepting potential numerical errors from the chosen discretization method. As a result, the loss function in this work is an algebraic equation, which significantly enhances training efficiency. We benchmark our methodology against the standard finite element method, demonstrating accurate yet faster predictions of temperature and flux profiles using the trained neural network. We also show that the proposed method is more accurate than purely data-driven approaches in unforeseen scenarios.
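The idea of an algebraic loss built from a discretized weak form can be sketched in one dimension. The example below is an illustrative assumption, not the paper's formulation: it uses linear finite elements on a uniform 1D mesh, piecewise-constant conductivity per element, no heat source, and temperatures prescribed at both ends. The function names are hypothetical.

```python
def weak_form_residuals(temps, conductivities, length=1.0):
    """Nodal residuals of the weak form of -(k T')' = 0 at interior nodes."""
    n_elems = len(conductivities)
    if len(temps) != n_elems + 1:
        raise ValueError("need one more nodal temperature than elements")
    h = length / n_elems
    # k_e * dT/dx on each element (constant for linear elements).
    k_grad = [k * (temps[e + 1] - temps[e]) / h
              for e, k in enumerate(conductivities)]
    # The hat function at node i has slope +1/h on element i-1 and -1/h on
    # element i, so the weak-form integral reduces to a simple difference.
    return [k_grad[i - 1] - k_grad[i] for i in range(1, n_elems)]


def physics_loss(temps, conductivities, length=1.0):
    """Sum of squared residuals: purely algebraic in the nodal temperatures."""
    return sum(r * r for r in weak_form_residuals(temps, conductivities, length))


def exact_temps(conductivities, t_left, t_right):
    """Reference solution: the temperature drop per element scales with 1/k."""
    resist = [1.0 / k for k in conductivities]
    total = sum(resist)
    temps = [t_left]
    for r in resist:
        temps.append(temps[-1] + (t_right - t_left) * r / total)
    return temps


k = [1.0, 10.0, 1.0, 10.0]              # strong phase contrast
linear_guess = [1.0, 0.75, 0.5, 0.25, 0.0]
loss_guess = physics_loss(linear_guess, k)
loss_exact = physics_loss(exact_temps(k, 1.0, 0.0), k)
```

In a training loop, `temps` would be the network output for a given conductivity input, and the loss needs no automatic differentiation with respect to the spatial coordinates because the derivatives are already encoded by the element shape functions.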

Mixed formulation of physics-informed neural networks for thermo-mechanically coupled systems and heterogeneous domains

Feb 09, 2023

Abstract: Deep learning methods find a solution to a boundary value problem by defining the loss functions of neural networks based on governing equations, boundary conditions, and initial conditions. Furthermore, the authors show that for many engineering problems, designing the loss functions based on first-order derivatives results in much better accuracy, especially when there are heterogeneity and variable jumps in the domain \cite{REZAEI2022PINN}. The so-called mixed formulation for PINN is applied to basic engineering problems such as the balance of linear momentum and diffusion problems. In this work, the proposed mixed formulation is further extended to solve multi-physical problems. In particular, we focus on a stationary thermo-mechanically coupled system of equations that can be utilized in designing the microstructure of advanced materials. First, sequential unsupervised training, and second, fully coupled unsupervised learning are discussed. The results of each approach are compared in terms of accuracy and corresponding computational cost. Finally, the idea of transfer learning is employed by combining data and physics to assess the capability of the network to predict the response of the system for unseen cases. The outcome of this work will be useful for many other engineering applications where DL is employed on multiple coupled systems of equations.
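A loss based only on first-order derivatives can be sketched for 1D steady heat conduction, where the temperature and the flux are treated as separate fields. This is a simplified illustration under stated assumptions: the candidate fields are plain Python callables rather than neural networks, central finite differences stand in for automatic differentiation, and the name `mixed_loss` is hypothetical.

```python
def mixed_loss(temp, flux, conductivity, points, eps=1e-5):
    """Mean squared first-order residuals of the mixed formulation.

    Constitutive law: q + k(x) * dT/dx = 0
    Energy balance:   dq/dx = 0   (no heat source)
    """
    total = 0.0
    for x in points:
        # Central differences replace autodiff in this sketch.
        d_temp = (temp(x + eps) - temp(x - eps)) / (2.0 * eps)
        d_flux = (flux(x + eps) - flux(x - eps)) / (2.0 * eps)
        constitutive = flux(x) + conductivity(x) * d_temp
        balance = d_flux
        total += constitutive ** 2 + balance ** 2
    return total / len(points)


points = [0.1 * i for i in range(1, 10)]      # collocation points in (0, 1)
loss_consistent = mixed_loss(lambda x: 1.0 - x,   # T(x)
                             lambda x: 2.0,       # q(x) = -k T' = 2
                             lambda x: 2.0,       # k(x)
                             points)
loss_wrong_flux = mixed_loss(lambda x: 1.0 - x,
                             lambda x: 1.0,       # inconsistent flux
                             lambda x: 2.0,
                             points)
```

Because neither residual contains a second derivative, a jump in `conductivity` only enters through a product with a first derivative, which is the motivation the abstract gives for the mixed formulation in heterogeneous domains.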
