Michael Fanuel

Disentangled Representation Learning and Generation with Manifold Optimization

Jun 12, 2020

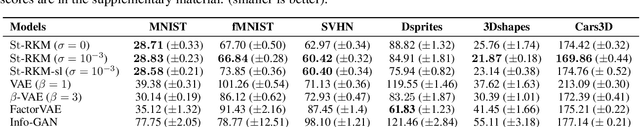

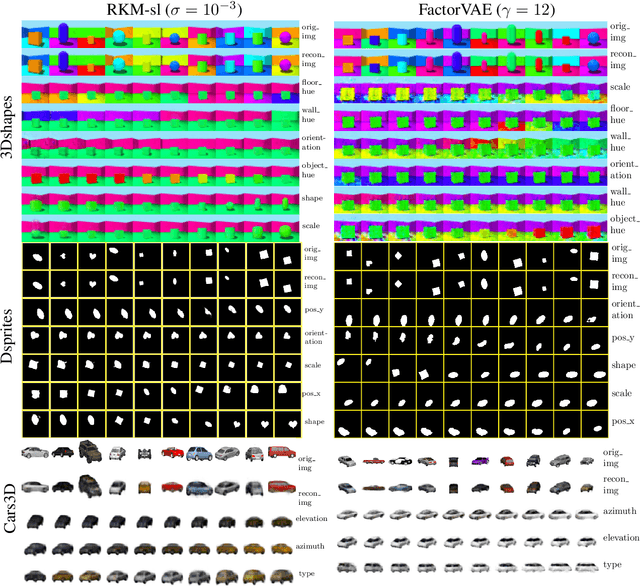

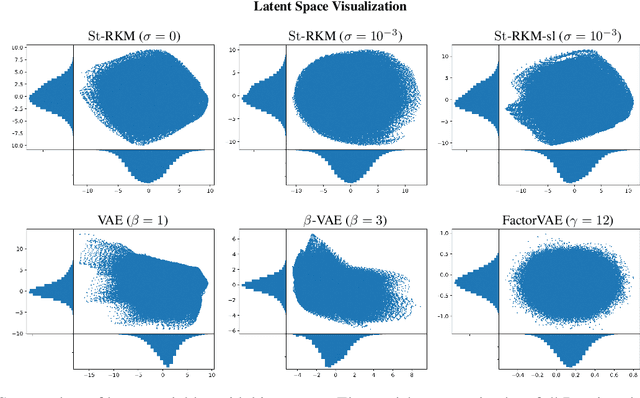

Abstract: Disentanglement is a desirable property in representation learning that increases the interpretability of generative models such as Variational Auto-Encoders (VAE), Generative Adversarial Models and their many variants. In the context of latent space models, this work presents a representation learning framework that explicitly promotes disentanglement by combining an auto-encoder with Principal Component Analysis (PCA) in latent space. The proposed objective is the sum of an auto-encoder error term and a PCA reconstruction error in the feature space. This can be interpreted as a Restricted Kernel Machine with an interconnection matrix on the Stiefel manifold. The construction encourages a matching between the principal directions in latent space and the directions of orthogonal variation in data space. The training algorithm uses a stochastic optimization method on the Stiefel manifold, which increases the computing time only marginally compared to an analogous VAE. Our theoretical discussion and various experiments show that the proposed model improves over many VAE variants, with special emphasis on disentanglement learning.
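To make the shape of the objective concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes linear maps `W_enc` and `W_dec` as stand-ins for the encoder and decoder, a hypothetical weighting `lam` between the two terms, and a matrix `U` with orthonormal columns (a point on the Stiefel manifold). The update step uses a plain Riemannian gradient step with a QR retraction, chosen here for simplicity; the abstract only says that a stochastic optimization method on the Stiefel manifold is used and does not specify which one.

```python
import numpy as np

def pca_residual(H, U):
    """Mean squared norm of the part of each latent row outside span(U)."""
    R = H - H @ U @ U.T
    return np.mean(np.sum(R**2, axis=1))

def objective(X, W_enc, W_dec, U, lam=1.0):
    """Auto-encoder error plus PCA reconstruction error (illustrative form)."""
    H = X @ W_enc                    # latent features, shape (n, l)
    X_hat = (H @ U @ U.T) @ W_dec    # decode the projected features
    ae_err = np.mean(np.sum((X - X_hat)**2, axis=1))
    return lam * ae_err + pca_residual(H, U)

def stiefel_step(U, G, lr):
    """One descent step on the Stiefel manifold using a QR retraction.

    G is the Euclidean gradient of the loss with respect to U.
    """
    # Project G onto the tangent space at U.
    sym = (U.T @ G + G.T @ U) / 2
    xi = G - U @ sym
    # Retract back onto the manifold; fix column signs so the map is continuous.
    Q, R = np.linalg.qr(U - lr * xi)
    signs = np.sign(np.diag(R))
    signs[signs == 0] = 1
    return Q * signs

# Toy run on the PCA term only: latent features with unequal variances.
rng = np.random.default_rng(0)
H = rng.standard_normal((200, 5)) * np.array([3.0, 2.0, 1.0, 0.5, 0.1])
C = H.T @ H / H.shape[0]             # second-moment matrix of the features
U = np.linalg.qr(rng.standard_normal((5, 2)))[0]

initial = pca_residual(H, U)
for _ in range(500):
    G = -2 * C @ U                   # gradient of the PCA term for orthonormal U
    U = stiefel_step(U, G, lr=0.01)
final = pca_residual(H, U)
```

In this toy run `U` stays orthonormal after every step because the retraction returns the Q factor of a QR decomposition, and the PCA residual shrinks as the columns of `U` align with the high-variance latent directions. A full model would also backpropagate the auto-encoder term through nonlinear networks and use minibatches.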
