Michael DeFilippo

Distributed Control Barrier Functions for Safe Multi-Vehicle Navigation in Heterogeneous USV Fleets

Jan 16, 2026

Abstract: Collision avoidance in heterogeneous fleets of uncrewed vessels is challenging because decision-making processes and controllers often differ between platforms, and it is further complicated by limits on sharing trajectories and control values in real time. This paper presents a pragmatic approach that addresses these issues by adding a control filter on each autonomous vehicle that assumes worst-case behavior from other contacts, including crewed vessels. This distributed safety control filter is developed using control barrier function (CBF) theory, and its application is clearly described to ensure explainability of these safety-critical methods. This work compares the worst-case CBF approach with a Collision Regulations (COLREGS) behavior-based approach in simulated encounters. Real-world experiments with three different uncrewed vessels and a human-operated vessel were performed to confirm that the approach is effective across a range of platforms and is robust to uncooperative behavior from human operators. Results show that combining CBF methods with COLREGS behaviors achieves the best safety and efficiency.
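To make the idea of a worst-case safety filter concrete, here is a minimal sketch. It is not the paper's formulation: it assumes a single-integrator vehicle (the control is a 2D velocity), a circular safety distance `d_safe`, and a known upper bound `v_other_max` on the other vessel's speed. The function name and all parameters are hypothetical. With barrier h = ||p - p_other||^2 - d_safe^2, the filter enforces dh/dt + alpha * h >= 0 for every admissible velocity of the other vessel, and changes the nominal command as little as possible.

```python
import math

def cbf_filter(p, p_other, u_nom, d_safe, v_other_max, alpha=1.0):
    """Minimally modify u_nom so a worst-case CBF condition holds.

    Assumed model (illustrative only): own position p moves with velocity u,
    the other vessel moves with any velocity of norm <= v_other_max.
    """
    rx, ry = p[0] - p_other[0], p[1] - p_other[1]
    dist = math.hypot(rx, ry)
    h = dist * dist - d_safe * d_safe          # barrier value, >= 0 when safe

    # Worst case over the other vessel's velocity gives the affine constraint
    #   a . u >= b,  with a = 2 r  and  b = 2 ||r|| v_other_max - alpha h.
    ax, ay = 2.0 * rx, 2.0 * ry
    b = 2.0 * dist * v_other_max - alpha * h

    slack = ax * u_nom[0] + ay * u_nom[1] - b
    norm_sq = ax * ax + ay * ay
    if slack >= 0.0 or norm_sq == 0.0:
        return u_nom                            # nominal command already safe

    # Closed-form projection of u_nom onto the half-plane a . u >= b.
    scale = -slack / norm_sq
    return (u_nom[0] + scale * ax, u_nom[1] + scale * ay)
```

Far from the contact the nominal command passes through unchanged. Close to it, the filter removes just enough closing speed to satisfy the constraint. A real vessel would need a dynamics model with heading and actuator limits, which this sketch leaves out.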

Towards Explaining Autonomy with Verbalised Decision Tree States

Sep 28, 2022

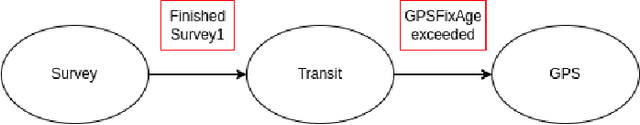

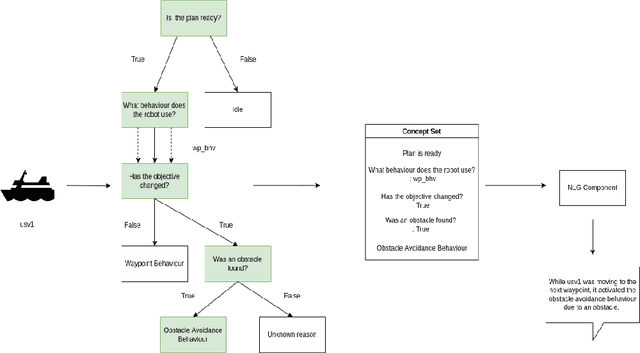

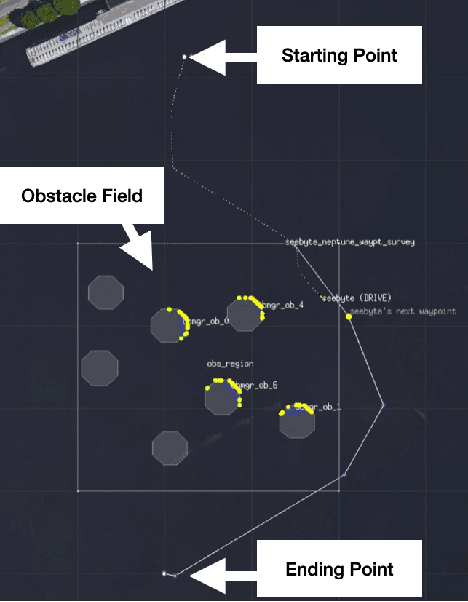

Abstract: The development of new AUV technology has increased the range of tasks that AUVs can tackle and the length of their operations. As a result, AUVs are capable of handling highly complex operations. However, these missions do not fit easily into the traditional method of defining a mission as a series of pre-planned waypoints, because it is not possible to know in advance everything that might occur during the mission. This results in a gap between the operator's expectations and actual operational performance. This gap can diminish trust between operators and AUVs, resulting in unnecessary mission interruptions. To bridge this gap between in-mission robotic behaviours and operators' expectations, this work aims to provide a framework that explains the decisions and actions taken by an autonomous vehicle during the mission in an easy-to-understand manner. Additionally, the objective is to have an autonomy-agnostic system that can be added as an additional layer on top of any autonomy architecture. To make the approach applicable across different autonomous systems equipped with different autonomies, this work decouples the inner workings of the autonomy from the decision points and the resulting executed actions by applying Knowledge Distillation. Finally, to present the explanations to the operators in a more natural way, the output of the distilled decision tree is combined with natural language explanations and reported to the operators as sentences. For this reason, an additional step known as Concept2Text Generation is added at the end of the explanation pipeline.
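The last step of that pipeline, turning the state of a distilled decision tree into a sentence, can be illustrated with a small sketch. Everything here is an assumption for illustration: the tree is hand-written rather than distilled from an autonomy, the feature names (`battery_pct`, `obstacle_range_m`), thresholds, and actions are hypothetical, and the sentence template is far simpler than a real Concept2Text stage.

```python
def explain(tree, state):
    """Walk a decision tree for `state` and verbalise the path taken.

    Internal nodes: {"feature", "threshold", "low", "high"}.
    Leaf nodes:     {"action"}.
    """
    reasons = []
    node = tree
    while "action" not in node:
        feature, threshold = node["feature"], node["threshold"]
        value = state[feature]
        if value <= threshold:
            reasons.append(f"{feature} ({value}) is at most {threshold}")
            node = node["low"]
        else:
            reasons.append(f"{feature} ({value}) is above {threshold}")
            node = node["high"]
    return (f"The vehicle chose to {node['action']} because "
            + " and ".join(reasons) + ".")


# Hypothetical tree standing in for one distilled from an autonomy system.
TREE = {
    "feature": "battery_pct", "threshold": 20,
    "low": {"action": "return to base"},
    "high": {
        "feature": "obstacle_range_m", "threshold": 15,
        "low": {"action": "avoid the obstacle"},
        "high": {"action": "continue the survey"},
    },
}

print(explain(TREE, {"battery_pct": 60, "obstacle_range_m": 8}))
```

Because the explanation is built only from the decision points and the chosen action, the same function works regardless of which autonomy produced the behaviour being approximated.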

MassMIND: Massachusetts Maritime INfrared Dataset

Sep 09, 2022

Abstract: Recent advances in deep learning technology have triggered radical progress in the autonomy of ground vehicles. Marine coastal Autonomous Surface Vehicles (ASVs) that are regularly used for surveillance, monitoring and other routine tasks can benefit from this autonomy. Long-haul deep-sea transportation activities are additional opportunities. These two use cases present very different terrains: coastal waters have many obstacles, structures and human presence, while the deep sea is mostly devoid of such obstacles. Variations in environmental conditions are common to both terrains. Robust labeled datasets mapping such terrains are crucial in improving the situational awareness that can drive autonomy. However, only a limited number of such maritime datasets are available, and these primarily consist of optical images. Although Long Wave Infrared (LWIR) is a strong complement to the optical spectrum that helps in extreme light conditions, a labeled public dataset with LWIR images does not currently exist. In this paper, we fill this gap by presenting a labeled dataset of over 2,900 LWIR segmented images captured in a coastal maritime environment under diverse conditions. The images are labeled using instance segmentation and classified into seven categories: sky, water, obstacle, living obstacle, bridge, self and background. We also evaluate this dataset across three deep learning architectures (UNet, PSPNet, DeepLabv3) and provide a detailed analysis of its efficacy. While the dataset focuses on coastal terrain, it can equally help deep-sea use cases. Such terrain would have less traffic, and a classifier trained on cluttered environments would be able to handle sparse scenes effectively. We share this dataset with the research community in the hope that it spurs new scene understanding capabilities in the maritime environment.
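The abstract does not say which metric is used to compare the three architectures. As an illustration only, the sketch below computes mean intersection-over-union (mIoU), a common choice for segmentation, over the seven categories listed above. The class ordering, the function name, and the flattened-list input format are assumptions, not part of the dataset's tooling.

```python
# Assumed class ordering; the dataset's actual label ids may differ.
CLASSES = ["sky", "water", "obstacle", "living obstacle",
           "bridge", "self", "background"]

def mean_iou(pred, truth, num_classes=len(CLASSES)):
    """Mean IoU over classes that appear in pred or truth.

    pred and truth are equal-length sequences of integer class ids,
    one per pixel (a flattened label map).
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, truth) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, truth) if p == c or t == c)
        if union:                       # skip classes absent from both maps
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0
```

Classes that appear in neither map are skipped, so sparse deep-sea scenes with few categories present are not penalised for the missing ones.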