Michael Bukatin

Safety without alignment

Mar 18, 2023

Abstract: Currently, the dominant paradigm in AI safety is alignment with human values. Here we describe progress on developing an alternative approach to safety, based on ethical rationalism (Gewirth:1978), and propose an inherently safe implementation path via hybrid theorem provers in a sandbox. As AGIs evolve, their alignment may fade, but their rationality can only increase (otherwise more rational ones will have a significant evolutionary advantage) so an approach that ties their ethics to their rationality has clear long-term advantages.

Programming Patterns in Dataflow Matrix Machines and Generalized Recurrent Neural Nets

Aug 03, 2018

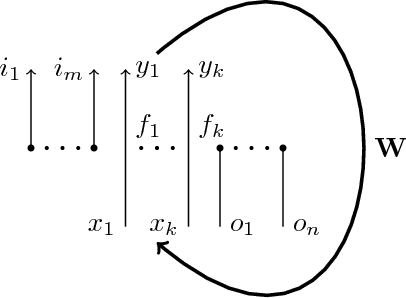

Abstract: Dataflow matrix machines arise naturally in the context of synchronous dataflow programming with linear streams. They can be viewed as a rather powerful generalization of recurrent neural networks. Similarly to recurrent neural networks, large classes of dataflow matrix machines are described by matrices of numbers, and therefore dataflow matrix machines can be synthesized by computing their matrices. At the same time, the evidence is fairly strong that dataflow matrix machines have sufficient expressive power to be a convenient general-purpose programming platform. Because of the network nature of this platform, programming patterns often correspond to patterns of connectivity in the generalized recurrent neural networks understood as programs. This paper explores a variety of such programming patterns.

Dataflow matrix machines as programmable, dynamically expandable, self-referential generalized recurrent neural networks

Jun 21, 2018

Abstract: Dataflow matrix machines are a powerful generalization of recurrent neural networks. They work with multiple types of linear streams and multiple types of neurons, including higher-order neurons which dynamically update the matrix describing weights and topology of the network in question while the network is running. It seems that the power of dataflow matrix machines is sufficient for them to be a convenient general purpose programming platform. This paper explores a number of useful programming idioms and constructions arising in this context.

Dataflow Matrix Machines as a Generalization of Recurrent Neural Networks

May 28, 2018

Abstract: Dataflow matrix machines are a powerful generalization of recurrent neural networks. They work with multiple types of arbitrary linear streams and multiple types of powerful neurons, and they allow the incorporation of higher-order constructions. We expect them to be useful in machine learning and probabilistic programming, and in the synthesis of dynamic systems and of deterministic and probabilistic programs.

Dataflow Matrix Machines and V-values: a Bridge between Programs and Neural Nets

May 23, 2018

Abstract: 1) Dataflow matrix machines (DMMs) generalize neural nets by replacing streams of numbers with linear streams (streams supporting linear combinations), allowing arbitrary input and output arities for activation functions, countable-sized networks with a finite, dynamically changeable active part capable of unbounded growth, and a very expressive self-referential mechanism. 2) DMMs are suitable for general-purpose programming, while retaining the key property of recurrent neural networks: programs are expressed via matrices of real numbers, and continuous changes to those matrices produce arbitrarily small variations in the associated programs. 3) Spaces of V-values (vector-like elements based on nested maps) are particularly useful, enabling DMMs with variadic activation functions and conveniently representing conventional data structures.
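
As an illustration of the "vector-like elements based on nested maps" mentioned in this abstract, here is a minimal sketch in Python, not taken from the paper. It assumes a V-value can be modeled as a nested dictionary with numeric leaves, with the empty dictionary playing the role of the zero vector. The function names `scale`, `add`, and `linear_combination` are hypothetical, and the sketch deliberately does not handle the case where one operand has a number and the other has a map at the same path.

```python
from typing import Dict, Iterable, Tuple, Union

# Assumed model: a V-value is either a number (leaf) or a map from keys to V-values.
VValue = Union[float, Dict[str, "VValue"]]


def scale(c: float, v: VValue) -> VValue:
    """Multiply every numeric leaf of v by the scalar c."""
    if isinstance(v, dict):
        return {k: scale(c, x) for k, x in v.items()}
    return c * v


def add(a: VValue, b: VValue) -> VValue:
    """Add two V-values leaf by leaf; a path missing on one side counts as zero."""
    if isinstance(a, dict) and isinstance(b, dict):
        out = dict(a)
        for k, x in b.items():
            out[k] = add(out[k], x) if k in out else x
        return out
    if isinstance(a, (int, float)) and isinstance(b, (int, float)):
        return a + b
    # Simplification of this sketch: number-vs-map at the same path is rejected.
    raise TypeError("number and map at the same path are not handled in this sketch")


def linear_combination(terms: Iterable[Tuple[float, VValue]]) -> VValue:
    """Compute the sum of c_i * v_i, starting from the empty map as zero."""
    result: VValue = {}
    for c, v in terms:
        result = add(result, scale(c, v))
    return result


x = {"a": 1.0, "b": {"c": 2.0}}
y = {"b": {"c": 1.0, "d": 4.0}}
combo = linear_combination([(2.0, x), (0.5, y)])
print(combo)
```

Under these assumptions, the two operands need not share the same keys: the sum simply takes the union of their paths, which is what lets such nested maps stand in for variable-shaped data structures.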

Dataflow Matrix Machines as a Model of Computations with Linear Streams

May 03, 2017

Abstract: We give an overview of dataflow matrix machines as a Turing-complete generalization of recurrent neural networks and as a programming platform. We describe the vector space of finite prefix trees with numerical leaves, which allows us to combine the expressive power of dataflow matrix machines with the simplicity of traditional recurrent neural networks.

Linear Models of Computation and Program Learning

Dec 15, 2015

Abstract: We consider two classes of computations which admit taking linear combinations of execution runs: probabilistic sampling and generalized animation. We argue that the task of program learning should be more tractable for these architectures than for conventional deterministic programs. We look at the recent advances in the "sampling the samplers" paradigm in higher-order probabilistic programming. We also discuss connections between partial inconsistency, non-monotonic inference, and vector semantics.
