Michael Berger

Distribution-free risk assessment of regression-based machine learning algorithms

Oct 05, 2023

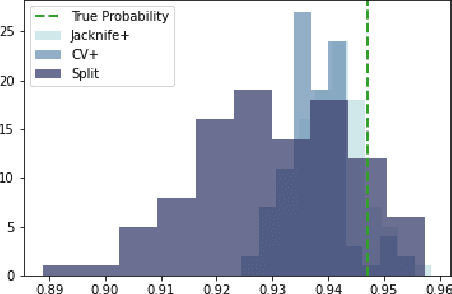

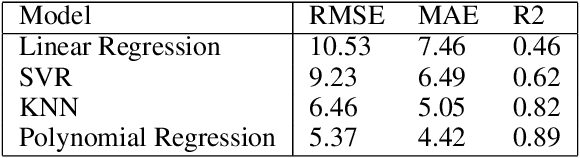

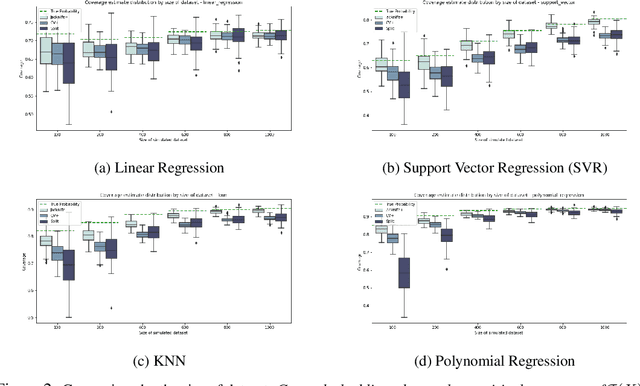

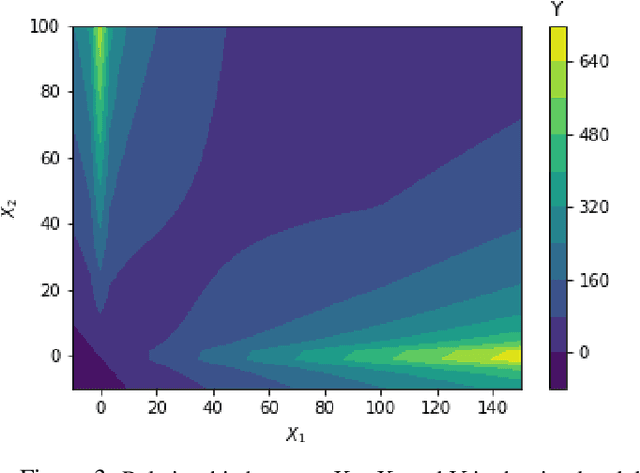

Abstract: Machine learning algorithms have grown in sophistication over the years and are increasingly deployed for real-life applications. However, when using machine learning techniques in practical settings, particularly in high-risk applications such as medicine and engineering, obtaining the failure probability of the predictive model is critical. We refer to this problem as the risk-assessment task. We focus on regression algorithms and the risk-assessment task of computing the probability of the true label lying inside an interval defined around the model's prediction. We solve the risk-assessment problem using the conformal prediction approach, which provides prediction intervals that are guaranteed to contain the true label with a given probability. Using this coverage property, we prove that our approximated failure probability is conservative in the sense that it is not lower than the true failure probability of the ML algorithm. We conduct extensive experiments to empirically study the accuracy of the proposed method for problems with and without covariate shift. Our analysis focuses on different modeling regimes, dataset sizes, and conformal prediction methodologies.
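As a rough illustration of the idea in this abstract, the sketch below uses split conformal prediction with absolute-residual scores to bound the probability that a regression prediction misses the true label by more than a chosen tolerance. It is not the paper's implementation: the function name `conservative_failure_probability`, its arguments, and the choice of score are assumptions made for this example, and the bound relies on the calibration and test points being exchangeable (so it does not cover the covariate-shift setting).

```python
import numpy as np


def conservative_failure_probability(cal_predictions, cal_labels, tolerance):
    """Upper-bound P(|Y - f(X)| > tolerance) from a held-out calibration set.

    Hypothetical helper for illustration only. With n calibration scores
    s_i = |y_i - f(x_i)|, the k-th smallest score defines a split conformal
    interval with marginal coverage at least k / (n + 1). Taking k as the
    number of scores not exceeding the tolerance gives an interval that fits
    inside [f(x) - tolerance, f(x) + tolerance], so the failure probability
    is at most 1 - k / (n + 1).
    """
    predictions = np.asarray(cal_predictions, dtype=float)
    labels = np.asarray(cal_labels, dtype=float)
    if predictions.shape != labels.shape:
        raise ValueError("predictions and labels must have the same shape")

    scores = np.abs(labels - predictions)  # absolute-residual conformity scores
    n = scores.size
    k = int(np.sum(scores <= tolerance))   # calibration points within tolerance
    return 1.0 - k / (n + 1)


# Toy calibration set: the model always predicts 0.
preds = [0.0, 0.0, 0.0, 0.0]
labels = [0.1, -0.2, 0.5, 2.0]
estimate = conservative_failure_probability(preds, labels, tolerance=1.0)
```

Here three of the four calibration residuals fall within the tolerance, so the estimate is 1 - 3/5 = 0.4, which is larger than the empirical miss rate of 0.25. The extra margin from dividing by n + 1 is what makes the estimate conservative, and it shrinks as the calibration set grows.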

Towards an objective characterization of an individual's facial movements using Self-Supervised Person-Specific-Models

Nov 15, 2022

Abstract: Disentangling facial movements from other facial characteristics, particularly from facial identity, remains a challenging task, as facial movements display great variation between individuals. In this paper, we aim to characterize individual-specific facial movements. We present a novel training approach to learn facial movements independently of other facial characteristics, focusing on each individual separately. We propose self-supervised Person-Specific Models (PSMs), in which one model per individual can learn to extract an embedding of the facial movements independently of the person's identity and other structural facial characteristics from unlabeled facial video. These models are trained using encoder-decoder-like architectures. We provide quantitative and qualitative evidence that a PSM learns a meaningful facial embedding that discovers fine-grained movements otherwise not characterized by a General Model (GM), which is trained across individuals and characterizes general patterns of facial movements. We present quantitative and qualitative evidence that this approach is easily scalable and generalizable to new individuals: facial-movement knowledge learned on one person can quickly and effectively be transferred to a new person. Lastly, we propose a novel PSM using curriculum temporal learning to leverage the temporal contiguity between video frames. Our code, analysis details, and all pretrained models are available on GitHub and in the Supplementary Materials.
