Mi Jung Park

Training-Free Safe Denoisers for Safe Use of Diffusion Models

Feb 11, 2025

Abstract: There is growing concern over the safety of powerful diffusion models (DMs), as they are often misused to produce inappropriate, not-safe-for-work (NSFW) content or generate copyrighted material or data of individuals who wish to be forgotten. Many existing methods tackle these issues by heavily relying on text-based negative prompts or extensively retraining DMs to eliminate certain features or samples. In this paper, we take a radically different approach, directly modifying the sampling trajectory by leveraging a negation set (e.g., unsafe images, copyrighted data, or datapoints needed to be excluded) to avoid specific regions of data distribution, without needing to retrain or fine-tune DMs. We formally derive the relationship between the expected denoised samples that are safe and those that are not safe, leading to our $\textit{safe}$ denoiser which ensures its final samples are away from the area to be negated. Inspired by the derivation, we develop a practical algorithm that successfully produces high-quality samples while avoiding negation areas of the data distribution in text-conditional, class-conditional, and unconditional image generation scenarios. These results hint at the great potential of our training-free safe denoiser for using DMs more safely.

Differentially Private Kernel Inducing Points (DP-KIP) for Privacy-preserving Data Distillation

Jan 31, 2023

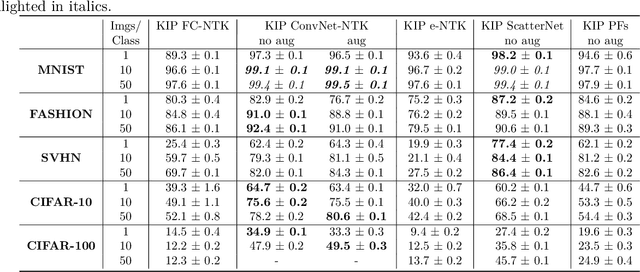

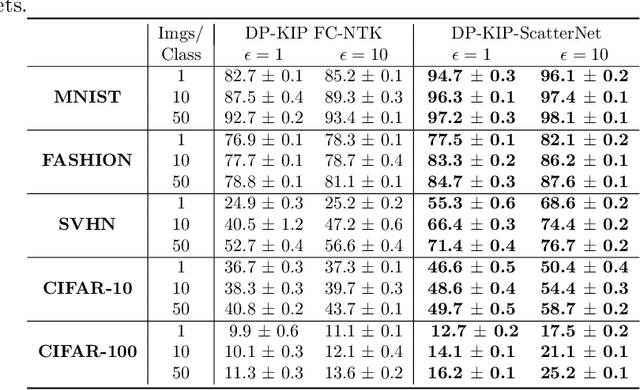

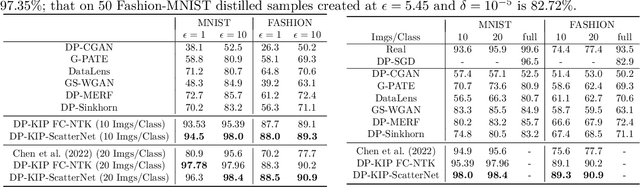

Abstract: While it is tempting to believe that data distillation preserves privacy, distilled data's empirical robustness against known attacks does not imply a provable privacy guarantee. Here, we develop a provably privacy-preserving data distillation algorithm, called differentially private kernel inducing points (DP-KIP). DP-KIP is an instantiation of DP-SGD on kernel ridge regression (KRR). Following a recent work, we use neural tangent kernels and minimize the KRR loss to estimate the distilled datapoints (i.e., kernel inducing points). We provide a computationally efficient JAX implementation of DP-KIP, which we test on several popular image and tabular datasets to show its efficacy in data distillation with differential privacy guarantees.
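Since the abstract describes DP-KIP as an instantiation of DP-SGD, the generic DP-SGD gradient step may be a useful reference point. The sketch below is not the authors' JAX implementation; it is a minimal, standard-library-only illustration of the usual DP-SGD recipe (clip each per-example gradient, sum, add Gaussian noise, average). The function names `clip_to_norm` and `dp_sgd_gradient` and the parameters `max_norm` and `noise_multiplier` are hypothetical, and gradients are plain lists of floats. In a DP-KIP setting, each per-example gradient would presumably be the gradient of the KRR loss with respect to the distilled points for one private training example.

```python
import math
import random


def clip_to_norm(grad, max_norm):
    """Scale grad down so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0.0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Return a privatized average gradient (generic DP-SGD recipe).

    Each per-example gradient is clipped to L2 norm max_norm, the clipped
    gradients are summed, Gaussian noise with standard deviation
    noise_multiplier * max_norm is added to every coordinate, and the
    result is divided by the number of examples.
    """
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        clipped = clip_to_norm(grad, max_norm)
        total = [t + c for t, c in zip(total, clipped)]
    sigma = noise_multiplier * max_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [n / len(per_example_grads) for n in noisy]


# Hypothetical usage: two toy per-example gradients in two dimensions.
rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]
private_grad = dp_sgd_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng)
```

Clipping bounds how much any single example can change the summed gradient, which is what lets the Gaussian noise scale be tied to `max_norm`. The privacy accounting that turns `noise_multiplier` and the number of steps into an (epsilon, delta) guarantee is not shown here.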
