Mehdi Sattari

Dynamic Model Fine-Tuning For Extreme MIMO CSI Compression

Jan 30, 2025

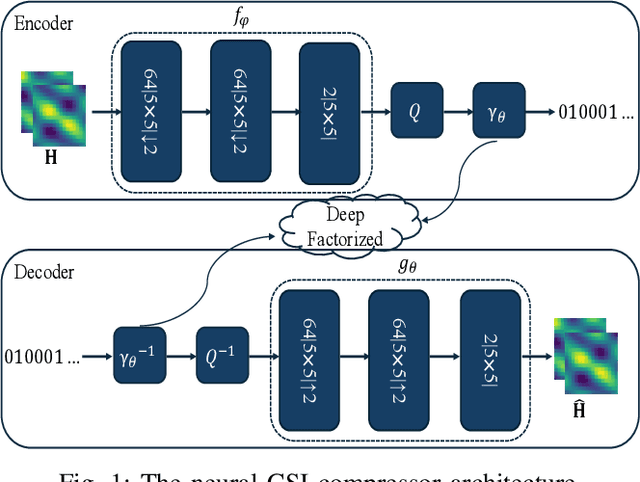

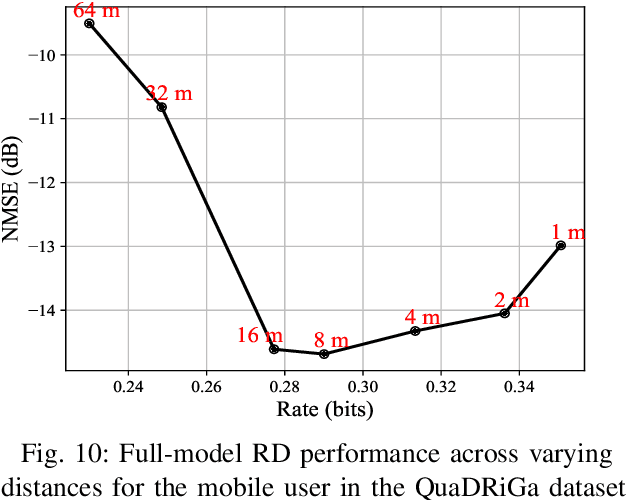

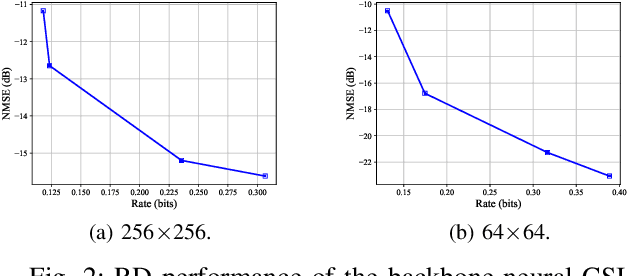

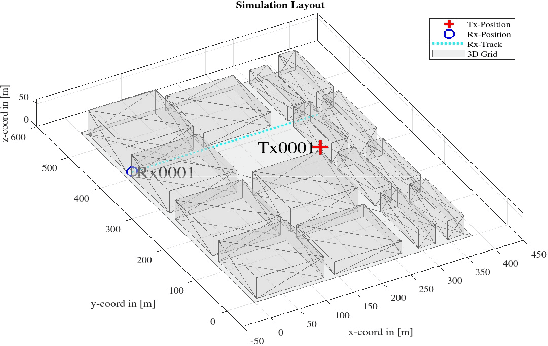

Abstract: Efficient channel state information (CSI) compression is crucial in frequency division duplexing (FDD) massive multiple-input multiple-output (MIMO) systems due to excessive feedback overhead. Recently, deep learning-based compression techniques have demonstrated superior performance across various data types, including CSI. However, these approaches often experience performance degradation when the data distribution changes, owing to their limited generalization capabilities. To address this challenge, we propose a model fine-tuning approach for CSI feedback in massive MIMO systems. The idea is to fine-tune the encoder/decoder network models dynamically using recent CSI samples. First, we explore encoder-only fine-tuning, where only the encoder parameters are updated, leaving the decoder and latent parameters unchanged. Next, we consider full-model fine-tuning, where the encoder and decoder models are jointly updated. Unlike encoder-only fine-tuning, full-model fine-tuning requires the updated decoder and latent parameters to be transmitted to the decoder side. To handle this efficiently, we propose different prior distributions for the model updates, such as uniform and truncated Gaussian, to entropy code the updates together with the compressed CSI and to account for the additional feedback overhead imposed by conveying them. Moreover, we incorporate quantized model updates during fine-tuning to reflect the impact of quantization in the deployment phase. Our results demonstrate that full-model fine-tuning significantly enhances the rate-distortion (RD) performance of neural CSI compression. Furthermore, we analyze how often full-model fine-tuning should be applied in a new wireless environment and identify the fine-tuning interval that achieves the best RD trade-off.
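The abstract names uniform and truncated Gaussian priors for entropy coding the quantized model updates but gives no further detail. The following is a minimal sketch of how the bit cost of one quantized update could be estimated under each prior. It is not the paper's implementation: the function names, the uniform quantization grid, and the values of `step`, `limit`, and `sigma` are all assumptions chosen for illustration.

```python
import math


def gaussian_cdf(x, sigma):
    """CDF of a zero-mean Gaussian with standard deviation sigma."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))


def quantize(delta, step, limit):
    """Round an update to the nearest grid point and clip to [-limit, limit].

    Assumes limit is a multiple of step (hypothetical quantizer).
    """
    q = round(delta / step) * step
    return max(-limit, min(limit, q))


def bits_uniform(step, limit):
    """Bits per update when every grid point is equally likely."""
    n_levels = int(round(2 * limit / step)) + 1
    return math.log2(n_levels)


def bits_truncated_gaussian(q, step, limit, sigma):
    """Bits for grid point q under a zero-mean Gaussian truncated to the grid range.

    Each grid point receives the probability mass of its quantization bin,
    renormalized so the masses over all grid points sum to one.
    """
    lo, hi = -limit - step / 2, limit + step / 2
    mass = gaussian_cdf(q + step / 2, sigma) - gaussian_cdf(q - step / 2, sigma)
    norm = gaussian_cdf(hi, sigma) - gaussian_cdf(lo, sigma)
    return -math.log2(mass / norm)


# Hypothetical parameter updates, mostly near zero as after light fine-tuning.
updates = [0.0004, -0.0012, 0.011, -0.019, 0.0]
step, limit, sigma = 0.01, 0.05, 0.01

quantized = [quantize(d, step, limit) for d in updates]
cost_uniform = len(quantized) * bits_uniform(step, limit)
cost_gaussian = sum(bits_truncated_gaussian(q, step, limit, sigma) for q in quantized)
print(f"uniform prior: {cost_uniform:.2f} bits")
print(f"truncated Gaussian prior: {cost_gaussian:.2f} bits")
```

Under these assumed values, updates that quantize to zero cost fewer bits with the Gaussian prior than with the uniform one, which illustrates why the choice of prior affects the feedback overhead added by the model updates.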

Full-Duplex Millimeter Wave MIMO Channel Estimation: A Neural Network Approach

Feb 06, 2024

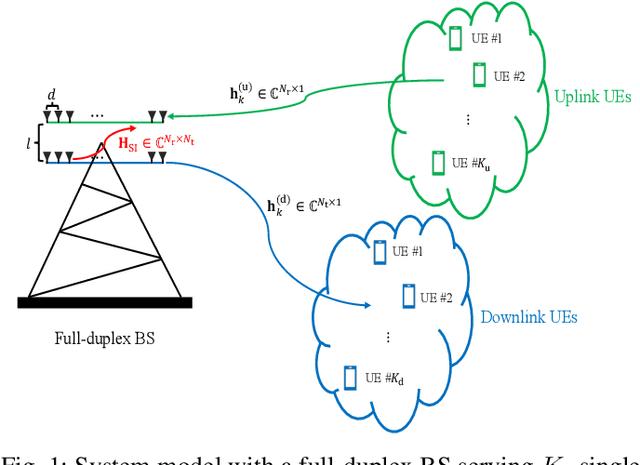

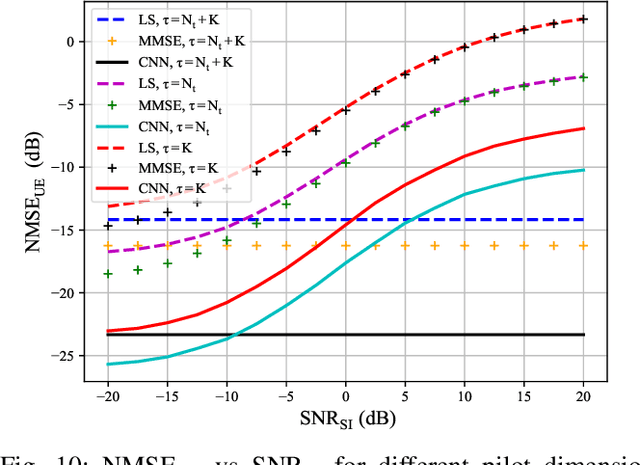

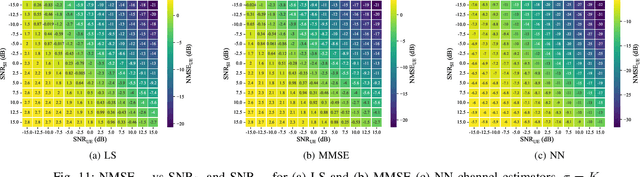

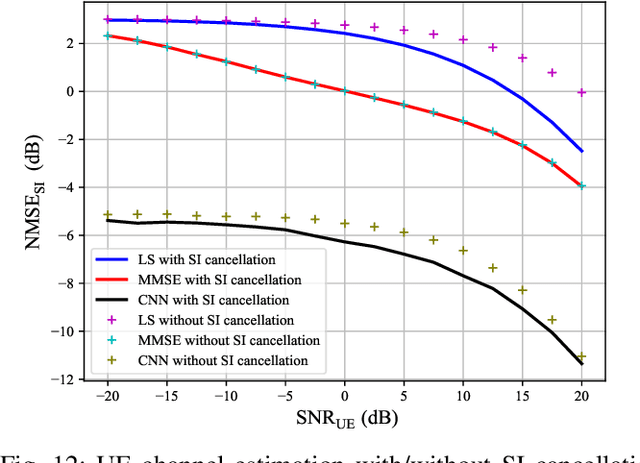

Abstract: Millimeter wave (mmWave) multiple-input multiple-output (MIMO) is now a reality with great potential for further improvement. We study full-duplex transmissions as an effective way to improve mmWave MIMO systems. Compared to half-duplex systems, full-duplex transmissions may offer higher data rates and lower latency. However, full-duplex transmission is hindered by self-interference (SI) at the receive antennas, and SI channel estimation becomes a crucial step in making full-duplex systems feasible. In this paper, we address the problem of channel estimation in full-duplex mmWave MIMO systems using neural networks (NNs). Our approach involves sharing pilot resources between user equipments (UEs) and transmit antennas at the base station (BS), aiming to reduce the pilot overhead in full-duplex systems to a level comparable to that of a half-duplex system. Additionally, in the case of separate antenna configurations in a full-duplex BS, providing channel estimates of the transmit antenna (TX) arrays to the downlink UEs poses another challenge, as the TX arrays are not capable of receiving pilot signals. To address this, we employ an NN to map the channel from the downlink UEs to the receive antenna (RX) arrays onto the channel from the TX arrays to the downlink UEs. We further elaborate on how NNs perform the estimation with different architectures (e.g., different numbers of hidden layers), under non-linear distortion (e.g., with a 1-bit analog-to-digital converter (ADC)), and under different channel conditions (e.g., low-correlated and high-correlated channels). Our work provides novel insights into NN-based channel estimators.
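To make the non-linear distortion mentioned above concrete, here is a minimal sketch of the usual model of a 1-bit ADC, which keeps only the sign of the in-phase and quadrature components of each complex baseband sample. The function name, the sample values, and the choice to map an exact zero to +1 are assumptions for illustration and are not taken from the paper.

```python
def one_bit_adc(samples):
    """Quantize complex samples to one bit per real and imaginary component.

    All amplitude information is discarded; only the signs survive.
    Assumption: a component that is exactly zero is mapped to +1.
    """
    def sign(value):
        return 1.0 if value >= 0 else -1.0

    return [complex(sign(s.real), sign(s.imag)) for s in samples]


# Hypothetical received pilot samples with different amplitudes.
received = [0.3 - 1.2j, -0.05 + 0.4j, 2.0 + 0.7j]
print(one_bit_adc(received))
```

Because every output lies on one of four points regardless of the input amplitude, a channel estimator working from such samples has to recover the channel from sign patterns alone.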
