Mehdi Asadi

A Review on Generative AI Models for Synthetic Medical Text, Time Series, and Longitudinal Data

Nov 19, 2024

Abstract: This paper presents the results of a novel scoping review on the practical models for generating three different types of synthetic health records (SHRs): medical text, time series, and longitudinal data. The innovative aspects of the review, which incorporate study objectives, data modality, and research methodology of the reviewed studies, uncover the importance and the scope of the topic for the digital medicine context. In total, 52 publications met the eligibility criteria for generating medical time series (22), longitudinal data (17), and medical text (13). Privacy preservation was found to be the main research objective of the studied papers, along with class imbalance, data scarcity, and data imputation as the other objectives. The adversarial network-based, probabilistic, and large language models exhibited superiority for generating synthetic longitudinal data, time series, and medical texts, respectively. Finding a reliable performance measure to quantify SHR re-identification risk is the major research gap of the topic.

Learning Activation Functions for Sparse Neural Networks

May 18, 2023

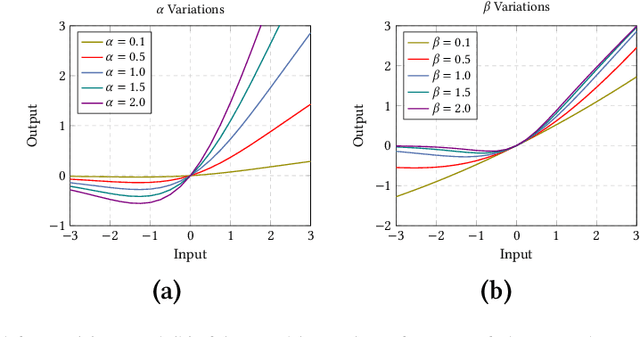

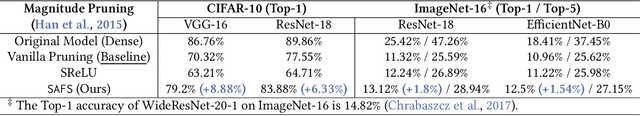

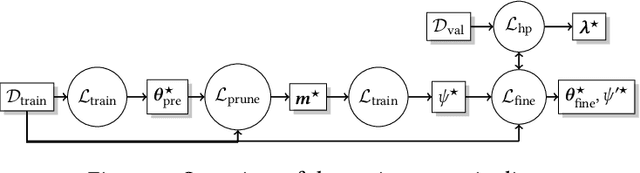

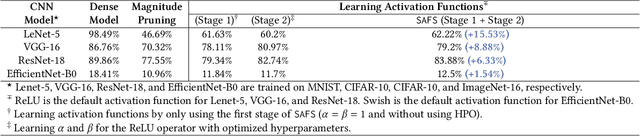

Abstract: Sparse Neural Networks (SNNs) can potentially demonstrate similar performance to their dense counterparts while saving significant energy and memory at inference. However, the accuracy drop incurred by SNNs, especially at high pruning ratios, can be an issue in critical deployment conditions. While recent works mitigate this issue through sophisticated pruning techniques, we shift our focus to an overlooked factor: hyperparameters and activation functions. Our analyses have shown that the accuracy drop can additionally be attributed to (i) using ReLU as the default activation function everywhere, and (ii) fine-tuning SNNs with the same hyperparameters as their dense counterparts. Thus, we focus on learning a novel way to tune activation functions for sparse networks and combining these with a separate hyperparameter optimization (HPO) regime for sparse networks. By conducting experiments on popular DNN models (LeNet-5, VGG-16, ResNet-18, and EfficientNet-B0) trained on MNIST, CIFAR-10, and ImageNet-16 datasets, we show that the novel combination of these two approaches, dubbed Sparse Activation Function Search (SAFS), results in up to 15.53%, 8.88%, and 6.33% absolute improvement in accuracy for LeNet-5, VGG-16, and ResNet-18, respectively, over the default training protocols, especially at high pruning ratios. Our code can be found at https://github.com/automl/SAFS
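
To make the term "pruning ratio" concrete, the following is a minimal sketch of unstructured magnitude pruning on a flat list of weights. It is an illustration only and is not taken from the SAFS code; the function names `magnitude_prune` and `sparsity` and the example weights are hypothetical, and the abstract does not state which pruning method the authors use.

```python
def magnitude_prune(weights, ratio):
    """Zero out the `ratio` fraction of weights with the smallest magnitude.

    Hypothetical helper for illustration; not part of the SAFS repository.
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be in [0, 1]")
    n_prune = int(len(weights) * ratio)
    # Indices sorted by ascending |w|; the first n_prune entries are removed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


def sparsity(weights):
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


# Made-up example weights, pruned at a ratio of 0.5.
dense = [0.8, -0.05, 0.3, -0.9, 0.01, 0.2, -0.4, 0.07, 0.6, -0.02]
sparse = magnitude_prune(dense, 0.5)
print(sparse)
print(sparsity(sparse))
```

At a ratio of 0.5 half of the weights are set to zero; higher ratios remove more of the network, which is the regime where the abstract reports the largest accuracy drops.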

Accurate Detection of Paroxysmal Atrial Fibrillation with Certified-GAN and Neural Architecture Search

Jan 17, 2023

Abstract: This paper presents a novel machine learning framework for detecting Paroxysmal Atrial Fibrillation (PxAF), a pathological characteristic of Electrocardiogram (ECG) that can lead to fatal conditions such as heart attack. To enhance the learning process, the framework involves a Generative Adversarial Network (GAN) along with a Neural Architecture Search (NAS) in the data preparation and classifier optimization phases. The GAN is innovatively invoked to overcome the class imbalance of the training data by producing the synthetic ECG for PxAF class in a certified manner. The effect of the certified GAN is statistically validated. Instead of using a general-purpose classifier, the NAS automatically designs a highly accurate convolutional neural network architecture customized for the PxAF classification task. Experimental results show that the accuracy of the proposed framework exhibits a high value of 99% which not only enhances state-of-the-art by up to 5.1%, but also improves the classification performance of the two widely-accepted baseline methods, ResNet-18, and Auto-Sklearn, by 2.2% and 6.1%.
