Md Tasnimul Hasan

Assessing Uncertainty Estimation Methods for 3D Image Segmentation under Distribution Shifts

Feb 10, 2024

Abstract:In recent years, machine learning has witnessed extensive adoption across various sectors, yet its application in medical image-based disease detection and diagnosis remains challenging due to distribution shifts in real-world data. In practical settings, deployed models encounter samples that differ significantly from the training dataset, especially in the health domain, leading to potential performance issues. This limitation hinders the expressiveness and reliability of deep learning models in health applications. Thus, it becomes crucial to identify methods capable of producing reliable uncertainty estimates under distribution shifts in the health sector. In this paper, we explore the feasibility of using cutting-edge Bayesian and non-Bayesian methods to detect distributionally shifted samples, aiming to achieve reliable and trustworthy diagnostic predictions in segmentation tasks. Specifically, we compare three distinct uncertainty estimation methods, each designed to capture either unimodal or multimodal aspects of the posterior distribution. Our findings demonstrate that methods capable of addressing multimodal characteristics in the posterior distribution offer more dependable uncertainty estimates. This research contributes to enhancing the utility of deep learning in healthcare, making diagnostic predictions more robust and trustworthy.
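As a rough illustration of how an uncertainty score can flag a distributionally shifted sample, the sketch below computes the predictive entropy of the averaged class probabilities from several models for each voxel, then thresholds the mean over a volume. The abstract does not name the three methods compared, so the ensemble-style averaging, the function names `predictive_entropy` and `flag_shifted`, and the threshold value are all assumptions made for this example, not the paper's implementation.

```python
import math


def predictive_entropy(member_probs):
    """Entropy of the mean class distribution across ensemble members.

    member_probs: per-member class probabilities for ONE voxel, e.g.
    [[0.9, 0.1], [0.2, 0.8]] for two members and two classes.
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    # Average the members' probabilities class by class.
    mean = [sum(m[c] for m in member_probs) / n_members for c in range(n_classes)]
    # Skip zero-probability classes, which contribute nothing to the entropy.
    return -sum(p * math.log(p) for p in mean if p > 0.0)


def flag_shifted(volume_probs, threshold):
    """Score a volume by its mean voxel entropy (hypothetical decision rule).

    volume_probs: list over voxels, each entry shaped like member_probs above.
    Returns (score, is_shifted).
    """
    scores = [predictive_entropy(v) for v in volume_probs]
    score = sum(scores) / len(scores)
    return score, score > threshold
```

When the members agree on a voxel the entropy is zero, and when they split evenly between two classes it reaches ln 2, so disagreement between modes of the posterior shows up directly in the score.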

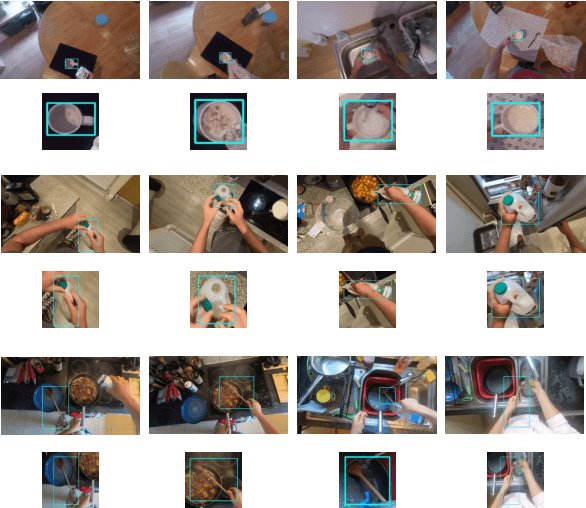

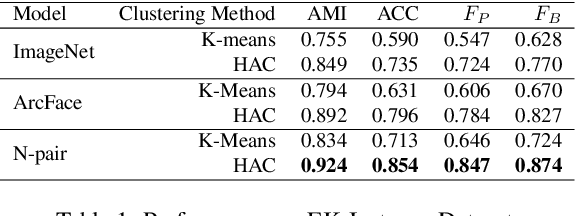

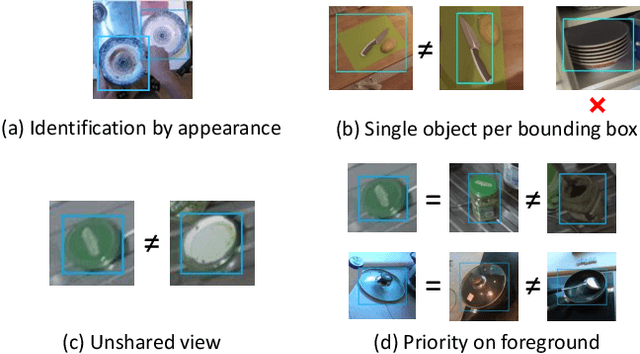

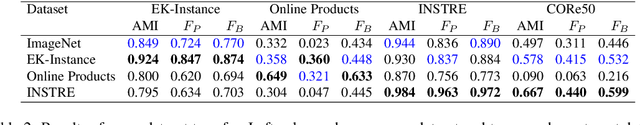

Object Instance Identification in Dynamic Environments

Jun 10, 2022

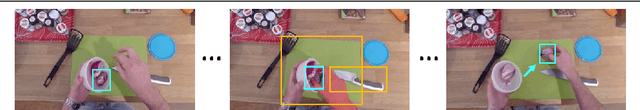

Abstract:We study the problem of identifying object instances in a dynamic environment where people interact with the objects. In such an environment, an object's appearance changes dynamically through interaction with other entities, occlusion by hands, background change, etc. This leads to a larger intra-instance variation of appearance than in static environments. To discover the challenges in this setting, we built a new benchmark of more than 1,500 instances on top of the EPIC-KITCHENS dataset, which includes natural activities, and conducted an extensive analysis of it. Experimental results suggest that (i) robustness against instance-specific appearance change, (ii) integration of low-level (e.g., color, texture) and high-level (e.g., object category) features, and (iii) foreground feature selection on overlapping objects are required for further improvement.

Hand-Object Contact Prediction via Motion-Based Pseudo-Labeling and Guided Progressive Label Correction

Oct 19, 2021

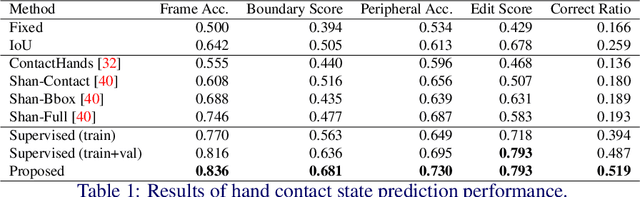

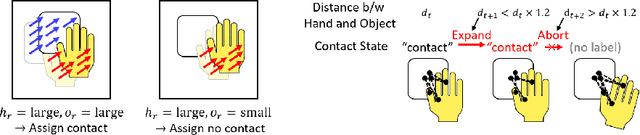

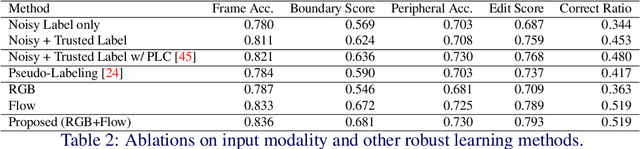

Abstract:Every hand-object interaction begins with contact. Although predicting the contact state between hands and objects is useful for understanding hand-object interactions, prior work on hand-object analysis has assumed that the interacting hands and objects are known, and contact prediction itself has not been studied in detail. In this study, we introduce a video-based method for predicting contact between a hand and an object. Specifically, given a video and a pair of hand and object tracks, we predict a binary contact state (contact or no-contact) for each frame. However, annotating a large number of hand-object tracks and contact labels is costly. To overcome this difficulty, we propose a semi-supervised framework consisting of (i) automatic collection of training data with motion-based pseudo-labels and (ii) guided progressive label correction (gPLC), which corrects noisy pseudo-labels with a small amount of trusted data. We validated our framework's effectiveness on a newly built benchmark dataset for hand-object contact prediction and showed superior performance against existing baseline methods. Code and data are available at https://github.com/takumayagi/hand_object_contact_prediction.
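The idea of correcting noisy per-frame pseudo-labels can be sketched with a generic confidence-thresholded rule: a frame's label is overwritten only when a classifier is confident about the contact state, and is otherwise kept. This is a simplified stand-in and not gPLC as published; the function name `correct_pseudo_labels`, the `threshold` parameter, and the assumption that `contact_probs` comes from a model informed by trusted data are all hypothetical.

```python
def correct_pseudo_labels(pseudo_labels, contact_probs, threshold=0.9):
    """Overwrite binary pseudo-labels where the model is confident.

    pseudo_labels: per-frame labels, 1 = contact, 0 = no-contact.
    contact_probs: per-frame predicted probability of contact (assumed input).
    threshold: confidence needed to overwrite a label (assumed value).
    """
    corrected = []
    for label, p in zip(pseudo_labels, contact_probs):
        if p >= threshold:
            corrected.append(1)        # confident contact
        elif p <= 1.0 - threshold:
            corrected.append(0)        # confident no-contact
        else:
            corrected.append(label)    # uncertain: keep the pseudo-label
    return corrected
```

For example, `correct_pseudo_labels([0, 1, 1, 0], [0.95, 0.05, 0.6, 0.4])` flips the first two frames and leaves the last two untouched. A progressive scheme would repeat this step while gradually relaxing the threshold.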
