Md Tahmid Hossain

Anti-aliasing Deep Image Classifiers using Novel Depth Adaptive Blurring and Activation Function

Oct 03, 2021

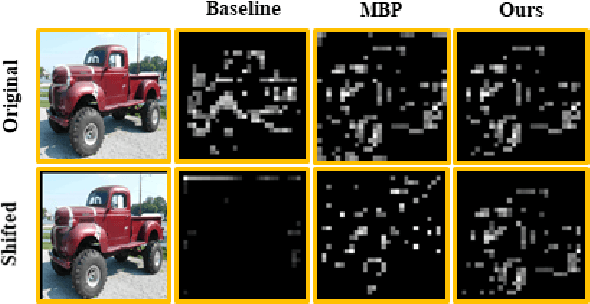

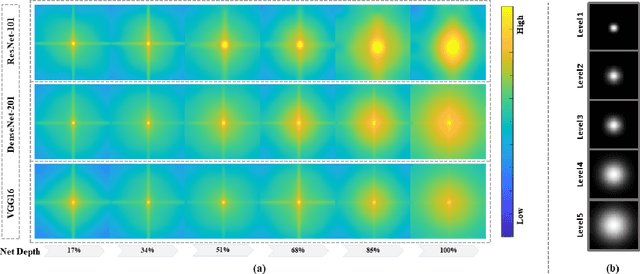

Abstract: Deep convolutional networks are vulnerable to image translation or shift, partly due to common down-sampling layers, e.g., max-pooling and strided convolution. These operations violate the Nyquist sampling rate and cause aliasing. The textbook solution is low-pass filtering (blurring) before down-sampling, which can benefit deep networks as well. Even so, non-linearity units, such as ReLU, often re-introduce the problem, suggesting that blurring alone may not suffice. In this work, first, we analyse deep features with Fourier transform and show that Depth Adaptive Blurring is more effective, as opposed to monotonic blurring. To this end, we outline how this can replace existing down-sampling methods. Second, we introduce a novel activation function -- with a built-in low-pass filter -- to keep the problem from reappearing. From experiments, we observe generalisation on other forms of transformations and corruptions as well, e.g., rotation, scale, and noise. We evaluate our method under three challenging settings: (1) a variety of image translations; (2) adversarial attacks -- both $\ell_{p}$ bounded and unbounded; and (3) data corruptions and perturbations. In each setting, our method achieves state-of-the-art results and improves clean accuracy on various benchmark datasets.
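
The "textbook solution" mentioned in the abstract (blur, then down-sample) can be illustrated with a minimal NumPy sketch. This is not the paper's Depth Adaptive Blurring: the fixed `[1, 2, 1] / 4` kernel, the circular padding, and the function names `naive_downsample` and `blur_then_downsample` are assumptions chosen only for illustration. It shows how a one-sample shift changes a naively subsampled signal but not a blurred one.

```python
import numpy as np

def naive_downsample(x, stride=2):
    """Keep every `stride`-th sample with no filtering (aliasing-prone)."""
    return x[::stride]

def blur_then_downsample(x, stride=2):
    """Low-pass filter with an assumed [1, 2, 1]/4 kernel, then subsample."""
    kernel = np.array([1.0, 2.0, 1.0]) / 4.0
    # Circular padding (an illustrative choice) avoids border effects.
    padded = np.concatenate([x[-1:], x, x[:1]])
    blurred = np.convolve(padded, kernel, mode="valid")
    return blurred[::stride]

# Alternating 0/1 pattern: the highest frequency a sampled signal can hold.
signal = np.array([0.0, 1.0] * 8)
shifted = np.roll(signal, 1)  # the same signal translated by one sample

# Largest change in the down-sampled output caused by the one-sample shift.
naive_gap = np.abs(naive_downsample(signal) - naive_downsample(shifted)).max()
blur_gap = np.abs(blur_then_downsample(signal) - blur_then_downsample(shifted)).max()

print("naive gap:", naive_gap)
print("blurred gap:", blur_gap)
```

In this toy case the naive output flips completely under the shift, while the blurred output is unchanged, which is the shift sensitivity the abstract attributes to aliasing.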

A novel network training approach for open set image recognition

Sep 27, 2021

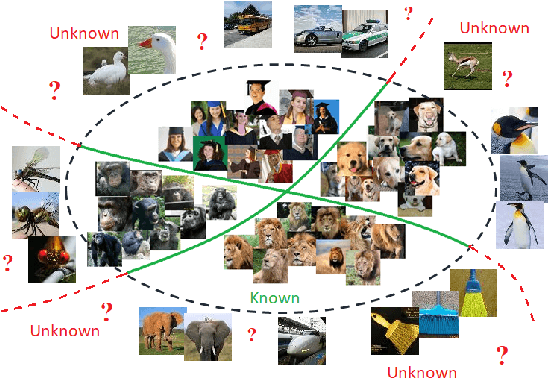

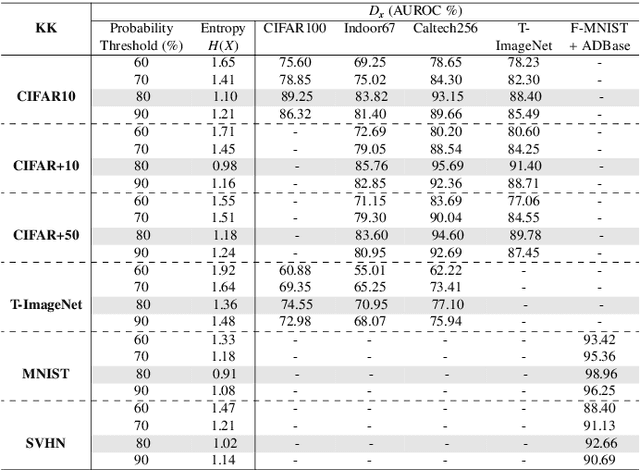

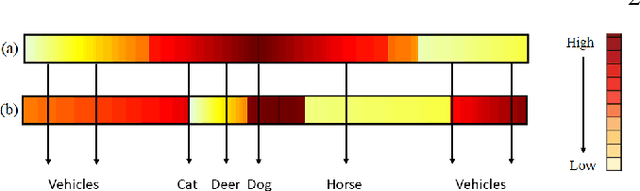

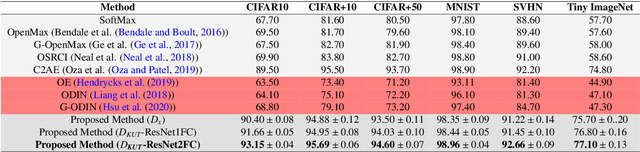

Abstract: Convolutional Neural Networks (CNNs) are commonly designed for closed set arrangements, where test instances only belong to some "Known Known" (KK) classes used in training. As such, they predict a class label for a test sample based on the distribution of the KK classes. However, when used under the Open Set Recognition (OSR) setup (where an input may belong to an "Unknown Unknown" or UU class), such a network will always classify a test instance as one of the KK classes even if it is from a UU class. As a solution, recently, data augmentation based on Generative Adversarial Networks (GAN) has been used. In this work, we propose a novel approach for mining a "Known Unknown Trainer" or KUT set and design a deep OSR Network (OSRNet) to harness this dataset. The goal is to teach OSRNet the essence of the UUs through the KUT set, which is effectively a collection of mined "hard Known Unknown negatives". Once trained, OSRNet can detect the UUs while maintaining high classification accuracy on KKs. We evaluate OSRNet on six benchmark datasets and demonstrate that it outperforms contemporary OSR methods.

Robust Image Classification Using A Low-Pass Activation Function and DCT Augmentation

Jul 18, 2020

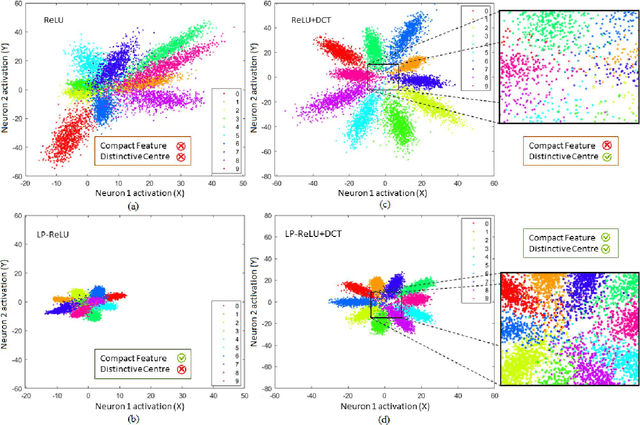

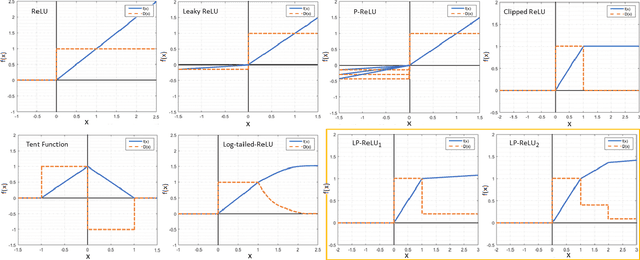

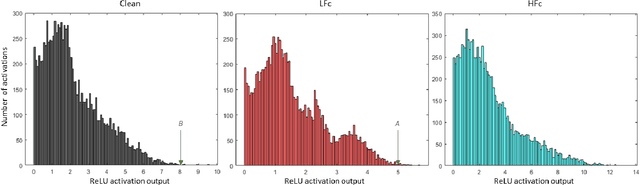

Abstract: The performance disparity of Convolutional Neural Networks (CNNs) on clean and corrupted datasets has recently been noticed. In this work, we analyse common corruptions from a frequency perspective, i.e., High Frequency corruptions or HFc (e.g., noise) and Low Frequency corruptions or LFc (e.g., blur). A common signal processing solution to HFc is low-pass filtering. Intriguingly, the de-facto Activation Function (AF) used in modern CNNs, i.e., ReLU, does not have any filtering mechanism, resulting in unstable performance on HFc. In this work, we propose a family of novel AFs with low-pass filtering to improve robustness against HFc (we call it Low-Pass ReLU or LP-ReLU). To deal with LFc, we further enhance the AFs with Discrete Cosine Transform (DCT) based augmentation. LP-ReLU, coupled with DCT augmentation, enables a deep network to tackle a variety of corruptions. We evaluate our method's performance on CIFAR-10-C and Tiny ImageNet-C datasets and achieve improvements of 5.1% and 7.2% in accuracy respectively compared to the State-Of-The-Art (SOTA). We further evaluate our method's performance stability on a variety of perturbations available in CIFAR-10-P and Tiny ImageNet-P, and also achieve new SOTA results in these experiments. Finally, we devise a decision space visualisation process to further strengthen the understanding of CNNs' lack of robustness against corrupted data.

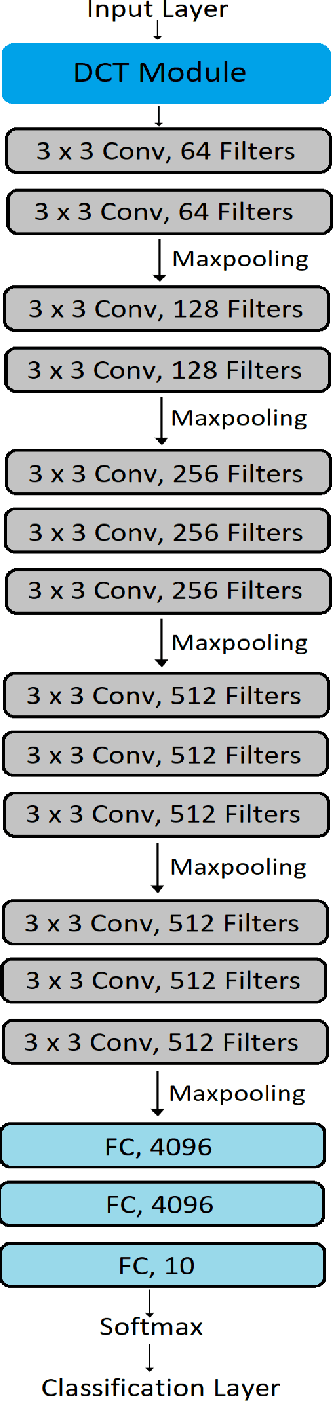

Distortion Robust Image Classification using Deep Convolutional Neural Network with Discrete Cosine Transform

Nov 19, 2018

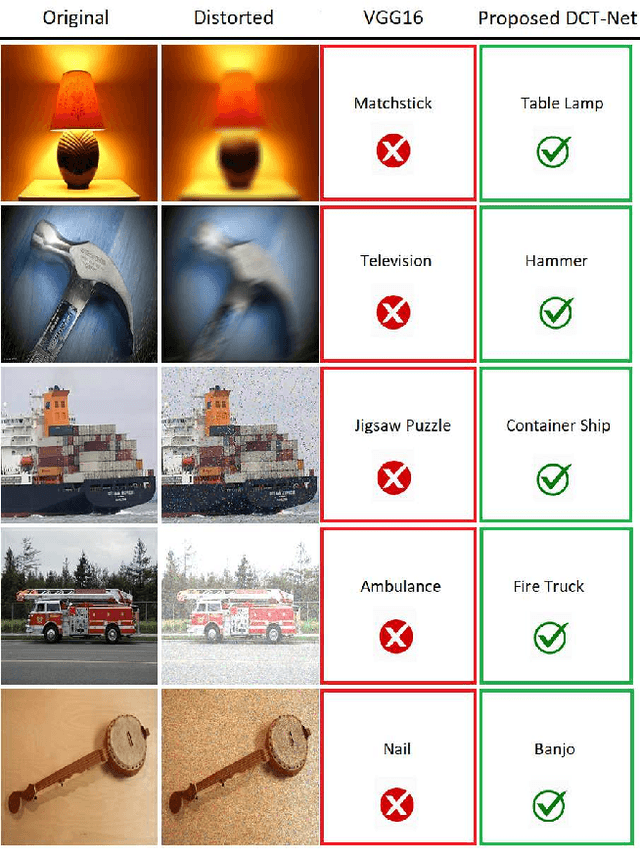

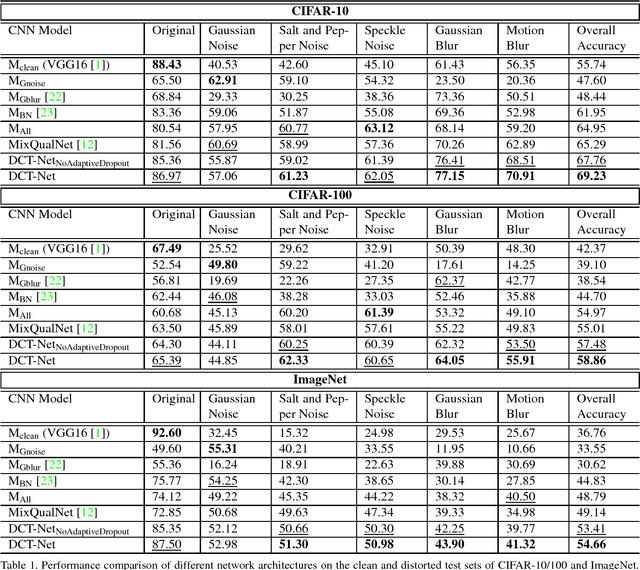

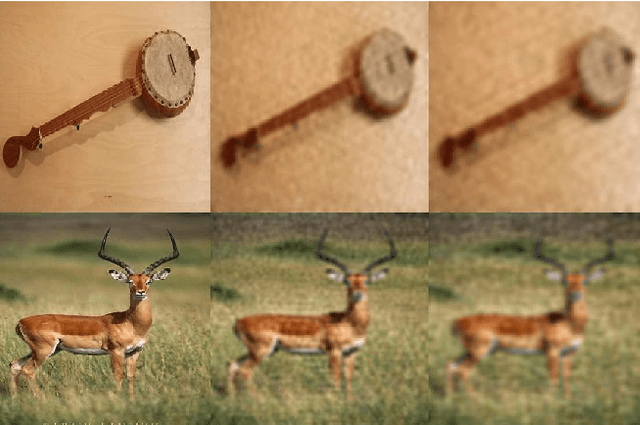

Abstract: Convolutional Neural Networks (CNNs) are good at image classification. However, they are found to be vulnerable to image quality degradation. Even a small amount of distortion such as noise or blur can severely hamper the performance of these CNN architectures. Most of the work in the literature strives to mitigate this problem simply by fine-tuning a pre-trained CNN on mutually exclusive or a union set of distorted training data. This iterative fine-tuning process with all known types of distortion is exhaustive and the network struggles to handle unseen distortions. In this work, we propose distortion robust DCT-Net, a Discrete Cosine Transform based module integrated into a deep network which is built on top of VGG16. Unlike other works in the literature, DCT-Net is "blind" to the distortion type and level in an image both during training and testing. As a part of the training process, the proposed DCT module discards input information which mostly represents the contribution of high frequencies. The DCT-Net is trained "blindly" only once and applied in generic situations without further retraining. We also extend the idea of traditional dropout and present a training adaptive version of the same. We evaluate our proposed method against Gaussian blur, motion blur, salt and pepper noise, Gaussian noise and speckle noise added to CIFAR-10/100 and ImageNet test sets. Experimental results demonstrate that once trained, DCT-Net not only generalizes well to a variety of unseen image distortions but also outperforms other methods in the literature.
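
The idea of discarding high-frequency DCT content can be sketched in a few lines of NumPy. The abstract does not say how the DCT module selects coefficients, so everything here is an assumption for illustration: a square grayscale image, an orthonormal DCT-II built by hand, a square low-frequency mask, and the hypothetical names `dct_matrix`, `dct_low_pass`, and `keep`.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix of size n x n (rows are basis vectors)."""
    k = np.arange(n).reshape(-1, 1)  # frequency index
    i = np.arange(n).reshape(1, -1)  # sample index
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)       # DC row uses a different scale
    return m

def dct_low_pass(image, keep):
    """Zero all but the lowest `keep` x `keep` DCT coefficients.

    Assumes a square 2-D array; the square mask is an illustrative choice,
    not the selection rule used by DCT-Net.
    """
    n = image.shape[0]
    d = dct_matrix(n)
    coeffs = d @ image @ d.T          # forward 2-D DCT
    mask = np.zeros_like(coeffs)
    mask[:keep, :keep] = 1.0          # keep only low frequencies
    return d.T @ (coeffs * mask) @ d  # inverse 2-D DCT

rng = np.random.default_rng(0)
noisy = rng.normal(size=(8, 8))       # stand-in for a noisy image patch
smoothed = dct_low_pass(noisy, keep=4)

print("energy before:", float((noisy ** 2).sum()))
print("energy after:", float((smoothed ** 2).sum()))
```

Because the transform is orthonormal, removing coefficients can only reduce the signal energy, and setting `keep` to the full image size reproduces the input up to floating-point error.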
