Md Asiful Islam

Comparative Assessment of Concrete Compressive Strength Prediction at Industry Scale Using Embedding-based Neural Networks, Transformers, and Traditional Machine Learning Approaches

Jan 14, 2026

Abstract:Concrete is the most widely used construction material worldwide; however, reliable prediction of compressive strength remains challenging due to material heterogeneity, variable mix proportions, and sensitivity to field and environmental conditions. Recent advances in artificial intelligence enable data-driven modeling frameworks capable of supporting automated decision-making in construction quality control. This study leverages an industry-scale dataset consisting of approximately 70,000 compressive strength test records to evaluate and compare multiple predictive approaches, including linear regression, decision trees, random forests, transformer-based neural networks, and embedding-based neural networks. The models incorporate key mixture design and placement variables such as water-cement ratio, cementitious material content, slump, air content, temperature, and placement conditions. Results indicate that the embedding-based neural network consistently outperforms traditional machine learning and transformer-based models, achieving a mean 28-day prediction error of approximately 2.5%. This level of accuracy is comparable to routine laboratory testing variability, demonstrating the potential of embedding-based learning frameworks to enable automated, data-driven quality control and decision support in large-scale construction operations.

Towards Realistic Few-Shot Relation Extraction: A New Meta Dataset and Evaluation

Apr 05, 2024

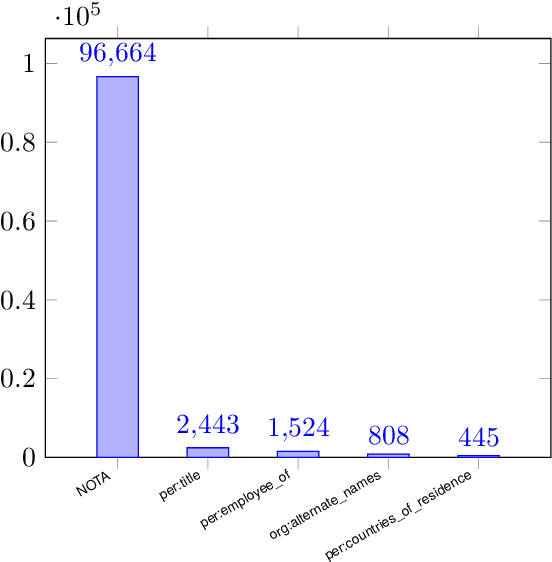

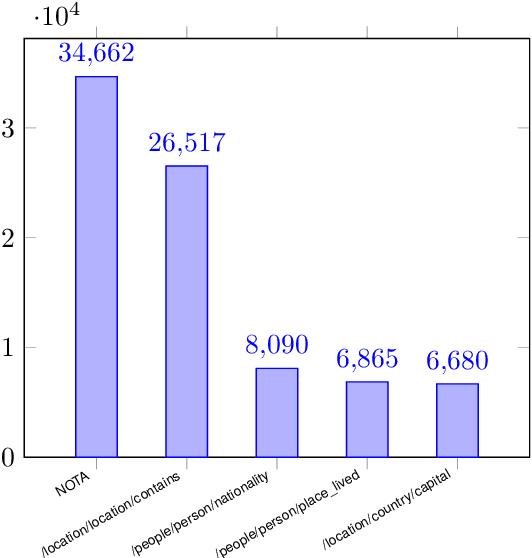

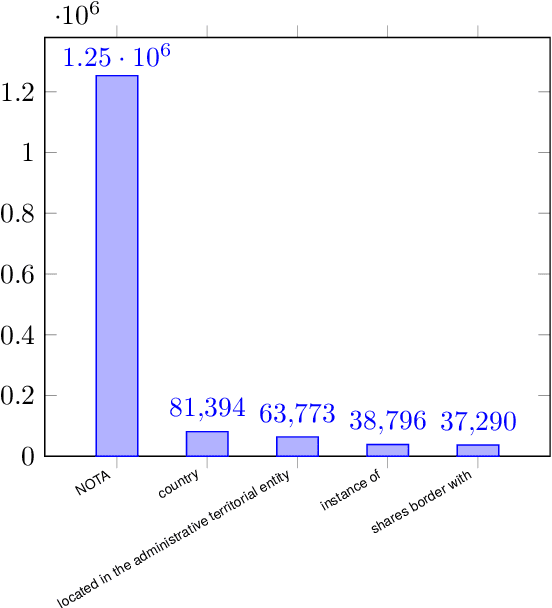

Abstract:We introduce a meta dataset for few-shot relation extraction, which includes two datasets derived from existing supervised relation extraction datasets NYT29 (Takanobu et al., 2019; Nayak and Ng, 2020) and WIKIDATA (Sorokin and Gurevych, 2017) as well as a few-shot form of the TACRED dataset (Sabo et al., 2021). Importantly, all these few-shot datasets were generated under realistic assumptions such as: the test relations are different from any relations a model might have seen before, limited training data, and a preponderance of candidate relation mentions that do not correspond to any of the relations of interest. Using this large resource, we conduct a comprehensive evaluation of six recent few-shot relation extraction methods, and observe that no method comes out as a clear winner. Further, the overall performance on this task is low, indicating substantial need for future research. We release all versions of the data, i.e., both supervised and few-shot, for future research.

Best of Both Worlds: A Pliable and Generalizable Neuro-Symbolic Approach for Relation Classification

Mar 05, 2024

Abstract:This paper introduces a novel neuro-symbolic architecture for relation classification (RC) that combines rule-based methods with contemporary deep learning techniques. This approach capitalizes on the strengths of both paradigms: the adaptability of rule-based systems and the generalization power of neural networks. Our architecture consists of two components: a declarative rule-based model for transparent classification and a neural component to enhance rule generalizability through semantic text matching. Notably, our semantic matcher is trained in an unsupervised domain-agnostic way, solely with synthetic data. Further, these components are loosely coupled, allowing for rule modifications without retraining the semantic matcher. In our evaluation, we focused on two few-shot relation classification datasets: Few-Shot TACRED and a Few-Shot version of NYT29. We show that our proposed method outperforms previous state-of-the-art models in three out of four settings, despite not seeing any human-annotated training data. Further, we show that our approach remains modular and pliable, i.e., the corresponding rules can be locally modified to improve the overall model. Human interventions to the rules for the TACRED relation org:parents boost the performance on that relation by as much as 26% relative improvement, without negatively impacting the other relations, and without retraining the semantic matching component.
