Md Abdur Rahim

Classification of ADHD and Healthy Children Using EEG Based Multi-Band Spatial Features Enhancement

Apr 07, 2025

Abstract: Attention Deficit Hyperactivity Disorder (ADHD) is a common neurodevelopmental disorder in children, characterized by difficulties in attention, hyperactivity, and impulsivity. Early and accurate diagnosis of ADHD is critical for effective intervention and management. Electroencephalogram (EEG) signals have emerged as a non-invasive and efficient tool for ADHD detection due to their high temporal resolution and ability to capture neural dynamics. In this study, we propose a method for classifying ADHD and healthy children using EEG data from a benchmark dataset. The dataset comprises 61 children with ADHD and 60 healthy children, both boys and girls, aged 7 to 12. The EEG signals, recorded from 19 channels, were processed to extract Power Spectral Density (PSD) and Spectral Entropy (SE) features across five frequency bands, resulting in a comprehensive 190-dimensional feature set. In the classification evaluation, a Support Vector Machine (SVM) with an RBF kernel demonstrated the best performance, with a mean cross-validation accuracy of 99.2% and a standard deviation of 0.0079, indicating high robustness and precision. These results highlight the potential of spatial features in conjunction with machine learning for accurately classifying ADHD using EEG data. This work contributes to developing non-invasive, data-driven tools for early diagnosis and assessment of ADHD in children.
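The feature count in the abstract above follows from 19 channels × 5 bands × 2 features (PSD and SE) = 190. The sketch below illustrates that arithmetic with NumPy on synthetic data. The band edges, the 128 Hz sampling rate, the plain periodogram, and the function name `band_features` are all assumptions made for illustration; the abstract does not specify them, and this is not the authors' implementation.

```python
import numpy as np

# Hypothetical band edges in Hz; the abstract only says "five frequency bands".
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def band_features(eeg, fs):
    """Return one band-power and one spectral-entropy value per channel and band.

    eeg: array of shape (n_channels, n_samples)
    fs:  sampling rate in Hz (an assumed value, not taken from the abstract)
    """
    n_samples = eeg.shape[1]
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    # Plain periodogram estimate of the PSD; Welch's method is a common alternative.
    psd = np.abs(np.fft.rfft(eeg, axis=1)) ** 2 / (fs * n_samples)

    features = []
    for low, high in BANDS.values():
        mask = (freqs >= low) & (freqs < high)
        band_psd = psd[:, mask]
        # Mean PSD inside the band, one value per channel.
        mean_power = band_psd.mean(axis=1)
        # Normalize the band's PSD into a probability distribution per channel.
        prob = band_psd / (band_psd.sum(axis=1, keepdims=True) + 1e-12)
        # Shannon entropy of that distribution, one value per channel.
        entropy = -(prob * np.log2(prob + 1e-12)).sum(axis=1)
        features.append(mean_power)
        features.append(entropy)
    return np.concatenate(features)


rng = np.random.default_rng(0)
fs = 128                                   # assumed sampling rate
eeg = rng.standard_normal((19, fs * 4))    # synthetic 4-second, 19-channel segment
feature_vector = band_features(eeg, fs)
print(feature_vector.shape)  # (190,)
```

A vector like this, computed per recording segment, is the kind of input that could then be passed to an RBF-kernel SVM with cross-validation.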

IoT-Based Real-Time Medical-Related Human Activity Recognition Using Skeletons and Multi-Stage Deep Learning for Healthcare

Jan 13, 2025

Abstract: The Internet of Things (IoT) and mobile technology have significantly transformed healthcare by enabling real-time monitoring and diagnosis of patients. Recognizing medical-related human activities (MRHA) is pivotal for healthcare systems, particularly for identifying actions that are critical to patient well-being. However, challenges such as high computational demands, low accuracy, and limited adaptability persist in Human Motion Recognition (HMR). While some studies have integrated HMR with IoT for real-time healthcare applications, limited research has focused on recognizing MRHA, which is essential for effective patient monitoring. This study proposes a novel HMR method for MRHA detection, leveraging multi-stage deep learning techniques integrated with IoT. The approach employs EfficientNet to extract optimized spatial features from skeleton frame sequences using seven Mobile Inverted Bottleneck Convolution (MBConv) blocks, followed by ConvLSTM to capture spatio-temporal patterns. A classification module with global average pooling, a fully connected layer, and a dropout layer generates the final predictions. The model is evaluated on the NTU RGB+D 120 and HMDB51 datasets, focusing on MRHA such as sneezing, falling, walking, and sitting. It achieves 94.85% accuracy for cross-subject evaluations and 96.45% for cross-view evaluations on NTU RGB+D 120, along with 89.00% accuracy on HMDB51. Additionally, the system integrates IoT capabilities using a Raspberry Pi and GSM module, delivering real-time alerts via Twilio's SMS service to caregivers and patients. This scalable and efficient solution bridges the gap between HMR and IoT, advancing patient monitoring, improving healthcare outcomes, and reducing costs.
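The classification module mentioned in the abstract above (global average pooling, a fully connected layer, and dropout) can be sketched in plain NumPy as follows. The layer order, the tensor shapes, the random weights, and the function name `classification_head` are assumptions for illustration only; the abstract does not give these details, and the EfficientNet and ConvLSTM stages are replaced here by a random feature map.

```python
import numpy as np


def softmax(logits):
    # Subtract the row maximum for numerical stability.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def classification_head(feature_maps, weights, bias, drop_rate=0.0, rng=None):
    """Pool, optionally drop, and classify.

    feature_maps: (batch, height, width, channels), standing in for the
                  spatio-temporal features produced by earlier stages.
    weights:      (channels, n_classes) fully connected weights.
    bias:         (n_classes,) fully connected bias.
    drop_rate:    dropout probability; 0.0 disables dropout (inference).
    """
    # Global average pooling over the spatial axes -> (batch, channels).
    pooled = feature_maps.mean(axis=(1, 2))
    if drop_rate > 0.0 and rng is not None:
        # Inverted dropout: zero some units and rescale the rest.
        keep = rng.random(pooled.shape) >= drop_rate
        pooled = pooled * keep / (1.0 - drop_rate)
    logits = pooled @ weights + bias
    return softmax(logits)


rng = np.random.default_rng(0)
feature_maps = rng.standard_normal((2, 7, 7, 16))   # hypothetical shapes
weights = rng.standard_normal((16, 5)) * 0.1        # 5 hypothetical activity classes
bias = np.zeros(5)
probs = classification_head(feature_maps, weights, bias)
print(probs.shape)  # (2, 5)
```

Each row of `probs` is a probability distribution over the hypothetical activity classes; in a deployed system, the predicted class would be what triggers an alert.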

ChatGPT in Research and Education: Exploring Benefits and Threats

Nov 05, 2024

Abstract: In recent years, advanced artificial intelligence technologies, such as ChatGPT, have significantly impacted various fields, including education and research. Developed by OpenAI, ChatGPT is a powerful language model that presents numerous opportunities for students and educators. It offers personalized feedback, enhances accessibility, enables interactive conversations, assists with lesson preparation and evaluation, and introduces new methods for teaching complex subjects. However, ChatGPT also poses challenges to traditional education and research systems. These challenges include the risk of cheating on online exams, the generation of human-like text that may compromise academic integrity, a potential decline in critical thinking skills, and difficulties in assessing the reliability of information generated by AI. This study examines both the opportunities and challenges ChatGPT brings to education from the perspectives of students and educators. Specifically, it explores the role of ChatGPT in helping students develop their subjective skills. To demonstrate its effectiveness, we conducted several subjective experiments using ChatGPT, such as generating solutions from subjective problem descriptions. Additionally, surveys were conducted with students and teachers to gather insights into how ChatGPT supports subjective learning and teaching. The results and analysis of these surveys are presented to highlight the impact of ChatGPT in this context.

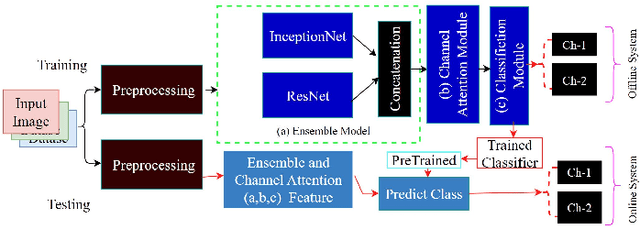

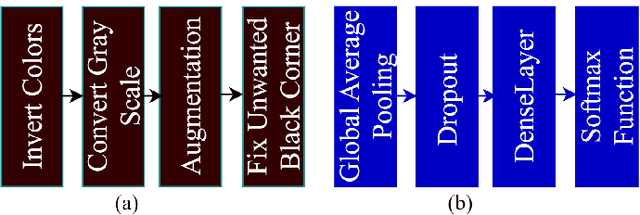

Multichannel Attention Networks with Ensembled Transfer Learning to Recognize Bangla Handwritten Charecter

Aug 20, 2024

Abstract: Bengali is the 5th most spoken native language and the 7th most spoken language in the world, and Bengali handwritten character recognition has attracted researchers for decades. Character recognition research for other languages, such as English, Arabic, Turkish, and Chinese, has contributed significantly to the development of handwriting recognition systems. Comparatively little research has been done on Bengali character recognition because of the similarity among characters, their curvature, and other complexities, although many researchers have applied traditional machine learning and deep learning models to Bengali handwritten recognition. This study employs a convolutional neural network (CNN) with ensemble transfer learning and a multichannel attention network. We generated features from two CNN branches, Inception Net and ResNet, and then produced an ensemble feature fusion by concatenating them. After that, we applied the attention module to produce contextual information from the ensemble features. Finally, we applied a classification module to refine the features and perform classification. We evaluated the proposed model on the CMATERdb 3.1.2 dataset and achieved 92% accuracy on the raw dataset and 98.00% on the preprocessed dataset. We believe that our contribution to the Bengali handwritten character recognition domain will be considered a great development.
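The fusion step in the abstract above (concatenating features from two branches, then applying an attention module) can be sketched roughly as below. The abstract does not say which attention mechanism is used, so the squeeze-and-excitation-style gate here is a swapped-in stand-in, and the dimensions, random weights, and the name `fuse_with_attention` are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def fuse_with_attention(feat_a, feat_b, w_reduce, w_expand):
    """Concatenate two branch features and reweight them with a learned gate.

    feat_a, feat_b: (batch, dim_a) and (batch, dim_b) branch outputs,
                    standing in for the Inception and ResNet features.
    w_reduce:       (dim_a + dim_b, reduced) bottleneck weights.
    w_expand:       (reduced, dim_a + dim_b) expansion weights.
    """
    # Ensemble feature fusion by concatenation.
    fused = np.concatenate([feat_a, feat_b], axis=-1)
    # Bottleneck with ReLU, then a sigmoid gate in (0, 1) for each fused feature.
    hidden = np.maximum(fused @ w_reduce, 0.0)
    gate = sigmoid(hidden @ w_expand)
    # Element-wise reweighting of the fused features.
    return fused * gate


rng = np.random.default_rng(0)
batch, dim_a, dim_b, reduced = 4, 8, 6, 5            # hypothetical sizes
feat_a = rng.standard_normal((batch, dim_a))
feat_b = rng.standard_normal((batch, dim_b))
w_reduce = rng.standard_normal((dim_a + dim_b, reduced)) * 0.1
w_expand = rng.standard_normal((reduced, dim_a + dim_b)) * 0.1
attended = fuse_with_attention(feat_a, feat_b, w_reduce, w_expand)
print(attended.shape)  # (4, 14)
```

The gate lets the model emphasize whichever branch's features are more informative for a given sample before the classification module sees them.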

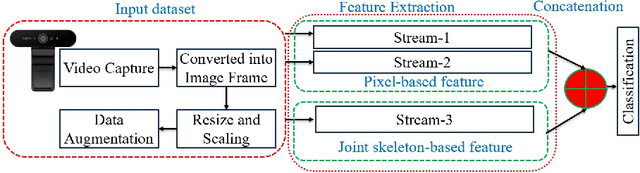

An Advanced Deep Learning Based Three-Stream Hybrid Model for Dynamic Hand Gesture Recognition

Aug 15, 2024

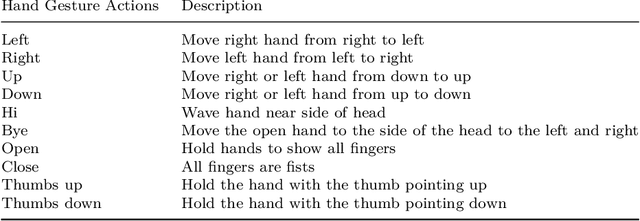

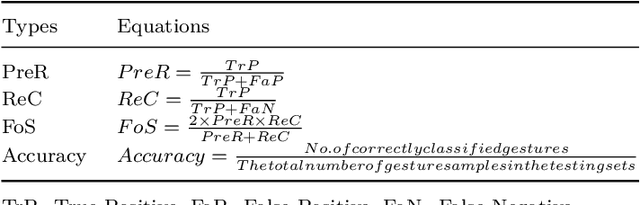

Abstract: Hand gesture recognition has emerged as a focal point of research because of its wide range of applications, which include sign language comprehension, factory settings, hands-free devices, and robot guidance. Many researchers have attempted to develop more effective techniques for recognizing hand gestures. However, challenges remain, such as dataset limitations, variations in hand forms, external environments, and inconsistent lighting conditions. To address these challenges, we propose a novel three-stream hybrid model that combines RGB pixel and skeleton-based features to recognize hand gestures. We first preprocessed the dataset, including augmentation, to make the system invariant to rotation, translation, and scaling. We then employed a three-stream hybrid model to extract a multi-feature fusion using deep learning modules. In the first stream, we extracted the initial feature using the pre-trained ImageNet module and then enhanced it using multiple layers of GRU and LSTM modules. In the second stream, we extracted the initial feature with the pre-trained ResNet module and enhanced it with various combinations of GRU and LSTM modules. In the third stream, we extracted the hand pose key points using MediaPipe and then enhanced them using a stacked LSTM to produce a hierarchical feature. After that, we concatenated the three features to produce the final feature. Finally, we employed a classification module to produce a probabilistic map and generate the predicted output. In short, we produced a powerful feature vector by combining the pixel-based deep learning feature with the pose-estimation-based stacked deep learning feature, pairing pre-trained models with a deep learning model trained from scratch for unequalled gesture detection capabilities.
