Maxim Vochten

Enhancing Hand Palm Motion Gesture Recognition by Eliminating Reference Frame Bias via Frame-Invariant Similarity Measures

Mar 14, 2025

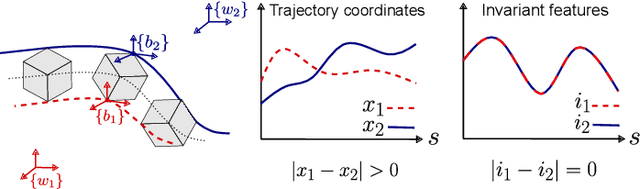

Abstract: The ability of robots to recognize human gestures facilitates natural and accessible human-robot collaboration. However, most work in gesture recognition remains rooted in reference frame-dependent representations. This poses a challenge when reference frames vary due to different work cell layouts, imprecise frame calibrations, or other environmental changes. This paper investigates the use of invariant trajectory descriptors for robust hand palm motion gesture recognition under reference frame changes. First, a novel dataset of recorded Hand Palm Motion (HPM) gestures is introduced. The motion gestures in this dataset were specifically designed to be distinguishable without dependence on specific reference frames or directional cues. Next, multiple invariant trajectory descriptor approaches are benchmarked to assess how well their performance generalizes to this novel HPM dataset. After this offline benchmarking, the best-scoring approach is validated for online recognition by developing a real-time Proof of Concept (PoC). In this PoC, hand palm motion gestures are used to control the real-time movement of a manipulator arm. The PoC demonstrated high recognition reliability in real-time operation, achieving an $F_1$-score of 92.3%. This work demonstrates the effectiveness of the invariant descriptor approach as a standalone solution. Moreover, we believe that the invariant descriptor approach can also be used within other state-of-the-art pattern recognition and learning systems to improve their robustness against reference frame variations.
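To make the notion of a frame-invariant descriptor concrete, here is a minimal sketch, assuming a hand palm trajectory sampled as 3D points at a fixed time step. It computes the classic Frenet-Serret curvature and torsion by finite differences (a textbook invariant, not one of the descriptor approaches benchmarked in the paper) and checks that re-expressing the trajectory in another reference frame leaves both unchanged. All helper names are hypothetical.

```python
import math

# Small 3D vector helpers (plain Python lists).
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def derivatives(points, dt):
    """Central finite differences for the 1st, 2nd and 3rd derivatives at interior samples."""
    d1, d2, d3 = [], [], []
    for i in range(2, len(points) - 2):
        pm2, pm1, p0, pp1, pp2 = points[i - 2:i + 3]
        d1.append([(b - a) / (2 * dt) for a, b in zip(pm1, pp1)])
        d2.append([(b - 2 * c + a) / dt ** 2 for a, c, b in zip(pm1, p0, pp1)])
        d3.append([(e - 2 * b + 2 * a - d) / (2 * dt ** 3) for d, a, b, e in zip(pm2, pm1, pp1, pp2)])
    return d1, d2, d3

def curvature_torsion(points, dt):
    """Frenet-Serret curvature and torsion: both unchanged by rotations and translations."""
    kappa, tau = [], []
    for v, a, j in zip(*derivatives(points, dt)):
        c = cross(v, a)
        kappa.append(math.sqrt(dot(c, c)) / math.sqrt(dot(v, v)) ** 3)
        tau.append(dot(c, j) / dot(c, c))
    return kappa, tau

def rigid_transform(points, angle, offset):
    """Apply R = Rx(angle) @ Rz(angle) and then a translation, i.e. a change of reference frame."""
    c, s = math.cos(angle), math.sin(angle)
    R = [[c, -s, 0.0], [c * s, c * c, -s], [s * s, s * c, c]]
    return [[dot(row, p) + o for row, o in zip(R, offset)] for p in points]

# Usage: a helix with known curvature 0.8 and torsion 0.4, seen from two frames.
dt = 0.01
helix = [[math.cos(k * dt), math.sin(k * dt), 0.5 * k * dt] for k in range(400)]
moved = rigid_transform(helix, 0.7, [1.0, -2.0, 3.0])
k1, t1 = curvature_torsion(helix, dt)
k2, t2 = curvature_torsion(moved, dt)
print(max(abs(a - b) for a, b in zip(k1 + t1, k2 + t2)))  # rounding-level difference only
```

Raw finite differences amplify measurement noise, and torsion is undefined where the curvature vanishes (for example on straight segments), so a practical recognizer would need a more robust estimation scheme than this sketch.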

BILTS: A novel bi-invariant local trajectory-shape descriptor for rigid-body motion

May 07, 2024

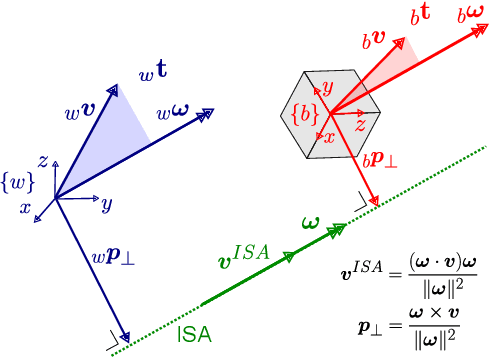

Abstract: Measuring the similarity between motions and established motion models is crucial for motion analysis, recognition, generation, and adaptation. To enhance similarity measurement across diverse contexts, invariant motion descriptors have been proposed. However, for rigid-body motion, few invariant descriptors exist that are bi-invariant, meaning invariant to both the body and world reference frames used to describe the motion. Moreover, their robustness to singularities is limited. This paper introduces a novel Bi-Invariant Local Trajectory-Shape descriptor (BILTS) and a corresponding dissimilarity measure. Mathematical relationships between BILTS and existing descriptors are derived, providing new insights into their properties. The paper also includes an algorithm to reproduce the motion from the BILTS descriptor, demonstrating its bidirectionality and usefulness for trajectory generation. Experimental validation using datasets of daily-life activities shows the higher robustness of the BILTS descriptor compared to the bi-invariant ISA descriptor. This higher robustness supports the further application of bi-invariant descriptors for motion recognition and generalization.
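Bi-invariance can be illustrated with a much simpler quantity than BILTS. The sketch below (an illustration under stated assumptions, not the BILTS descriptor or its dissimilarity measure) takes the relative displacement between consecutive poses and extracts its rotation angle and its translation along the screw axis. Changing the world frame leaves the relative displacement unchanged, and changing the body frame only conjugates it, so both numbers are invariant to both frames. The poses, frames, and helper names are hypothetical.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def matvec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]

def transpose(A):
    return [list(row) for row in zip(*A)]

def rot(axis, angle):
    """Rodrigues formula for a rotation about a unit axis."""
    x, y, z = axis
    c, s = math.cos(angle), math.sin(angle)
    C = 1.0 - c
    return [[c + x * x * C, x * y * C - z * s, x * z * C + y * s],
            [y * x * C + z * s, c + y * y * C, y * z * C - x * s],
            [z * x * C - y * s, z * y * C + x * s, c + z * z * C]]

def compose(T1, T2):
    """Product T1 * T2 of poses stored as (R, p)."""
    (R1, p1), (R2, p2) = T1, T2
    return matmul(R1, R2), [a + b for a, b in zip(matvec(R1, p2), p1)]

def relative_screw(Ta, Tb):
    """Rotation angle and translation along the screw axis of the displacement Ta^-1 * Tb."""
    (Ra, pa), (Rb, pb) = Ta, Tb
    RaT = transpose(Ra)
    R = matmul(RaT, Rb)
    t = matvec(RaT, [b - a for a, b in zip(pa, pb)])
    cos_theta = (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0
    theta = math.acos(max(-1.0, min(1.0, cos_theta)))
    s2 = 2.0 * math.sin(theta)  # singular when theta -> 0 (pure translation) or theta -> pi
    w = [(R[2][1] - R[1][2]) / s2, (R[0][2] - R[2][0]) / s2, (R[1][0] - R[0][1]) / s2]
    return theta, sum(wi * ti for wi, ti in zip(w, t))

# Usage: a synthetic trajectory re-expressed with a hypothetical world frame W and body frame B.
traj = [(rot([0.0, 0.6, 0.8], 0.1 * k), [0.05 * k, 0.2 * math.sin(0.3 * k), 0.0]) for k in range(20)]
W = (rot([1.0, 0.0, 0.0], 0.9), [2.0, -1.0, 0.5])
B = (rot([0.0, 0.0, 1.0], -0.4), [0.1, 0.3, -0.2])
moved = [compose(compose(W, T), B) for T in traj]
desc = [relative_screw(a, b) for a, b in zip(traj, traj[1:])]
desc_moved = [relative_screw(a, b) for a, b in zip(moved, moved[1:])]
```

The division by $2\sin\theta$ shows where such a naive descriptor breaks down: as consecutive poses approach a pure translation, the screw axis becomes ill-defined. This is one example of the kind of singularity that limits the robustness of bi-invariant descriptors.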

Automatic Derivation of an Optimal Task Frame for Learning and Controlling Contact-Rich Tasks

Apr 03, 2024

Abstract: This study investigates learning from demonstration (LfD) for contact-rich tasks. The procedure for choosing a task frame in which to express the learned signals for the motion and interaction wrench is often omitted or relies on expert insight. This article presents a procedure to derive the optimal task frame from motion and wrench data recorded during the demonstration. The procedure is based on two principles that are hypothesized to underpin the control configuration targeted by an expert, and it assumes task frame origins and orientations that are fixed to either the world or the robot tool. It is rooted in screw theory, is entirely probabilistic, and does not involve any hyperparameters. The procedure was validated by demonstrating several tasks, including surface following and manipulation of articulated objects, showing good agreement between the obtained and the assumed expert task frames. To validate the performance of the learned tasks on a UR10e robot, a constraint-based controller was designed based on the derived task frames and the learned data expressed therein. These experiments showed the effectiveness and versatility of the proposed approach. The task frame derivation approach fills a gap in the state of the art of LfD, bringing LfD for contact-rich tasks closer to practical application.

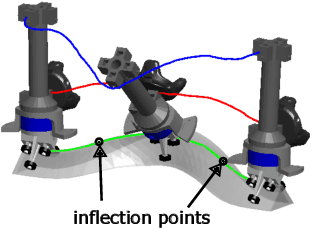

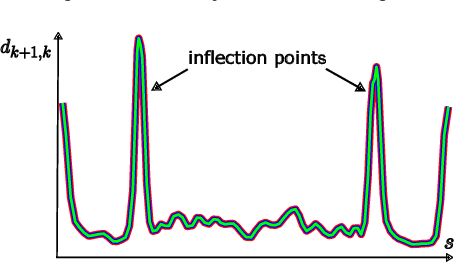

Enhancing motion trajectory segmentation of rigid bodies using a novel screw-based trajectory-shape representation

Sep 20, 2023

Abstract: Trajectory segmentation refers to dividing a trajectory into meaningful consecutive sub-trajectories. This paper focuses on trajectory segmentation for 3D rigid-body motions. Most segmentation approaches in the literature represent the body's trajectory as a point trajectory, considering only its translation and neglecting its rotation. We propose a novel trajectory representation for rigid-body motions that incorporates both translation and rotation, and additionally exhibits several invariant properties. This representation consists of a geometric progress rate and a third-order trajectory-shape descriptor. Concepts from screw theory were used to make this representation time-invariant and also invariant to the choice of body reference point. This new representation is validated in a self-supervised segmentation approach, both in simulation and using real recordings of human-demonstrated pouring motions. The results show a more robust detection of consecutive submotions with distinct features and a more consistent segmentation compared to conventional representations. We believe that other existing segmentation methods may benefit from using this trajectory representation to improve their invariance.
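Time invariance in its simplest form can be sketched by reparameterizing a trajectory by its progress along the path instead of by time. The helper below is hypothetical and deliberately simplified: it uses only the translational arc length of a point trajectory, whereas the representation described above uses a screw-based geometric progress rate that also accounts for rotation. It resamples a path at `n` (at least 2) points equally spaced in arc length, so the same path executed at different speeds yields the same samples.

```python
import bisect
import math

def arc_length_resample(points, n):
    """Resample a sampled path at n >= 2 points equally spaced in cumulative arc length.

    Only translational arc length is used here; rotation is ignored.
    """
    s = [0.0]
    for p, q in zip(points, points[1:]):
        s.append(s[-1] + math.dist(p, q))
    total = s[-1]
    resampled = []
    for k in range(n):
        target = total * k / (n - 1)
        # Segment index i such that s[i] <= target <= s[i + 1].
        i = min(max(bisect.bisect_right(s, target) - 1, 0), len(s) - 2)
        seg = s[i + 1] - s[i]
        frac = (target - s[i]) / seg if seg > 0 else 0.0  # guard against zero-length segments
        resampled.append([a + frac * (b - a) for a, b in zip(points[i], points[i + 1])])
    return resampled

# Usage: the same straight path executed at constant speed and with acceleration.
uniform = [[k / 100, 0.0, 0.0] for k in range(101)]
accelerating = [[(k / 100) ** 2, 0.0, 0.0] for k in range(101)]
res_uniform = arc_length_resample(uniform, 5)
res_accel = arc_length_resample(accelerating, 5)
print([round(p[0], 6) for p in res_uniform])
print([round(p[0], 6) for p in res_accel])  # both approximately [0.0, 0.25, 0.5, 0.75, 1.0]
```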

Invariant Descriptors of Motion and Force Trajectories for Interpreting Object Manipulation Tasks in Contact

Jun 19, 2023

Abstract: Invariant descriptors of point and rigid-body motion trajectories have been proposed in the past as representative task models for motion recognition and generalization. Currently, no invariant descriptor exists for representing force trajectories, which appear in contact tasks. This paper introduces invariant descriptors for force trajectories by exploiting the duality between motion and force. Two types of invariant descriptors are presented, depending on whether the trajectories consist of screw coordinates or vector coordinates. Methods and software are provided for robustly calculating the invariant descriptors from noisy measurements using optimal control. Using experimental human demonstrations of a 3D contour following task, invariant descriptors are shown to result in task representations that do not depend on the calibration of reference frames or sensor locations. Tuning of the optimal control problems is shown to be fast and intuitive. As for motions in free space, the proposed invariant descriptors for motion and force trajectories may prove useful for the recognition and generalization of constrained motions, such as during object manipulation in contact.
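The duality between motion and force can be made tangible with a standard screw-theory computation. A wrench, given by a force $f$ and a moment $m$ about some reference point, has a central axis and a pitch $h = f \cdot m / \|f\|^2$ that do not depend on where the moment was measured. The sketch below (hypothetical helper names; a noise-free textbook computation, not the optimal-control method of the paper) shifts the moment reference point, as happens when a force sensor is mounted at a different location, and checks that the pitch and the axis stay the same.

```python
def add(a, b):
    return [x + y for x, y in zip(a, b)]

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def shift_moment(force, moment, old_ref, new_ref):
    """Moment of the same wrench about new_ref: m_new = m_old + (old_ref - new_ref) x f."""
    return add(moment, cross(sub(old_ref, new_ref), force))

def wrench_axis(force, moment, ref_point):
    """Pitch and one point of the central (screw) axis of a wrench with nonzero force."""
    ff = dot(force, force)
    pitch = dot(force, moment) / ff
    offset = [c / ff for c in cross(force, moment)]  # axis point relative to ref_point
    return pitch, add(ref_point, offset)

# Usage: one wrench, with its moment measured about two hypothetical sensor locations A and B.
f = [1.0, 2.0, -0.5]
A, m_A = [0.0, 0.0, 0.0], [0.3, -0.1, 0.8]
B = [0.4, -1.2, 2.0]
m_B = shift_moment(f, m_A, A, B)
h_A, p_A = wrench_axis(f, m_A, A)
h_B, p_B = wrench_axis(f, m_B, B)
# Same pitch, and p_A - p_B is parallel to f, so both points lie on the same axis.
print(h_A - h_B, cross(sub(p_A, p_B), f))  # all zero up to rounding
```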
