Maud Langlois

CRAL

A New Statistical Model of Star Speckles for Learning to Detect and Characterize Exoplanets in Direct Imaging Observations

Mar 21, 2025

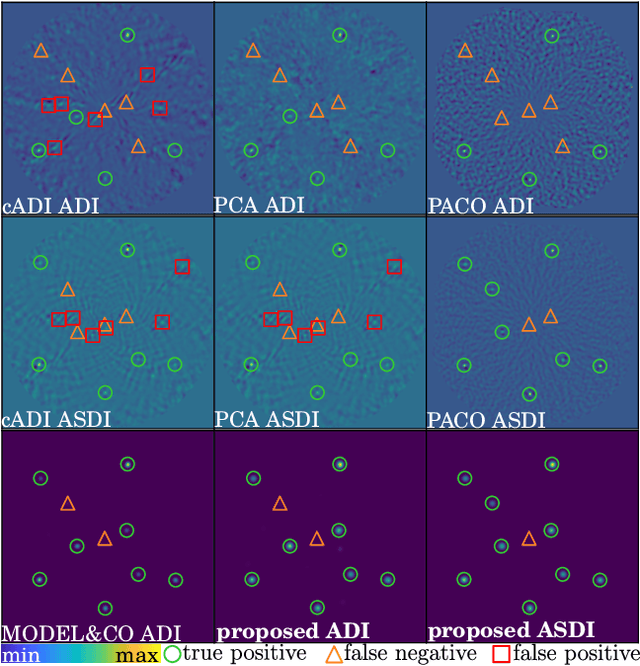

Abstract: The search for exoplanets is an active field in astronomy, with direct imaging as one of the most challenging methods due to faint exoplanet signals buried within stronger residual starlight. Successful detection requires advanced image processing to separate the exoplanet signal from this nuisance component. This paper presents a novel statistical model that captures nuisance fluctuations using a multi-scale approach, leveraging problem symmetries and a joint spectral channel representation grounded in physical principles. Our model integrates into an interpretable, end-to-end learnable framework for simultaneous exoplanet detection and flux estimation. The proposed algorithm is evaluated against the state of the art using datasets from the SPHERE instrument operating at the Very Large Telescope (VLT). It significantly improves the precision-recall trade-off, notably on challenging datasets that are otherwise unusable by astronomers. The proposed approach is computationally efficient, robust to varying data quality, and well suited for large-scale observational surveys.

Blind and robust reconstruction of adaptive optics point spread functions for asteroid deconvolution and moon detection

Oct 11, 2024

Abstract: Initially designed to detect and characterize exoplanets, extreme adaptive optics (AO) systems open a new window on the solar system by resolving its small bodies. Nonetheless, despite the ever-increasing performance of AO systems, the correction is not perfect, which degrades the images and produces a bright halo that can hide faint, close moons. Using a reference point spread function (PSF) is not always sufficient due to the random nature of the turbulence. In this work, we present our method to overcome this limitation. It blindly reconstructs the AO-PSF directly in the data of interest, without any prior on the instrument or the asteroid's shape. This is done by first estimating the PSF core parameters under the assumption of a sharp-edged, flat object, allowing the image of the main body to be deconvolved. Then, the PSF faint extensions are reconstructed with a robust penalization optimization, discarding outliers such as cosmic rays, defective pixels, and moons on the fly. This makes it possible to properly model and remove the asteroid's halo. Finally, moons can be detected in the residuals, using the reconstructed PSF and the knowledge of the outliers learned with the robust method. We show that our method can be easily applied to different instruments (VLT/SPHERE, Keck/NIRC2), efficiently retrieving the features of AO-PSFs. Compared with state-of-the-art moon enhancement algorithms, the moon signal is greatly improved and our robust detection method manages to discriminate faint moons from outliers.

* arXiv admin note: text overlap with arXiv:2407.21548

Combining multi-spectral data with statistical and deep-learning models for improved exoplanet detection in direct imaging at high contrast

Jun 21, 2023

Abstract: Exoplanet detection by direct imaging is a difficult task: the faint signals from the objects of interest are buried under a spatially structured nuisance component induced by the host star. The exoplanet signals can only be identified when combining several observations with dedicated detection algorithms. In contrast to most existing methods, we propose to learn a model of the spatial, temporal, and spectral characteristics of the nuisance directly from the observations. In a pre-processing step, a statistical model of their correlations is built locally, and the data are centered and whitened to improve both their stationarity and signal-to-noise ratio (SNR). A convolutional neural network (CNN) is then trained in a supervised fashion to detect the residual signature of synthetic sources in the pre-processed images. Our method leads to a better trade-off between precision and recall than standard approaches in the field. It also outperforms a state-of-the-art algorithm based solely on a statistical framework. In addition, exploiting spectral diversity improves performance compared to a similar model built solely from spatio-temporal data.
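The centering and whitening step mentioned in this abstract can be illustrated with a minimal, generic sketch. It is not the authors' implementation: the function name `center_and_whiten`, the regularization constant `eps`, the Cholesky-based whitening, and the synthetic data are all assumptions chosen for illustration, and the sketch handles a single stack of samples rather than the local, patch-wise spatial, temporal, and spectral model the abstract describes.

```python
import numpy as np


def center_and_whiten(samples, eps=1e-8):
    """Center and whiten samples of shape (n_samples, n_features).

    Hypothetical helper: estimates the sample mean and covariance,
    then applies the inverse Cholesky factor of the covariance so the
    output has (approximately) identity covariance.
    """
    mean = samples.mean(axis=0)
    centered = samples - mean
    n_samples = samples.shape[0]
    cov = centered.T @ centered / (n_samples - 1)
    # Small diagonal loading (an assumption) keeps the factorization stable.
    cov = cov + eps * np.eye(cov.shape[0])
    chol = np.linalg.cholesky(cov)  # cov = chol @ chol.T
    # Solve chol @ w = centered.T instead of forming the explicit inverse.
    whitened = np.linalg.solve(chol, centered.T).T
    return whitened, mean, chol


# Synthetic correlated data standing in for nuisance samples.
rng = np.random.default_rng(0)
mixing = np.eye(4) + 0.5 * np.tri(4, k=-1)  # well-conditioned mixing matrix
samples = rng.normal(size=(500, 4)) @ mixing.T + 3.0

whitened, mean, chol = center_and_whiten(samples)
emp_cov = np.cov(whitened, rowvar=False)
```

After this transform the empirical covariance of `whitened` is close to the identity and its mean is close to zero, which is the sense in which correlated nuisance fluctuations are equalized before a detector is applied.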
