Mathew Huang

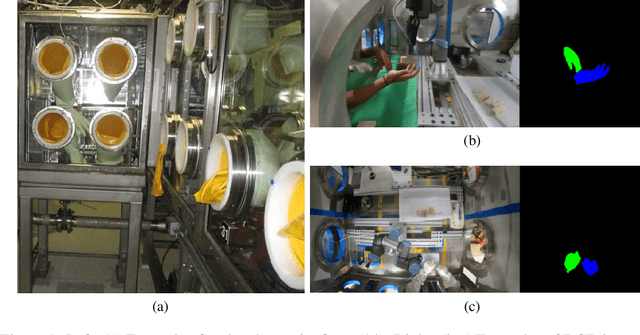

The Collection of a Human Robot Collaboration Dataset for Cooperative Assembly in Glovebox Environments

Jul 19, 2024

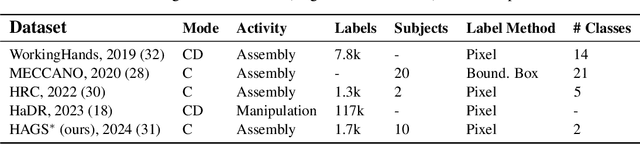

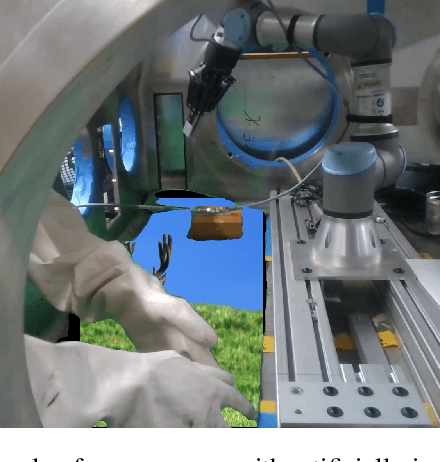

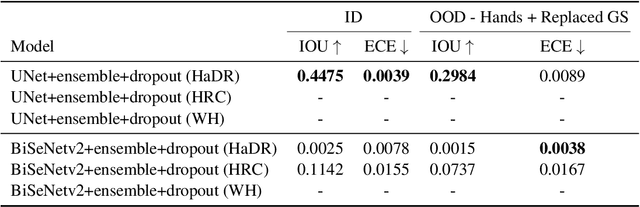

Abstract: Industry 4.0 introduced AI as a transformative solution for modernizing manufacturing processes. Its successor, Industry 5.0, envisions humans as collaborators and experts guiding these AI-driven manufacturing solutions. Developing these techniques requires algorithms capable of safe, real-time identification of human positions in a scene, particularly their hands, during collaborative assembly. Although substantial effort has gone into curating datasets for hand segmentation, most focus on residential or commercial domains. Existing datasets targeting industrial settings rely predominantly on synthetic data, which we demonstrate does not transfer effectively to real-world operations. Moreover, these datasets lack the uncertainty estimates critical for safe collaboration. To address these gaps, we present HAGS, the Hand and Glove Segmentation Dataset. The dataset provides 1200 challenging examples for building hand and glove segmentation applications in industrial human-robot collaboration scenarios, along with out-of-distribution images, constructed via green-screen augmentations, for assessing ML-classifier robustness. We evaluate state-of-the-art, real-time segmentation models as baselines. Our dataset and baselines are publicly available: https://dataverse.tdl.org/dataset.xhtml?persistentId=doi:10.18738/T8/85R7KQ and https://github.com/UTNuclearRoboticsPublic/assembly_glovebox_dataset.
