Matheus Schmitz

Quantifying How Hateful Communities Radicalize Online Users

Oct 02, 2022

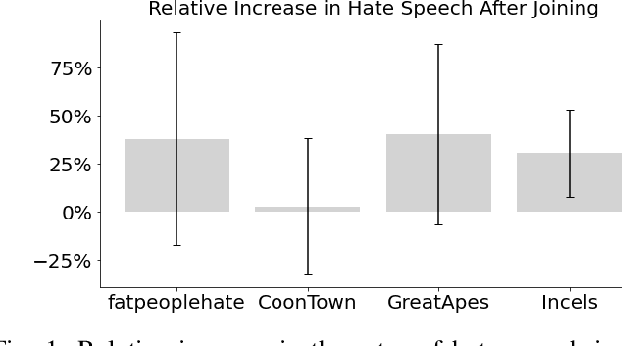

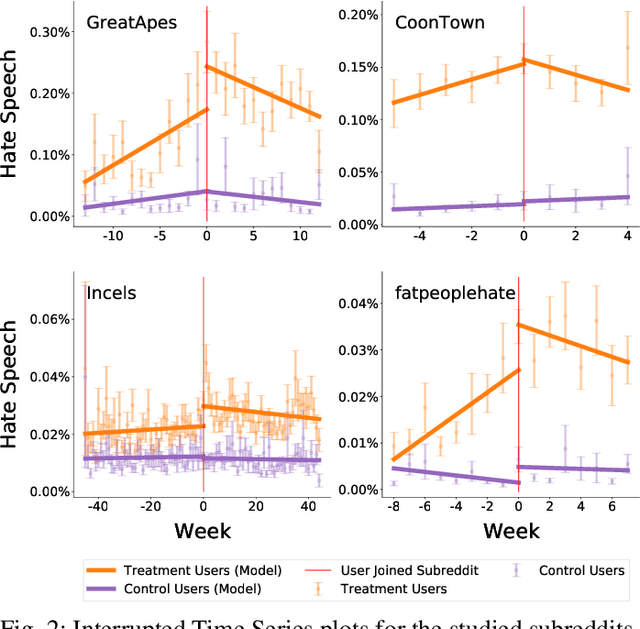

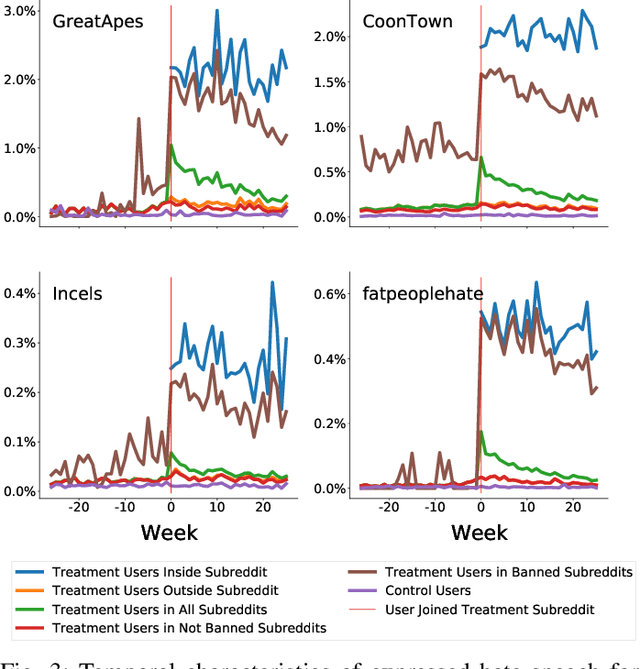

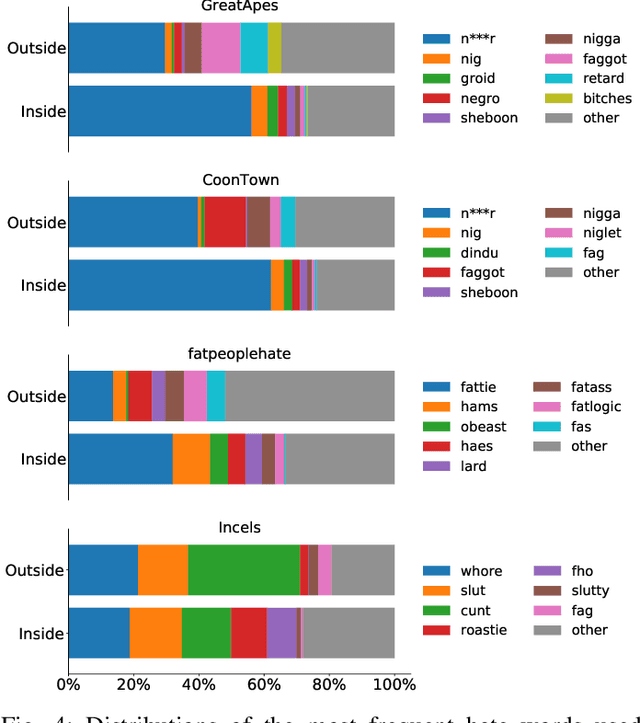

Abstract: While online social media offers a way for ignored or stifled voices to be heard, it also gives users a platform to spread hateful speech. Such speech usually originates in fringe communities, yet it can spill over into mainstream channels. In this paper, we measure the impact of joining fringe hateful communities in terms of hate speech propagated to the rest of the social network. We leverage data from Reddit to assess the effect of joining one type of echo chamber: a digital community of like-minded users exhibiting hateful behavior. We measure members' usage of hate speech outside the studied community before and after they become active participants. Using Interrupted Time Series (ITS) analysis as a causal inference method, we gauge the spillover effect, in which hateful language from within a community spreads outside that community, using the level of out-of-community hate word usage as a proxy for learned hate. We investigate four different Reddit sub-communities (subreddits) covering three areas of hate speech: racism, misogyny and fat-shaming. In all three cases we find an increase in hate speech outside the originating community, implying that joining such a community leads to a spread of hate speech throughout the platform. Moreover, users are found to pick up this new hateful speech for months after initially joining the community. We show that the harmful speech does not remain contained within the community. Our results provide new evidence of the harmful effects of echo chambers and the potential benefit of moderating them to reduce adoption of hateful speech.
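As a rough illustration of the ITS idea mentioned in the abstract, the sketch below fits a standard segmented regression, y_t = b0 + b1*t + b2*D_t + b3*(t - t0)*D_t, where D_t is 1 from the interruption time t0 onward. This is not the paper's code or data: the function names (`fit_its`, `solve_linear_system`), the synthetic series, and the choice of plain least squares are all assumptions made for the example.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    # Build the augmented matrix [a | b] without mutating the inputs.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Pick the row with the largest entry in this column as the pivot.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_its(y, t0):
    """Fit a segmented regression to series y with an interruption at index t0.

    Returns the baseline level, the pre-interruption trend, the immediate
    level change at t0, and the change in trend after t0.
    """
    rows = []
    for t in range(len(y)):
        d = 1.0 if t >= t0 else 0.0  # indicator: after the interruption
        rows.append([1.0, float(t), d, d * (t - t0)])
    k = 4
    # Normal equations (X^T X) beta = X^T y for ordinary least squares.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    b0, b1, b2, b3 = solve_linear_system(xtx, xty)
    return {"baseline": b0, "pre_trend": b1, "level_change": b2, "trend_change": b3}


# Synthetic, noise-free example (hypothetical numbers, not from the paper):
# a weekly hate-word rate that jumps by 1.5 and steepens by 0.3 per week
# once a user becomes active in the community at week 10.
T0 = 10
series = [
    2.0 + 0.1 * t + (1.5 + 0.3 * (t - T0) if t >= T0 else 0.0)
    for t in range(20)
]
result = fit_its(series, T0)
```

In this framing, a positive `level_change` or `trend_change` would correspond to an increase in out-of-community hate word usage after joining. A real analysis would also need noise handling, uncertainty estimates, and checks for autocorrelation, which this sketch omits.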

Bias and Fairness on Multimodal Emotion Detection Algorithms

May 11, 2022

Abstract: Numerous studies have shown that machine learning algorithms can latch onto protected attributes such as race and gender and generate predictions that systematically discriminate against one or more groups. To date, the majority of bias and fairness research has been on unimodal models. In this work, we explore the biases that exist in emotion recognition systems in relation to the modalities utilized, and study how multimodal approaches affect system bias and fairness. We consider audio, text, and video modalities, as well as all possible multimodal combinations of those, and find that text alone has the least bias and accounts for the majority of the models' performance, raising doubts about the worthiness of multimodal emotion recognition systems when bias and fairness are desired alongside model performance.
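The abstract does not say which bias measure is used, so the sketch below shows only one simple possibility: the gap in accuracy between demographic groups. The function names (`group_accuracy`, `accuracy_gap`), the labels, and the toy data are assumptions for illustration and do not come from the paper.

```python
from collections import defaultdict


def group_accuracy(y_true, y_pred, groups):
    """Return the classification accuracy computed separately per group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(y_true, y_pred, groups):
    """Return the difference between the best and worst per-group accuracy."""
    acc = group_accuracy(y_true, y_pred, groups)
    return max(acc.values()) - min(acc.values())


# Toy emotion labels for two hypothetical groups "a" and "b".
y_true = ["happy", "sad", "happy", "sad", "happy", "sad", "happy", "sad"]
y_pred = ["happy", "sad", "happy", "sad", "sad", "sad", "happy", "happy"]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

per_group = group_accuracy(y_true, y_pred, groups)
gap = accuracy_gap(y_true, y_pred, groups)
```

Computing such a gap once per modality combination (audio, text, video, and their mixtures) would give one way to compare how each input configuration trades performance against fairness.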

A Python Package to Detect Anti-Vaccine Users on Twitter

Oct 21, 2021

Abstract: Vaccine hesitancy has a long history but has recently been driven by the anti-vaccine narratives shared online, which significantly degrade the efficacy of vaccination strategies, such as those for COVID-19. Despite broad agreement in the medical community about the safety and efficacy of available vaccines, a large number of social media users continue to be inundated with false information about vaccines and, partly because of this, become indecisive or unwilling to be vaccinated. The goal of this study is to better understand anti-vaccine sentiment, and work to reduce its impact, by developing a system capable of automatically identifying the users responsible for spreading anti-vaccine narratives. We introduce a publicly available Python package capable of analyzing Twitter profiles to assess how likely each profile is to spread anti-vaccine sentiment in the future. The software package is built using text embedding methods, neural networks, and automated dataset generation. It is trained on over one hundred thousand accounts and several million tweets. This model will help researchers and policy-makers understand anti-vaccine discussion and misinformation strategies, which can further help tailor targeted campaigns seeking to inform and debunk the harmful anti-vaccination myths currently being spread. Additionally, we leverage the data on such users to understand the moral and emotional characteristics of anti-vaccine spreaders.
