Mateusz Dolata

Learning with Digital Agents: An Analysis based on the Activity Theory

Aug 08, 2024

Abstract:Digital agents are considered a general-purpose technology. They spread quickly in private and organizational contexts, including education. Yet, research lacks a conceptual framing to describe interaction with such agents in a holistic manner. While focusing on the interaction with a pedagogical agent, i.e., a digital agent capable of natural-language interaction with a learner, we propose a model of learning activity based on activity theory. We use this model and a review of prior research on digital agents in education to analyze how various characteristics of the activity, including features of a pedagogical agent or learner, influence learning outcomes. The analysis leads to identification of IS research directions and guidance for developers of pedagogical agents and digital agents in general. We conclude by extending the activity theory-based model beyond the context of education and show how it helps designers and researchers ask the right questions when creating a digital agent.

* Author's manuscript accepted for publication in the Journal of Management Information Systems

A Survey of AI Reliance

Jul 22, 2024

Abstract:Artificial intelligence (AI) systems have become an indispensable component of modern technology. However, research on human behavioral responses, i.e., research into human reliance on AI advice (AI reliance), is lagging behind. Current shortcomings in the literature include the unclear influences on AI reliance, lack of external validity, conflicting approaches to measuring reliance, and disregard for changes in reliance over time. Promising avenues for future research include reliance on generative AI output and reliance in multi-user situations. In conclusion, we present a morphological box that serves as a guide for research on AI reliance.

When Generative AI Meets Workplace Learning: Creating A Realistic & Motivating Learning Experience With A Generative PCA

May 24, 2024

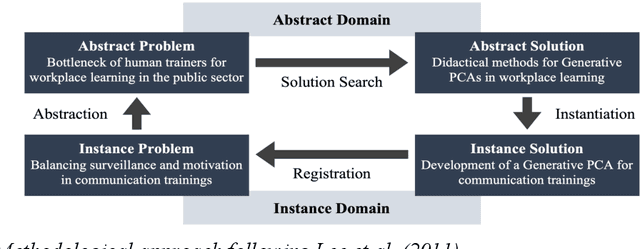

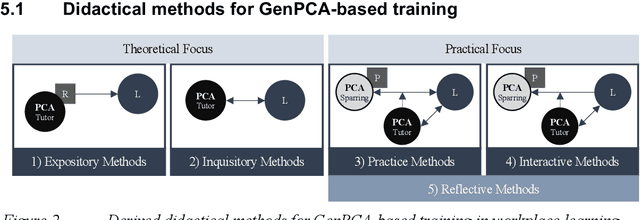

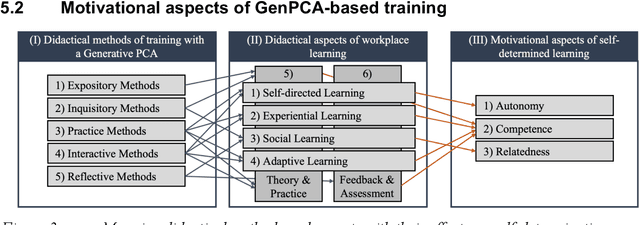

Abstract:Workplace learning is used to train employees systematically, e.g., via e-learning or in 1:1 training. However, this is often deemed ineffective and costly. Whereas pure e-learning lacks the possibility of conversational exercise and personal contact, 1:1 training with human instructors involves high personnel and organizational costs. Hence, pedagogical conversational agents (PCAs), based on generative AI, seem to compensate for the disadvantages of both forms. Following Action Design Research, this paper describes an organizational communication training with a Generative PCA (GenPCA). The evaluation shows promising results: the agent was perceived positively among employees and contributed to an improvement in self-determined learning. However, the integration of such an agent does not come without limitations. We conclude with suggestions concerning the didactical methods that are supported by a GenPCA and possible improvements of such an agent for workplace learning.

PROMISE: A Framework for Model-Driven Stateful Prompt Orchestration

Dec 07, 2023

Abstract:The advent of increasingly powerful language models has raised expectations for language-based interactions. However, controlling these models is a challenge, emphasizing the need to be able to investigate the feasibility and value of their application. We present PROMISE, a framework that facilitates the development of complex language-based interactions with information systems. Its use of state machine modeling concepts enables model-driven, dynamic prompt orchestration across hierarchically nested states and transitions. This improves the control of the behavior of language models and thus enables their effective and efficient use. We show the benefits of PROMISE in the context of application scenarios within health information systems and demonstrate its ability to handle complex interactions.

A Sociotechnical View of Algorithmic Fairness

Sep 27, 2021

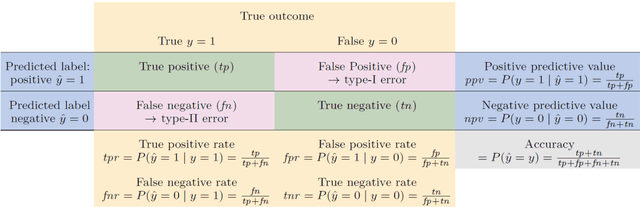

Abstract:Algorithmic fairness (AF) has been framed as a newly emerging technology that mitigates systemic discrimination in automated decision-making, providing opportunities to improve fairness in information systems (IS). However, based on a state-of-the-art literature review, we argue that fairness is an inherently social concept and that technologies for algorithmic fairness should therefore be approached through a sociotechnical lens. We advance the discourse on algorithmic fairness as a sociotechnical phenomenon. Our research objective is to embed AF in the sociotechnical view of IS. Specifically, we elaborate on why outcomes of a system that uses algorithmic means to assure fairness depend on mutual influences between technical and social structures. This perspective can generate new insights that integrate knowledge from both technical fields and social studies. Further, it spurs new directions for IS debates. We contribute as follows: First, we problematize fundamental assumptions in the current discourse on algorithmic fairness based on a systematic analysis of 310 articles. Second, we respond to these assumptions by theorizing algorithmic fairness as a sociotechnical construct. Third, we propose directions for IS researchers to enhance their impacts by pursuing a unique understanding of sociotechnical algorithmic fairness. We call for and undertake a holistic approach to AF. A sociotechnical perspective on algorithmic fairness can yield holistic solutions to systemic biases and discrimination.
