Mastaneh Torkamani-Azar

A Hybrid End-to-End Spatio-Temporal Attention Neural Network with Graph-Smooth Signals for EEG Emotion Recognition

Jul 06, 2023

Abstract: Recently, physiological data such as electroencephalography (EEG) signals have attracted significant attention in affective computing. In this context, the main goal is to design an automated model that can assess emotional states. Lately, deep neural networks have shown promising performance in emotion recognition tasks. However, designing a deep architecture that can extract practical information from raw data is still a challenge. Here, we introduce a deep neural network that acquires interpretable physiological representations by a hybrid structure of spatio-temporal encoding and recurrent attention network blocks. Furthermore, a preprocessing step is applied to the raw data using graph signal processing tools to perform graph smoothing in the spatial domain. We demonstrate that our proposed architecture exceeds state-of-the-art results for emotion classification on the publicly available DEAP dataset. To explore the generality of the learned model, we also evaluate the performance of our architecture towards transfer learning (TL) by transferring the model parameters from a specific source to other target domains. Using DEAP as the source dataset, we demonstrate the effectiveness of our model in performing cross-modality TL and improving emotion classification accuracy on DREAMER and the Emotional English Word (EEWD) datasets, which involve EEG-based emotion classification tasks with different stimuli.
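The abstract does not say which graph smoothing operator is used, so the following is only a minimal sketch of one common graph signal processing choice: Tikhonov smoothing with the combinatorial Laplacian. The Gaussian-kernel adjacency, the random electrode coordinates, and the names `gaussian_adjacency`, `graph_smooth`, `dirichlet_energy`, `sigma`, and `alpha` are all assumptions made for illustration, not details taken from the paper.

```python
import numpy as np


def gaussian_adjacency(coords, sigma=1.0):
    """Symmetric weighted adjacency from electrode coordinates (assumed layout)."""
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    w = np.exp(-dist2 / (2.0 * sigma ** 2))
    np.fill_diagonal(w, 0.0)  # no self-loops
    return w


def laplacian(w):
    """Combinatorial graph Laplacian L = D - W."""
    return np.diag(w.sum(axis=1)) - w


def graph_smooth(x, w, alpha=1.0):
    """Tikhonov smoothing across channels: solve (I + alpha * L) y = x."""
    n = w.shape[0]
    return np.linalg.solve(np.eye(n) + alpha * laplacian(w), x)


def dirichlet_energy(x, w):
    """Spatial roughness trace(x^T L x); smaller means smoother across channels."""
    return float(np.trace(x.T @ laplacian(w) @ x))


# Synthetic stand-in for EEG: 8 channels, 256 time samples (not real data).
rng = np.random.default_rng(0)
coords = rng.normal(size=(8, 3))
x = rng.normal(size=(8, 256))

w = gaussian_adjacency(coords)
x_smooth = graph_smooth(x, w, alpha=0.5)
```

In this sketch, a larger `alpha` pulls neighbouring channels closer together, while the sum over channels at each time sample is left unchanged because constant signals lie in the null space of the Laplacian.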

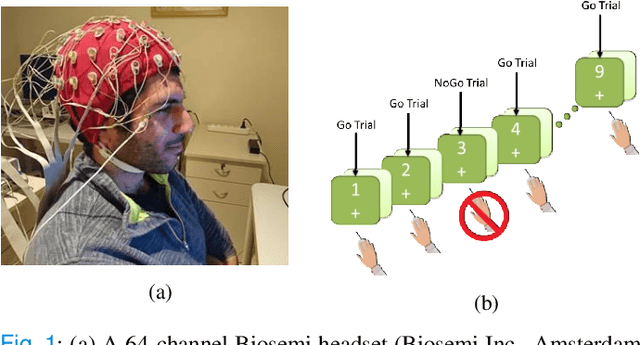

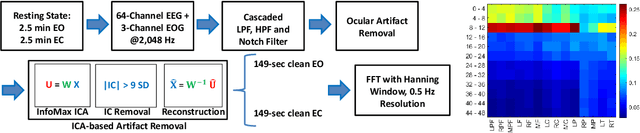

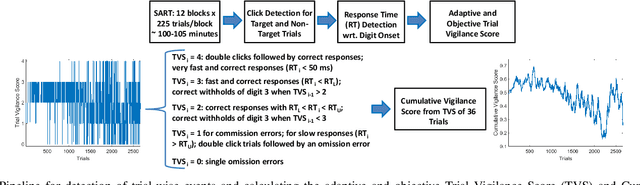

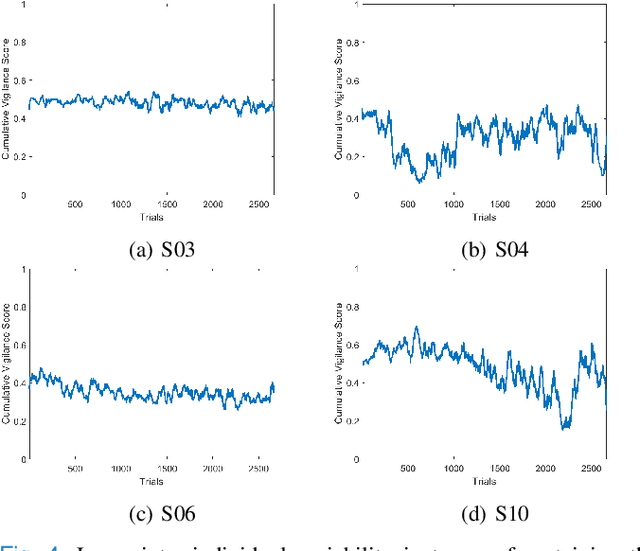

Prediction of Reaction Time and Vigilance Variability from Spatiospectral Features of Resting-State EEG in a Long Sustained Attention Task

Oct 21, 2019

Abstract: Resting-state brain networks represent the intrinsic state of the brain during the majority of cognitive and sensorimotor tasks. However, no study has yet presented concise predictors of task-induced vigilance variability from spectrospatial features of the pre-task, resting-state electroencephalograms (EEG). We asked ten healthy volunteers (6 females, 4 males) to participate in 105-minute fixed-sequence-varying-duration sessions of the sustained attention to response task (SART). A novel and adaptive vigilance scoring scheme was designed based on the performance and response time in consecutive trials, and it demonstrated large inter-participant variability in maintaining consistent tonic performance. Multiple linear regression using feature relevance analysis obtained significant predictors (p < 0.05) of the mean cumulative vigilance score (CVS), the mean response time, and the variabilities of these scores from the resting-state band-power ratios of EEG signals. Single-layer neural networks trained with cross-validation also captured different associations for the beta sub-bands. Increases in the gamma (28-48 Hz) and upper beta ratios from the left central and temporal regions predicted slower reactions and more inconsistent vigilance, as explained by the increased activation of the default mode network (DMN) and differences between the high- and low-attention networks at temporal regions. Higher ratios of parietal alpha from Brodmann's areas 18, 19, and 37 during the eyes-open states predicted slower responses but more consistent CVS and reactions, associated with a superior ability in vigilance maintenance. The proposed framework and these findings on the most stable and significant attention predictors from the intrinsic EEG power ratios can be used to model attention variations during the calibration sessions of BCI applications and vigilance monitoring systems.
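The abstract does not define how the band-power ratios are computed, so the sketch below assumes one plausible reading: power inside a band divided by power in a broad reference range, estimated from a plain periodogram. The gamma range (28-48 Hz) comes from the abstract; the alpha range (8-13 Hz), the 1-48 Hz reference range, the sampling rate, the synthetic test signal, and the function name `band_power_ratio` are assumptions for illustration.

```python
import numpy as np


def band_power_ratio(signal, fs, band, total=(1.0, 48.0)):
    """Power in `band` divided by power in `total`, from a simple periodogram."""
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    psd = np.abs(np.fft.rfft(signal - signal.mean())) ** 2
    in_band = (freqs >= band[0]) & (freqs < band[1])
    in_total = (freqs >= total[0]) & (freqs < total[1])
    return psd[in_band].sum() / psd[in_total].sum()


# Synthetic single-channel signal (not real EEG): strong 10 Hz, weak 35 Hz.
fs = 256
t = np.arange(0, 4.0, 1.0 / fs)
sig = np.sin(2 * np.pi * 10 * t) + 0.1 * np.sin(2 * np.pi * 35 * t)

alpha_ratio = band_power_ratio(sig, fs, (8.0, 13.0))   # assumed alpha band
gamma_ratio = band_power_ratio(sig, fs, (28.0, 48.0))  # gamma band from the abstract
```

Ratios of this kind, computed per channel and per band, could then serve as the regressors in a multiple linear regression against vigilance or response-time scores. In practice an averaged estimator such as Welch's method would usually replace the raw periodogram.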
