Massimiliano Sassoli de Bianchi

Identifying Quantum Mechanical Statistics in Italian Corpora

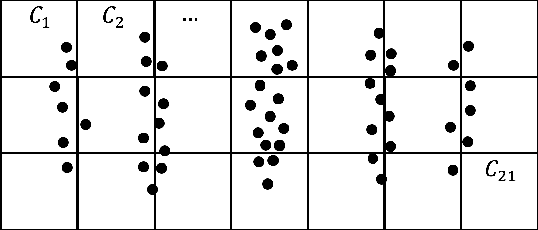

Dec 10, 2024

Abstract:We present a theoretical and empirical investigation of the statistical behaviour of the words in a text produced by human language. To this aim, we analyse the word distribution of various Italian-language texts selected from a specific literary corpus. We first generalise a theoretical framework elaborated by ourselves to identify 'quantum mechanical statistics' in large-size texts. Then, we show that, in all analysed texts, words distribute according to 'Bose-Einstein statistics' and show significant deviations from 'Maxwell-Boltzmann statistics'. Next, we introduce an effect of 'word randomization', which instead indicates that the difference between the two statistical models is not as pronounced as in the original cases. These results confirm the empirical patterns obtained in English-language texts and strongly indicate that identical words tend to 'clump together' as a consequence of their meaning, which can be explained as an effect of 'quantum entanglement' produced through a phenomenon of 'contextual updating'. Moreover, word randomization can be seen as the linguistic-conceptual equivalent of an increase of temperature, which destroys 'coherence' and makes classical statistics prevail over quantum statistics. Some insights into the origin of quantum statistics in physics are finally provided.
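As a rough illustration of the comparison described in this abstract, the sketch below ranks the words of a text by frequency and defines the two candidate distribution functions. Several things here are assumptions not stated in the abstract: that the word of rank i is assigned the energy level E_i = i (starting from 0), that the Bose-Einstein form is 1/(A e^{E/B} - 1) and the Maxwell-Boltzmann form is 1/(C e^{E/D}), and all function and parameter names. It is not the authors' code, and the fitting of the constants is left out.

```python
import math
import re
from collections import Counter


def rank_counts(text):
    """Return word counts sorted from most to least frequent."""
    # [^\W\d_]+ matches runs of letters, including accented Italian letters.
    words = re.findall(r"[^\W\d_]+", text.lower())
    return [n for _, n in Counter(words).most_common()]


def totals(counts):
    """Total number of words N and total 'energy' E = sum_i i * N_i.

    Assumption: the word of rank i (0-based) sits at energy level i.
    """
    n_total = sum(counts)
    e_total = sum(i * n for i, n in enumerate(counts))
    return n_total, e_total


def bose_einstein(energy, a, b):
    """Assumed Bose-Einstein form: 1 / (a * exp(energy / b) - 1)."""
    return 1.0 / (a * math.exp(energy / b) - 1.0)


def maxwell_boltzmann(energy, c, d):
    """Assumed Maxwell-Boltzmann form: 1 / (c * exp(energy / d))."""
    return 1.0 / (c * math.exp(energy / d))


if __name__ == "__main__":
    counts = rank_counts("la casa e la strada e la notte")
    print(counts, totals(counts))
```

In such a setup, the constants of each model would be chosen so that the predicted counts reproduce the observed totals N and E, after which the two predicted curves can be compared with the observed rank-frequency counts.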

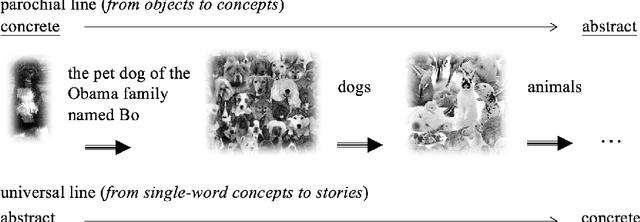

Modeling Meaning Associated with Documental Entities: Introducing the Brussels Quantum Approach

Aug 03, 2018

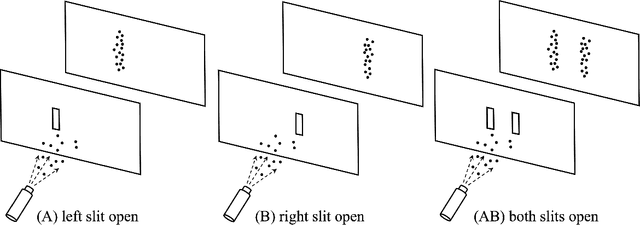

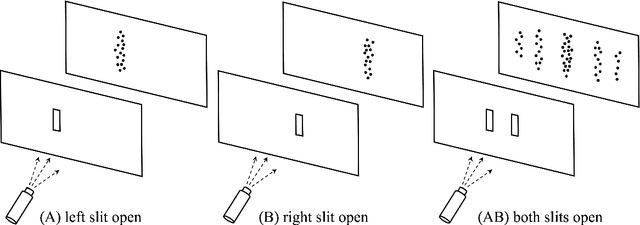

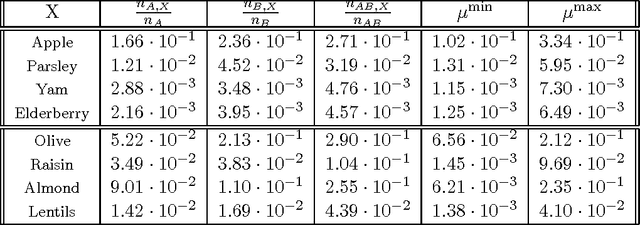

Abstract:We show that the Brussels operational-realistic approach to quantum physics and quantum cognition offers a fundamental strategy for modeling the meaning associated with collections of documental entities. To do so, we take the World Wide Web as a paradigmatic example and emphasize the importance of distinguishing the Web, made of printed documents, from a more abstract meaning entity, which we call the Quantum Web, or QWeb, where the former is considered to be the collection of traces that can be left by the latter, in specific measurements, similarly to how a non-spatial quantum entity, like an electron, can leave localized traces of impact on a detection screen. The double-slit experiment is extensively used to illustrate the rationale of the modeling, which is guided by how physicists constructed quantum theory to describe the behavior of the microscopic entities. We also emphasize that the superposition principle and the associated interference effects are not sufficient to model all experimental probabilistic data, like those obtained by counting the relative number of documents containing certain words and co-occurrences of words. For this, additional effects, like context effects, must also be taken into consideration.

Quantum cognition goes beyond-quantum: modeling the collective participant in psychological measurements

Feb 24, 2018

Abstract:In psychological measurements, two levels should be distinguished: the 'individual level', relative to the different participants in a given cognitive situation, and the 'collective level', relative to the overall statistics of their outcomes, which we propose to associate with a notion of 'collective participant'. When the distinction between these two levels is properly formalized, it reveals why the modeling of the collective participant generally requires beyond-quantum (non-Bornian) probabilistic models when sequential measurements at the individual level are considered, even though a pure quantum description remains valid for single measurement situations.

Towards a Quantum World Wide Web

Jan 29, 2018

Abstract:We elaborate a quantum model for the meaning associated with corpora of written documents, like the pages forming the World Wide Web. To that end, we are guided by how physicists constructed quantum theory for microscopic entities, which unlike classical objects cannot be fully represented in our spatial theater. We suggest that a similar construction needs to be carried out by linguists and computational scientists, to capture the full meaning carried by collections of documental entities. More precisely, we show how to associate a quantum-like 'entity of meaning' to a 'language entity formed by printed documents', considering the latter as the collection of traces that are left by the former, in specific results of search actions that we describe as measurements. In other words, we offer a perspective where a collection of documents, like the Web, is described as the space of manifestation of a more complex entity - the QWeb - which is the object of our modeling, drawing its inspiration from previous studies on operational-realistic approaches to quantum physics and quantum modeling of human cognition and decision-making. We emphasize that a consistent QWeb model needs to account for the observed correlations between words appearing in printed documents, e.g., co-occurrences, as the latter would depend on the 'meaning connections' existing between the concepts that are associated with these words. In that respect, we show that both 'context and interference (quantum) effects' are required to explain the probabilities calculated by counting the relative number of documents containing certain words and co-occurrences of words.

Testing Quantum Models of Conjunction Fallacy on the World Wide Web

Jun 02, 2017

Abstract:The 'conjunction fallacy' has been extensively debated by scholars in cognitive science and, in recent times, the discussion has been enriched by the proposal of modeling the fallacy using the quantum formalism. Two major quantum approaches have been put forward: the first assumes that respondents use a two-step sequential reasoning and that the fallacy results from the presence of 'question order effects'; the second assumes that respondents evaluate the cognitive situation as a whole and that the fallacy results from the 'emergence of new meanings', as an 'effect of overextension' in the conceptual conjunction. Thus, the question arises whether and to what extent conjunction fallacies result from 'order effects' or, instead, from 'emergence effects'. To help clarify this situation, we propose to use the World Wide Web as an 'information space' that can be interrogated both in a sequential and non-sequential way, to test these two quantum approaches. We find that 'emergence effects', and not 'order effects', should be considered the main cognitive mechanism producing the observed conjunction fallacies.
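For readers unfamiliar with the term, the condition that defines a conjunction fallacy can be written as a short check: a judged probability for 'A and B' that exceeds the judged probability of at least one of the conjuncts violates classical probability, and the excess is one way to quantify overextension. The sketch below is only an illustration of that definition. The function names and the numbers in the usage lines are hypothetical, and it does not reproduce the Web-based procedure of the paper.

```python
def overextension(p_a, p_b, p_a_and_b):
    """Amount by which the judged conjunction exceeds the smaller conjunct.

    Classically P(A and B) <= min(P(A), P(B)), so a positive value
    signals a conjunction fallacy.
    """
    return p_a_and_b - min(p_a, p_b)


def is_conjunction_fallacy(p_a, p_b, p_a_and_b):
    """True when the judged conjunction violates the classical bound."""
    return overextension(p_a, p_b, p_a_and_b) > 0


if __name__ == "__main__":
    # Hypothetical judged probabilities, for illustration only.
    print(is_conjunction_fallacy(0.9, 0.2, 0.4))
    print(is_conjunction_fallacy(0.9, 0.2, 0.1))
```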

Context and Interference Effects in the Combinations of Natural Concepts

Dec 19, 2016

Abstract:The mathematical formalism of quantum theory exhibits significant effectiveness when applied to cognitive phenomena that have resisted traditional (set theoretical) modeling. Relying on a decade of research on the operational foundations of micro-physical and conceptual entities, we present a theoretical framework for the representation of concepts and their conjunctions and disjunctions that uses the quantum formalism. This framework provides a unified solution to the 'conceptual combinations problem' of cognitive psychology, explaining the observed deviations from classical (Boolean, fuzzy set and Kolmogorovian) structures in terms of genuine quantum effects. In particular, natural concepts 'interfere' when they combine to form more complex conceptual entities, and they also exhibit a 'quantum-type context-dependence'; together, these effects are responsible for the 'over- and under-extension' that is systematically observed in experiments on membership judgments.

* 12 pages, no figures

Quantum cognition beyond Hilbert space II: Applications

Apr 27, 2016

Abstract:The research on human cognition has recently benefited from the use of the mathematical formalism of quantum theory in Hilbert space. However, cognitive situations exist which indicate that the Hilbert space structure, and the associated Born rule, would be insufficient to provide a satisfactory modeling of the collected data, so that one needs to go beyond Hilbert space. In Part I of this paper we follow this direction and present a general tension-reduction (GTR) model, in the ambit of an operational and realistic framework for human cognition. In this Part II we apply this non-Hilbertian quantum-like model to faithfully reproduce the probabilities of the 'Clinton/Gore' and 'Rose/Jackson' experiments on question order effects. We also explain why the GTR-model is needed if one wants to deal, in a fully consistent way, with response replicability and unpacking effects.

Quantum Cognition Beyond Hilbert Space I: Fundamentals

Apr 27, 2016

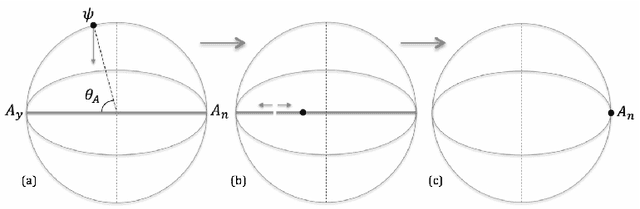

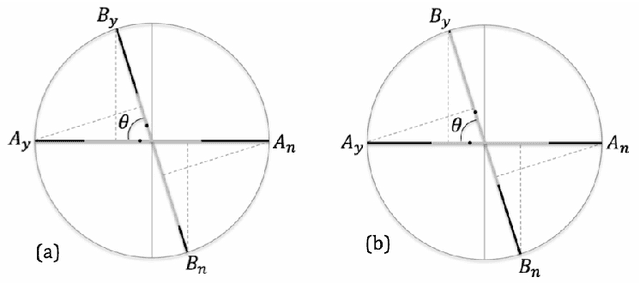

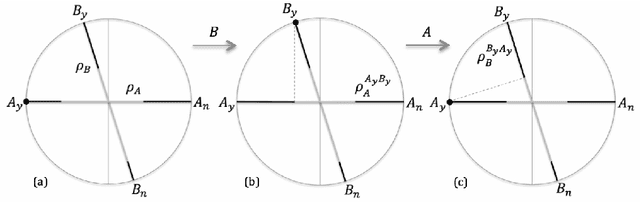

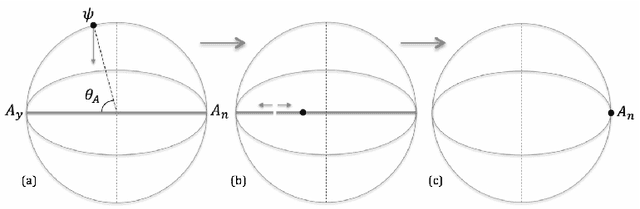

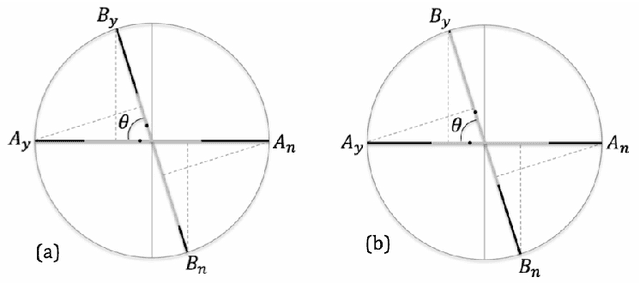

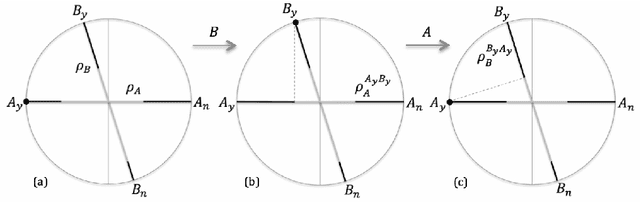

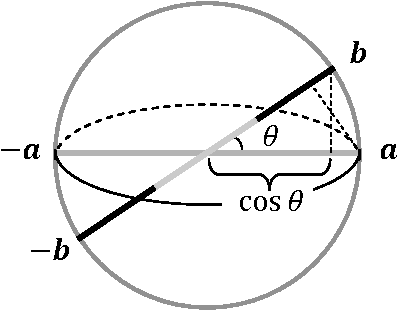

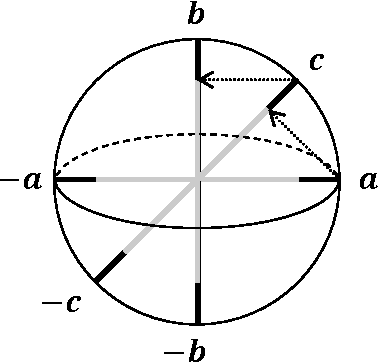

Abstract:The formalism of quantum theory in Hilbert space has been applied with success to the modeling and explanation of several cognitive phenomena for which traditional cognitive approaches were problematic. However, this 'quantum cognition paradigm' was recently challenged by its proven inability to simultaneously model 'question order effects' and 'response replicability'. In Part I of this paper we describe sequential dichotomic measurements within an operational and realistic framework for human cognition elaborated by ourselves, and represent them in a quantum-like 'extended Bloch representation' where the Born rule of quantum probability does not necessarily hold. In Part II we apply this mathematical framework to successfully model question order effects, response replicability and unpacking effects, thus opening the way toward quantum cognition beyond Hilbert space.

On the Foundations of the Brussels Operational-Realistic Approach to Cognition

Dec 29, 2015

Abstract:The scientific community is becoming more and more interested in the research that applies the mathematical formalism of quantum theory to model human decision-making. In this paper, we provide the theoretical foundations of the quantum approach to cognition that we developed in Brussels. These foundations rest on the results of two decades of studies on the axiomatic and operational-realistic approaches to the foundations of quantum physics. The deep analogies between the foundations of physics and cognition lead us to investigate the validity of quantum theory as a general and unitary framework for cognitive processes, and the empirical success of the Hilbert space models derived from this investigation provides a strong theoretical confirmation of this validity. However, two situations in the cognitive realm, 'question order effects' and 'response replicability', indicate that even the Hilbert space framework could be insufficient to reproduce the collected data. This does not mean that the mentioned operational-realistic approach would be incorrect, but simply that a larger class of measurements would be in force in human cognition, so that an extended quantum formalism may be needed to deal with all of them. As we will explain, the recently derived 'extended Bloch representation' of quantum theory (and the associated 'general tension-reduction' model) provides precisely such an extended formalism, while remaining within the same unitary interpretative framework.

* 21 pages

The GTR-model: a universal framework for quantum-like measurements

Dec 02, 2015

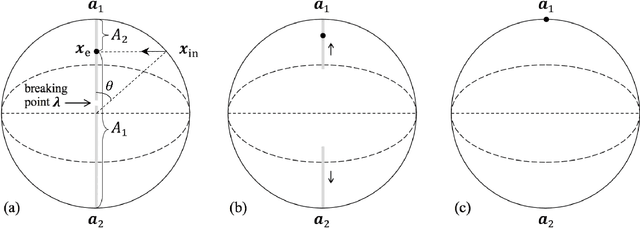

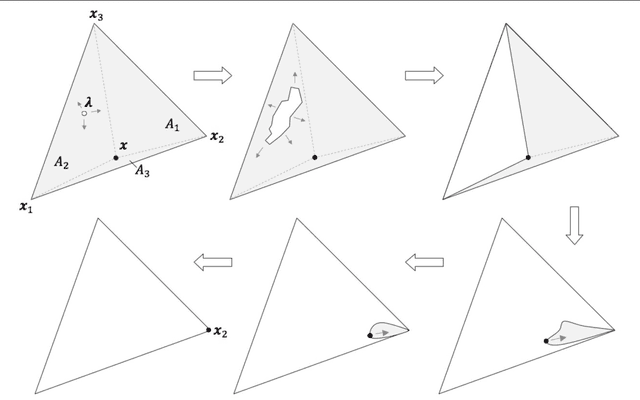

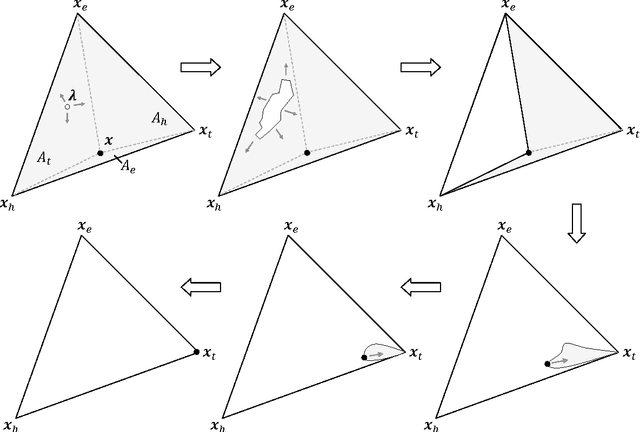

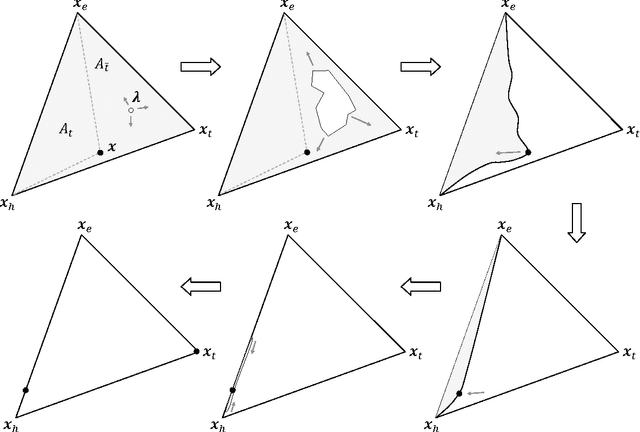

Abstract:We present a very general geometrico-dynamical description of physical or more abstract entities, called the 'general tension-reduction' (GTR) model, where not only states, but also measurement-interactions can be represented, and the associated outcome probabilities calculated. Underlying the model is the hypothesis that indeterminism manifests as a consequence of unavoidable fluctuations in the experimental context, in accordance with the 'hidden-measurements interpretation' of quantum mechanics. When the structure of the state space is Hilbertian, and measurements are of the 'universal' kind, i.e., are the result of an average over all possible ways of selecting an outcome, the GTR-model provides the same predictions as the Born rule, and therefore provides a natural completed version of quantum mechanics. However, when the structure of the state space is non-Hilbertian and/or not all possible ways of selecting an outcome are available to be actualized, the predictions of the model generally differ from the quantum ones, especially when sequential measurements are considered. Some paradigmatic examples will be discussed, taken from physics and human cognition. Particular attention will be given to some known psychological effects, like question order effects and response replicability, which we show are able to generate non-Hilbertian statistics. We also suggest a realistic interpretation of the GTR-model, when applied to human cognition and decision, which we think could become the generally adopted interpretative framework in quantum cognition research.

* 33 pages, 5 figures
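To give a feel for how a tension-reduction mechanism can either reproduce or depart from the Born rule, here is a minimal sketch of a two-outcome case. It assumes the simplified one-dimensional 'elastic band' picture: the state is a point on the unit sphere at angle theta from the first outcome, it is projected onto a band stretched between the two outcomes at position x = cos(theta), and the band breaks at a point drawn uniformly from the interval [-eps, eps]. The parameter `eps` and the function names are illustrative assumptions, and the model described in the abstract is far more general than this.

```python
import math


def outcome_probability(theta, eps=1.0):
    """Probability of the first outcome in a two-outcome elastic-band sketch.

    theta: angle (radians) between the state and the first outcome.
    eps:   half-width of the breakable part of the band, 0 < eps <= 1.
           eps = 1 means the band can break anywhere (uniform case).
    """
    if not 0.0 < eps <= 1.0:
        raise ValueError("eps must lie in (0, 1]")
    x = math.cos(theta)  # position of the state on the band, in [-1, 1]
    # The first outcome wins when the break point falls below x, so its
    # probability is the fraction of [-eps, eps] lying below x.
    p = (x + eps) / (2.0 * eps)
    return min(1.0, max(0.0, p))


def born_probability(theta):
    """Born rule for a two-level system: cos^2(theta / 2)."""
    return math.cos(theta / 2.0) ** 2


if __name__ == "__main__":
    theta = math.pi / 3
    print(outcome_probability(theta), born_probability(theta))
    print(outcome_probability(theta, eps=0.5))
```

With `eps = 1` the sketch agrees with the Born rule, since (1 + cos(theta))/2 equals cos^2(theta/2). With a smaller `eps` the probabilities differ from the Born values, which mirrors the abstract's point that restricting the available ways of selecting an outcome yields non-quantum predictions.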
