Sandro Sozzo

Identifying Quantum Mechanical Statistics in Italian Corpora

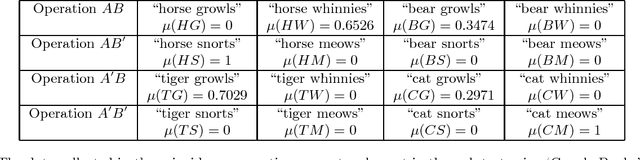

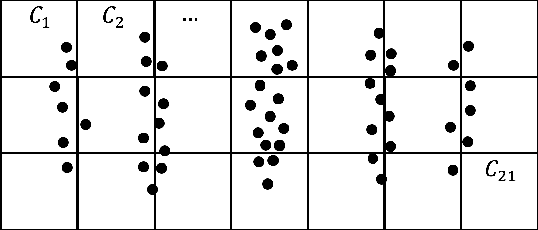

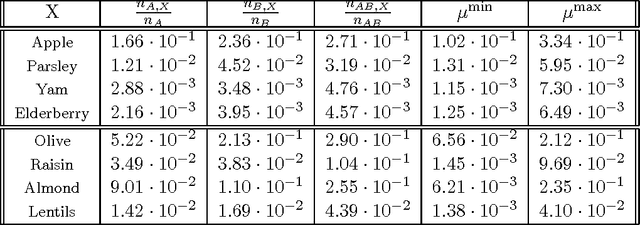

Dec 10, 2024

Abstract: We present a theoretical and empirical investigation of the statistical behaviour of words in texts produced in human language. To this aim, we analyse the word distribution of various Italian-language texts selected from a specific literary corpus. We first generalise a theoretical framework we previously elaborated to identify 'quantum mechanical statistics' in large-size texts. Then, we show that, in all analysed texts, words are distributed according to 'Bose-Einstein statistics' and deviate significantly from 'Maxwell-Boltzmann statistics'. Next, we introduce 'word randomization' and find that, in the randomized texts, the difference between the two statistical models is less pronounced than in the original ones. These results confirm the empirical patterns obtained in English-language texts and strongly indicate that identical words tend to 'clump together' as a consequence of their meaning, which can be explained as an effect of 'quantum entanglement' produced through a phenomenon of 'contextual updating'. Moreover, word randomization can be seen as the linguistic-conceptual equivalent of an increase in temperature, which destroys 'coherence' and makes classical statistics prevail over quantum statistics. Some insights into the origin of quantum statistics in physics are finally provided.
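
To make the comparison between the two statistical models concrete, the sketch below evaluates the standard Maxwell-Boltzmann and Bose-Einstein occupation functions, N(E) = 1/(A e^(E/B)) and N(E) = 1/(A e^(E/B) - 1), written with two positive constants A and B. The function names and the values A = 1.01, B = 10 are illustrative assumptions, not parameters fitted to the Italian corpora. For the same A and B, the Bose-Einstein expression always exceeds the Maxwell-Boltzmann one, and the excess is largest at low energies; if, as assumed here, lower energy levels are attached to more frequent words, that excess is where a 'clumping' of identical words would appear.

```python
import math


def maxwell_boltzmann(energy: float, a: float, b: float) -> float:
    """Maxwell-Boltzmann occupation number N(E) = 1 / (A * exp(E / B))."""
    return 1.0 / (a * math.exp(energy / b))


def bose_einstein(energy: float, a: float, b: float) -> float:
    """Bose-Einstein occupation number N(E) = 1 / (A * exp(E / B) - 1)."""
    denominator = a * math.exp(energy / b) - 1.0
    if denominator <= 0.0:
        # The Bose-Einstein form is only defined when A * exp(E / B) > 1.
        raise ValueError("Bose-Einstein occupation requires A * exp(E / B) > 1")
    return 1.0 / denominator


# Illustrative (assumed) constants; in an actual analysis A and B would be fitted to data.
A, B = 1.01, 10.0
for energy in (0.0, 10.0, 50.0):
    mb = maxwell_boltzmann(energy, A, B)
    be = bose_einstein(energy, A, B)
    # BE/MB equals x / (x - 1) with x = A * exp(E / B), so the ratio tends to 1 as E grows.
    print(f"E = {energy:5.1f}   MB = {mb:.4f}   BE = {be:.4f}   BE/MB = {be / mb:.3f}")
```

Because the ratio depends only on x = A e^(E/B), the two models become practically indistinguishable at high energies, and any empirical discrimination between them rests on the low-energy, high-frequency end of the distribution.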

Entanglement as a Method to Reduce Uncertainty

Feb 12, 2023

Abstract: In physics, entanglement 'reduces' the entropy of an entity, because the (von Neumann) entropy of, e.g., a composite bipartite entity in a pure entangled state is systematically lower than the entropy of its component sub-entities. We show here that this 'genuinely non-classical reduction of entropy as a result of composition' also holds whenever two concepts combine in human cognition and, more generally, in human culture. We exploit these results to formulate a 'new hypothesis' on the nature of entanglement, namely, that the production of entanglement in the preparation of a composite entity can be seen as a 'dynamical process of collaboration between its sub-entities to reduce uncertainty', because, as a result of the preparation, the composite entity is in a pure state while its sub-entities are in a non-pure, or density, state. We identify within this entanglement a mechanism of contextual updating and illustrate the mechanism with the example we analyze. Our hypothesis naturally explains the 'non-classical nature' of some quantum logical connectives as being due to Bell-type correlations.
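
The entropy claim in the first sentence can be checked numerically in the simplest physical case, a two-qubit Bell state. The sketch below assumes NumPy and uses base-2 logarithms (entropy in bits); it computes the von Neumann entropy S(rho) = -Tr(rho log2 rho) of the pure composite state and of the reduced (density) state of one sub-entity obtained by partial trace. The helper names are illustrative and not taken from the paper.

```python
import numpy as np


def von_neumann_entropy(rho: np.ndarray) -> float:
    """S(rho) = -Tr(rho log2 rho), computed from the eigenvalues of a density matrix."""
    eigenvalues = np.linalg.eigvalsh(rho)
    # Discard (numerically) zero eigenvalues, since 0 * log 0 is taken to be 0.
    nonzero = eigenvalues[eigenvalues > 1e-12]
    return float(-np.sum(nonzero * np.log2(nonzero)))


def reduced_state_a(rho_ab: np.ndarray, dim_a: int, dim_b: int) -> np.ndarray:
    """Partial trace over sub-entity B of a density matrix on C^dim_a (x) C^dim_b."""
    return np.trace(rho_ab.reshape(dim_a, dim_b, dim_a, dim_b), axis1=1, axis2=3)


# Bell state (|00> + |11>) / sqrt(2): a pure entangled state of two qubits.
psi = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2.0)
rho_ab = np.outer(psi, psi.conj())
rho_a = reduced_state_a(rho_ab, 2, 2)

print(von_neumann_entropy(rho_ab))  # composite entity in a pure state: about 0 bits
print(von_neumann_entropy(rho_a))   # sub-entity in a maximally mixed state: about 1 bit
```

The composite entity thus carries less entropy than either of its parts, which is the 'reduction of entropy as a result of composition' that has no analogue for classical (Shannon) entropy of a joint distribution.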

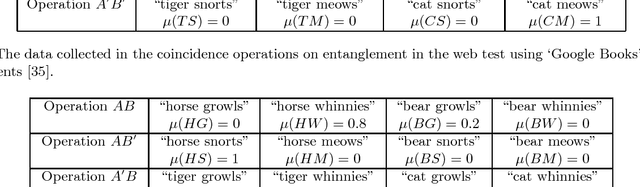

Development of a Thermodynamics of Human Cognition and Human Culture

Dec 24, 2022

Abstract: Inspired by foundational studies in classical and quantum physics, and by information retrieval studies in quantum information theory, we have recently proved that the notions of 'energy' and 'entropy' can be consistently introduced in human language and, more generally, in human culture. First, if energy is attributed to words according to their frequency of appearance in a text, then the ensuing energy levels are distributed non-classically, namely, they obey Bose-Einstein, rather than Maxwell-Boltzmann, statistics, as a consequence of the genuinely 'quantum indistinguishability' of the words that appear in the text. Second, the 'quantum entanglement' due to the way meaning is carried by a text reduces the (von Neumann) entropy of the words that appear in the text, a behaviour which cannot be explained within classical (thermodynamic or information) entropy. We claim here that this 'quantum-type behaviour is valid in general in human cognition', namely, any text is conceptually more concrete than the words composing it, which entails that the entropy of the overall text decreases. This result can be extended to human culture, whose collaborative entities have lower entropy than their constituent elements. We use these findings to propose the development of a new 'non-classical thermodynamic theory for human cognition and human culture', which bridges concepts and quantum entities and agrees with some recent findings on the conceptual, not physical, nature of quantum entities.
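
The first step, attributing energy to words according to their frequency, can be sketched as follows. The convention here is an assumption for illustration and not necessarily the authors' exact procedure: distinct words are ranked by decreasing count, the word of rank i receives energy level E_i = i (so the most frequent word sits at the ground level E_0 = 0), and its count is taken as the occupation number N(E_i). The function name `energy_levels` and the sample sentence are hypothetical.

```python
from collections import Counter


def energy_levels(text: str) -> list[tuple[str, int, int]]:
    """Return (word, energy_level, occupation) triples for the words of `text`.

    Hypothetical convention: the most frequent word gets energy level 0, the next
    one level 1, and so on; the occupation number is the word's count in the text.
    Ties are broken alphabetically only to make the output deterministic.
    """
    counts = Counter(text.lower().split())
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(word, level, count) for level, (word, count) in enumerate(ranked)]


sample = "the cat and the dog and the bird"
levels = energy_levels(sample)
# Total energy of the text: sum over levels of occupation number times energy.
total_energy = sum(level * count for _, level, count in levels)

for word, level, count in levels:
    print(f"E = {level}   N = {count}   {word}")
print("total energy:", total_energy)
```

Under this convention the occupation numbers decrease with energy by construction, and the statistical question addressed in the abstract is which functional form, Bose-Einstein or Maxwell-Boltzmann, fits that decrease better.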

Representing Attitudes Towards Ambiguity in Managerial Decisions

Jul 10, 2019

Abstract: We provide here a general mathematical framework to model attitudes towards ambiguity which uses the formalism of quantum theory as a "purely mathematical formalism, detached from any physical interpretation". We show that the quantum-theoretic framework not only enables modelling of the "Ellsberg paradox" but also successfully applies to more concrete human decision-making (DM) tests involving financial, managerial and medical decisions. In particular, we provide a faithful mathematical representation of various empirical studies which reveal that attitudes of managers towards uncertainty shift from "ambiguity seeking" to "ambiguity aversion", and vice versa, thus exhibiting "hope effects" and "fear effects" in management decisions. The present framework opens a bold and promising new direction towards the development of a unified theory of decisions in the presence of uncertainty.

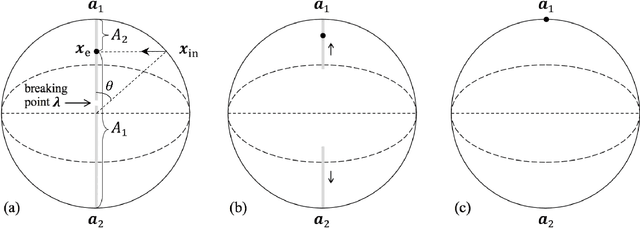

Explaining versus Describing Human Decisions. Hilbert Space Structures in Decision Theory

Apr 04, 2019

Abstract: Despite the impressive success of quantum structures in modelling long-standing human judgement and decision puzzles, the 'quantum cognition research programme' still faces challenges regarding its explanatory power. Indeed, quantum models introduce new parameters, which may fit empirical data without necessarily explaining them. Also, one may wonder whether more general non-classical structures are better equipped to model cognitive phenomena. In this paper, we provide a 'realistic-operational foundation of decision processes' using a known decision-making puzzle, the 'Ellsberg paradox', as a case study. Then, we elaborate a novel representation of the Ellsberg decision situation by applying standard quantum correspondence rules which map realistic-operational entities into quantum mathematical terms. This result opens the way towards an independent, foundational rather than phenomenological, motivation for a general use of quantum Hilbert space structures in human cognition.

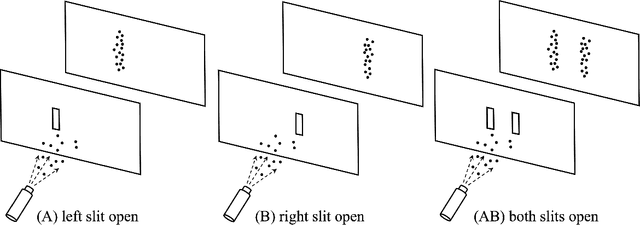

Quantum-theoretic Modeling in Computer Science: A complex Hilbert space model for entangled concepts in corpuses of documents

Jan 05, 2019

Abstract: We work out a quantum-theoretic model in complex Hilbert space of a recently performed test on co-occurrences of two concepts and their combination in retrieval processes on specific corpuses of documents. The test violated the Clauser-Horne-Shimony-Holt version of the Bell inequalities ('CHSH inequality'), thus indicating the presence of entanglement between the combined concepts. We make use of a recently elaborated 'entanglement scheme' and represent the collected data in the tensor product of the Hilbert spaces of the individual concepts, showing that the identified violation is due to the occurrence of a strong form of entanglement, involving both states and measurements and reflecting the meaning connection between the component concepts. These results provide a significant confirmation of the presence of quantum structures in corpuses of documents, as is the case for the entanglement identified in human cognition.
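
For readers less familiar with the CHSH inequality, the sketch below computes the CHSH quantity S = E(a,b) + E(a,b') + E(a',b) - E(a',b') for a two-qubit physical example, assuming NumPy; it does not use the authors' document-retrieval data. Local (classical) models satisfy |S| <= 2, whereas a maximally entangled state with suitably chosen measurement angles reaches 2*sqrt(2). The angles and helper names are illustrative choices.

```python
import numpy as np

# Pauli matrices used to build +1/-1 valued observables in the x-z plane.
PAULI_Z = np.array([[1.0, 0.0], [0.0, -1.0]])
PAULI_X = np.array([[0.0, 1.0], [1.0, 0.0]])


def observable(theta: float) -> np.ndarray:
    """Dichotomic observable cos(theta) Z + sin(theta) X, with eigenvalues +1 and -1."""
    return np.cos(theta) * PAULI_Z + np.sin(theta) * PAULI_X


def correlation(psi: np.ndarray, theta_a: float, theta_b: float) -> float:
    """Expectation value E(a, b) = <psi| A(a) (x) B(b) |psi> for a real state vector psi."""
    joint = np.kron(observable(theta_a), observable(theta_b))
    return float(psi @ joint @ psi)


def chsh(psi: np.ndarray, a: float, a2: float, b: float, b2: float) -> float:
    """CHSH quantity S = E(a,b) + E(a,b') + E(a',b) - E(a',b')."""
    return (correlation(psi, a, b) + correlation(psi, a, b2)
            + correlation(psi, a2, b) - correlation(psi, a2, b2))


angles = (0.0, np.pi / 2, np.pi / 4, -np.pi / 4)            # a, a', b, b'
bell_state = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2.0)  # (|00> + |11>) / sqrt(2)
product_state = np.array([1.0, 0.0, 0.0, 0.0])              # |00>, not entangled

s_bell = chsh(bell_state, *angles)
s_product = chsh(product_state, *angles)
print(f"Bell state:    S = {s_bell:.4f}  (exceeds the classical bound 2)")
print(f"Product state: S = {s_product:.4f}")
```

In an empirical test such as the one on corpuses of documents, each E would instead be estimated from outcome counts, for instance as E = (N(+,+) + N(-,-) - N(+,-) - N(-,+)) / N, and the resulting S compared with the bound 2.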

Quantum Structures in Human Decision-making: Towards Quantum Expected Utility

Oct 29, 2018

Abstract: 'Ellsberg thought experiments' and empirical confirmation of Ellsberg preferences pose serious challenges to 'subjective expected utility theory' (SEUT). We have recently elaborated a quantum-theoretic framework for human decisions under uncertainty which satisfactorily copes with the Ellsberg paradox and other puzzles of SEUT. We apply here the quantum-theoretic framework to the 'Ellsberg two-urn example', showing that the paradox can be explained by assuming a state change of the conceptual entity that is the object of the decision (the 'decision-making entity', or 'DM entity') and representing subjective probabilities by quantum probabilities. We also model, within the above framework, the empirical data we collected in a DM test on human participants. The obtained results are relevant, as they provide a way to model real-life decisions, e.g., financial and medical ones, that show the same empirical patterns as the two-urn experiment.
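
Why the two-urn example challenges SEUT can be seen with a short check. The setting assumed here is the usual textbook one: a known urn with 50 red and 50 black balls, and an unknown urn with 100 red and black balls in unknown proportion. The typical preference pattern is to bet on red from the known urn rather than red from the unknown urn, and also on black from the known urn rather than black from the unknown urn. The sketch searches a grid of subjective probabilities q = P(red from the unknown urn) for one that rationalises both preferences under expected utility; it only illustrates the paradox, not the authors' quantum-theoretic resolution, and the function name is hypothetical.

```python
from fractions import Fraction


def seu_consistent(q: Fraction) -> bool:
    """True if subjective probability q = P(red from unknown urn) rationalises both
    typical Ellsberg preferences under subjective expected utility.

    Winning is normalised to utility 1, so a bet's expected utility is its win probability.
    (i)  red from the known urn strictly preferred to red from the unknown urn:   1/2 > q
    (ii) black from the known urn strictly preferred to black from the unknown urn: 1/2 > 1 - q
    """
    known = Fraction(1, 2)
    prefers_known_red = known > q
    prefers_known_black = known > 1 - q
    return prefers_known_red and prefers_known_black


# Exact rational grid of candidate subjective probabilities 0, 0.01, ..., 1.
grid = [Fraction(k, 100) for k in range(101)]
consistent = [q for q in grid if seu_consistent(q)]
print(consistent)  # empty list: no single q supports both preferences
```

The grid merely illustrates the algebra: condition (i) requires q < 1/2 and condition (ii) requires q > 1/2, so no subjective probability in [0, 1] works. Exact fractions are used so that the boundary case q = 1/2 is not blurred by floating-point rounding.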

Modeling Meaning Associated with Documental Entities: Introducing the Brussels Quantum Approach

Aug 03, 2018

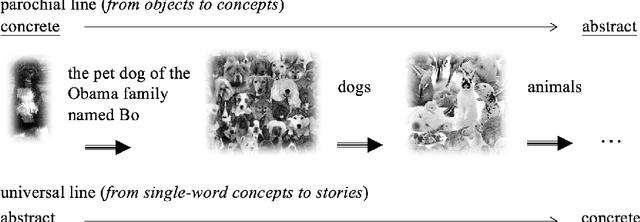

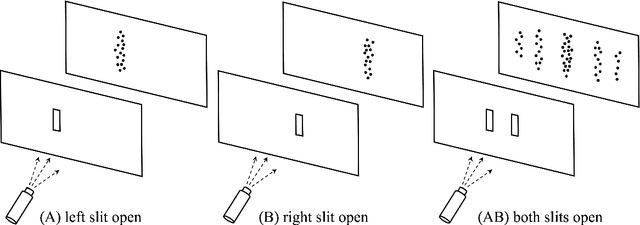

Abstract: We show that the Brussels operational-realistic approach to quantum physics and quantum cognition offers a fundamental strategy for modeling the meaning associated with collections of documental entities. To do so, we take the World Wide Web as a paradigmatic example and emphasize the importance of distinguishing the Web, made of printed documents, from a more abstract meaning entity, which we call the Quantum Web, or QWeb, where the former is considered to be the collection of traces that can be left by the latter, in specific measurements, similarly to how a non-spatial quantum entity, like an electron, can leave localized traces of impact on a detection screen. The double-slit experiment is extensively used to illustrate the rationale of the modeling, which is guided by how physicists constructed quantum theory to describe the behavior of microscopic entities. We also emphasize that the superposition principle and the associated interference effects are not sufficient to model all experimental probabilistic data, like those obtained by counting the relative number of documents containing certain words and co-occurrences of words. For this, additional effects, like context effects, must also be taken into consideration.

Quantum cognition goes beyond-quantum: modeling the collective participant in psychological measurements

Feb 24, 2018

Abstract: In psychological measurements, two levels should be distinguished: the 'individual level', relative to the different participants in a given cognitive situation, and the 'collective level', relative to the overall statistics of their outcomes, which we propose to associate with a notion of 'collective participant'. When the distinction between these two levels is properly formalized, it reveals why the modeling of the collective participant generally requires beyond-quantum (non-Bornian) probabilistic models when sequential measurements at the individual level are considered, even though a pure quantum description remains valid for single-measurement situations.

Towards a Quantum World Wide Web

Jan 29, 2018

Abstract: We elaborate a quantum model for the meaning associated with corpora of written documents, like the pages forming the World Wide Web. To that end, we are guided by how physicists constructed quantum theory for microscopic entities, which, unlike classical objects, cannot be fully represented in our spatial theater. We suggest that a similar construction needs to be carried out by linguists and computational scientists to capture the full meaning carried by collections of documental entities. More precisely, we show how to associate a quantum-like 'entity of meaning' with a 'language entity formed by printed documents', considering the latter as the collection of traces that are left by the former in specific results of search actions that we describe as measurements. In other words, we offer a perspective where a collection of documents, like the Web, is described as the space of manifestation of a more complex entity, the QWeb, which is the object of our modeling, drawing its inspiration from previous studies on operational-realistic approaches to quantum physics and quantum modeling of human cognition and decision-making. We emphasize that a consistent QWeb model needs to account for the observed correlations between words appearing in printed documents, e.g., co-occurrences, as the latter would depend on the 'meaning connections' existing between the concepts that are associated with these words. In that respect, we show that both 'context and interference (quantum) effects' are required to explain the probabilities calculated by counting the relative number of documents containing certain words and co-occurrences of words.
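
The probabilities referred to in the last sentence are obtained by counting documents. A minimal sketch of that counting step is shown below, assuming plain whitespace tokenisation and a tiny hypothetical corpus (not data from the paper); a QWeb model would then have to reproduce such numbers, and the context and interference modelling itself is not reproduced here. The function name `document_probabilities` is hypothetical.

```python
def document_probabilities(docs: list[str], word_a: str, word_b: str) -> dict[str, float]:
    """Relative numbers of documents containing word_a, word_b, both, or at least one."""
    if not docs:
        raise ValueError("the corpus must contain at least one document")
    # Each document is reduced to the set of its (lower-cased) words.
    token_sets = [set(doc.lower().split()) for doc in docs]
    n = len(token_sets)
    has_a = sum(word_a in tokens for tokens in token_sets)
    has_b = sum(word_b in tokens for tokens in token_sets)
    has_both = sum(word_a in tokens and word_b in tokens for tokens in token_sets)
    has_either = sum(word_a in tokens or word_b in tokens for tokens in token_sets)
    return {
        "A": has_a / n,
        "B": has_b / n,
        "A and B": has_both / n,    # co-occurrence of the two words
        "A or B": has_either / n,
    }


# Hypothetical toy corpus, for illustration only.
corpus = [
    "the animal ran across the field",
    "a fruit fell from the tree",
    "the animal ate the fruit",
    "stock prices fell today",
]
print(document_probabilities(corpus, "animal", "fruit"))
```

Counting whole documents rather than word occurrences matches the 'relative number of documents' in the abstract; a finer-grained model could instead count co-occurrences within sentences or fixed-size windows.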