Mason Kortz

AI Procurement Checklists: Revisiting Implementation in the Age of AI Governance

Apr 23, 2024

Abstract: Public sector use of AI has been quietly on the rise for the past decade, but only recently have efforts to regulate it entered the cultural zeitgeist. While simple to articulate, promoting ethical and effective rollouts of AI systems in government is a notoriously elusive task. On the one hand, there are hard-to-address pitfalls associated with AI-based tools, including concerns about bias towards marginalized communities, safety, and gameability. On the other, there is pressure not to make it too difficult to adopt AI, especially in the public sector, which typically has fewer resources than the private sector; conserving scarce government resources is often the draw of using AI-based tools in the first place. These tensions create a real risk that procedures built to ensure marginalized groups are not hurt by government use of AI will, in practice, be performative and ineffective. To inform the latest wave of regulatory efforts in the United States, we look to jurisdictions with mature regulations around government AI use. We report on lessons learned by officials in Brazil, Singapore and Canada, who have collectively implemented risk categories, disclosure requirements and assessments into the way they procure AI tools. In particular, we investigate two implemented checklists: the Canadian Directive on Automated Decision-Making (CDADM) and the World Economic Forum's AI Procurement in a Box (WEF). We detail three key pitfalls, around expertise, risk frameworks and transparency, that can decrease the efficacy of regulations aimed at government AI use, and we suggest avenues for improvement.

Accountability of AI Under the Law: The Role of Explanation

Nov 21, 2017

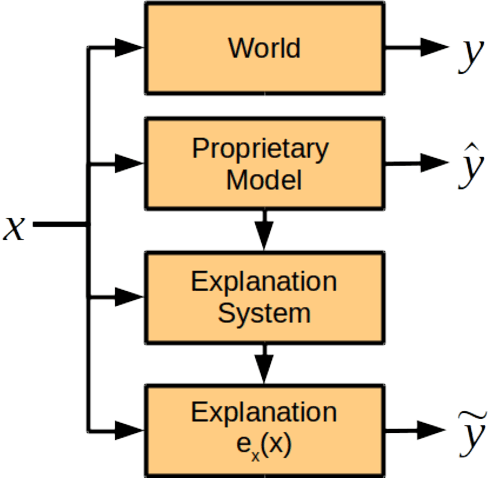

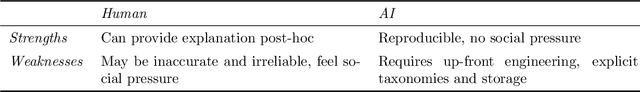

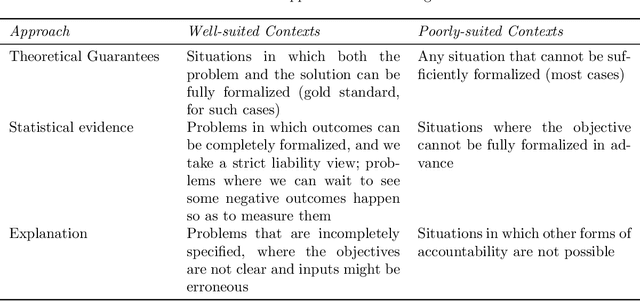

Abstract: The ubiquity of systems using artificial intelligence or "AI" has brought increasing attention to how those systems should be regulated. The choice of how to regulate AI systems will require care. AI systems have the potential to synthesize large amounts of data, allowing for greater levels of personalization and precision than ever before; applications range from clinical decision support to autonomous driving and predictive policing. That said, there exist legitimate concerns about the intentional and unintentional negative consequences of AI systems. There are many ways to hold AI systems accountable. In this work, we focus on one: explanation. Questions about a legal right to explanation from AI systems were recently debated in the context of the EU General Data Protection Regulation, and thus thinking carefully about when and how explanation from AI systems might improve accountability is timely. In this work, we review contexts in which explanation is currently required under the law, and then list the technical considerations that must be addressed if we desire AI systems that can provide the kinds of explanations that are currently required of humans.
