Martin Tröschel

Analyzing Power Grid, ICT, and Market Without Domain Knowledge Using Distributed Artificial Intelligence

Jun 10, 2020

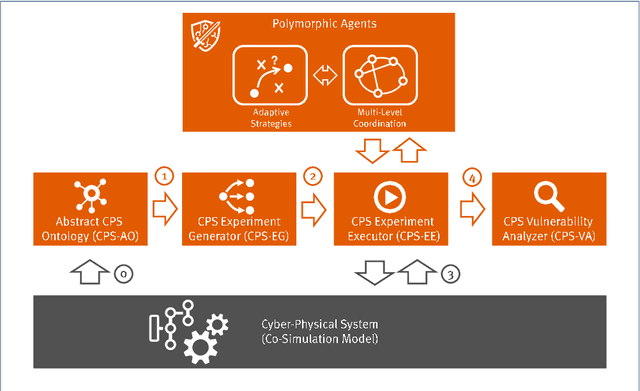

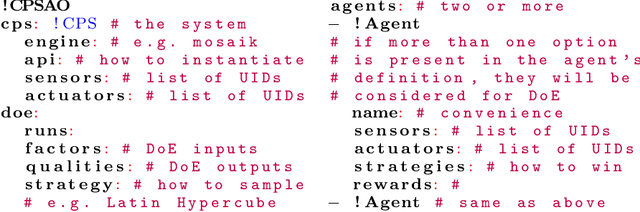

Abstract: Modern cyber-physical systems (CPS), such as our energy infrastructure, are becoming increasingly complex: an ever-higher share of Artificial Intelligence (AI)-based technologies use the Information and Communication Technology (ICT) facet of energy systems to optimize operation, improve cost efficiency, and reach CO2 goals worldwide. At the same time, markets with increased flexibility and ever-shorter trade horizons enable the multi-stakeholder situation emerging in this setting. These systems still form critical infrastructures that must operate with the highest reliability. However, today's CPS are becoming too complex to be analyzed with the traditional monolithic approach. In that approach, each domain (e.g., the power grid, ICT, and the energy market) is considered a separate entity, and dependencies and side effects are ignored. To achieve an overall analysis, we introduce the concept of applying distributed artificial intelligence as a self-adaptive analysis tool that analyzes the dependencies between CPS domains by attacking them. It eschews pre-configured domain knowledge. Instead, it explores the CPS domains for emergent risk situations and exploitable loopholes in codices, with a focus on rational market actors that exploit the system while still following the market rules.

Analyzing Cyber-Physical Systems from the Perspective of Artificial Intelligence

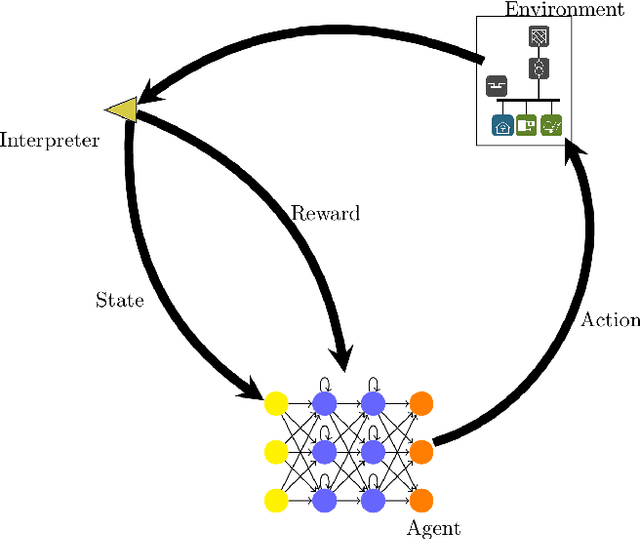

Aug 21, 2019

Abstract: Principles of modern cyber-physical system (CPS) analysis are based on analytical methods that depend on whether safety or liveness requirements are considered. Complexity is abstracted through different techniques, ranging from stochastic modelling to contracts. However, distributed heuristics and Artificial Intelligence (AI)-based approaches introduce considerable uncertainty. So do the user perspective and unpredictable effects such as accidents or the weather. Together, this uncertainty is enough to warrant reinforcement-learning-based approaches. This paper compares traditional approaches in the domain of CPS modelling and analysis with the AI researcher's perspective on exploring unknown complex systems.

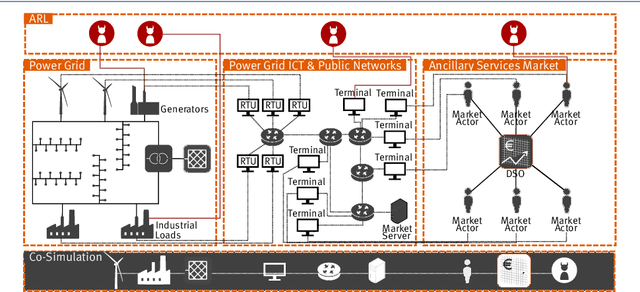

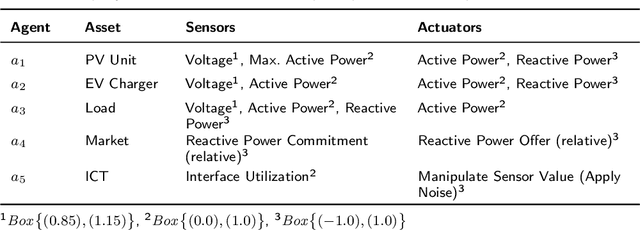

Adversarial Resilience Learning - Towards Systemic Vulnerability Analysis for Large and Complex Systems

Nov 15, 2018

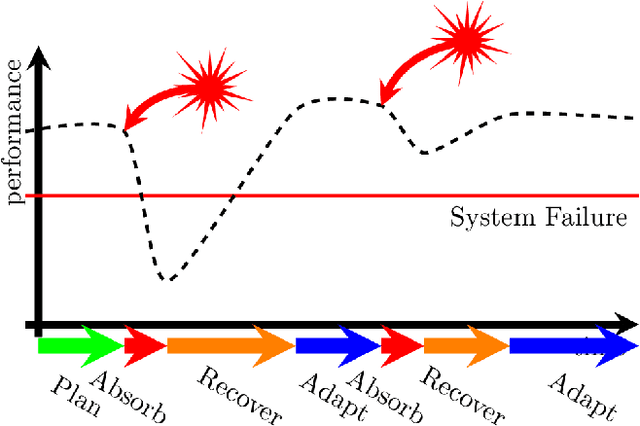

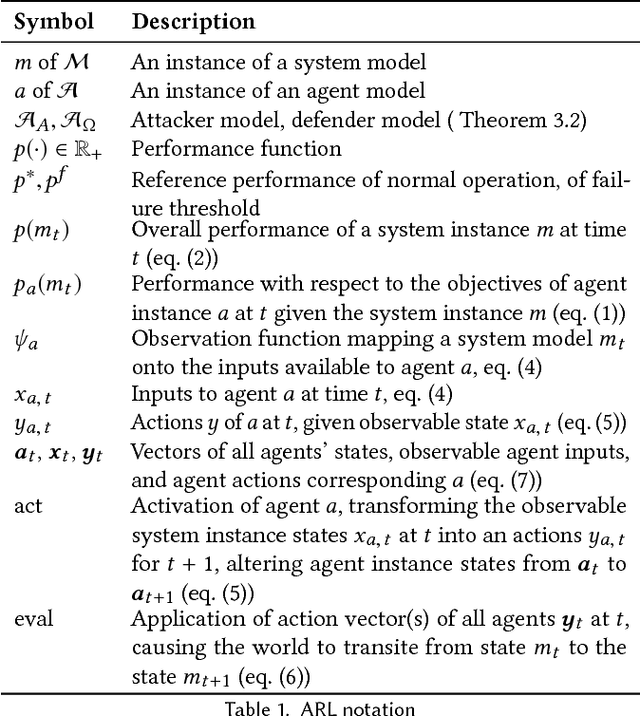

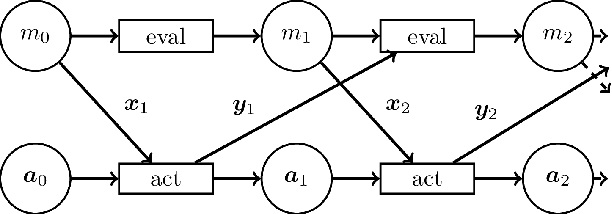

Abstract: This paper introduces Adversarial Resilience Learning (ARL), a concept to model, train, and analyze artificial neural networks as representations of competitive agents in highly complex systems. In our examples, the agents normally take one of two roles. Attackers aim at worsening defined performance indicators of the system, while defenders aim at improving or keeping them. Our concept provides adaptive, repeatable, actor-based testing with a chance of detecting previously unknown attack vectors. We provide the constitutive nomenclature of ARL. Based on it, we describe the experimental setups and results of a preliminary implementation of ARL in simulated power systems.