Jan-Menno Memmen

Adversarial Resilience Learning - Towards Systemic Vulnerability Analysis for Large and Complex Systems

Nov 15, 2018

Figures and Tables:

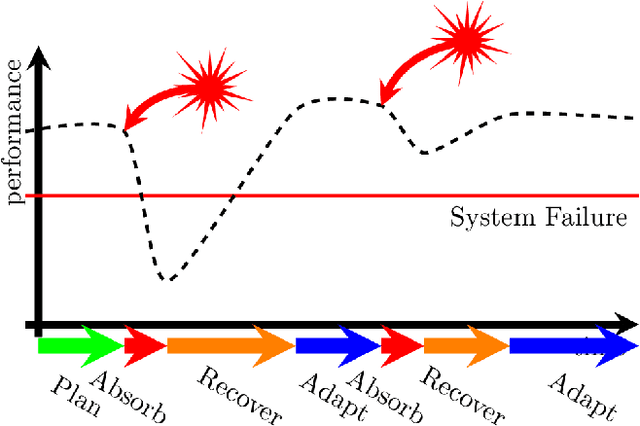

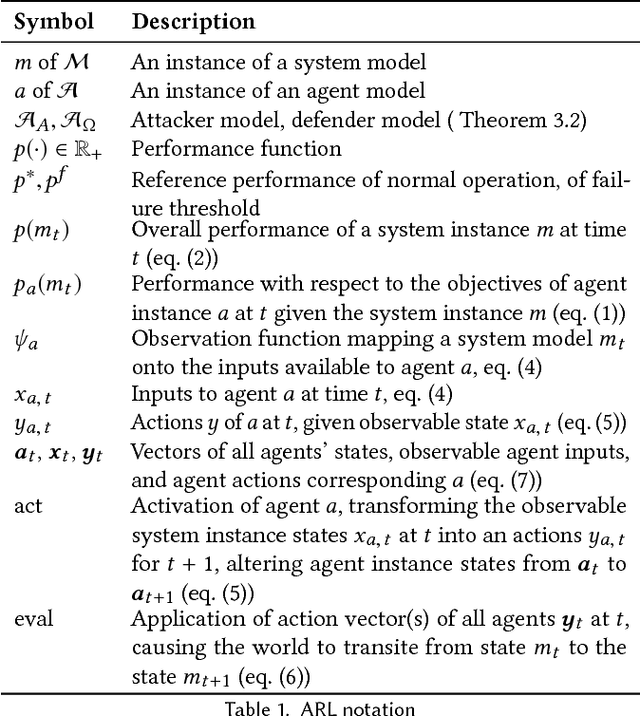

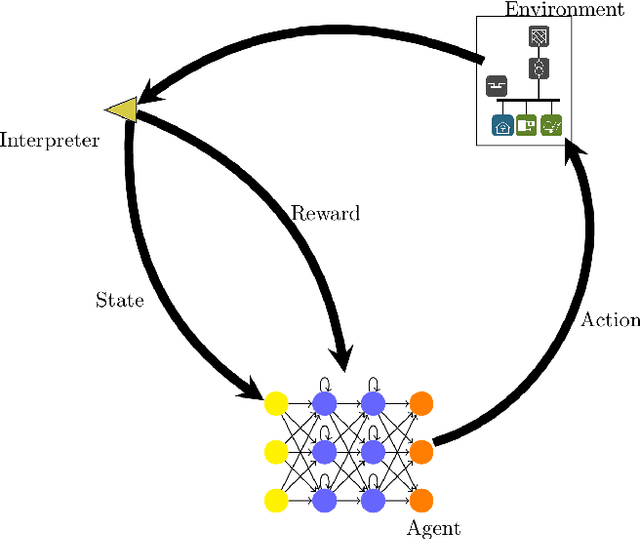

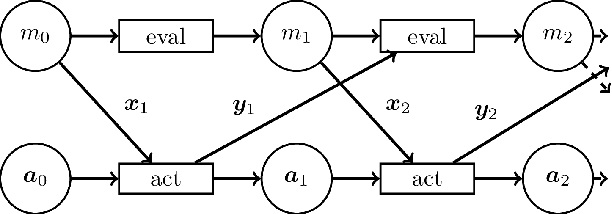

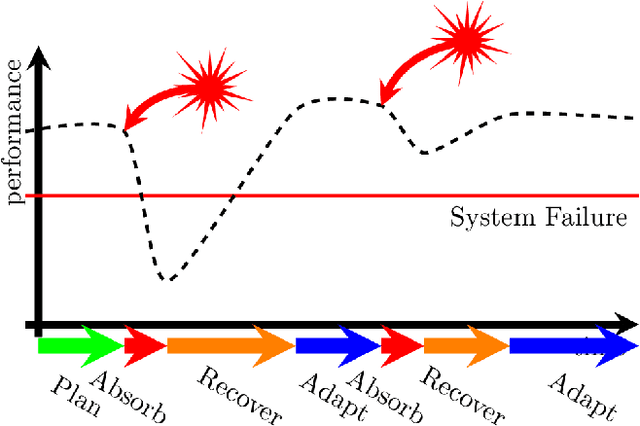

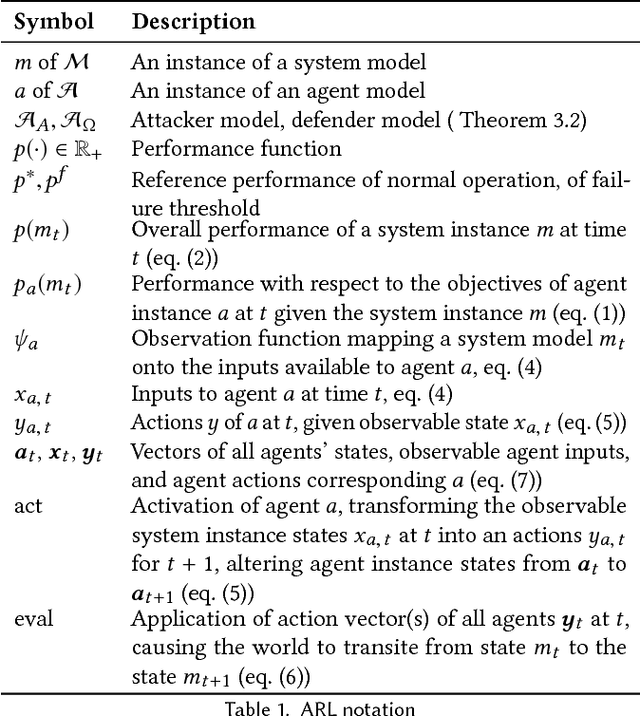

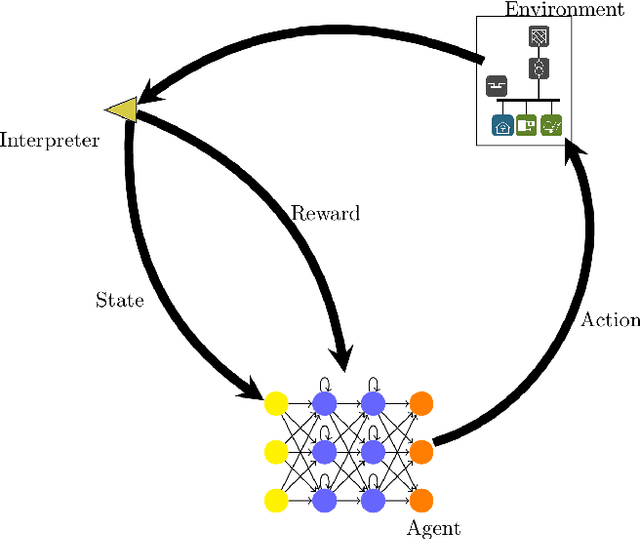

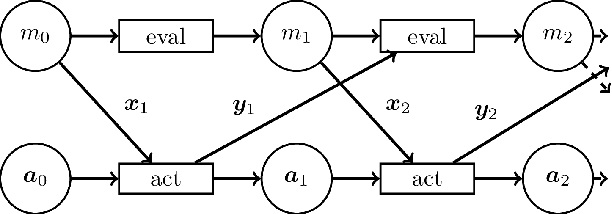

Abstract: This paper introduces Adversarial Resilience Learning (ARL), a concept to model, train, and analyze artificial neural networks as representations of competitive agents in highly complex systems. In our examples, the agents normally take the roles of attackers or defenders that aim at worsening or improving (or keeping, respectively) defined performance indicators of the system. Our concept provides adaptive, repeatable, actor-based testing with a chance of detecting previously unknown attack vectors. We provide the constitutive nomenclature of ARL and, based on it, the description of experimental setups and results of a preliminary implementation of ARL in simulated power systems.

* 10 pages
