Martin Ralf Oswald

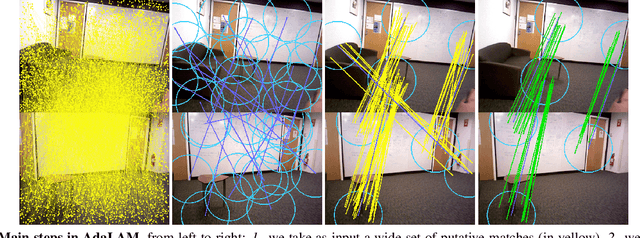

AdaLAM: Revisiting Handcrafted Outlier Detection

Jun 07, 2020

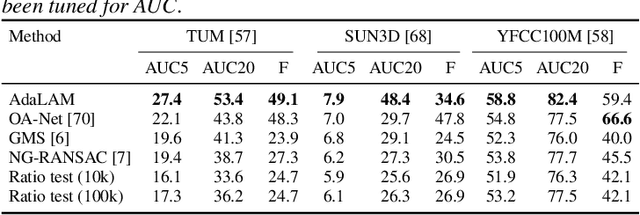

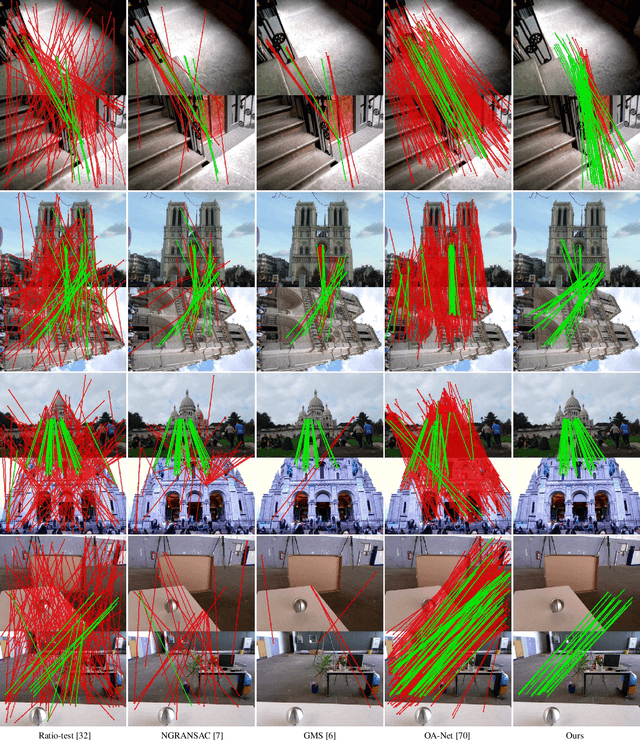

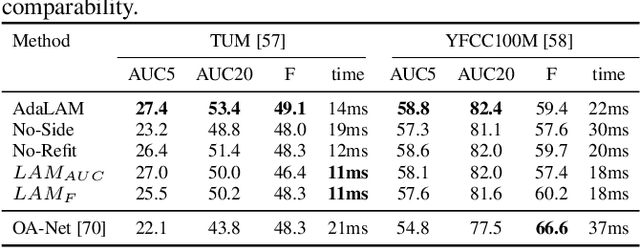

Abstract: Local feature matching is a critical component of many computer vision pipelines, including, among others, Structure-from-Motion, SLAM, and Visual Localization. However, due to limitations in the descriptors, raw matches are often contaminated by a majority of outliers. As a result, outlier detection is a fundamental problem in computer vision, and a wide range of approaches have been proposed over the last decades. In this paper, we revisit handcrafted approaches to outlier filtering. Based on best practices, we propose a hierarchical pipeline for effective outlier detection and integrate novel ideas, which together lead to AdaLAM, an efficient and competitive approach to outlier rejection. AdaLAM is designed to effectively exploit modern parallel hardware, resulting in a very fast, yet very accurate, outlier filter. We validate AdaLAM on multiple large and diverse datasets, and we submit to the Image Matching Challenge (CVPR 2020), obtaining competitive results with simple baseline descriptors. We show that AdaLAM is more than competitive with the current state of the art, in terms of both efficiency and effectiveness.
