Martin Kreuzer

Learning with Cross-Kernels and Ideal PCA

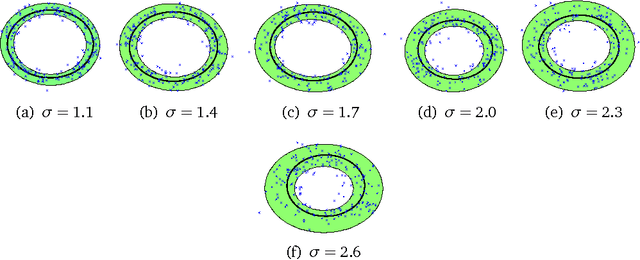

Jun 10, 2014

Abstract: We describe how cross-kernel matrices, that is, kernel matrices between the data and a custom-chosen set of "feature spanning points", can be used for learning. The main potential of cross-kernels lies in the fact that (a) only one side of the matrix scales with the number of data points, and (b) cross-kernels, as opposed to the usual kernel matrices, can be used to certify for the data manifold. Our theoretical framework, which is based on a duality involving the feature space and vanishing ideals, indicates that cross-kernels have the potential to be used for any kind of kernel learning. We present a novel algorithm, Ideal PCA (IPCA), which cross-kernelizes PCA. We demonstrate on real and synthetic data that IPCA makes it possible to (a) obtain PCA-like features faster and (b) extract novel and empirically validated features certifying for the data manifold.
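To make the shape argument in this abstract concrete, here is a minimal sketch of a cross-kernel matrix between N data points and m feature spanning points. It is not the authors' IPCA implementation: the inhomogeneous polynomial kernel, the random choice of spanning points, and the names `polynomial_kernel` and `cross_kernel_matrix` are all assumptions made for illustration.

```python
import random


def polynomial_kernel(x, z, degree=2, offset=1.0):
    """Inhomogeneous polynomial kernel k(x, z) = (<x, z> + offset) ** degree.

    The kernel choice is an assumption; the abstract does not fix one.
    """
    dot = sum(a * b for a, b in zip(x, z))
    return (dot + offset) ** degree


def cross_kernel_matrix(data, spanning_points, kernel=polynomial_kernel):
    """Return the N x m matrix with entries kernel(data[i], spanning_points[j])."""
    return [[kernel(x, z) for z in spanning_points] for x in data]


rng = random.Random(0)

# N = 200 data points in 3 dimensions (synthetic, for illustration only).
data = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(200)]

# m = 10 feature spanning points, drawn at random here (hypothetical choice).
spanning_points = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(10)]

K = cross_kernel_matrix(data, spanning_points)
n_rows, n_cols = len(K), len(K[0])
```

Only the row count of `K` grows with the number of data points; the column count is fixed by the number of spanning points. A usual kernel matrix on the same data would instead be 200 x 200.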

Dual-to-kernel learning with ideals

Feb 01, 2014

Abstract: In this paper, we propose a theory which unifies kernel learning and symbolic algebraic methods. We show that both worlds are inherently dual to each other, and we use this duality to combine the structure-awareness of algebraic methods with the efficiency and generality of kernels. The main idea lies in relating polynomial rings to feature space, and ideals to manifolds, then exploiting this generative-discriminative duality on kernel matrices. We illustrate this by proposing two algorithms, IPCA and AVICA, for simultaneous manifold and feature learning, and test their accuracy on synthetic and real-world data.
