Martin Isaksson

Adaptive Expert Models for Personalization in Federated Learning

Jun 15, 2022

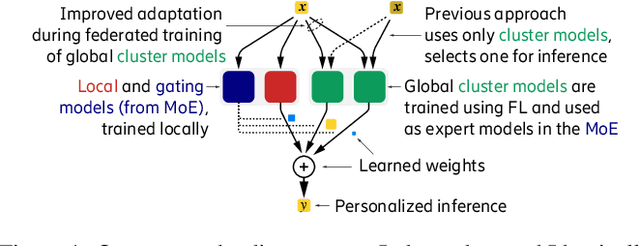

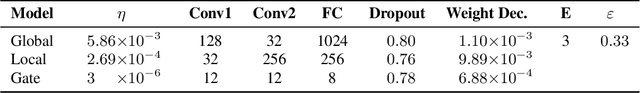

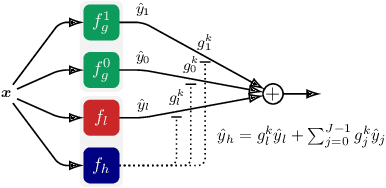

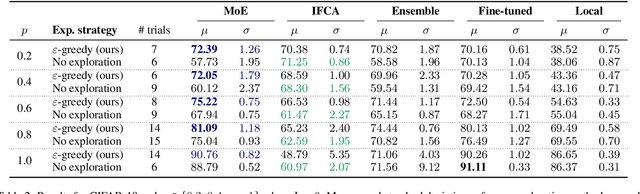

Abstract: Federated Learning (FL) is a promising framework for distributed learning when data is private and sensitive. However, the state-of-the-art solutions in this framework are not optimal when data is heterogeneous and non-Independent and Identically Distributed (non-IID). We propose a practical and robust approach to personalization in FL that adjusts to heterogeneous and non-IID data by balancing exploration and exploitation of several global models. To achieve our aim of personalization, we use a Mixture of Experts (MoE) that learns to group clients that are similar to each other, while using the global models more efficiently. We show that our approach achieves an accuracy up to 29.78 % and up to 4.38 % better compared to a local model in a pathological non-IID setting, even though we tune our approach in the IID setting.
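The abstract names two ingredients: a Mixture of Experts that weighs several models per client, and a balance between exploring and exploiting several global models. The sketch below illustrates both generically and is not the authors' implementation. It assumes each expert (for example, each global model plus the client's local model) outputs class probabilities, that a gating network has produced one logit per expert, and that exploration is done epsilon-greedily over per-model validation losses. The function names `mixture_prediction` and `select_global_model` are hypothetical.

```python
import math
import random
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over the gate logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixture_prediction(
    expert_probs: Sequence[Sequence[float]], gate_logits: Sequence[float]
) -> List[float]:
    """Hypothetical MoE output: gate-weighted average of expert class probabilities.

    expert_probs[i][c] is expert i's probability for class c;
    gate_logits[i] is the (assumed) gating network's score for expert i.
    """
    weights = softmax(gate_logits)
    n_classes = len(expert_probs[0])
    mixed = [0.0] * n_classes
    for w, probs in zip(weights, expert_probs):
        for c in range(n_classes):
            mixed[c] += w * probs[c]
    return mixed


def select_global_model(
    val_losses: Sequence[float], epsilon: float, rng: random.Random
) -> int:
    """Hypothetical epsilon-greedy choice of which global model a client trains.

    With probability epsilon, explore a uniformly random model; otherwise
    exploit the model with the lowest validation loss on the client's data.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(val_losses))
    return min(range(len(val_losses)), key=lambda i: val_losses[i])
```

Because the gate weights come from a softmax, the mixed output remains a valid probability distribution; with equal gate logits it reduces to a plain average of the experts.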

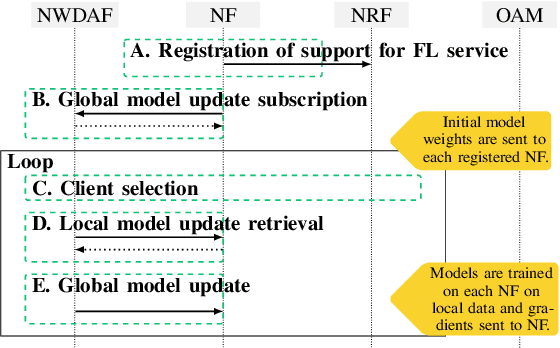

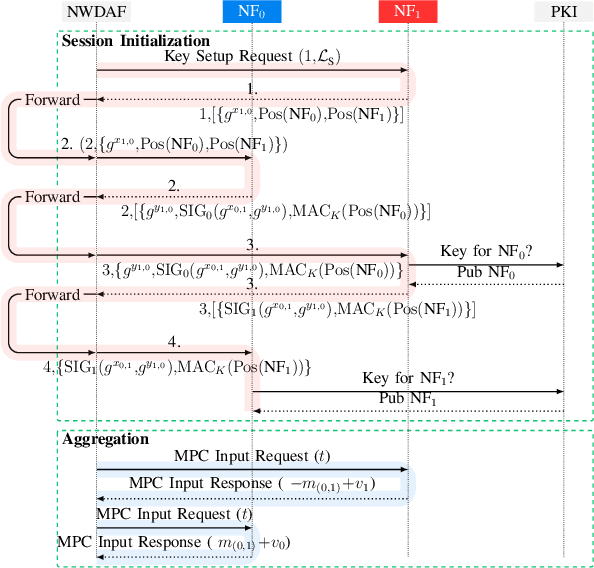

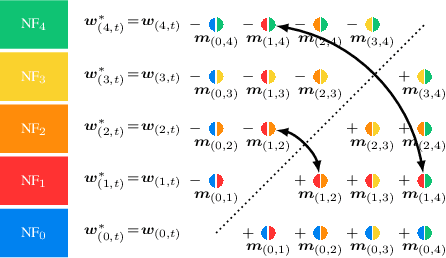

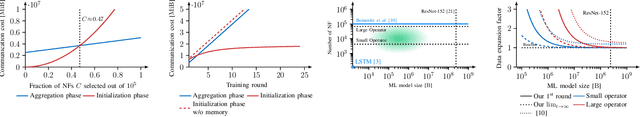

Secure Federated Learning in 5G Mobile Networks

Apr 14, 2020

Abstract: Machine Learning (ML) is an important enabler for optimizing, securing and managing mobile networks. This leads to increased collection and processing of data from network functions, which in turn may increase threats to sensitive end-user information. Consequently, mechanisms to reduce threats to end-user privacy are needed to take full advantage of ML. We seamlessly integrate Federated Learning (FL) into the 3GPP 5G Network Data Analytics (NWDA) architecture, and add a Multi-Party Computation (MPC) protocol for protecting the confidentiality of local updates. We evaluate the protocol and find that it has much lower overhead than previous work, without affecting ML performance.
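The abstract does not describe the MPC protocol itself. As a rough illustration of how MPC can protect local updates, the sketch below uses generic additive secret sharing, a common building block for secure aggregation, and is not the paper's protocol. Each client splits its fixed-point-encoded update into random shares that sum to the update modulo a prime. Each computing party adds up only the shares it receives, and combining the parties' partial sums reveals the aggregate without exposing any single client's update. The modulus `PRIME`, the fixed-point scale `SCALE`, and all function names are assumptions.

```python
import random
from typing import List, Sequence

PRIME = 2**61 - 1  # assumed modulus for share arithmetic
SCALE = 10**6      # assumed fixed-point scale for float updates


def encode(x: float) -> int:
    """Fixed-point encode a float into the field (negatives wrap around)."""
    return round(x * SCALE) % PRIME


def decode(v: int) -> float:
    """Map a field element back to a signed float."""
    if v > PRIME // 2:
        v -= PRIME
    return v / SCALE


def share(value: int, n_parties: int, rng: random.Random) -> List[int]:
    """Split value into n_parties random shares that sum to value mod PRIME."""
    shares = [rng.randrange(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(
    updates: Sequence[Sequence[float]], n_parties: int, rng: random.Random
) -> List[float]:
    """Aggregate client updates so that each party only sees random-looking shares."""
    dim = len(updates[0])
    # partial[j][k]: running sum of the shares party j holds for coordinate k.
    partial = [[0] * dim for _ in range(n_parties)]
    for update in updates:
        for k, x in enumerate(update):
            for j, s in enumerate(share(encode(x), n_parties, rng)):
                partial[j][k] = (partial[j][k] + s) % PRIME
    # Parties reveal only their partial sums; combining them yields the total.
    return [
        decode(sum(partial[j][k] for j in range(n_parties)) % PRIME)
        for k in range(dim)
    ]
```

For example, `secure_sum([[0.5, -1.25], [0.25, 2.0], [-0.1, 0.3]], 3, random.Random(0))` recovers approximately `[0.65, 1.05]`, the coordinate-wise sum of the three updates. The result is exact up to the fixed-point resolution, provided the aggregate stays well within `PRIME // 2` after scaling.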
