Martin Eichenlaub

Airway Label Prediction in Video Bronchoscopy: Capturing Temporal Dependencies Utilizing Anatomical Knowledge

Jul 17, 2023

Abstract: Purpose: Navigation guidance is a key requirement for a multitude of lung interventions using video bronchoscopy. State-of-the-art solutions focus on lung biopsies, using electromagnetic tracking and intraoperative image registration w.r.t. preoperative CT scans for guidance. The requirement of patient-specific CT scans hampers the use of navigation guidance in other settings such as intensive care units. Methods: This paper addresses navigation guidance based solely on bronchoscopy video data. In contrast to state-of-the-art approaches, we entirely omit the use of electromagnetic tracking and patient-specific CT scans. Guidance is enabled by means of topological bronchoscope localization w.r.t. an interpatient airway model. In particular, we take maximum advantage of the anatomical constraint that airway trees are traversed sequentially. This is realized by incorporating sequences of CNN-based airway likelihoods into a Hidden Markov Model. Results: Our approach is evaluated in multiple experiments inside a lung phantom model. By considering temporal context and using anatomical knowledge for regularization, we are able to improve the accuracy to up to 0.98, compared to 0.81 for a classification based on individual frames (weighted F1: 0.98 compared to 0.81). Conclusion: We combine CNN-based single-image classification of airway segments with anatomical constraints and temporal HMM-based inference for the first time. Our approach makes vision-only guidance for bronchoscopy interventions possible in the absence of electromagnetic tracking and patient-specific CT scans.
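The idea of feeding per-frame airway likelihoods into a Hidden Markov Model can be illustrated with a small Viterbi decoder. This is a minimal sketch under stated assumptions, not the authors' implementation: the three-segment tree in `ADJACENCY`, the `stay` probability of 0.8, the uniform split over neighbouring segments, and the example likelihoods are all hypothetical. The abstract only states that CNN likelihoods are combined with anatomical constraints in an HMM.

```python
import math

# Hypothetical toy airway tree: segments reachable from each segment in one step.
ADJACENCY = {
    "trachea": ["trachea", "left_main", "right_main"],
    "left_main": ["left_main", "trachea"],
    "right_main": ["right_main", "trachea"],
}
STATES = list(ADJACENCY)


def safe_log(p):
    """Logarithm with a small floor so zero likelihoods do not raise."""
    return math.log(max(p, 1e-12))


def transition_log_prob(src, dst, stay=0.8):
    """Assumed transition model: non-adjacent moves are forbidden."""
    if dst not in ADJACENCY[src]:
        return -math.inf
    if dst == src:
        return math.log(stay)
    n_moves = len(ADJACENCY[src]) - 1
    return math.log((1.0 - stay) / n_moves)


def viterbi(frame_likelihoods, start="trachea"):
    """Most likely segment sequence for a list of per-frame likelihood dicts."""
    # The scope is assumed to enter at `start`.
    score = {
        s: (0.0 if s == start else -math.inf) + safe_log(frame_likelihoods[0][s])
        for s in STATES
    }
    back = []
    for obs in frame_likelihoods[1:]:
        new_score, pointers = {}, {}
        for dst in STATES:
            best = max(STATES, key=lambda src: score[src] + transition_log_prob(src, dst))
            new_score[dst] = score[best] + transition_log_prob(best, dst) + safe_log(obs[dst])
            pointers[dst] = best
        score = new_score
        back.append(pointers)
    # Backtrack from the best final state.
    path = [max(STATES, key=score.get)]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    return path[::-1]


# Made-up CNN outputs; frame 3 is misclassified when read in isolation.
frames = [
    {"trachea": 0.8, "left_main": 0.1, "right_main": 0.1},
    {"trachea": 0.2, "left_main": 0.7, "right_main": 0.1},
    {"trachea": 0.1, "left_main": 0.3, "right_main": 0.6},
    {"trachea": 0.2, "left_main": 0.7, "right_main": 0.1},
]
framewise = [max(f, key=f.get) for f in frames]
smoothed = viterbi(frames)
```

In this toy sequence the frame-wise prediction jumps from `left_main` to `right_main` and back, which the tree topology does not allow in a single step, whereas the decoded path stays in `left_main`. That is the kind of regularization the abstract attributes to temporal context and anatomical knowledge.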

Weakly Supervised Airway Orifice Segmentation in Video Bronchoscopy

Aug 24, 2022

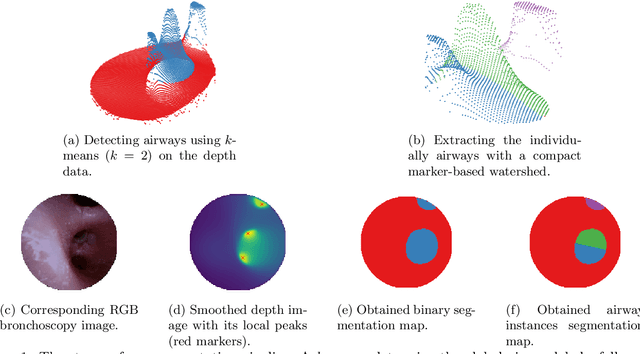

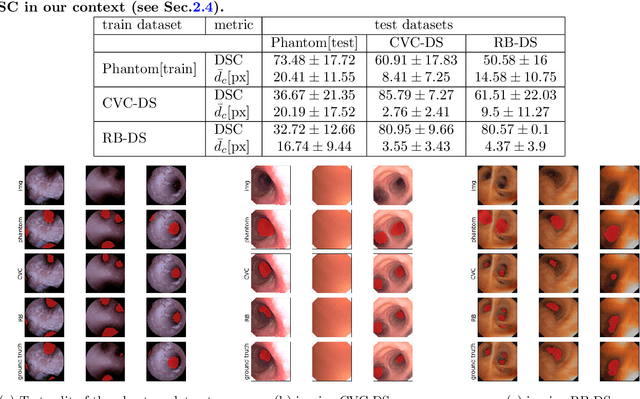

Abstract: Video bronchoscopy is routinely conducted for biopsies of lung tissue suspected of being cancerous, for monitoring COPD patients, and for clarifying acute respiratory problems in intensive care units. Navigation within complex bronchial trees is particularly challenging and physically demanding, requiring long-term experience on the part of physicians. This paper addresses the automatic segmentation of bronchial orifices in bronchoscopy videos. Deep learning-based approaches to this task are currently hampered by the lack of readily available ground truth segmentation data. Thus, we present a data-driven pipeline consisting of k-means clustering followed by a compact marker-based watershed algorithm, which generates airway instance segmentation maps from given depth images. In this way, these traditional algorithms serve as weak supervision for training a shallow CNN directly on RGB images, based solely on a phantom dataset. We evaluate the generalization capabilities of this model on two in-vivo datasets covering 250 frames from 21 different bronchoscopies. We demonstrate that its performance is comparable to that of models trained directly on in-vivo data, reaching an average error of 11 vs 5 pixels for the detected centers of the airway segmentation at an image resolution of 128x128. Our quantitative and qualitative results indicate that, in the context of video bronchoscopy, phantom data and weak supervision using non-learning-based approaches make it possible to gain a semantic understanding of airway structures.
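The weak-label idea, deriving airway instance maps from depth images without a learned model, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the pipeline from the paper: k-means is run on raw depth values only, and plain 4-connected component labelling stands in for the compact marker-based watershed. The function names and the toy depth map are hypothetical.

```python
def kmeans_1d(values, k=2, iters=20):
    """Plain 1-D k-means (Lloyd's algorithm) on depth values; requires k >= 2."""
    lo, hi = min(values), max(values)
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centers[i]))
            groups[nearest].append(v)
        # Keep the old center if a cluster ends up empty.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers


def label_orifices(depth, k=2):
    """Label 4-connected regions of the deepest depth cluster (0 = background)."""
    centers = kmeans_1d([v for row in depth for v in row], k)
    deepest = max(range(k), key=lambda i: centers[i])

    def is_deep(v):
        return min(range(k), key=lambda i: abs(v - centers[i])) == deepest

    h, w = len(depth), len(depth[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if labels[y][x] or not is_deep(depth[y][x]):
                continue
            count += 1
            labels[y][x] = count
            stack = [(y, x)]
            while stack:  # flood fill one region
                cy, cx = stack.pop()
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and not labels[ny][nx] and is_deep(depth[ny][nx]):
                        labels[ny][nx] = count
                        stack.append((ny, nx))
    return labels, count


def region_centers(labels, count):
    """Centroid (row, col) of each labelled region."""
    sums = {i: [0, 0, 0] for i in range(1, count + 1)}
    for y, row in enumerate(labels):
        for x, lab in enumerate(row):
            if lab:
                sums[lab][0] += y
                sums[lab][1] += x
                sums[lab][2] += 1
    return [(sy / n, sx / n) for sy, sx, n in sums.values()]


# Toy depth map: larger values are farther away, i.e. inside an airway opening.
depth = [
    [1, 1, 1, 1, 1, 1, 1],
    [1, 9, 9, 1, 1, 8, 1],
    [1, 9, 9, 1, 1, 8, 1],
    [1, 1, 1, 1, 1, 1, 1],
]
labels, count = label_orifices(depth)
centers = region_centers(labels, count)
```

The resulting label map could then serve as a training target for a CNN that sees only the RGB frame, and the region centroids correspond to the kind of detected centers on which the abstract's pixel error is measured.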
