Martin Caminada

Strong Admissibility, a Tractable Algorithmic Approach (proofs)

Apr 07, 2022

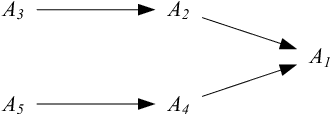

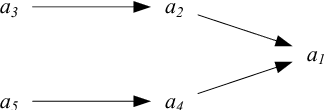

Abstract: Much like admissibility is the key concept underlying preferred semantics, strong admissibility is the key concept underlying grounded semantics, as membership of a strongly admissible set is sufficient to show membership of the grounded extension. As such, strongly admissible sets and labellings can be used as an explanation of membership of the grounded extension, as is for instance done in some of the proof procedures for grounded semantics. In the current paper, we present two polynomial algorithms for constructing relatively small strongly admissible labellings, with associated min-max numberings, for a particular argument. These labellings can be used as relatively small explanations for the argument's membership of the grounded extension. Although our algorithms are not guaranteed to yield an absolute minimal strongly admissible labelling for the argument (as doing so would have implied an exponential complexity), our best performing algorithm yields results that are only marginally bigger. Moreover, the runtime of this algorithm is an order of magnitude smaller than that of the existing approach for computing an absolute minimal strongly admissible labelling for a particular argument. As such, we believe that our algorithms can be of practical value in situations where the aim is to construct a minimal or near-minimal strongly admissible labelling in a time-efficient way.
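
For readers unfamiliar with the grounded extension mentioned in the abstract, the following is a minimal sketch of the standard fixpoint construction. It is not one of the paper's two algorithms and does not build minimal labellings or min-max numberings. The function name `grounded_extension` and the input encoding (a list of argument names plus a list of `(attacker, target)` pairs) are assumptions made for illustration.

```python
def grounded_extension(arguments, attacks):
    """Return the grounded extension of an abstract argumentation framework.

    arguments: iterable of hashable argument names (hypothetical encoding)
    attacks:   iterable of (attacker, target) pairs (hypothetical encoding)
    """
    # Map every argument to the set of arguments attacking it.
    attackers = {a: set() for a in arguments}
    for attacker, target in attacks:
        attackers[target].add(attacker)

    accepted, rejected = set(), set()
    changed = True
    while changed:
        changed = False
        for a in attackers:
            if a in accepted or a in rejected:
                continue
            if attackers[a] <= rejected:
                # Every attacker is already rejected, so a is accepted.
                accepted.add(a)
                changed = True
            elif attackers[a] & accepted:
                # Some attacker is accepted, so a is rejected.
                rejected.add(a)
                changed = True
    return accepted


# a attacks b, b attacks c, and d and e attack each other.
example_args = ["a", "b", "c", "d", "e"]
example_attacks = [("a", "b"), ("b", "c"), ("d", "e"), ("e", "d")]
print(sorted(grounded_extension(example_args, example_attacks)))  # ['a', 'c']
```

In this example `d` and `e` stay undecided because neither can be accepted without first rejecting the other, so they are not part of the grounded extension.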

Pareto Optimality and Strategy Proofness in Group Argument Evaluation (Extended Version)

Apr 07, 2017

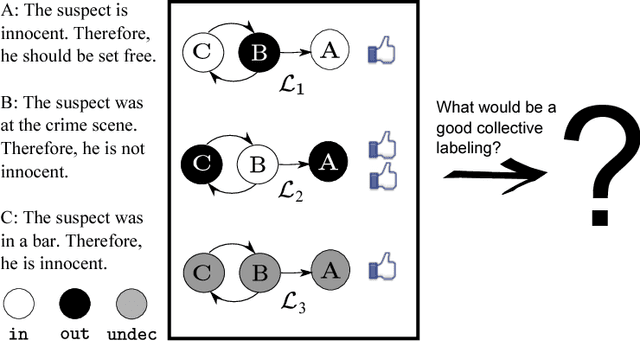

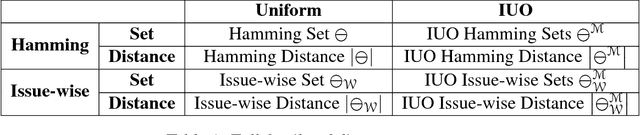

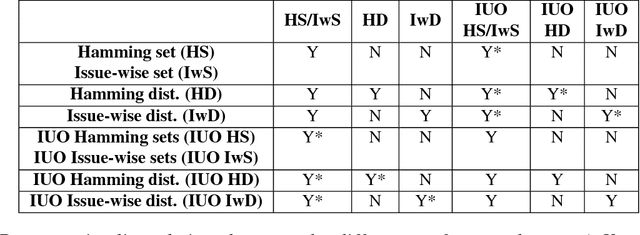

Abstract: An inconsistent knowledge base can be abstracted as a set of arguments and a defeat relation among them. There can be more than one consistent way to evaluate such an argumentation graph. Collective argument evaluation is the problem of aggregating the opinions of multiple agents on how a given set of arguments should be evaluated. It is crucial to ensure not only that the outcome is logically consistent, but also that it satisfies measures of social optimality and immunity to strategic manipulation. This is because agents have their individual preferences about what the outcome ought to be. In the current paper, we analyze three previously introduced argument-based aggregation operators with respect to Pareto optimality and strategy proofness under different general classes of agent preferences. We highlight fundamental trade-offs between strategic manipulability and social optimality on one hand, and classical logical criteria on the other. Our results motivate further investigation into the relationship between social choice and argumentation theory. The results are also relevant for choosing an appropriate aggregation operator given the criteria that are considered more important, as well as the nature of agents' preferences.

Experimental Assessment of Aggregation Principles in Argumentation-enabled Collective Intelligence

Feb 12, 2017

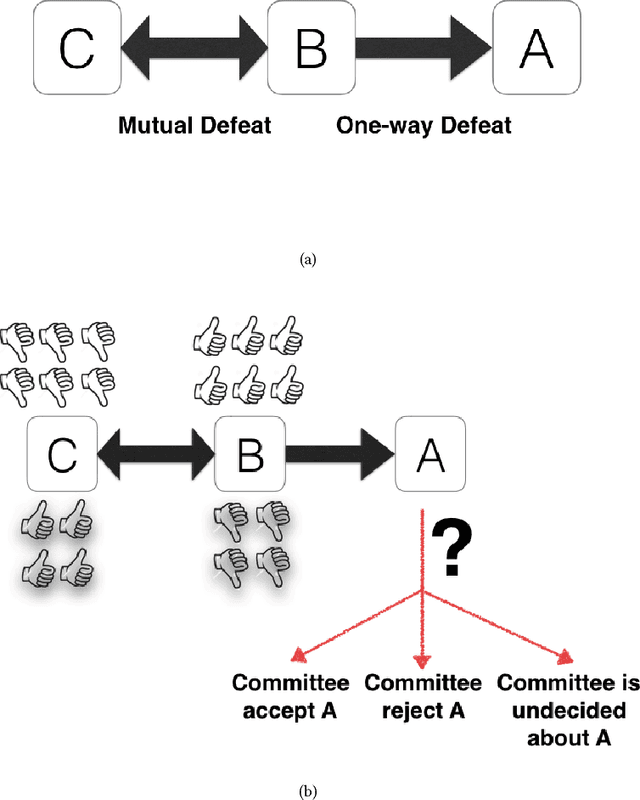

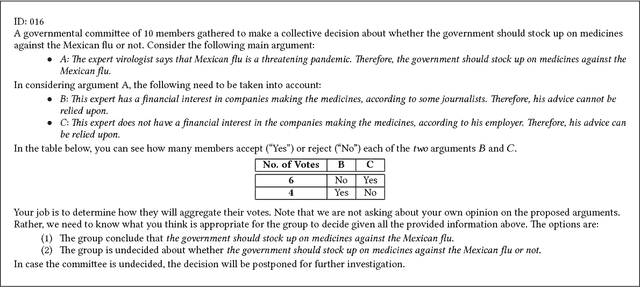

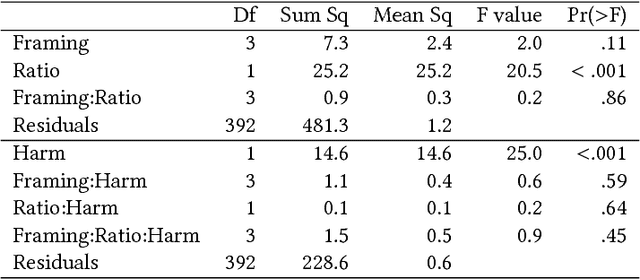

Abstract: On the Web, there is always a need to aggregate opinions from the crowd (as in posts, social networks, forums, etc.). Different mechanisms have been implemented to capture these opinions such as "Like" in Facebook, "Favorite" in Twitter, thumbs-up/down, flagging, and so on. However, in more contested domains (e.g. Wikipedia, political discussion, and climate change discussion) these mechanisms are not sufficient since they only deal with each issue independently without considering the relationships between different claims. We can view a set of conflicting arguments as a graph in which the nodes represent arguments and the arcs between these nodes represent the defeat relation. A group of people can then collectively evaluate such graphs. To do this, the group must use a rule to aggregate their individual opinions about the entire argument graph. Here, we present the first experimental evaluation of different principles commonly employed by aggregation rules presented in the literature. We use randomized controlled experiments to investigate which principles people consider better at aggregating opinions under different conditions. Our analysis reveals a number of factors, not captured by traditional formal models, that play an important role in determining the efficacy of aggregation. These results help bring formal models of argumentation closer to real-world application.

Assessing the Impact of Informedness on a Consultant's Profit

Sep 04, 2009

Abstract: We study the notion of informedness in a client-consultant setting. Using a software simulator, we examine the extent to which it pays off for consultants to provide their clients with advice that is well-informed, or with advice that is merely meant to appear to be well-informed. The latter strategy is beneficial in that it costs fewer resources to keep up-to-date, but carries the risk of a decreased reputation if the clients discover the low level of informedness of the consultant. Our experimental results indicate that under different circumstances, different strategies yield the optimal results (net profit) for the consultants.
