Martin Alain

View Sub-sampling and Reconstruction for Efficient Light Field Compression

Aug 12, 2022

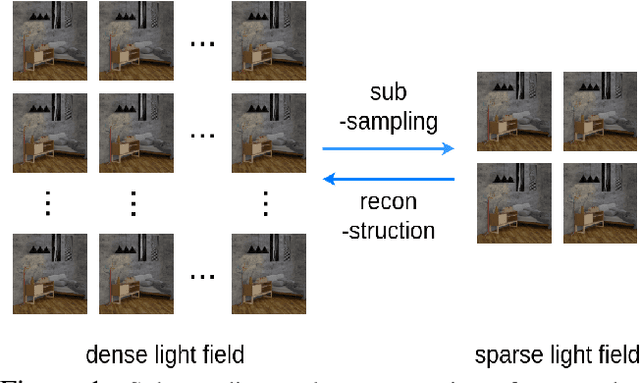

Abstract: Compression is an important task for many practical applications of light fields. Although previous work has proposed numerous methods for efficient light field compression, the effect of view selection on this task has not been well explored. In this work, we study different sub-sampling and reconstruction strategies for light field compression. We apply various sub-sampling strategies before light field compression and the corresponding reconstruction strategies after it. The fully reconstructed light fields are then assessed to evaluate the performance of the different methods. Our evaluation is performed on both real-world and synthetic datasets, and optimal strategies are derived from our experimental results. We hope this study will benefit future research on light field streaming, storage, and transmission.

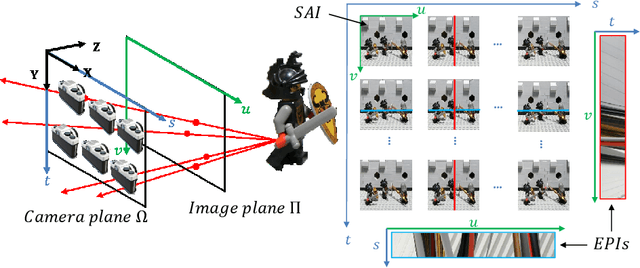

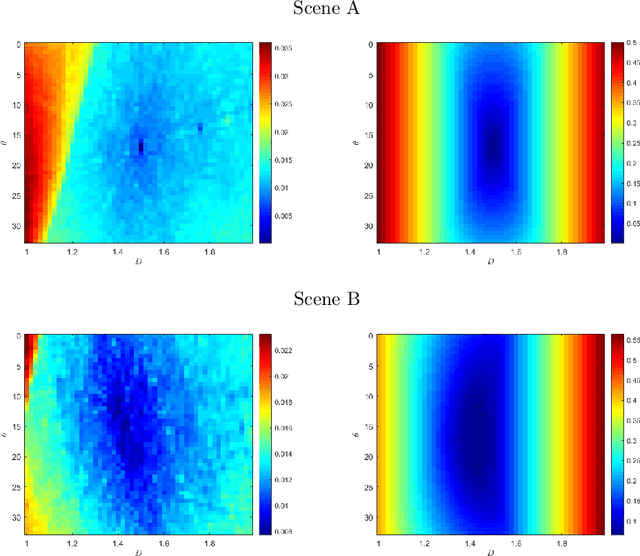

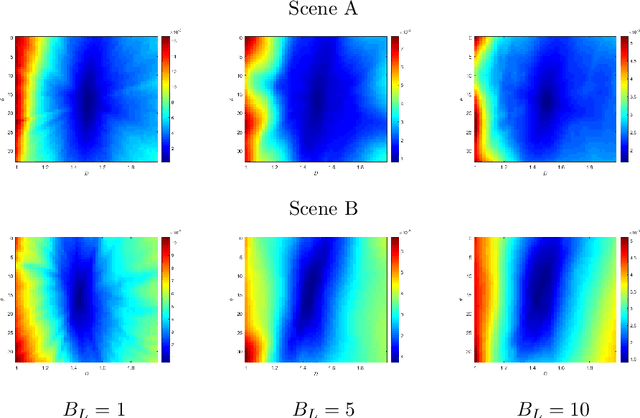

Spectral analysis of re-parameterized light fields

Oct 12, 2021

Abstract: In this paper, we study the spectral properties of re-parameterized light fields. Following previous studies of the light field spectrum, which notably provided sampling guidelines, we focus on the two-plane parameterization of the light field. However, we introduce additional flexibility by allowing the image plane to be tilted rather than strictly parallel. A formal theoretical analysis is first presented, which shows that more flexible sampling guidelines (i.e. wider camera baselines) can be used to sample the light field when the image plane orientation is adapted to the scene geometry. We then present our simulations and results to support these theoretical findings. While the work introduced in this paper is mostly theoretical, we believe these new findings open exciting avenues for more practical applications of light fields, such as view synthesis or compact representation.

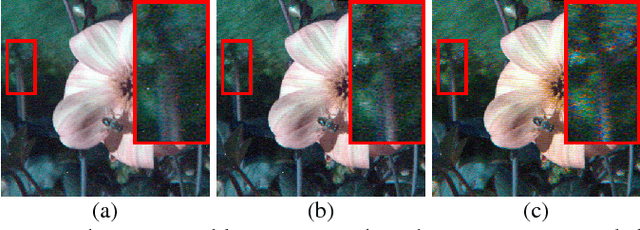

Self-supervised Light Field View Synthesis Using Cycle Consistency

Aug 12, 2020

Abstract: High angular resolution is advantageous for practical applications of light fields. To enhance the angular resolution of light fields, view synthesis methods can be used to generate dense intermediate views from sparse light field input. Most successful view synthesis methods are learning-based approaches which require a large amount of training data paired with ground truth. However, collecting such large datasets for light fields is challenging compared to natural images or videos. To tackle this problem, we propose a self-supervised light field view synthesis framework with cycle consistency. The proposed method aims to transfer prior knowledge learned from high-quality natural video datasets to the light field view synthesis task, which reduces the need for labeled light field data. A cycle consistency constraint is used to build a bidirectional mapping that enforces the generated views to be consistent with the input views. Derived from this key concept, two loss functions, cycle loss and reconstruction loss, are used to fine-tune the pre-trained model of a state-of-the-art video interpolation method. The proposed method is evaluated on various datasets to validate its robustness, and results show it not only achieves competitive performance compared to supervised fine-tuning, but also outperforms state-of-the-art light field view synthesis methods, especially when generating multiple intermediate views. In addition, our generic light field view synthesis framework can be adapted to any pre-trained model for advanced video interpolation.
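As an illustration of the cycle consistency idea described in this abstract, the following is a minimal sketch and not the authors' implementation. It assumes three input views along one angular dimension, represented as flat lists of pixel values. The `interpolate` function is a hypothetical stand-in (a per-pixel average) for the learned video interpolation model, and the L1 form of the loss is also an assumption.

```python
def interpolate(view_a, view_b):
    """Hypothetical stand-in for a learned interpolator: per-pixel average."""
    return [(a + b) / 2.0 for a, b in zip(view_a, view_b)]


def cycle_loss(v0, v2, v4, synthesize=interpolate):
    """Mean absolute error between an input view and its cycle reconstruction.

    Forward pass: synthesize the intermediate views between the inputs.
    Backward pass: re-synthesize the middle input view from those
    intermediate views, then compare it with the real middle view.
    """
    v1_hat = synthesize(v0, v2)            # generated view between v0 and v2
    v3_hat = synthesize(v2, v4)            # generated view between v2 and v4
    v2_cycle = synthesize(v1_hat, v3_hat)  # middle view rebuilt from generated views
    return sum(abs(p - q) for p, q in zip(v2_cycle, v2)) / len(v2)


if __name__ == "__main__":
    # Views that vary linearly across the angular dimension are reproduced exactly.
    print(cycle_loss([0.0, 0.0], [2.0, 2.0], [4.0, 4.0]))
    # A non-linear middle view leaves a residual that fine-tuning would reduce.
    print(cycle_loss([0.0, 0.0], [3.0, 3.0], [4.0, 4.0]))
```

No ground-truth intermediate view appears anywhere in the loss, which is what makes this kind of supervision usable when labeled light field data is scarce.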

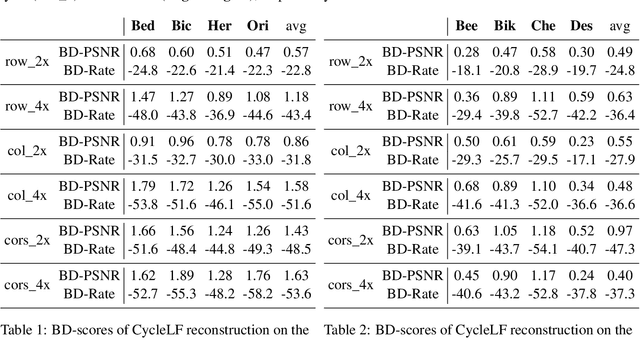

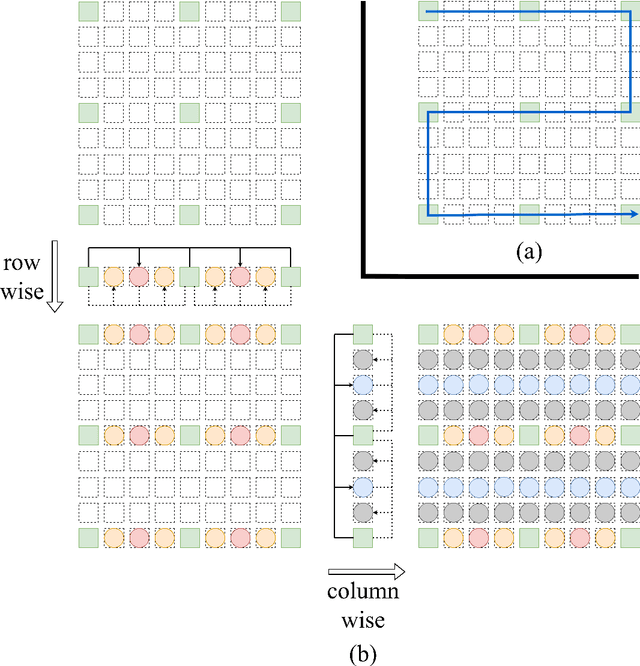

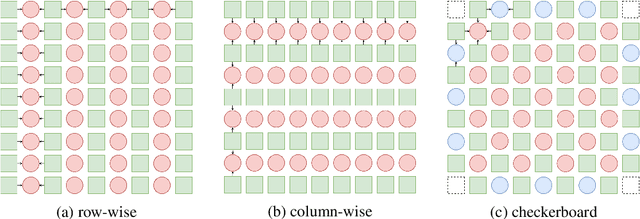

A Study of Efficient Light Field Subsampling and Reconstruction Strategies

Aug 11, 2020

Abstract: Limited angular resolution is one of the main obstacles for practical applications of light fields. Although numerous approaches have been proposed to enhance angular resolution, view selection strategies have not been well explored in this area. In this paper, we study subsampling and reconstruction strategies for light fields. First, different subsampling strategies are studied with a fixed sampling ratio, such as row-wise sampling, column-wise sampling, or their combinations. Second, several strategies are explored to reconstruct intermediate views from four regularly sampled input views. The influence of the angular density of the input is also evaluated. We evaluate these strategies on both real-world and synthetic datasets, and optimal selection strategies are devised from our results. These can be applied in future light field research such as compression, angular super-resolution, and design of camera systems.
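The subsampling strategies mentioned in this abstract can be pictured as selection patterns over the angular grid of views. The sketch below is illustrative only: the function name, the `step` parameter, and the checkerboard pattern (used here as one possible combination of row-wise and column-wise sampling) are assumptions, not taken from the paper.

```python
def subsample_views(rows, cols, strategy, step=2):
    """Return the (row, col) angular indices kept by a subsampling strategy."""
    if strategy == "row":
        keep = lambda r, c: r % step == 0        # keep every step-th row of views
    elif strategy == "column":
        keep = lambda r, c: c % step == 0        # keep every step-th column of views
    elif strategy == "checkerboard":
        keep = lambda r, c: (r + c) % step == 0  # alternate kept views along both axes
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [(r, c) for r in range(rows) for c in range(cols) if keep(r, c)]


if __name__ == "__main__":
    # On an 8x8 grid with step=2, every strategy keeps half of the 64 views,
    # so the strategies can be compared at a fixed sampling ratio.
    for name in ("row", "column", "checkerboard"):
        kept = subsample_views(8, 8, name)
        print(name, len(kept), len(kept) / 64)
```

Holding the number of kept views constant across patterns is what allows differences in reconstruction quality to be attributed to the view layout rather than to the amount of data.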

Fast and Accurate Optical Flow based Depth Map Estimation from Light Fields

Aug 11, 2020

Abstract: Depth map estimation is a crucial task in computer vision, and new approaches have recently emerged that take advantage of light fields, as this new imaging modality captures much more information about the angular direction of light rays than common approaches based on stereoscopic or multi-view images. In this paper, we propose a novel depth estimation method from light fields based on existing optical flow estimation methods. The optical flow estimator is applied on a sequence of images taken along an angular dimension of the light field, which produces several disparity map estimates. Considering both accuracy and efficiency, we choose the feature flow method as our optical flow estimator. Thanks to its spatio-temporal edge-aware filtering properties, the different disparity map estimates that we obtain are very consistent, which allows a fast and simple aggregation step to create a single disparity map, which can then be converted into a depth map. Since the disparity map estimates are consistent, we can also create a depth map from each disparity estimate, and then aggregate the different depth maps in 3D space to create a single dense depth map.
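The aggregation and conversion steps described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions: the abstract does not specify the aggregation operator, so a per-pixel median is assumed here, and the conversion uses the standard pinhole relation depth = focal length × baseline / disparity with strictly positive disparities. The function names and parameters are hypothetical.

```python
from statistics import median


def aggregate_disparities(estimates):
    """Fuse equally sized 2D disparity maps with a per-pixel median (assumed operator)."""
    height, width = len(estimates[0]), len(estimates[0][0])
    return [
        [median(est[y][x] for est in estimates) for x in range(width)]
        for y in range(height)
    ]


def disparity_to_depth(disparity, focal_length_px, baseline, eps=1e-6):
    """Convert disparity (pixels) to depth, in the same unit as the baseline.

    Assumes positive disparities; values below eps are clamped to avoid
    division by zero.
    """
    return [
        [focal_length_px * baseline / max(d, eps) for d in row]
        for row in disparity
    ]


if __name__ == "__main__":
    # Three consistent 1x2 disparity estimates, with one outlier in the second pixel.
    estimates = [[[1.0, 2.0]], [[1.2, 2.0]], [[0.8, 4.0]]]
    fused = aggregate_disparities(estimates)
    print(fused)
    print(disparity_to_depth(fused, focal_length_px=100.0, baseline=0.5))
```

When the individual estimates agree closely, as the abstract reports, a simple per-pixel operator of this kind is enough, which is what keeps the aggregation step fast.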

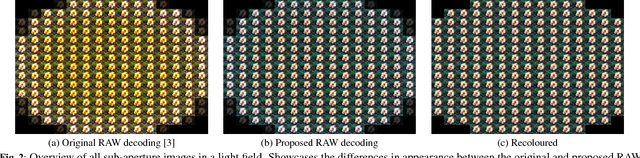

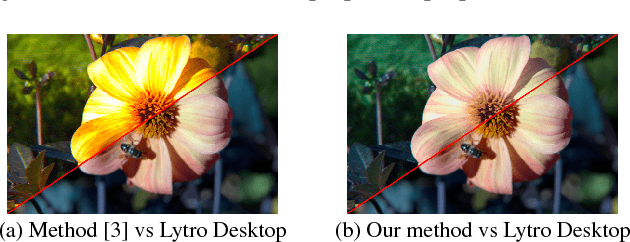

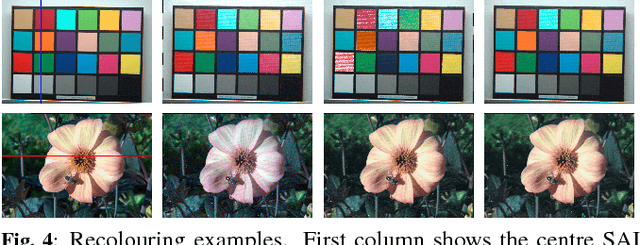

A Pipeline for Lenslet Light Field Quality Enhancement

Aug 16, 2018

Abstract: In recent years, light fields have become a major research topic and their applications span the entire spectrum of classical image processing. Lenslet cameras, such as those developed by Lytro, are one of the methods used to capture a light field. While these cameras give a lot of freedom to the user, they also create light field views that suffer from a number of artefacts. As a result, it is common to ignore a significant subset of these views when doing high-level light field processing. We propose a pipeline to process light field views, first with an enhanced processing of RAW images to extract sub-aperture images, then a colour correction process using a recent colour transfer algorithm, and finally a denoising process using a state-of-the-art light field denoising approach. We show that our method improves the light field quality on many levels, by reducing ghosting artefacts and noise, as well as retrieving more accurate and homogeneous colours across the sub-aperture images.

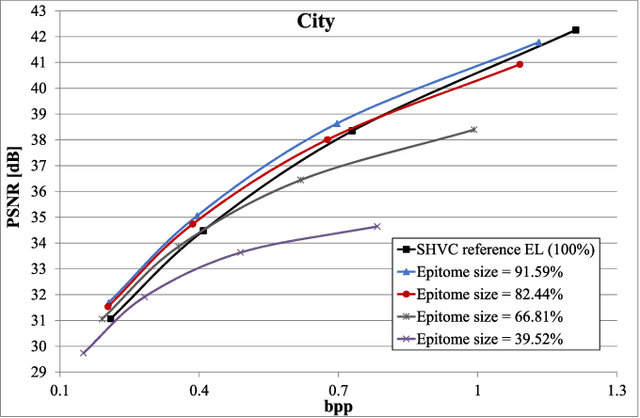

Scalable image coding based on epitomes

Jun 28, 2016

Abstract: In this paper, we propose a novel scheme for scalable image coding based on the concept of epitome. An epitome can be seen as a factorized representation of an image. Focusing on spatial scalability, the enhancement layer of the proposed scheme contains only the epitome of the input image. The pixels of the enhancement layer not contained in the epitome are then restored using two approaches inspired by local learning-based super-resolution methods. In the first method, a locally linear embedding model is learned on base layer patches and then applied to the corresponding epitome patches to reconstruct the enhancement layer. The second approach learns linear mappings between pairs of co-located base layer and epitome patches. Experiments show that a significant improvement in rate-distortion performance can be achieved compared to an SHVC reference.