Mario Franco

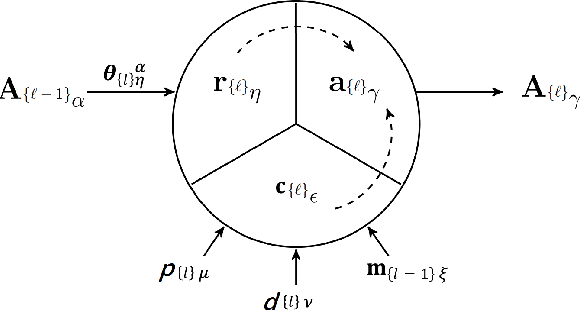

Spark: Modular Spiking Neural Networks

Feb 02, 2026

Abstract:Nowadays, neural networks act as a synonym for artificial intelligence. Present neural network models, although remarkably powerful, are inefficient both in terms of data and energy. Several alternative forms of neural networks have been proposed to address some of these problems. Specifically, spiking neural networks are suitable for efficient hardware implementations. However, effective learning algorithms for spiking networks remain elusive, although it is suspected that effective plasticity mechanisms could alleviate the problem of data efficiency. Here, we present a new framework for spiking neural networks - Spark - built upon the idea of modular design, from simple components to entire models. The aim of this framework is to provide an efficient and streamlined pipeline for spiking neural networks. We showcase this framework by solving the sparse-reward cartpole problem with simple plasticity mechanisms. We hope that a framework compatible with traditional ML pipelines may accelerate research in the area, specifically for continuous and unbatched learning, akin to the one animals exhibit.

The Art of Misclassification: Too Many Classes, Not Enough Points

Feb 12, 2025

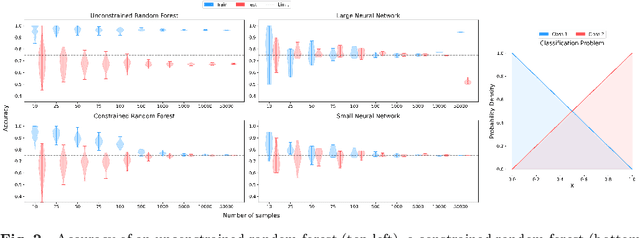

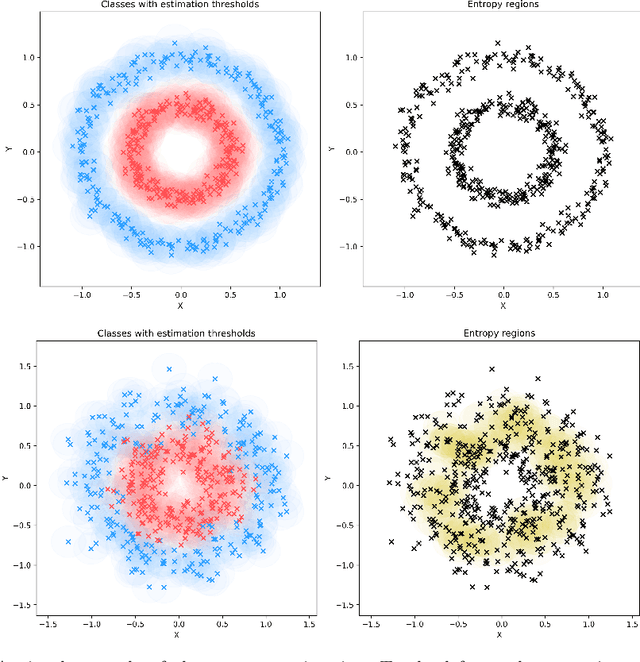

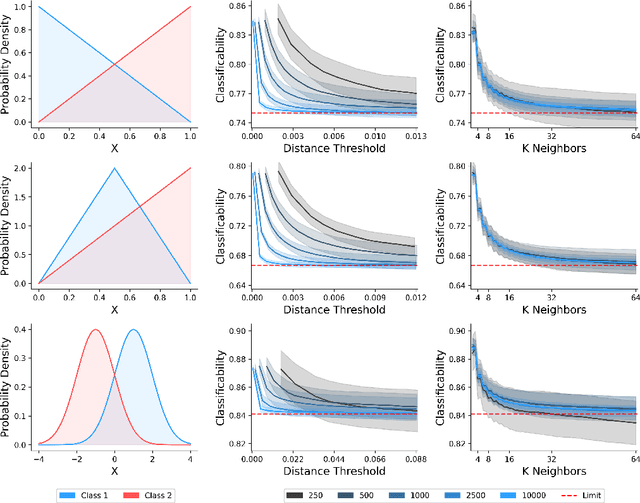

Abstract:Classification is a ubiquitous and fundamental problem in artificial intelligence and machine learning, with extensive efforts dedicated to developing more powerful classifiers and larger datasets. However, the classification task is ultimately constrained by the intrinsic properties of datasets, independently of computational power or model complexity. In this work, we introduce a formal entropy-based measure of classificability, which quantifies the inherent difficulty of a classification problem by assessing the uncertainty in class assignments given feature representations. This measure captures the degree of class overlap and aligns with human intuition, serving as an upper bound on classification performance for classification problems. Our results establish a theoretical limit beyond which no classifier can improve the classification accuracy, regardless of the architecture or amount of data, in a given problem. Our approach provides a principled framework for understanding when classification is inherently fallible and fundamentally ambiguous.
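As a rough illustration of the idea in this abstract, the sketch below computes two standard quantities on a toy discrete joint distribution: the conditional entropy H(Y|X) of the class given the feature, and the Bayes-optimal accuracy, which no classifier can exceed on that distribution. This is not the paper's own measure, whose exact definition is not given here. The function names `conditional_entropy` and `bayes_accuracy` and the example tables are assumptions made for illustration only.

```python
import numpy as np


def conditional_entropy(joint):
    """Return H(Y|X) in bits for a table joint[x, y] proportional to P(X=x, Y=y)."""
    joint = np.asarray(joint, dtype=float)
    joint = joint / joint.sum()              # normalise to a probability table
    p_x = joint.sum(axis=1, keepdims=True)   # marginal P(X=x)
    with np.errstate(divide="ignore", invalid="ignore"):
        cond = np.where(p_x > 0, joint / p_x, 0.0)          # P(Y=y | X=x)
        log_cond = np.where(cond > 0, np.log2(cond), 0.0)   # treat 0*log(0) as 0
    return float(-(joint * log_cond).sum())


def bayes_accuracy(joint):
    """Return the best achievable accuracy: pick the most likely class for each x."""
    joint = np.asarray(joint, dtype=float)
    joint = joint / joint.sum()
    return float(joint.max(axis=1).sum())


# Hypothetical toy problems: rows are feature values, columns are classes.
separable = [[0.5, 0.0], [0.0, 0.5]]        # classes never share a feature value
overlapping = [[0.4, 0.1], [0.1, 0.4]]      # classes partly share feature values
indistinguishable = [[0.25, 0.25], [0.25, 0.25]]

for name, table in [("separable", separable),
                    ("overlapping", overlapping),
                    ("indistinguishable", indistinguishable)]:
    print(name, conditional_entropy(table), bayes_accuracy(table))
```

In this toy setting, zero conditional entropy corresponds to a perfectly classifiable problem, while growing class overlap raises the entropy and lowers the accuracy ceiling, whatever model is used.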

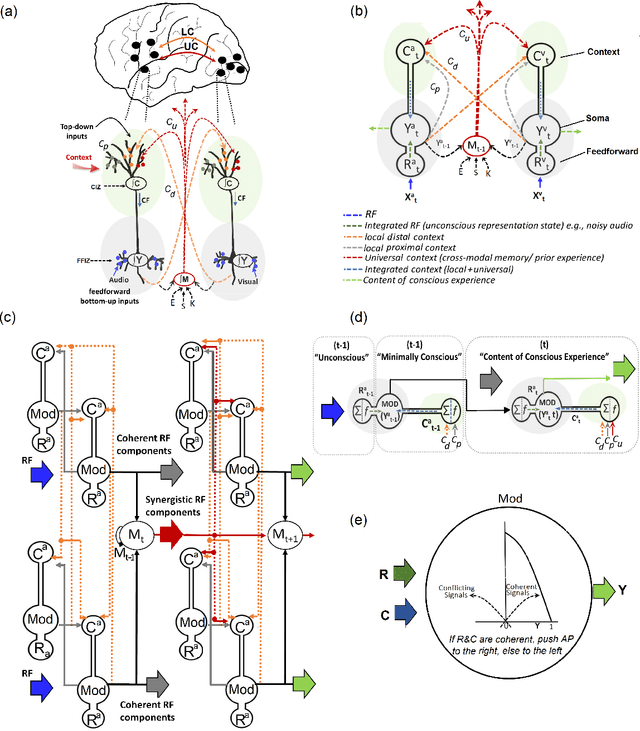

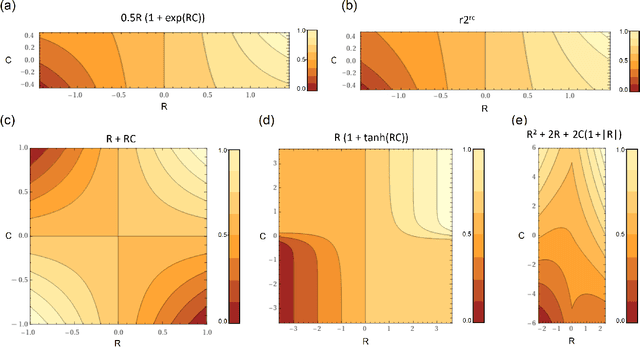

Context-sensitive neocortical neurons transform the effectiveness and efficiency of neural information processing

Jul 15, 2022

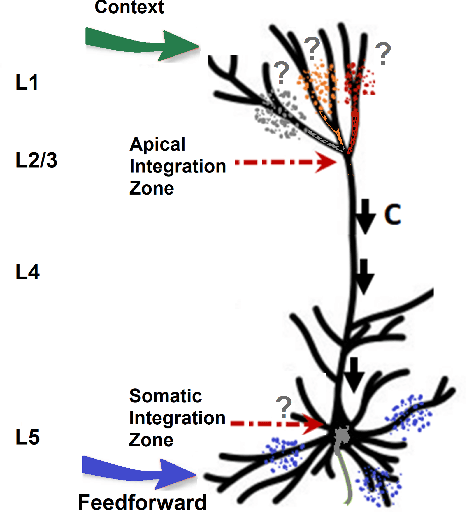

Abstract:There is ample neurobiological evidence that context-sensitive neocortical neurons use their apical inputs as context to amplify the transmission of coherent feedforward (FF) inputs. However, it has not been demonstrated until now how this known mechanism can provide useful neural computation. Here we show for the first time that the processing and learning capabilities of this form of neural information processing are well-matched to the abilities of mammalian neocortex. Specifically, we show that a network composed of such local processors restricts the transmission of conflicting information to higher levels and greatly reduces the amount of activity required to process large amounts of heterogeneous real-world data. For example, when processing audiovisual speech, these local processors use seen lip movements to selectively amplify FF transmission of the auditory information that those movements generate, and vice versa. As this mechanism is shown to be far more effective and efficient than the best available forms of deep neural nets, it offers a step-change in understanding the brain's mysterious energy-saving mechanism and inspires advances in designing enhanced forms of biologically plausible machine learning algorithms.
