Marija Habijan

Evaluation Framework for Computer Vision-Based Guidance of the Visually Impaired

Sep 20, 2022

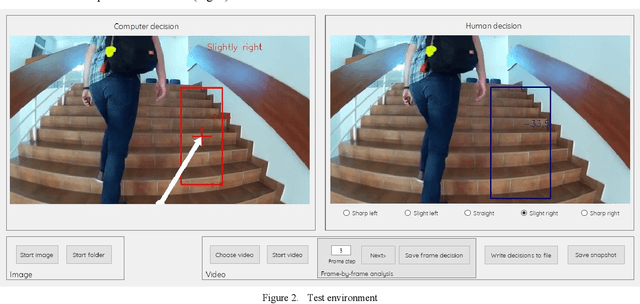

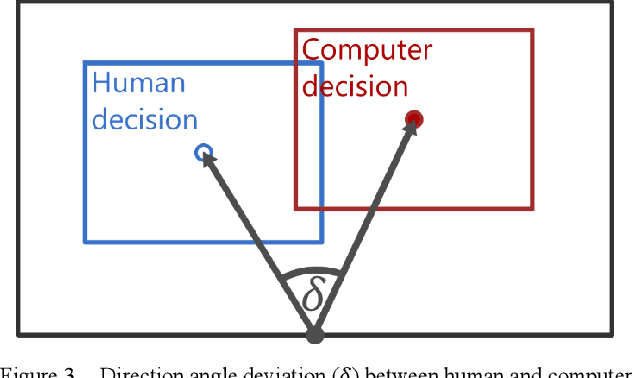

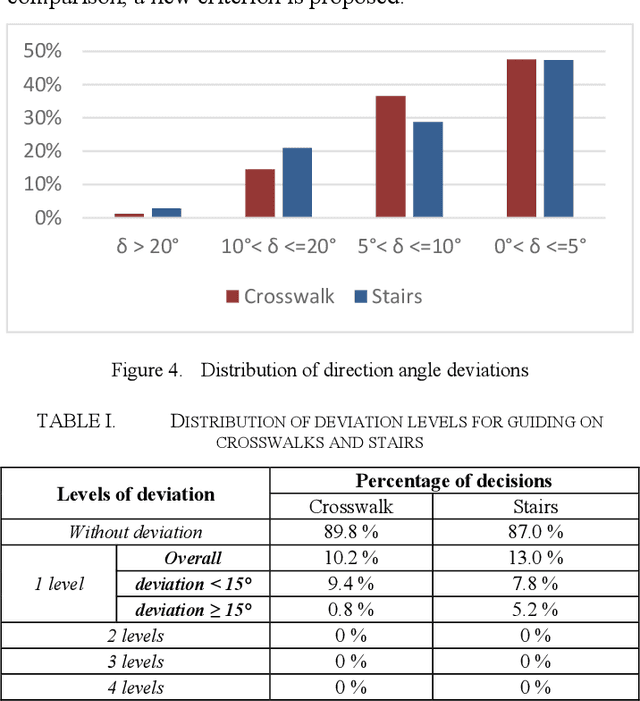

Abstract: Visually impaired persons have significant problems in their everyday movement. Therefore, some of our previous work involves computer vision in developing assistance systems for guiding the visually impaired in critical situations. Some of those situations include crosswalks at road crossings and stairs in indoor and outdoor environments. This paper presents an evaluation framework for computer vision-based guiding of visually impaired persons in such critical situations. The presented framework includes an interface for labeling and storing reference human decisions for guiding directions and compares them to computer vision-based decisions. Since a strict evaluation methodology in this research field is not clearly defined, and due to the specifics of transferring information to visually impaired persons, an evaluation criterion for specific simplified guiding instructions is proposed.

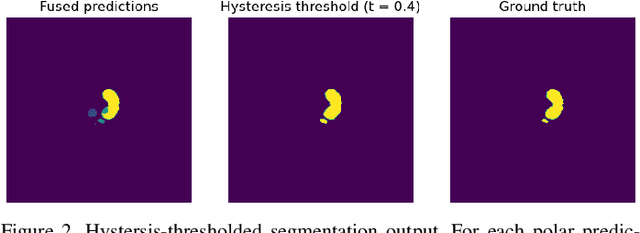

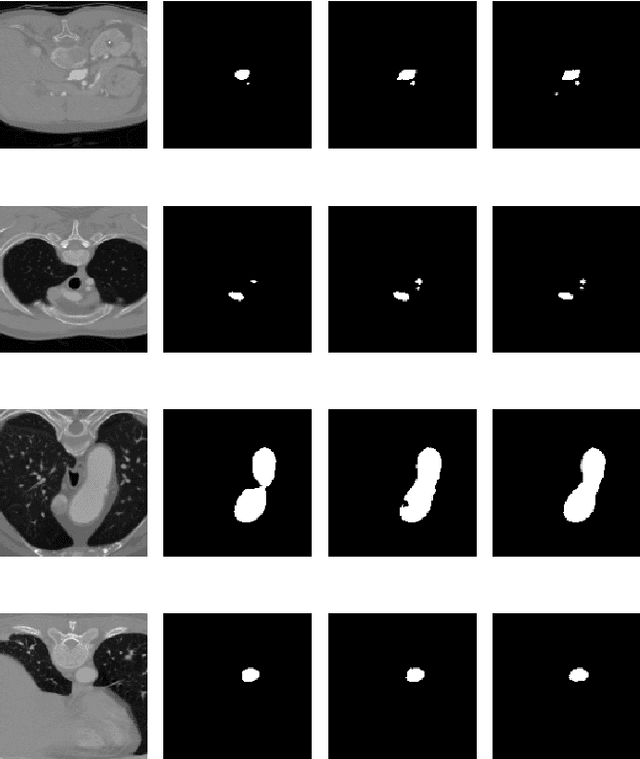

Using the Polar Transform for Efficient Deep Learning-Based Aorta Segmentation in CTA Images

Jun 21, 2022

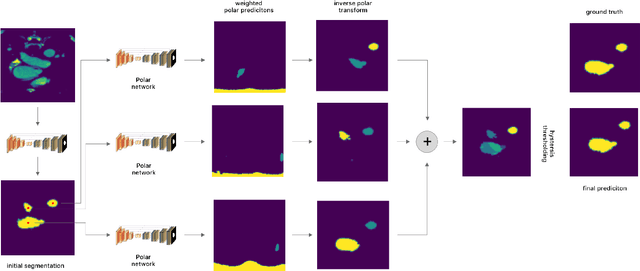

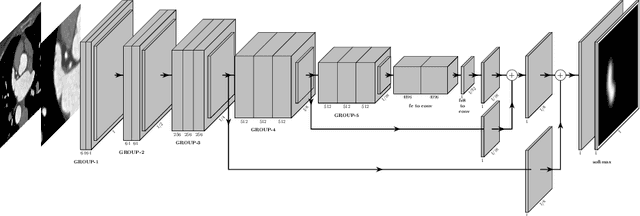

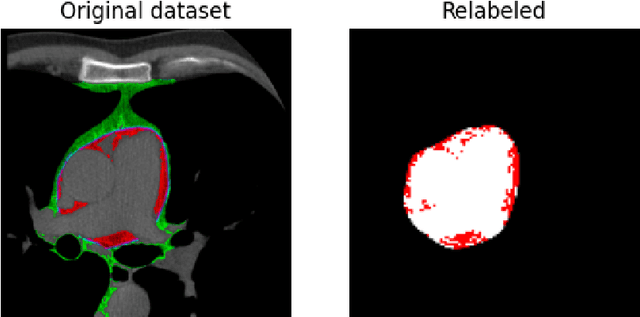

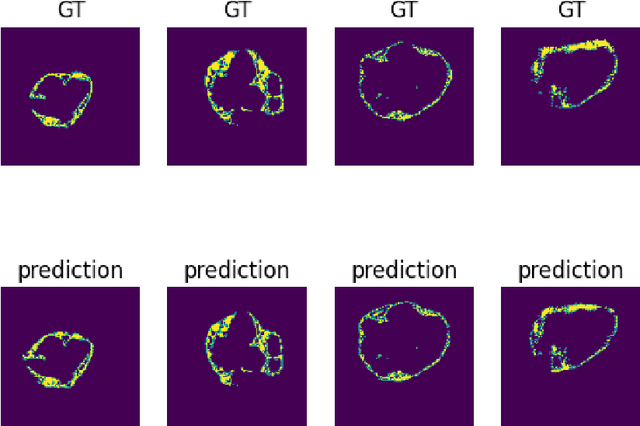

Abstract: Medical image segmentation often requires segmenting multiple elliptical objects on a single image. This includes, among other tasks, segmenting vessels such as the aorta in axial CTA slices. In this paper, we present a general approach to improving the semantic segmentation performance of neural networks in these tasks and validate our approach on the task of aorta segmentation. We use a cascade of two neural networks, where one performs a rough segmentation based on the U-Net architecture and the other performs the final segmentation on polar image transformations of the input. Connected component analysis of the rough segmentation is used to construct the polar transformations, and predictions on multiple transformations of the same image are fused using hysteresis thresholding. We show that this method improves aorta segmentation performance without requiring complex neural network architectures. In addition, we show that our approach improves robustness and pixel-level recall while achieving segmentation performance in line with the state of the art.
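To make the two image operations named in this abstract concrete, here is a minimal NumPy sketch of a polar transform around a given center and of hysteresis thresholding on a probability map. It is an illustration under assumptions, not the paper's implementation: the function names, the nearest-neighbour sampling, the grid sizes, the 4-neighbour connectivity, and the choice to fuse predictions by averaging them before thresholding are all hypothetical. The center is assumed to come from the rough segmentation, for example as the centroid of one connected component.

```python
import numpy as np


def to_polar(image, center, n_radii=64, n_angles=64):
    """Resample a 2D image onto a (radius, angle) grid around `center`.

    Nearest-neighbour sampling is an assumption made for brevity.
    """
    cy, cx = center
    h, w = image.shape
    # Largest radius needed to reach the farthest image corner.
    max_radius = np.hypot(max(cy, h - 1 - cy), max(cx, w - 1 - cx))
    radii = np.linspace(0.0, max_radius, n_radii)
    angles = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    r, theta = np.meshgrid(radii, angles, indexing="ij")
    ys = np.rint(cy + r * np.sin(theta)).astype(int)
    xs = np.rint(cx + r * np.cos(theta)).astype(int)
    # Samples that fall outside the image stay zero.
    inside = (ys >= 0) & (ys < h) & (xs >= 0) & (xs < w)
    polar = np.zeros((n_radii, n_angles), dtype=image.dtype)
    polar[inside] = image[ys[inside], xs[inside]]
    return polar


def hysteresis_threshold(prob, low, high):
    """Keep pixels >= low that are 4-connected to a pixel >= high."""
    weak = prob >= low
    mask = prob >= high
    while True:
        # Grow the current mask by one pixel in each axis direction.
        grown = mask.copy()
        grown[1:, :] |= mask[:-1, :]
        grown[:-1, :] |= mask[1:, :]
        grown[:, 1:] |= mask[:, :-1]
        grown[:, :-1] |= mask[:, 1:]
        grown &= weak  # growth is only allowed inside the weak region
        if np.array_equal(grown, mask):
            return mask
        mask = grown


def fuse_predictions(prob_maps, low, high):
    """Hypothetical fusion rule: average the maps, then apply hysteresis."""
    return hysteresis_threshold(np.mean(prob_maps, axis=0), low, high)
```

In polar coordinates a roughly circular object centered at `center` becomes a horizontal band, which is one intuition for why the second network in such a cascade can stay simple. The probability maps passed to `fuse_predictions` are assumed to have been mapped back to a common Cartesian grid beforehand.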

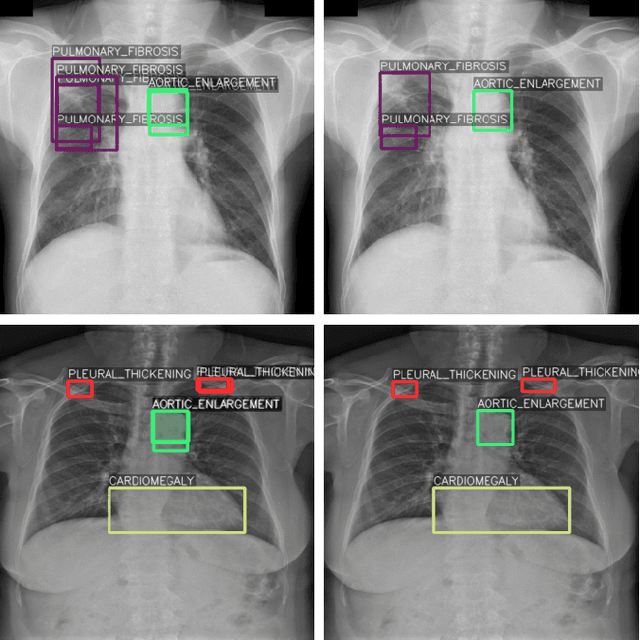

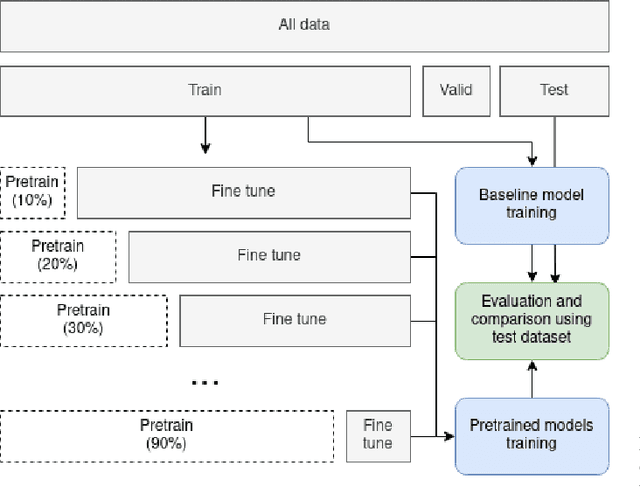

Self-Supervised Learning as a Means To Reduce the Need for Labeled Data in Medical Image Analysis

Jun 01, 2022

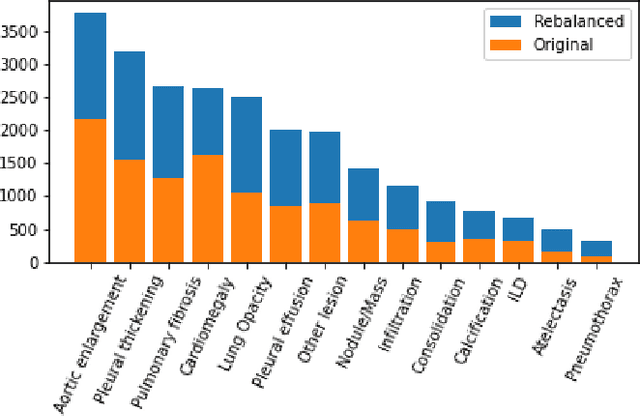

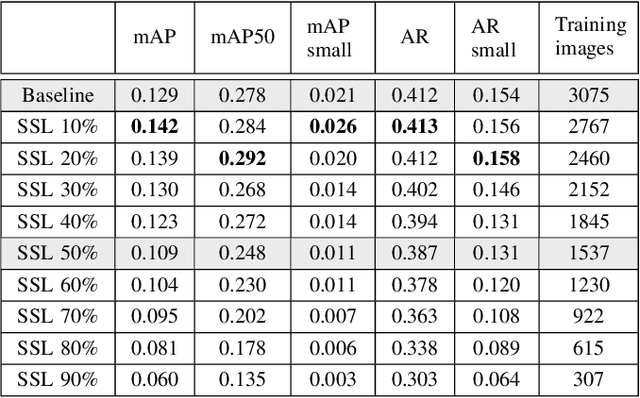

Abstract: One of the largest problems in medical image processing is the lack of annotated data. Labeling medical images often requires highly trained experts and can be a time-consuming process. In this paper, we evaluate a method of reducing the need for labeled data in medical image object detection by using self-supervised neural network pretraining. We use a dataset of chest X-ray images with bounding box labels for 13 different classes of anomalies. The networks are pretrained on a percentage of the dataset without labels and then fine-tuned on the rest of the dataset. We show that it is possible to achieve similar performance to a fully supervised model in terms of mean average precision and accuracy with only 60% of the labeled data. We also show that it is possible to increase the maximum performance of a fully supervised model by adding a self-supervised pretraining step, and this effect can be observed with even a small amount of unlabeled data for pretraining.

Generation of Artificial CT Images using Patch-based Conditional Generative Adversarial Networks

May 19, 2022

Abstract: Deep learning has great potential to ease diagnosis and prognosis for various clinical procedures. However, the lack of a sufficient number of medical images is the most common obstacle in conducting image-based analysis using deep learning. Because annotations are scarce, semi-supervised techniques in automatic medical analysis are receiving considerable attention. Artificial data augmentation and generation techniques such as generative adversarial networks (GANs) may help overcome this obstacle. In this work, we present an image generation approach that uses generative adversarial networks with a conditional discriminator where segmentation masks are used as conditions for image generation. We validate the feasibility of GAN-enhanced medical image generation on whole-heart computed tomography (CT) images and the heart's seven substructures, namely: left ventricle, right ventricle, left atrium, right atrium, myocardium, pulmonary arteries, and aorta. The obtained results demonstrate the suitability of the proposed adversarial approach for the accurate generation of high-quality CT images. The presented method shows great potential to facilitate further research in the domain of artificial medical image generation.

A Survey of Left Atrial Appendage Segmentation and Analysis in 3D and 4D Medical Images

May 13, 2022

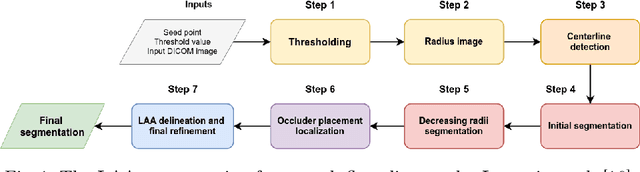

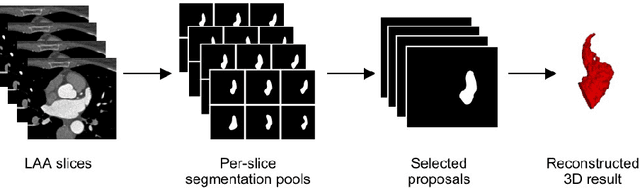

Abstract: Atrial fibrillation (AF) is a cardiovascular disease identified as one of the main risk factors for stroke. The majority of strokes due to AF are caused by clots originating in the left atrial appendage (LAA). LAA occlusion is an effective procedure for reducing stroke risk. Planning the procedure using pre-procedural imaging and analysis has shown benefits. The analysis is commonly done by manually segmenting the appendage on 2D slices. Automatic LAA segmentation methods could save an expert's time and provide insightful 3D visualizations and accurate automatic measurements to aid in medical procedures. Several semi- and fully-automatic methods for segmenting the appendage have been proposed. This paper provides a review of automatic LAA segmentation methods on 3D and 4D medical images, including CT, MRI, and echocardiogram images. We classify methods into heuristic and model-based methods, as well as into semi- and fully-automatic methods. We summarize and compare the proposed methods, evaluate their effectiveness, and present current challenges in the field and approaches to overcome them.

Epicardial Adipose Tissue Segmentation from CT Images with A Semi-3D Neural Network

Apr 27, 2022

Abstract: Epicardial adipose tissue is a type of adipose tissue located between the heart wall and a protective layer around the heart called the pericardium. The volume and thickness of epicardial adipose tissue are linked to various cardiovascular diseases, and it has been shown to be an independent cardiovascular disease risk factor. Fully automatic and reliable measurements of epicardial adipose tissue from CT scans could provide better disease risk assessment and enable the processing of large CT image data sets for a systemic epicardial adipose tissue study. This paper proposes a method for fully automatic semantic segmentation of epicardial adipose tissue from CT images using a deep neural network. The proposed network uses a U-Net-based architecture with slice depth information embedded in the input image to segment a pericardium region of interest, which is used to obtain an epicardial adipose tissue segmentation. Image augmentation is used to increase model robustness. Cross-validation of the proposed method yields a Dice score of 0.86 on the CT scans of 20 patients.
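For readers unfamiliar with the metric reported above, the Dice score of a predicted mask against a reference mask is twice the size of their overlap divided by the sum of their sizes. The sketch below computes it for binary masks with NumPy; the function name and the convention of returning 1.0 when both masks are empty are assumptions made for illustration, not details taken from the paper.

```python
import numpy as np


def dice_score(pred, target):
    """Dice coefficient between two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    denom = pred.sum() + target.sum()
    if denom == 0:
        # Both masks empty: treated as perfect agreement (an assumed convention).
        return 1.0
    overlap = np.logical_and(pred, target).sum()
    return 2.0 * overlap / denom
```

A score of 1.0 means the masks coincide exactly and 0.0 means they do not overlap at all.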
