Marianne Aubin Le Quéré

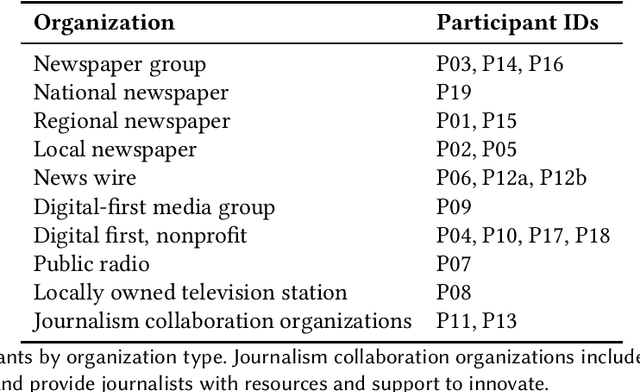

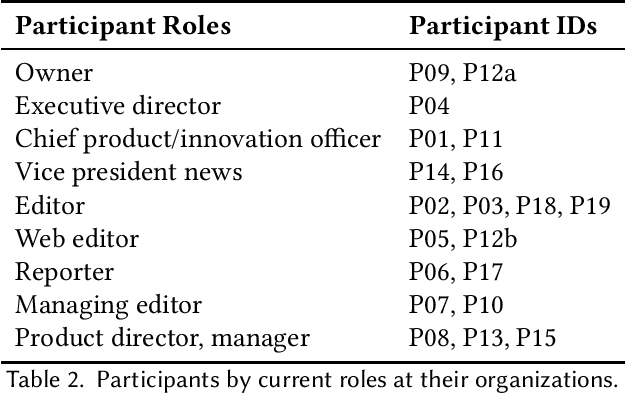

"Ownership, Not Just Happy Talk": Co-Designing a Participatory Large Language Model for Journalism

Jan 28, 2025

Abstract: Journalism has emerged as an essential domain for understanding the uses, limitations, and impacts of large language models (LLMs) in the workplace. News organizations face divergent financial incentives: LLMs already permeate newswork processes within financially constrained organizations, even as ongoing legal challenges assert that AI companies violate their copyright. At stake are key questions about what LLMs are created to do, and by whom: How might a journalist-led LLM work, and what can participatory design illuminate about the present-day challenges about adapting "one-size-fits-all" foundation models to a given context of use? In this paper, we undertake a co-design exploration to understand how a participatory approach to LLMs might address opportunities and challenges around AI in journalism. Our 20 interviews with reporters, data journalists, editors, labor organizers, product leads, and executives highlight macro, meso, and micro tensions that designing for this opportunity space must address. From these desiderata, we describe the result of our co-design work: organizational structures and functionality for a journalist-controlled LLM. In closing, we discuss the limitations of commercial foundation models for workplace use, and the methodological implications of applying participatory methods to LLM co-design.

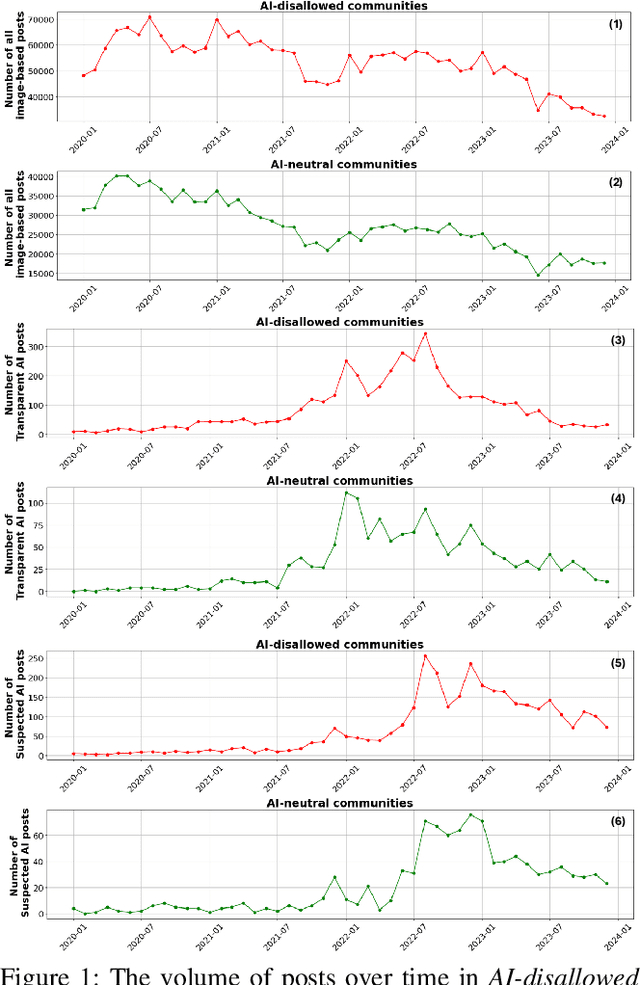

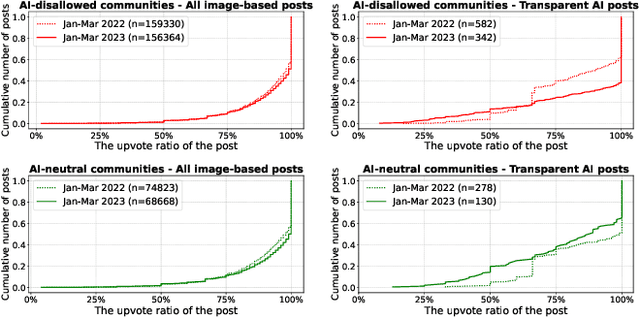

Examining the Prevalence and Dynamics of AI-Generated Media in Art Subreddits

Oct 09, 2024

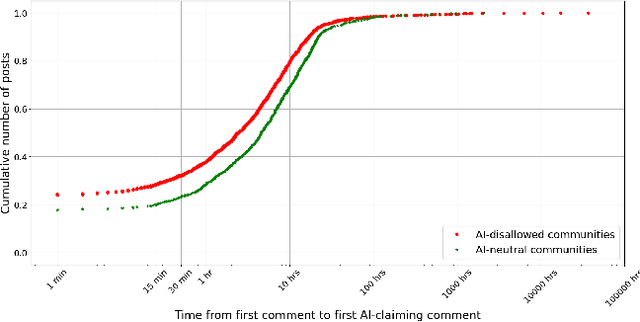

Abstract: Broadly accessible generative AI models like Dall-E have made it possible for anyone to create compelling visual art. In online communities, the introduction of AI-generated content (AIGC) may impact community dynamics by shifting the kinds of content being posted or the responses to content suspected of being generated by AI. We take steps towards examining the potential impact of AIGC on art-related communities on Reddit. We distinguish between communities that disallow AI content and those without a direct policy. We look at image-based posts made to these communities that are transparently created by AI, or comments in these communities that suspect authors of using generative AI. We find that AI posts (and accusations) have played a very small part in these communities through the end of 2023, accounting for fewer than 0.2% of the image-based posts. Even as the absolute number of author-labelled AI posts dwindles over time, accusations of AI use remain more persistent. We show that AI content is more readily used by newcomers and may help increase participation if it aligns with community rules. However, the tone of comments suspecting AI use by others has become more negative over time, especially in communities that do not have explicit rules about AI. Overall, the results show the changing norms and interactions around AIGC in online communities designated for creativity.
