Margaret Cheney

Linear Inverse Problems Using a Generative Compound Gaussian Prior

Jun 16, 2024

Abstract: Since most inverse problems arising in scientific and engineering applications are ill-posed, prior information about the solution space is incorporated, typically through regularization, to establish a well-posed problem with a unique solution. Often, this prior information is an assumed statistical distribution of the desired inverse problem solution. Recently, due to the unprecedented success of generative adversarial networks (GANs), the generative network from a GAN has been implemented as the prior information in imaging inverse problems. In this paper, we devise a novel iterative algorithm to solve inverse problems in imaging where a dual-structured prior is imposed by combining a GAN prior with the compound Gaussian (CG) class of distributions. A rigorous computational theory for the convergence of the proposed iterative algorithm, which is based upon the alternating direction method of multipliers, is established. Furthermore, elaborate empirical results for the proposed iterative algorithm are presented. By jointly exploiting the powerful CG and GAN classes of image priors, we find that, in compressive sensing and tomographic imaging problems, our proposed algorithm outperforms and provides improved generalizability over competitive prior art approaches while avoiding performance saturation issues in previous GAN prior-based methods.

Two-Dimensional Frequency-Difference-of-Arrival Varieties

Mar 25, 2024

Abstract: This paper studies Frequency-Difference-of-Arrival (FDOA) curves for the 2-dimensional, 2-sensor case. The primary focus of this paper is to give a description of curves associated to the FDOA problem from the algebro-geometric point of view. To be more precise, the complex projective picture of the family of FDOA curves for all possible relative velocities is described.

On Generalization Bounds for Deep Compound Gaussian Neural Networks

Feb 20, 2024

Abstract: Algorithm unfolding or unrolling is the technique of constructing a deep neural network (DNN) from an iterative algorithm. Unrolled DNNs often provide better interpretability and superior empirical performance over standard DNNs in signal estimation tasks. An important theoretical question, which has only recently received attention, is the development of generalization error bounds for unrolled DNNs. These bounds deliver theoretical and practical insights into the performance of a DNN on empirical datasets that are distinct from, but sampled from, the probability density generating the DNN training data. In this paper, we develop novel generalization error bounds for a class of unrolled DNNs that are informed by a compound Gaussian prior. These compound Gaussian networks have been shown to outperform comparative standard and unfolded deep neural networks in compressive sensing and tomographic imaging problems. The generalization error bound is formulated by bounding the Rademacher complexity of the class of compound Gaussian network estimates with Dudley's integral. Under realistic conditions, we show that, at worst, the generalization error scales as $\mathcal{O}(n\sqrt{\ln(n)})$ in the signal dimension and $\mathcal{O}((\text{Network Size})^{3/2})$ in network size.
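As a rough illustration of the unrolling idea described in this abstract, the sketch below turns a plain gradient-descent iteration for least squares into a fixed-depth "network" in which each iteration becomes one layer with its own step size. This is not the compound Gaussian network from the paper: the function name `unrolled_gradient_network`, the problem sizes, and the choice of gradient descent as the underlying algorithm are all assumptions made for illustration. In a trained unrolled network the per-layer step sizes would be learned from data; here they are simply fixed.

```python
import numpy as np

def unrolled_gradient_network(y, A, step_sizes):
    """Forward pass of gradient descent on 0.5*||A x - y||^2, unrolled into layers.

    Each entry of step_sizes plays the role of one layer's parameter
    (hypothetical setup; a real unrolled DNN would learn these values).
    """
    x = np.zeros(A.shape[1])
    for eta in step_sizes:                 # one loop pass corresponds to one layer
        x = x - eta * A.T @ (A @ x - y)    # gradient step on the data-fidelity term
    return x

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 10))          # illustrative measurement matrix
x_true = rng.standard_normal(10)
y = A @ x_true                             # noiseless measurements

lipschitz = np.linalg.norm(A, 2) ** 2      # largest eigenvalue of A^T A
step_sizes = np.full(50, 1.0 / lipschitz)  # 50 layers with a safe fixed step
x_hat = unrolled_gradient_network(y, A, step_sizes)
err = np.linalg.norm(x_hat - x_true)
```

The depth of the network equals the number of unrolled iterations, which is one reason the layers of such networks are often described as interpretable.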

Deep Regularized Compound Gaussian Network for Solving Linear Inverse Problems

Nov 28, 2023

Abstract: Incorporating prior information into inverse problems, e.g. via maximum-a-posteriori estimation, is an important technique for facilitating robust inverse problem solutions. In this paper, we devise two novel approaches for linear inverse problems that permit problem-specific statistical prior selections within the compound Gaussian (CG) class of distributions. The CG class subsumes many commonly used priors in signal and image reconstruction methods including those of sparsity-based approaches. The first method developed is an iterative algorithm, called generalized compound Gaussian least squares (G-CG-LS), that minimizes a regularized least squares objective function where the regularization enforces a CG prior. G-CG-LS is then unrolled, or unfolded, to furnish our second method, which is a novel deep regularized (DR) neural network, called DR-CG-Net, that learns the prior information. A detailed computational theory on convergence properties of G-CG-LS and thorough numerical experiments for DR-CG-Net are provided. Due to the comprehensive nature of the CG prior, these experiments show that our unrolled DR-CG-Net outperforms competitive prior art methods in tomographic imaging and compressive sensing, especially in challenging low-training scenarios.
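To make the claim that the CG class subsumes sparsity-type priors more concrete, the sketch below samples a compound Gaussian variable in its usual product form, a positive scale variable multiplied by a Gaussian variable. The specific scale distribution is an assumption chosen for illustration, not taken from the paper: with $z = \sqrt{2w}$ and $w$ exponentially distributed with mean 1, the product $z \cdot u$ with standard normal $u$ follows a unit-scale Laplace distribution, a heavy-tailed law commonly associated with sparsity-promoting regularization. The function name `sample_compound_gaussian` is hypothetical.

```python
import numpy as np

def sample_compound_gaussian(n, rng):
    """Draw n samples x = z * u with u ~ N(0, 1) and a positive scale z.

    Assumed scale law: z = sqrt(2 w), w ~ Exponential(mean 1),
    which makes x Laplace distributed with unit scale.
    """
    w = rng.exponential(scale=1.0, size=n)
    z = np.sqrt(2.0 * w)            # positive scale variable
    u = rng.standard_normal(n)      # Gaussian variable
    return z * u                    # elementwise product

rng = np.random.default_rng(0)
x = sample_compound_gaussian(200_000, rng)

variance = x.var()                                    # Laplace(0, 1) has variance 2
kurtosis = np.mean((x - x.mean()) ** 4) / variance**2 # Gaussian value is 3, Laplace is 6
```

Swapping in a different positive distribution for the scale variable yields a different member of the CG class, which is the sense in which the prior can be selected per problem.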

A Compound Gaussian Network for Solving Linear Inverse Problems

May 19, 2023

Abstract: For solving linear inverse problems, particularly of the type that appear in tomographic imaging and compressive sensing, this paper develops two new approaches. The first approach is an iterative algorithm that minimizes a regularized least squares objective function where the regularization is based on a compound Gaussian prior distribution. The compound Gaussian prior subsumes many of the commonly used priors in image reconstruction, including those of sparsity-based approaches. The developed iterative algorithm gives rise to the paper's second new approach, which is a deep neural network that corresponds to an "unrolling" or "unfolding" of the iterative algorithm. Unrolled deep neural networks have interpretable layers and outperform standard deep learning methods. This paper includes a detailed computational theory that provides insight into the construction and performance of both algorithms. The conclusion is that both algorithms outperform other state-of-the-art approaches to tomographic image formation and compressive sensing, especially in the difficult regime of low training.

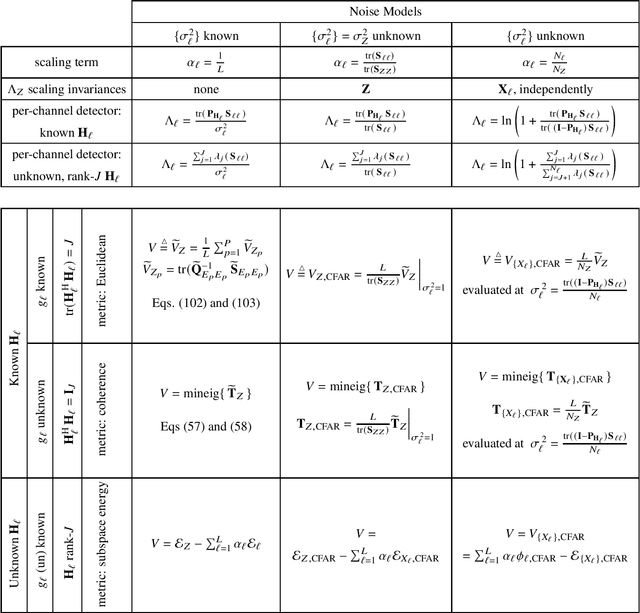

First-Order Statistical Framework for Multi-Channel Passive Detection

Feb 14, 2023

Abstract: In this paper, we establish a general first-order statistical framework for the detection of a common signal impinging on spatially distributed receivers. We consider three types of channel models: 1) the propagation channel is completely known, 2) the propagation is known but channel gains are unknown, and 3) the propagation channel is unknown. For each problem, we address the cases of a) known noise variances, b) common but unknown noise variances, and c) different and unknown noise variances. For all 9 cases, we establish generalized-likelihood-ratio (GLR) detectors, and show that each one can be decomposed into two terms. The first term is a weighted combination of the GLR detectors that arise from considering each channel separately. This result is then modified by a fusion or cross-validation term, which expresses the level of confidence that the single-channel detectors have detected a common source. Of particular note are the constant false-alarm rate (CFAR) detectors that allow for scale-invariant detection in multiple channels with different noise powers.
