Marcos André Gonçalves

CTDGSI: A comprehensive exploitation of instance selection methods for automatic text classification. VII Concurso de Teses, Dissertações e Trabalhos de Graduação em SI -- XXI Simpósio Brasileiro de Sistemas de Informação

Jun 08, 2025Abstract:Progress in Natural Language Processing (NLP) has been dictated by the rule of more: more data, more computing power and more complexity, best exemplified by the Large Language Models. However, training (or fine-tuning) large dense models for specific applications usually requires significant amounts of computing resources. This \textbf{Ph.D. dissertation} focuses on an under-investi\-gated NLP data engineering technique, whose potential is enormous in the current scenario known as Instance Selection (IS). The IS goal is to reduce the training set size by removing noisy or redundant instances while maintaining the effectiveness of the trained models and reducing the training process cost. We provide a comprehensive and scientifically sound comparison of IS methods applied to an essential NLP task -- Automatic Text Classification (ATC), considering several classification solutions and many datasets. Our findings reveal a significant untapped potential for IS solutions. We also propose two novel IS solutions that are noise-oriented and redundancy-aware, specifically designed for large datasets and transformer architectures. Our final solution achieved an average reduction of 41\% in training sets, while maintaining the same levels of effectiveness in all datasets. Importantly, our solutions demonstrated speedup improvements of 1.67x (up to 2.46x), making them scalable for datasets with hundreds of thousands of documents.

A thorough benchmark of automatic text classification: From traditional approaches to large language models

Apr 02, 2025Abstract:Automatic text classification (ATC) has experienced remarkable advancements in the past decade, best exemplified by recent small and large language models (SLMs and LLMs), leveraged by Transformer architectures. Despite recent effectiveness improvements, a comprehensive cost-benefit analysis investigating whether the effectiveness gains of these recent approaches compensate their much higher costs when compared to more traditional text classification approaches such as SVMs and Logistic Regression is still missing in the literature. In this context, this work's main contributions are twofold: (i) we provide a scientifically sound comparative analysis of the cost-benefit of twelve traditional and recent ATC solutions including five open LLMs, and (ii) a large benchmark comprising {22 datasets}, including sentiment analysis and topic classification, with their (train-validation-test) partitions based on folded cross-validation procedures, along with documentation, and code. The release of code, data, and documentation enables the community to replicate experiments and advance the field in a more scientifically sound manner. Our comparative experimental results indicate that LLMs outperform traditional approaches (up to 26%-7.1% on average) and SLMs (up to 4.9%-1.9% on average) in terms of effectiveness. However, LLMs incur significantly higher computational costs due to fine-tuning, being, on average 590x and 8.5x slower than traditional methods and SLMs, respectively. Results suggests the following recommendations: (1) LLMs for applications that require the best possible effectiveness and can afford the costs; (2) traditional methods such as Logistic Regression and SVM for resource-limited applications or those that cannot afford the cost of tuning large LLMs; and (3) SLMs like Roberta for near-optimal effectiveness-efficiency trade-off.

A Strategy to Combine 1stGen Transformers and Open LLMs for Automatic Text Classification

Aug 19, 2024

Abstract:Transformer models have achieved state-of-the-art results, with Large Language Models (LLMs), an evolution of first-generation transformers (1stTR), being considered the cutting edge in several NLP tasks. However, the literature has yet to conclusively demonstrate that LLMs consistently outperform 1stTRs across all NLP tasks. This study compares three 1stTRs (BERT, RoBERTa, and BART) with two open LLMs (Llama 2 and Bloom) across 11 sentiment analysis datasets. The results indicate that open LLMs may moderately outperform or match 1stTRs in 8 out of 11 datasets but only when fine-tuned. Given this substantial cost for only moderate gains, the practical applicability of these models in cost-sensitive scenarios is questionable. In this context, a confidence-based strategy that seamlessly integrates 1stTRs with open LLMs based on prediction certainty is proposed. High-confidence documents are classified by the more cost-effective 1stTRs, while uncertain cases are handled by LLMs in zero-shot or few-shot modes, at a much lower cost than fine-tuned versions. Experiments in sentiment analysis demonstrate that our solution not only outperforms 1stTRs, zero-shot, and few-shot LLMs but also competes closely with fine-tuned LLMs at a fraction of the cost.

A Novel Two-Step Fine-Tuning Pipeline for Cold-Start Active Learning in Text Classification Tasks

Jul 24, 2024

Abstract:This is the first work to investigate the effectiveness of BERT-based contextual embeddings in active learning (AL) tasks on cold-start scenarios, where traditional fine-tuning is infeasible due to the absence of labeled data. Our primary contribution is the proposal of a more robust fine-tuning pipeline - DoTCAL - that diminishes the reliance on labeled data in AL using two steps: (1) fully leveraging unlabeled data through domain adaptation of the embeddings via masked language modeling and (2) further adjusting model weights using labeled data selected by AL. Our evaluation contrasts BERT-based embeddings with other prevalent text representation paradigms, including Bag of Words (BoW), Latent Semantic Indexing (LSI), and FastText, at two critical stages of the AL process: instance selection and classification. Experiments conducted on eight ATC benchmarks with varying AL budgets (number of labeled instances) and number of instances (about 5,000 to 300,000) demonstrate DoTCAL's superior effectiveness, achieving up to a 33% improvement in Macro-F1 while reducing labeling efforts by half compared to the traditional one-step method. We also found that in several tasks, BoW and LSI (due to information aggregation) produce results superior (up to 59% ) to BERT, especially in low-budget scenarios and hard-to-classify tasks, which is quite surprising.

TPDR: A Novel Two-Step Transformer-based Product and Class Description Match and Retrieval Method

Oct 05, 2023Abstract:There is a niche of companies responsible for intermediating the purchase of large batches of varied products for other companies, for which the main challenge is to perform product description standardization, i.e., matching an item described by a client with a product described in a catalog. The problem is complex since the client's product description may be: (1) potentially noisy; (2) short and uninformative (e.g., missing information about model and size); and (3) cross-language. In this paper, we formalize this problem as a ranking task: given an initial client product specification (query), return the most appropriate standardized descriptions (response). In this paper, we propose TPDR, a two-step Transformer-based Product and Class Description Retrieval method that is able to explore the semantic correspondence between IS and SD, by exploiting attention mechanisms and contrastive learning. First, TPDR employs the transformers as two encoders sharing the embedding vector space: one for encoding the IS and another for the SD, in which corresponding pairs (IS, SD) must be close in the vector space. Closeness is further enforced by a contrastive learning mechanism leveraging a specialized loss function. TPDR also exploits a (second) re-ranking step based on syntactic features that are very important for the exact matching (model, dimension) of certain products that may have been neglected by the transformers. To evaluate our proposal, we consider 11 datasets from a real company, covering different application contexts. Our solution was able to retrieve the correct standardized product before the 5th ranking position in 71% of the cases and its correct category in the first position in 80% of the situations. Moreover, the effectiveness gains over purely syntactic or semantic baselines reach up to 3.7 times, solving cases that none of the approaches in isolation can do by themselves.

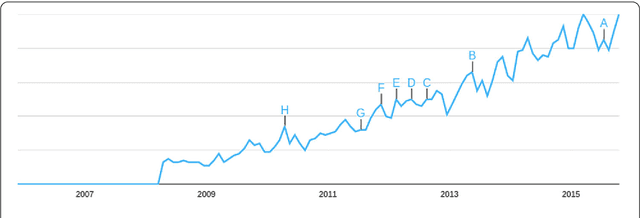

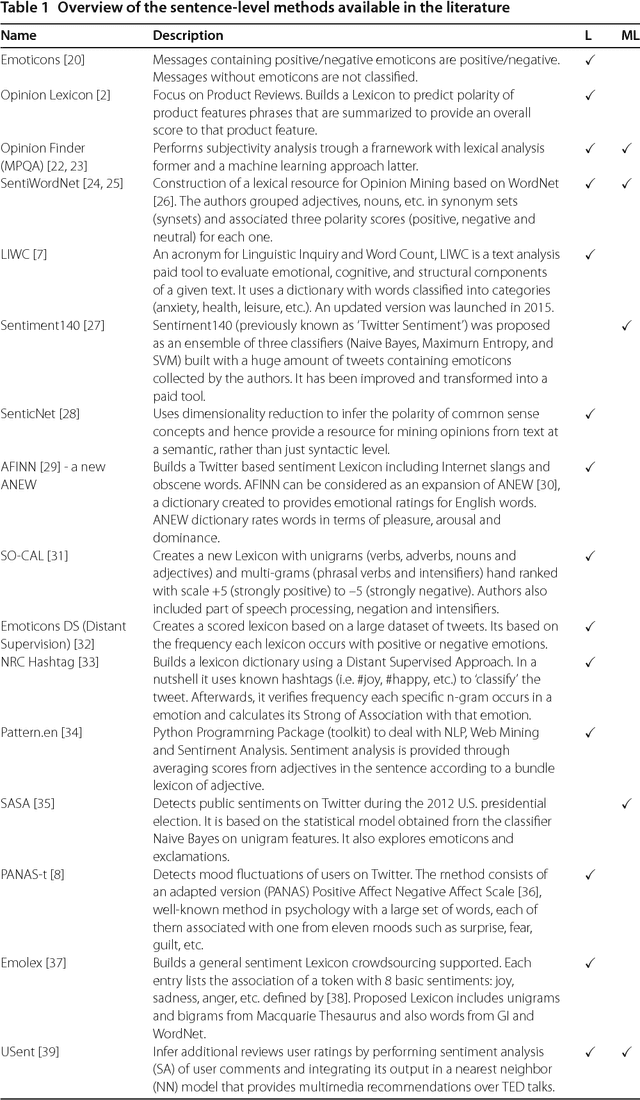

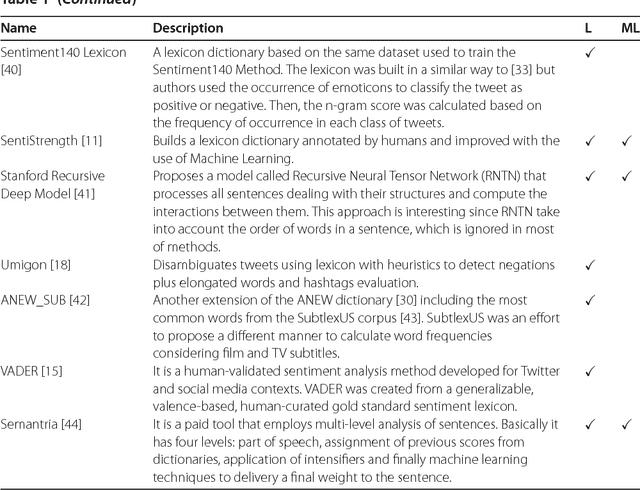

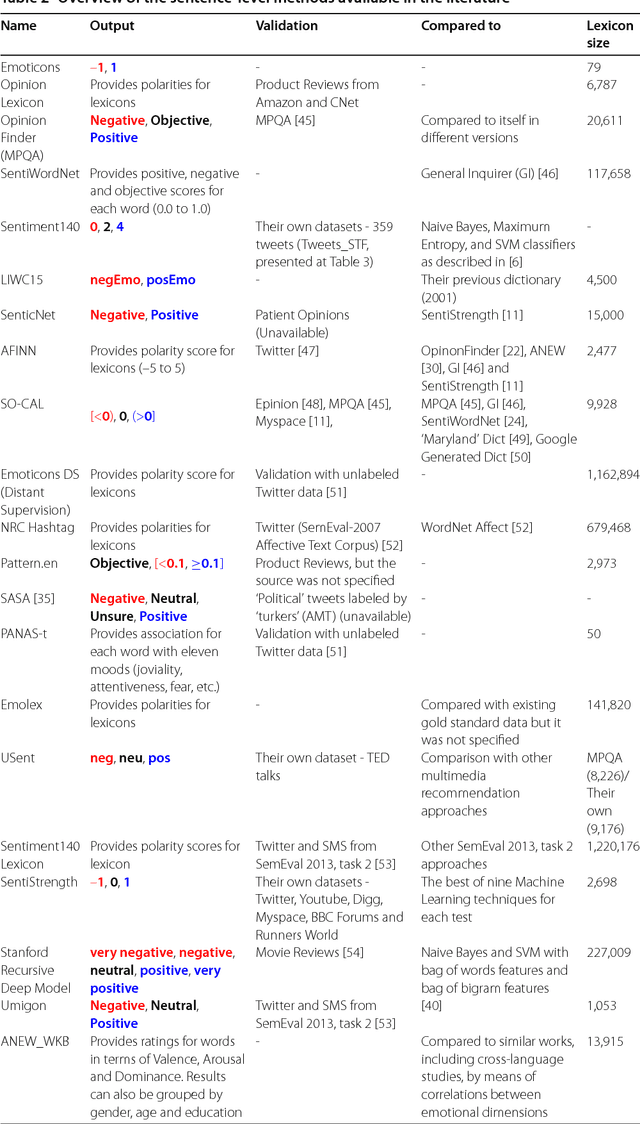

SentiBench - a benchmark comparison of state-of-the-practice sentiment analysis methods

Jul 14, 2016

Abstract:In the last few years thousands of scientific papers have investigated sentiment analysis, several startups that measure opinions on real data have emerged and a number of innovative products related to this theme have been developed. There are multiple methods for measuring sentiments, including lexical-based and supervised machine learning methods. Despite the vast interest on the theme and wide popularity of some methods, it is unclear which one is better for identifying the polarity (i.e., positive or negative) of a message. Accordingly, there is a strong need to conduct a thorough apple-to-apple comparison of sentiment analysis methods, \textit{as they are used in practice}, across multiple datasets originated from different data sources. Such a comparison is key for understanding the potential limitations, advantages, and disadvantages of popular methods. This article aims at filling this gap by presenting a benchmark comparison of twenty-four popular sentiment analysis methods (which we call the state-of-the-practice methods). Our evaluation is based on a benchmark of eighteen labeled datasets, covering messages posted on social networks, movie and product reviews, as well as opinions and comments in news articles. Our results highlight the extent to which the prediction performance of these methods varies considerably across datasets. Aiming at boosting the development of this research area, we open the methods' codes and datasets used in this article, deploying them in a benchmark system, which provides an open API for accessing and comparing sentence-level sentiment analysis methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge