Marcelo Ladeira

Multiobjective Evolutionary Component Effect on Algorithm behavior

Jul 31, 2023

Abstract: The performance of multiobjective evolutionary algorithms (MOEAs) varies across problems, making it hard to develop new algorithms or apply existing ones to new problems. To simplify the development and application of new multiobjective algorithms, there has been increasing interest in their automatic design from their components. These automatically designed metaheuristics can outperform their human-developed counterparts. However, it is still unknown which components are most influential in producing these performance improvements. This study specifies a new methodology to investigate the effects of the final configuration of an automatically designed algorithm. We apply this methodology to a tuned Multiobjective Evolutionary Algorithm based on Decomposition (MOEA/D) designed by the iterated racing (irace) configuration package on constrained problems of 3 groups: (1) analytical real-world problems, (2) analytical artificial problems, and (3) simulated real-world problems. We then compare the impact of the algorithm components in terms of their Search Trajectory Networks (STNs), the diversity of the population, and the anytime hypervolume (HV) values. Looking at the objective space behavior, the MOEAs studied converged before half of the search to generally good HV values in the analytical artificial problems and the analytical real-world problems. For the simulated problems, the HV values are still improving at the end of the run. In terms of decision space behavior, we see a diverse set of STN trajectories in the analytical artificial problems. These trajectories are more similar and frequently reach optimal solutions in the other problems.
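The anytime hypervolume mentioned in this abstract can be illustrated with a minimal sketch. It assumes two objectives, minimization, and a user-chosen reference point; the function names `hypervolume_2d` and `anytime_hypervolume` and the toy snapshots are hypothetical and are not taken from the paper.

```python
def hypervolume_2d(points, ref):
    """Area dominated by `points` and bounded by `ref` (2 objectives, minimization)."""
    # Keep only points that are strictly better than the reference point.
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area = 0.0
    best_f2 = ref[1]
    for f1, f2 in pts:
        # After sorting by f1, a point adds area only if it improves f2.
        if f2 < best_f2:
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area


def anytime_hypervolume(history, ref):
    """Hypervolume of each population snapshot, in the order recorded."""
    return [hypervolume_2d(population, ref) for population in history]


# Toy snapshots of a population at three checkpoints (assumed data).
history = [
    [(3.0, 3.0)],
    [(2.0, 3.0), (3.0, 2.0)],
    [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)],
]
curve = anytime_hypervolume(history, ref=(4.0, 4.0))
print(curve)
```

Plotting such a curve against the number of evaluations shows whether a run has converged early or is still improving at the end of the budget.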

Faster Convergence in Multi-Objective Optimization Algorithms Based on Decomposition

Dec 21, 2021

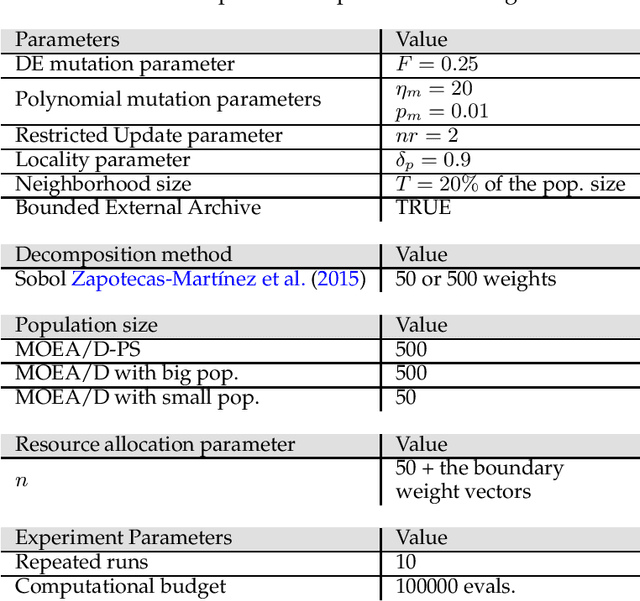

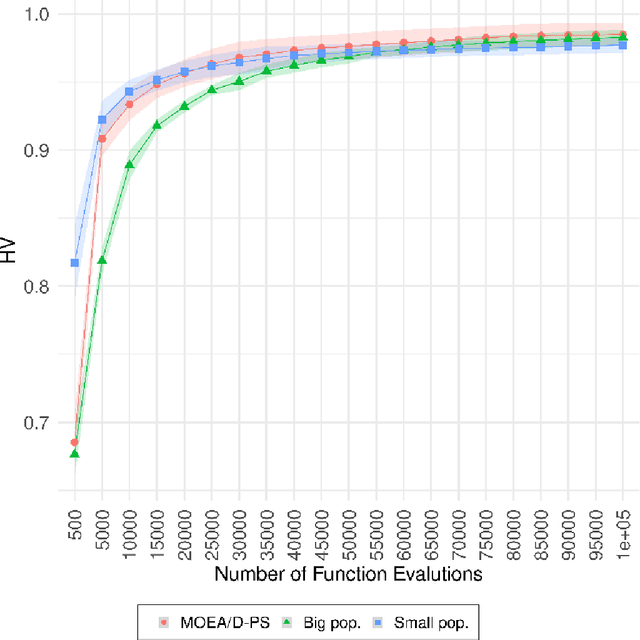

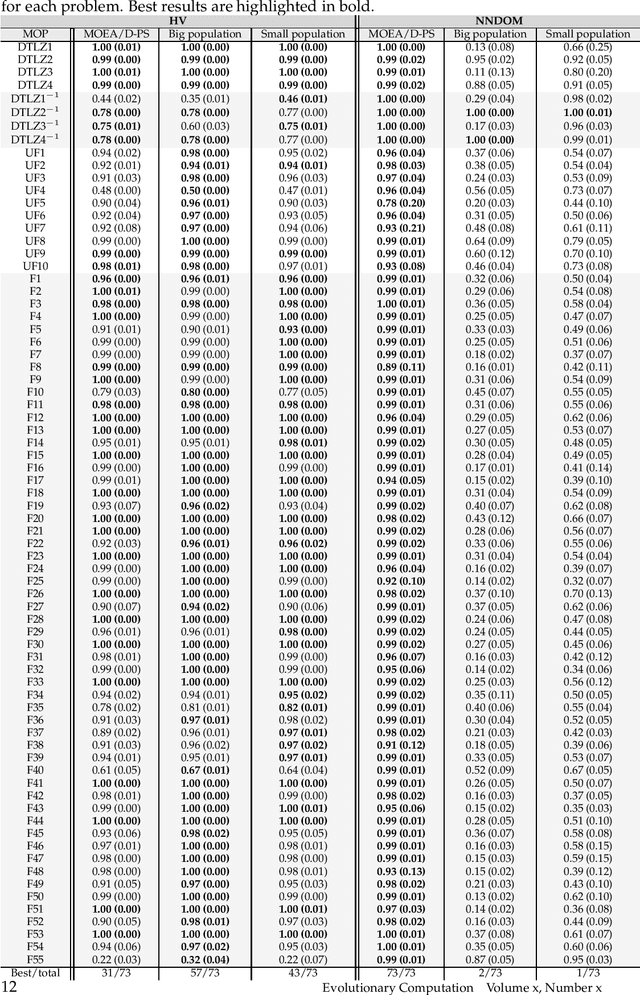

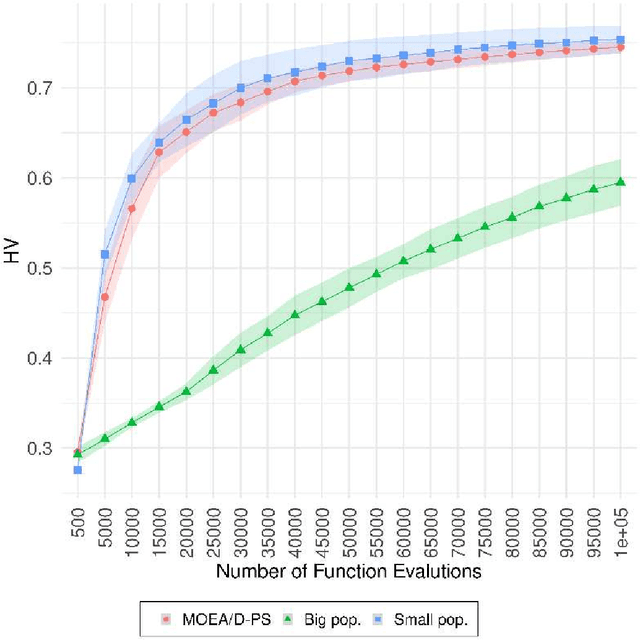

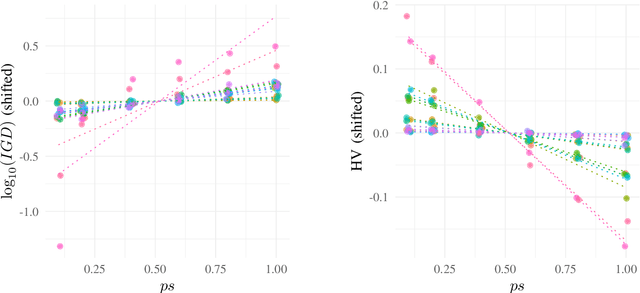

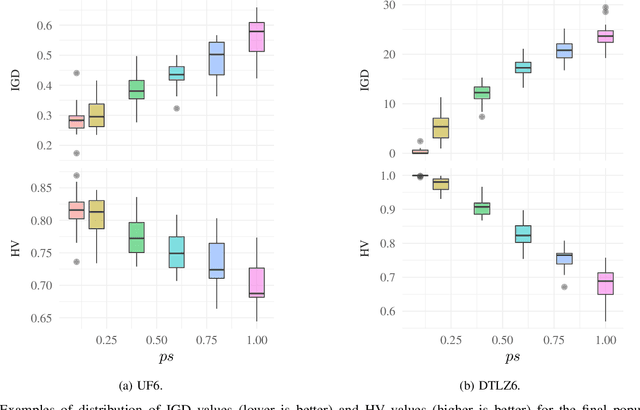

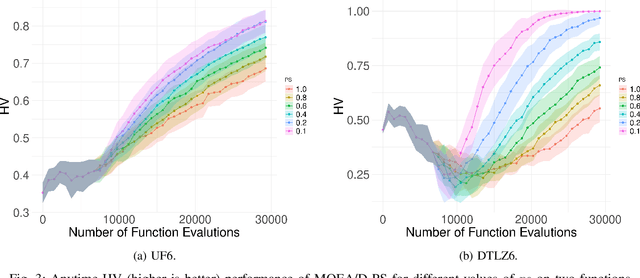

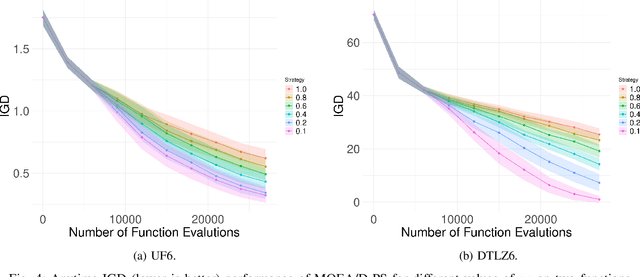

Abstract: The Resource Allocation approach (RA) improves the performance of MOEA/D by maintaining a large population and updating only a few solutions each generation. However, most studies on RA have focused on the properties of different Resource Allocation metrics. Thus, it is still uncertain what the main factors are that lead to performance gains in MOEA/D with RA. This study investigates the effects of MOEA/D with the Partial Update Strategy on an extensive set of MOPs to generate insights into how MOEA/D with Partial Update relates to MOEA/D with a small population size and with a big population size. Our work undertakes an in-depth analysis of the population dynamics, considering the final Pareto set approximations, anytime hypervolume performance, attained regions, and number of unique non-dominated solutions. Our results indicate that MOEA/D with Partial Update progresses with the search as fast as MOEA/D with a small population size and explores the search space like MOEA/D with a big population size. MOEA/D with Partial Update can mitigate common problems related to the choice of population size, with better convergence speed in most MOPs, as indicated by the hypervolume results, the number of unique non-dominated solutions, the anytime performance, and the Empirical Attainment Function.
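One of the indicators listed in this abstract, the number of unique non-dominated solutions, can be sketched as follows. This is an illustrative example assuming minimization of all objectives; the helper names `dominates` and `unique_nondominated` and the sample data are hypothetical.

```python
def dominates(a, b):
    """True if objective vector `a` Pareto-dominates `b` (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def unique_nondominated(points):
    """Sorted list of distinct objective vectors not dominated by any other."""
    unique = set(map(tuple, points))  # drop exact duplicates first
    return sorted(p for p in unique if not any(dominates(q, p) for q in unique))


# Assumed population: one duplicate and one dominated point.
population = [(1, 3), (2, 2), (3, 1), (3, 3), (2, 2)]
front = unique_nondominated(population)
print(len(front), front)
```

The pairwise check is quadratic in the population size, which is acceptable for a sketch but may be slow for very large populations.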

Exploring Constraint Handling Techniques in Real-world Problems on MOEA/D with Limited Budget of Evaluations

Nov 19, 2020

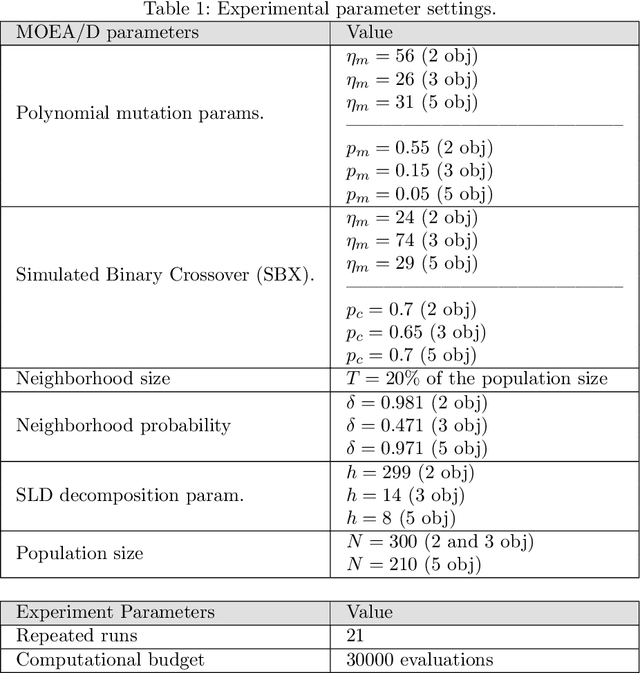

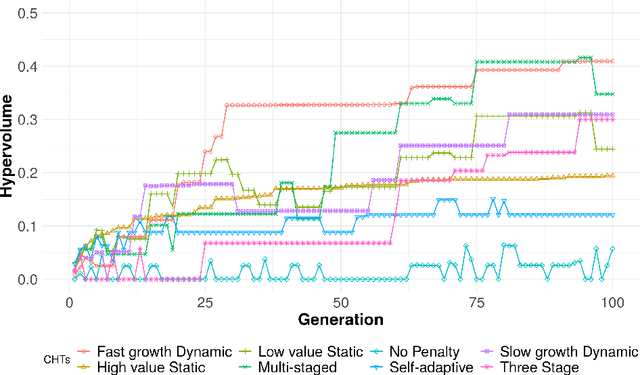

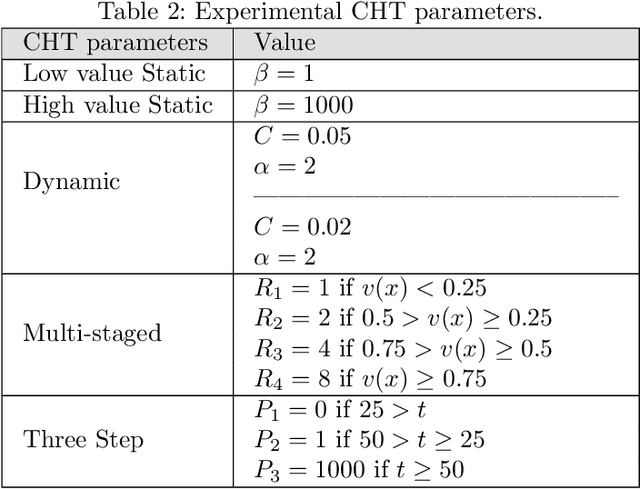

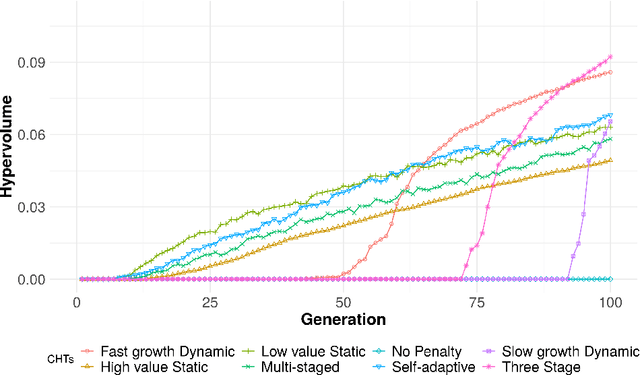

Abstract: Finding good solutions for Multi-objective Optimization Problems (MOPs) is considered a hard problem, especially when considering MOPs with constraints. Thus, most works in the context of MOPs do not explore in depth how different constraints affect the performance of MOP solvers. Here, we focus on exploring the effects of different Constraint Handling Techniques (CHTs) on MOEA/D, a commonly used MOP solver, when solving complex real-world MOPs. Moreover, we introduce a simple and effective CHT focusing on the exploration of the decision space, the Three Stage Penalty. We explore each of these CHTs in MOEA/D on two simulated MOPs and six analytic MOPs (eight in total). The results of this work indicate that while the best CHT is problem-dependent, our newly proposed Three Stage Penalty achieves competitive results and remarkable performance in terms of hypervolume values in the hard simulated car design MOP.

MOEA/D with Random Partial Update Strategy

Jan 20, 2020

Abstract: Recent studies on resource allocation suggest that some subproblems are more important than others in the context of the MOEA/D, and that focusing on the most relevant ones can consistently improve the performance of that algorithm. These studies share the common characteristic of updating only a fraction of the population at any given iteration of the algorithm. In this work we investigate a new, simpler partial update strategy, in which a random subset of solutions is selected at every iteration. The performance of the MOEA/D using this new resource allocation approach is compared experimentally against that of the standard MOEA/D-DE and the MOEA/D with relative improvement-based resource allocation. The results indicate that using the MOEA/D with this new partial update strategy results in improved hypervolume (HV) and inverted generational distance (IGD) values, and a much higher proportion of non-dominated solutions, particularly as the number of updated solutions at every iteration is reduced.
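The selection step described in this abstract, choosing a random subset of solutions at every iteration, might look like the sketch below. It covers only the subset selection, not the full MOEA/D loop; the function name `select_random_subset` and the parameters `population_size` and `n_update` are assumptions made for illustration.

```python
import random


def select_random_subset(population_size, n_update, rng=None):
    """Return sorted indices of the subproblems chosen for update this iteration."""
    if not 0 < n_update <= population_size:
        raise ValueError("n_update must be between 1 and population_size")
    if rng is None:
        rng = random.Random()
    # Sample without replacement so that no subproblem is chosen twice.
    return sorted(rng.sample(range(population_size), n_update))


rng = random.Random(42)  # fixed seed only to make the demo repeatable
for iteration in range(3):
    chosen = select_random_subset(population_size=10, n_update=3, rng=rng)
    # Only the chosen subproblems would generate and evaluate new candidates.
    print(iteration, chosen)
```

Lowering `n_update` relative to `population_size` corresponds to updating a smaller fraction of the population per iteration, the setting in which the abstract reports the largest gains.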

Transfer Learning for Brain Tumor Segmentation

Dec 28, 2019

Abstract: Gliomas are the most common malignant brain tumors that are treated with chemoradiotherapy and surgery. Magnetic Resonance Imaging (MRI) is used by radiotherapists to manually segment brain lesions and to observe their development throughout the therapy. The manual image segmentation process is time-consuming and results tend to vary among different human raters. Therefore, there is a substantial demand for automatic image segmentation algorithms that produce a reliable and accurate segmentation of various brain tissue types. Recent advances in deep learning have led to convolutional neural network architectures that excel at various visual recognition tasks. They have been successfully applied in the medical context, including medical image segmentation. In particular, fully convolutional networks (FCNs) such as the U-Net produce state-of-the-art results in the automatic segmentation of brain tumors. MRI brain scans are volumetric and exist in various co-registered modalities that serve as input channels for these FCN architectures. Training algorithms for brain tumor segmentation on this complex input requires large amounts of computational resources and is prone to overfitting. In this work, we construct FCNs with pretrained convolutional encoders. We show that we can stabilize the training process this way and produce more robust predictions. We evaluate our methods on publicly available data as well as on a privately acquired clinical dataset. We also show that the impact of pretraining is even higher for predictions on the clinical data.

Classification of EEG Signals using Genetic Programming for Feature Construction

Jun 11, 2019

Abstract: The analysis of electroencephalogram (EEG) waves is of critical importance for the diagnosis of sleep disorders, such as sleep apnea and insomnia, as well as seizures, epilepsy, head injuries, dizziness, headaches, and brain tumors. In this context, one important task is the identification of visible structures in the EEG signal, such as sleep spindles and K-complexes. The identification of these structures is usually performed through visual inspection by human experts, a process that can be error-prone and susceptible to biases. Therefore, there is interest in developing technologies for the automated analysis of EEG. In this paper, we propose a new Genetic Programming (GP) framework for feature construction and dimensionality reduction from EEG signals. We use these features to automatically identify spindles and K-complexes on data from the DREAMS project. Using 5 different classifiers, the set of attributes produced by GP obtained better AUC scores than those obtained from PCA or the full set of attributes. Also, the proposed framework achieved a better balance of Specificity and Recall than other models recently proposed in the literature. Analysis of the features most used by GP also suggested improvements for data acquisition protocols in future EEG examinations.
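The AUC score used to compare feature sets in this abstract can be computed from pairwise comparisons of classifier scores. The sketch below is a generic illustration with made-up labels and scores, not the paper's evaluation code; the function name `auc_score` is hypothetical.

```python
def auc_score(labels, scores):
    """Probability that a random positive scores higher than a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    # Count wins of positives over negatives; ties count as half a win.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Assumed toy data: 1 marks a segment containing the target structure.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(auc_score(labels, scores))
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect separation of the two classes.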
